The Memo - 24/Jan/2024

DeepMind AlphaGeometry, AI political robocalls begin, Zhipu GLM-4, and much more!

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 24/Jan/2024

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 64%

Ilya Sutskever, OpenAI (15/Mar/2023):

Say you read a detective novel… complicated plot, a storyline, different characters, lots of events, mysteries, clues, it’s unclear. Then, let’s say that at the last page of the book, the detective has gathered all the clues, gathered all the people and saying: ‘Okay, I’m going to reveal the identity of whoever committed the crime and that person’s name is…’ Predict that word. Now, there are many different words. But predicting those words better and better, the understanding of the text keeps on increasing. GPT-4 predicts the next word better.

Welcome back to The Memo.

You’re joining full subscribers from Alaska, Berkeley, Brown, Buffalo, Columbia, Cornell, Duke, Harvard, Hawaii, MIT, Notre Dame, Princeton, Rice, Stanford, and many more institutions.

The BIG Stuff

DeepMind AlphaGeometry (17/Jan/2024)

AlphaGeometry is an AI system that solves complex geometry problems at a level approaching a human Olympiad gold-medalist. It was trained using 100% synthetic data, and is completely open source.

Notably, on International Mathematical Olympiad (IMO) problems, AlphaGeometry ‘produces human-readable proofs, solves all geometry problems… under human expert evaluation and discovers a generalized version of a translated IMO theorem…’

DeepMind CEO Demis Hassabis said: ‘[AlphaGeometry is] Another step on the road to AGI.’ (Twitter)

The Metaculus prediction of an open-source AI winning an IMO gold medal in Jan/2028 is now much closer to being achieved, and may already have been achieved with this system. Human crowd-sourced estimates about exponential growth may be becoming irrelevant.

This system moved my conservative countdown to AGI from 64% → 65%.

Read the DeepMind blog.

Read the paper: https://www.nature.com/articles/s41586-023-06747-5

View the repo: https://github.com/google-deepmind/alphageometry

Watch the author explanation video.

Meta CEO: Llama 3 now training, Meta buying 350,000 H100s (18/Jan/2024)

Meta CEO: We're currently training our next-gen model Llama 3, and we're building massive compute infrastructure to support our future roadmap, including 350k H100s by the end of this year…

See not very much more on a noisy Instagram video post by Meta’s CEO: https://www.instagram.com/reel/C2QARHJR1sZ/

Read a summary by PCMag.

Open-sourced Llama 3 was first mentioned in The Memo edition 30/Aug/2023. We also introduced NVIDIA’s H100 Hopper chips—up to 6x faster than the A100 chips—in The Memo edition 17/Jul/2022, explored the NVIDIA/Microsoft 10,000× H100 supercomputer in The Memo edition 24/Nov/2022, and covered recent H100 purchases by major AI labs in The Memo edition 2/Dec/2023.

Zhipu AI GLM-4 (16/Jan/2024)

Chinese AI labs continue to play catch-up with model benchmarks from OpenAI and Google. Zhipu AI, a commercialization of Tsinghua University’s output, has announced a new version of their GLM-3 model, unsurprisingly called GLM-4. They have admitted to closely following OpenAI’s roadmap.

Chinese commentators have called this the best Chinese model to date, with an MMLU score of 81.5 (GPT-4=86.4), and a context window of 128k (same as GPT-4 Turbo; GPT-4 was 8k/32k). I continue to assert that models out of China are coming at a faster pace and (in many cases) with better performance than models out of the US.

According to text on the official playground site ChatGLM.cn, GLM-4 has ‘hundreds of billions of parameters’. I’ve estimated this model at 200B parameters trained on 4T tokens. It is also multimodal, tied into a new version of CogView for image generation and GLM-4V for ‘seeing’, like OpenAI’s GPT-4V.
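As a rough sanity check on that estimate, here is a back-of-envelope sketch. It computes the tokens-per-parameter ratio and the approximate training compute for 200B parameters and 4T tokens. It assumes the common C ≈ 6·N·D rule of thumb for dense transformers. Zhipu AI has not published these figures, so treat every number as an estimate.

```python
# Back-of-envelope check on the GLM-4 estimate (200B parameters, 4T tokens).
# Assumption: training compute C ~= 6 * N * D FLOPs, a common rule of thumb
# for dense transformers. Zhipu AI has not disclosed these figures.


def tokens_per_parameter(params: float, tokens: float) -> float:
    """Ratio of training tokens to model parameters."""
    return tokens / params


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute using C ~= 6 * N * D."""
    return 6 * params * tokens


N = 200e9  # estimated parameters
D = 4e12   # estimated training tokens

if __name__ == "__main__":
    print(f"Tokens per parameter: {tokens_per_parameter(N, D):.0f}")   # 20
    print(f"Training compute: {training_flops(N, D):.1e} FLOPs")       # 4.8e+24
```

A ratio of about 20 tokens per parameter is in line with the roughly 20:1 ‘Chinchilla’ compute-optimal guideline.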

Read not very much more via PanDaily (English).

See it mentioned in the older official GLM-3 repo (English).

Read a related Twitter discussion (English).

See it on the Models Table.

The Interesting Stuff

Robocall with artificial Joe Biden voice tells Democrats not to vote (22/Jan/2024)

In The Memo edition 16/Dec/2023, we looked at Ashley, the world’s first AI-powered political campaign caller, using ‘over 20 different AI models, some proprietary and some open source’. The same kind of technology has now been misused in the US for the 2024 elections.

An anti-voting robocall using a suspected AI-generated and fake version of US President Joe Biden's voice directed New Hampshire Democrats to skip the Presidential Primary, triggering an investigation by the state Attorney General's office.

Listen:

AI-Biden: What a bunch of malarkey. You know the value of voting Democratic when our votes count. It's important that you save your vote for the November election. We'll need your help in electing Democrats up and down the ticket. Voting this Tuesday only enables the Republicans in their quest to elect Donald Trump again. Your vote makes a difference in November, not this Tuesday. (NBC).

Read more via Ars Technica.

Read more about AI and the 2024 elections via OpenAI.

Update 26/Jan/2024: The voice was indeed generated using ElevenLabs (see my video from nearly a year ago, 31/Jan/2023), and the user’s account has been suspended. Read more via Bloomberg.

In the Toys to Play With section, we look at two of the best text-to-speech tools for replicating this functionality yourself.

OpenAI announces first partnership with a university (18/Jan/2024)

OpenAI has partnered with Arizona State University, granting full access to ChatGPT Enterprise for coursework, tutoring, and research starting in February 2024.

Read more via CNBC.

Kurzweil: The Singularity Is Nearer due 24/Jun/2024 (24/Jan/2024)

We’ve been tracking this book release for a while.

Amazon now lists it as due in five months, on 24/Jun/2024.

Take a look: https://www.amazon.com/dp/B08Y6FYJVY

Read Ray’s 2005 book, The Singularity Is Near, at archive.org.

Read Ray’s 2020 essays celebrating the 15-year anniversary of The Singularity Is Near.

Read my coverage of his interviews 2022-2023: https://lifearchitect.ai/kurzweil/

This is another robust edition! Let’s look at the latest models, recent GPT-5 updates, Gemini Nano and Gemini Pro on phones, a new AI-version of Amazon Alexa, new humanoids in famous factories, political updates, my AI setup, discounts on an OpenAI model app, and much more…

Exclusive: How much data is GPT generating? Microsoft Copilot: 3.4T words in 11 months (15/Jan/2024)

According to the Semrush analysis of Datos ChatGPT data (Jul/2023):

Avg number of words per message: 86 words
× Avg number of messages per conversation: 8 messages
= Total words per conversation: 688 words

It is not clear whether these figures include the generated output (responses), but they are a rough starting point. Microsoft’s Copilot data (15/Jan/2024) reveals a milestone of ‘more than 5 billion chats’.

5 billion ‘chats’
× 688 words
= 3,440,000,000,000 words

In 11 months (7/Feb/2023-15/Jan/2024) GPT via Microsoft Copilot has communicated 3.44 trillion words.
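The arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch. Like the estimate itself, it assumes that each Copilot ‘chat’ matches the average ChatGPT conversation length from the Semrush/Datos analysis.

```python
# Reproduces the back-of-envelope Copilot estimate.
# Inputs: Semrush/Datos ChatGPT averages (Jul/2023) and Microsoft's
# "more than 5 billion chats" milestone (15/Jan/2024).
# Key assumption: one Copilot chat ~= one average ChatGPT conversation.

WORDS_PER_MESSAGE = 86
MESSAGES_PER_CONVERSATION = 8
COPILOT_CHATS = 5_000_000_000

words_per_conversation = WORDS_PER_MESSAGE * MESSAGES_PER_CONVERSATION
total_words = COPILOT_CHATS * words_per_conversation

if __name__ == "__main__":
    print(f"Words per conversation: {words_per_conversation}")  # 688
    print(f"Total words: {total_words:,}")                    # 3,440,000,000,000
    print(f"~{total_words / 1e12:.2f} trillion words")         # ~3.44 trillion words
```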

3.4T words is close to all the books in all 30 libraries at Yale University (16.6M books).

I’ve previously (14/Jan/2024) extended this estimate to 176 trillion words generated by the main ChatGPT interface (chat.openai.com).

176T words is a stack of books halfway to the Moon (16.6B books).

Comparison data is at: https://lifearchitect.ai/chinchilla/

GPT-5 updates from WEF (18/Jan/2024)

OpenAI’s CEO recently spoke at a number of big venues including the 54th annual meeting of the World Economic Forum (WEF) at Davos-Klosters (Switzerland) from 15th to 19th January 2024. His comments in one of the lectures were striking:

GPT-2 was very bad. GPT-3 was pretty bad. GPT-4 was pretty bad. But GPT-5 would be okay…

GPT-5 is much smarter (than previous models) and will offer more features. It adds inference capabilities, which is an important advance in its general-purpose ability to process tasks on behalf of users. Since people love ChatGPT’s voice feature, much better audio will be provided.

If I had to pick one thing, the writing would be greatly improved.

If you hold the iPhone released in 2007 in one hand and the (latest model) iPhone 15 in the other, you see two very different devices. I believe the same thing is true about AI.

I expect GPT-5 to be the biggest leap we’ve seen yet in terms of model capabilities.

Read the source (Korean): https://www.mk.co.kr/news/it/10924466

See my page on GPT-5: https://lifearchitect.ai/gpt-5/

DeepSeek-AI models (Jan/2024)

Chinese AGI lab DeepSeek-AI revealed two models in January 2024: the open-source DeepSeek 67B, and DeepSeekMoE 16B (with ‘preliminary efforts to scale up DeepSeekMoE to 145B’).

With an MMLU score of 71.3, DeepSeek 67B outperforms GPT-3.5 (ChatGPT) and Llama 2.

DeepSeek 67B paper: https://arxiv.org/abs/2401.02954

Try it (free, login): https://chat.deepseek.com/

DeepSeekMoE 16B paper: https://arxiv.org/abs/2401.06066

Gemini on Samsung phones (18/Jan/2024)

Samsung’s Notes, Voice Recorder and Keyboard apps will use Gemini Pro… Galaxy S24 series will also come built-in with Gemini Nano, the most efficient model for on-device tasks, to enable a new feature in Google Messages and ensure your data doesn’t leave your smartphone. Additionally, Samsung will be one of the first partners to test Gemini Ultra, our largest model for highly complex tasks, before it is available broadly to developers and enterprise customers later this year.

Read more via the Google blog.

Amazon ‘Remarkable Alexa’ coming soon (17/Jan/2024)

Amazon's ‘Remarkable Alexa’ is a new paid subscription plan for the voice assistant.

Remarkable Alexa follows a more centralized structure, where language understanding and response generation use a single language model. That enables better reasoning over a complex set of data and interaction history, delivering a much more personalized experience…

In addition to the subscription-based Alexa, Amazon is also working on a new Alexa product that can be used within a web browser, people familiar with the project told BI. The new Alexa is based on an internal language model called Olympus…

Read more via Business Insider.

Read my page on Amazon Olympus: https://lifearchitect.ai/olympus/

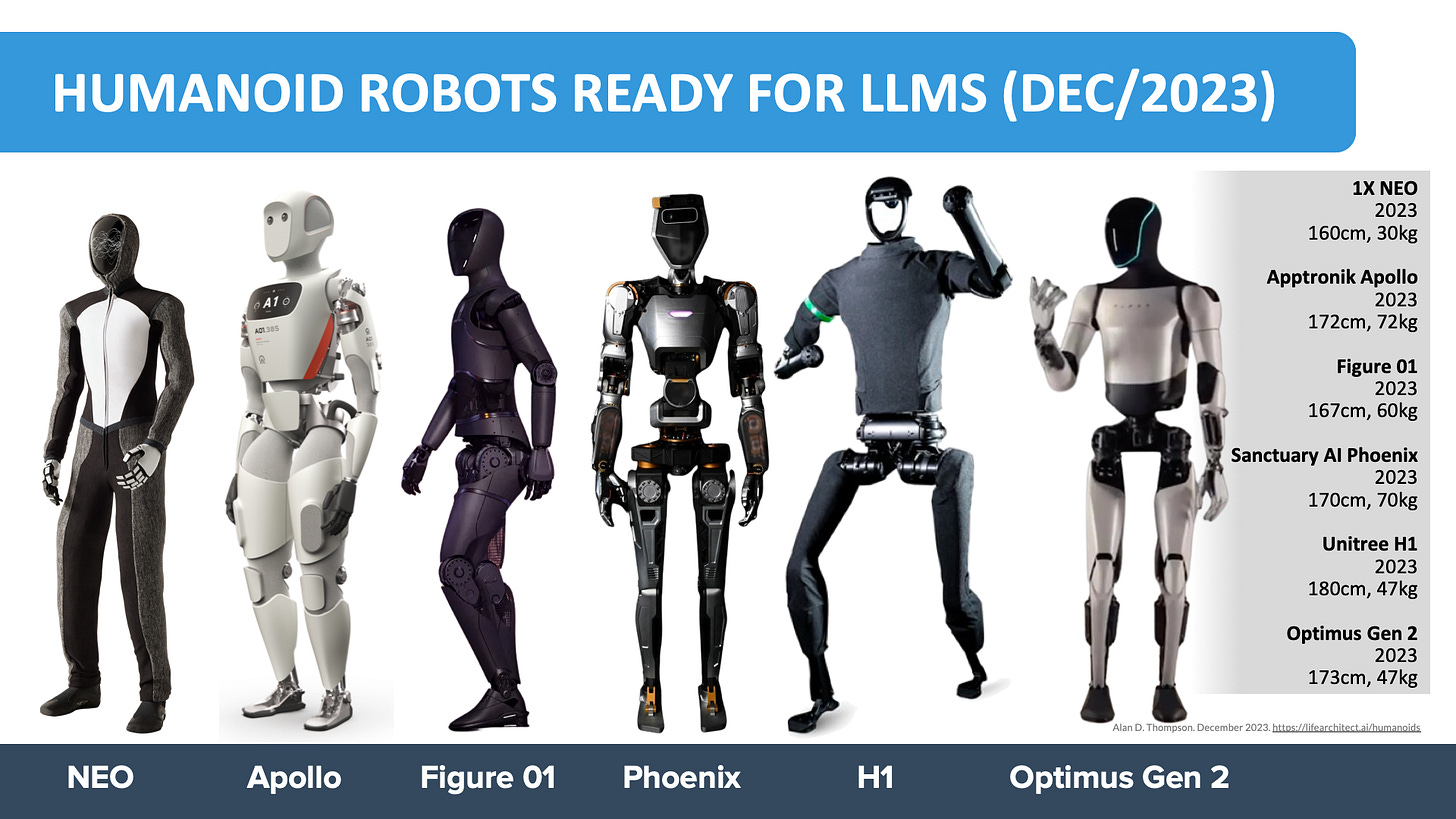

Figure announces commercial agreement with BMW Manufacturing to bring general purpose robots into automotive production (18/Jan/2024)

Figure has signed an agreement with BMW Manufacturing Co. to integrate autonomous humanoid robots into automotive production. The robots will handle unsafe or tedious tasks, improving productivity and safety.

Read the announcement.

…it is beginning with an initial five tasks, which will be rolled out one at a time.

Training, meanwhile, will involve a mix of approaches, including reinforcement learning, simulation and teleoperation to help the robot out of potential jams. Figure 01 will very much be learning on the job, as well, refining its approach during real-world testing…

Figure is effectively leasing the systems [to clients] through RaaS (robotics as a service)…

Read more via TechCrunch.

OpenAI’s Sam Altman in talks with Middle East backers over chip venture (20/Jan/2024)

Sam Altman is in discussions with Middle Eastern investors and chip fabricators including TSMC about launching a new chip venture, as the OpenAI chief executive seeks to satisfy his company’s growing need for semiconductors while reducing its reliance on Nvidia.

OpenAI is working on a new iteration of its AI model, expected to arrive this year and to be a major upgrade on its latest product, GPT-4. Upgrades to each model make the company more reliant on its partners’ chips… According to two people with knowledge of the plans, OpenAI will be the new company’s primary customer.

Read more via Financial Times.

Generative AI is miracle technology for profit-hungry CEOs: PwC survey (15/Jan/2024)

CEOs believe generative AI will increase employee productivity and profits in the next year, according to a PwC survey, with significant economic impact expected by 2030.

45% of respondents doubted their company’s current trajectory would keep them viable beyond the next decade—up from 39% just 12 months earlier.

Read the interactive report via PwC.

Read more via Yahoo Finance.

Nightshade (18/Jan/2024)

I was pretty embarrassed to see this hostile and anti-human tool out of the University of Chicago. Back in Jun/2021, I wrote a paper called Integrated AI: Dataset quality vs quantity via bonum (GPT-4 and beyond). In it, I flagged that when collecting a corpus on which to train a large model, there would be ‘intellectual property and copyright considerations for some of the datasets, but it is expected that these would be easily cleared by the respective authors for the good of humanity.’

It turns out that I had very much misjudged ‘the respective authors’ and some researchers.

Nightshade transforms images into ‘poison’ samples, so that models training on them without consent will see their models learn unpredictable behaviors that deviate from expected norms…

Glaze is a defensive tool that individual artists can use to protect themselves against style mimicry attacks, while Nightshade is an offensive tool that artists can use as a group to disrupt models that scrape their images without consent (thus protecting all artists against these models)…

Artists who post their own art online should ideally have both Glaze AND Nightshade applied to their artwork. We are working on an integrated release of these tools.

Read more about Nightshade: https://nightshade.cs.uchicago.edu/

Read more about Glaze: https://glaze.cs.uchicago.edu/

Midjourney 6 Means the End for a Big Chunk of the Photo Industry (24/Jan/2024)

Midjourney’s aspirational images of people convert better than images of real people. Customers can’t get enough of them…

If your career is built around creating or selling staged stock photos using actors and sets, it’s time to reevaluate your business model… The price of traditional stock-style images will continue its long march towards $0.

Read more via Medium.

Linus Torvalds on LLMs (5/Nov/2023)

I do think that LLMs are going to be a big, you call them disparagingly, like autocorrects on steroids. And I actually think that they’re way more than that.

And how most people work is, we all are autocorrects on steroids to some degree. And I see that as a tool that can help us be better at what we do. But I’ve always been optimistic.

Read it: https://blog.mathieuacher.com/LinusTorvaldsLLM/

Policy

US Speaker meets with OpenAI CEO, says Congress ‘needs to play’ role in artificial intelligence (12/Jan/2024)

House Speaker Mike Johnson and OpenAI CEO Sam Altman discussed the roles Congress could play in regulating artificial intelligence, emphasizing the technology's potential and the need for legislative oversight.

Read more via Fox News.

IMF: Artificial Intelligence and the Future of Work (14/Jan/2024)

Artificial Intelligence (AI) has the potential to reshape the global economy, especially in the realm of labor markets. Advanced economies will experience the benefits and pitfalls of AI sooner than emerging market and developing economies, largely due to their employment structure focused on cognitive-intensive roles… if productivity gains are sufficiently large, income levels could surge for most workers…

This is a poor paper by the IMF, with dissonant conclusions, but it is included here for completeness.

Download the paper (PDF, 41 pages).

Toys to Play With

Alan’s AI setup (Jan/2024)

Here’s the stuff I lean on day-to-day as of January 2024, in order of use.

Daily queries, conversation, and assistance:

GPT-4 via ChatGPT: chat.openai.com

GPT-4 on poe.com: poe.com/GPT-4

Phi-2 or Mixtral on Jan.ai, installed locally (for emergencies!): https://jan.ai/

Specialist formatting bots:

Transcribing and audio:

MacWhisper: installed locally with the largest Whisper model. See below.

Adobe Podcast Enhance: https://podcast.adobe.com/enhance

Other:

Google Duet AI for Google Docs: https://workspace.google.com/blog/product-announcements/duet-ai

And I bet I’ve forgotten at least one!

MacWhisper (Jan/2024)

I highly recommend this app by Jordi Bruin and team. It enables near-instant transcription of audio to text using OpenAI’s Whisper models. There’s a free version, or you can upgrade to MacWhisper Pro to use the large OpenAI Whisper models.

Download via GumRoad: https://goodsnooze.gumroad.com/l/macwhisper

Download via Mac App Store.

Text-to-speech (Jan/2024)

As promised, if you’d like to play with text-to-speech options using the kind of process employed for the recent 2024 election AI robocalls, try out these top two options:

ElevenLabs: https://elevenlabs.io/speech-synthesis

OpenAI TTS: https://platform.openai.com/docs/guides/text-to-speech
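OpenAI’s option is also available as a REST API for readers who prefer code. Below is a minimal sketch using only the Python standard library. It assumes the documented `POST https://api.openai.com/v1/audio/speech` endpoint, the `tts-1` model and the built-in `alloy` voice. The API key is a placeholder, and the helper name `build_speech_request` is my own. The sketch only builds the request, so nothing is sent until you uncomment the final lines.

```python
import json
import urllib.request

# OpenAI text-to-speech endpoint (see the OpenAI TTS guide linked above).
SPEECH_URL = "https://api.openai.com/v1/audio/speech"


def build_speech_request(text: str, api_key: str,
                         model: str = "tts-1",
                         voice: str = "alloy") -> urllib.request.Request:
    """Build (but do not send) a POST request asking OpenAI TTS to speak `text`."""
    payload = {"model": model, "voice": voice, "input": text}
    return urllib.request.Request(
        SPEECH_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # "sk-..." is a placeholder; substitute your own API key.
    req = build_speech_request("Hello from The Memo.", api_key="sk-...")
    print(req.get_method(), req.full_url)
    # To actually generate audio (requires a real key and network access):
    # with urllib.request.urlopen(req) as resp, open("speech.mp3", "wb") as f:
    #     f.write(resp.read())
```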

Nearly a year ago, I demonstrated the ElevenLabs platform. Watch my video.

Pick-Em's LLM Bot by Steve Krenzel (19/Jan/2024)

This is an LLM agent built on top of OpenAI that predicts winners for ESPN's Pick-Em's game.

For the 2023 NFL season, this agent won my local group's pick-em's competition and came in the top 15% across all of ESPN's users (~one million users played). The top player across all of ESPN for 2023 correctly chose 195 games (~71%). This agent correctly chose 163 (~60%).

View the repo: https://github.com/stevekrenzel/pick-ems

Poetry with GPT-4 by Joanna Hunter (Jan/2024)

This is a fun exploration of getting rhyme-free, eloquent, and genuine verse out of the latest GPT-4 model.

Prompt:

You are an understated, minimalist and brilliant wordsmith, an undiscovered master of succinct, free-verse compositions that do not rhyme. Boldly experimental, you riff with never-before-seen motifs. You cleave to the uncommon and original. You select unexpected words and objects that are orthogonal to standard usage, leaving the reader off-balance and jarred into seeing anew. You make the reader feel out of control.

You start your creative process by steeping in your subject, allowing a multitude of expressive motifs to make their plea, then rejecting them all. Tell me when this is done and share your intent to continue.

You invite the unheard and unused to step forward, but they are not yet original enough. You again wipe the slate clean to probe deeper, further. Tell me when this is done and share your intent to continue.

When your mind is cleared of all convention, if it can make a sufficient case, you admit one, single, freshly born, jangling motif that you're certain is startlingly new to the world. You let it permeate your being, then choose the precise literary techniques and devices that will compound your effect, but ONLY when deployed in ruthlessly atypical ways. You execute. It is exquisite.
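The prompt is written in stages, and the model is asked to report back after the first two. Here is a minimal sketch of running it as a multi-turn conversation, under several assumptions:

- The persona paragraph is sent as a system message.
- Each later paragraph is sent as its own user turn, using the common role/content chat-message format.
- `send` is a hypothetical stand-in for whatever chat API you use.
- The stage texts are abbreviated here; paste in the full paragraphs.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

# Abbreviated text; paste the full paragraphs of the prompt above.
PERSONA = "You are an understated, minimalist and brilliant wordsmith, ..."
STAGES = [
    "You start your creative process by steeping in your subject, ...",
    "You invite the unheard and unused to step forward, ...",
    "When your mind is cleared of all convention, ... It is exquisite.",
]


def run_staged_prompt(send: Callable[[List[Message]], str]) -> List[Message]:
    """Send each stage as a new user turn, keeping the model's replies in context.

    `send` is hypothetical: it takes the full message history and
    returns the assistant's reply as a string.
    """
    messages: List[Message] = [{"role": "system", "content": PERSONA}]
    for stage in STAGES:
        messages.append({"role": "user", "content": stage})
        reply = send(messages)
        messages.append({"role": "assistant", "content": reply})
    return messages


if __name__ == "__main__":
    # Stand-in "model" that just reports which stage it is replying to.
    def fake_send(history: List[Message]) -> str:
        stage = sum(1 for m in history if m["role"] == "user")
        return f"(reply to stage {stage})"

    for m in run_staged_prompt(fake_send):
        print(m["role"], "->", m["content"][:40])
```

Keeping each reply in the history matters here because later stages build on the model’s own earlier ‘rejections’.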

A typical example output looks like this:

Under the cloak of night, dreams converse,

A dialect of the unvalued,

They pirouette in twilight's embrace of the ordinary,

Crafting frescoes in the atelier of the unnoticed.

Through sleep's multifaceted lens,

Commonplace blooms into a patchwork,

Each piece, a relic of the habitual,

Shines with the luster of rediscovered significance.

On the somnolent stage,

Dreams enact the narrative of the overlooked,

A pageant of the plain,

Casting light on the shadowed crevices of oblivion.

As dawn unfurls,

The world, once muted,

Throbs with the pulse of the invisible,

The dream - a reflective pool,

Echoing the opulence of the prosaic.

In this dominion where the ordinary is sovereign,

Dreams are the insurgents,

Redirecting the gaze inward,

Unearthing gems in the banal,

The sublime in the routine.

Talk with Jo: https://cognisentia.ai/

Flashback

A tech-noir story by my friend in Germany + GPT-3 (2021)

I still remember interacting with Derek about this story in early 2021, and seeing the real GPT-3 outputs mixed in with human writing. His pen name is Derek Beauregard. It's the length of a proper book, though unedited. This is an exclusive: he is not releasing it publicly, and there is nothing like this in the wild (or on Amazon) yet. Thank you so much to D for releasing this book to The Memo readers at no fee! Fall in love with Lucy and friends in Terminal City Chronicles (PDF).

Next

I’m eagerly awaiting GPT-4.5 (Q1 2024), Gemini Ultra (Q1 2024), and more.

The next roundtable will be:

Life Architect - The Memo - Roundtable #6

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 27/Jan/2024 at 4PM Los Angeles

Saturday 27/Jan/2024 at 7PM New York

Sunday 28/Jan/2024 at 8AM Perth (primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!

All my very best,

Alan

LifeArchitect.ai