The Memo - 14/Jan/2024

First models for 2024, MosaicML scaling laws, Kepler K1, and much more!

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 14/Jan/2024

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 64%

Welcome back to The Memo.

You’re joining full subscribers from Alibaba, BAAI, Baidu, Huawei, Tencent, Tsinghua University, and more…

What a spectacular start to 2024! Here’s an interesting thought: If the current pace of AI development were to stop right now, we would still have enough current model capabilities to discover, and new technology to unpack, to power humanity for generations. From the releases of frontier models like GPT-4 and Gemini to open source models like Llama 2 and Mixtral 8x7B, last year gave us tremendous coverage, and it will take us some time to sift through the possibilities of each model. Sidenote: I’m still seeing recent analysis of 2019 GPT-2’s capabilities! (1, 2, 3).

The early winner of The Who Moved My Cheese? AI Awards! for January 2024 is George Carlin’s daughter (‘No machine will ever replace [my Dad’s] genius. These AI generated products are clever attempts at trying to recreate a mind that will never exist again.’).

Not closely related to AI, the Vulcan rocket was successfully launched from Florida on 8/Jan/2024. Along with the ashes and DNA of Arthur C. Clarke (ABC, 8/Jan/2024), the rocket and lander contain a pop-sci version of my dissertation on human intelligence and intuition. The final moon landing seems unlikely now (official updates by Astrobotic), so perhaps it will just zoom through space for a while…

The BIG Stuff

Exclusive: First models for 2024 (Jan/2024)

The first large language models for 2024 start with JPMorgan DocLLM (7B, paper), an LLM focused on the spatial layout structure of documents. SUTD TinyLlama (1.1B, paper), out of Singapore, finally finished training after its Sep/2023 start. This model was deliberately overtrained using 2,727 tokens per parameter (see my explanation of Chinchilla data-optimal scaling, and Mosaic scaling later in this edition). The dataset was 1T tokens, run for 3 epochs to 3T total tokens seen. Tencent LLaMA Pro (8.3B, paper) presented expanded blocks, with fine-tuning (actually ‘a new post-pretraining method’) on 80B tokens using code and math data.
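For anyone who wants to check the TinyLlama ratio, here is a minimal sketch of the arithmetic. The function and variable names are my own for illustration; the inputs are the figures quoted above, and the 20:1 Chinchilla ratio is the rule of thumb discussed later in this edition.

```python
def tokens_per_parameter(tokens: float, parameters: float) -> float:
    """Return the ratio of training tokens seen to model parameters."""
    return tokens / parameters


# TinyLlama figures from the paragraph above: 1.1B parameters and 3T tokens seen
# (a 1T-token dataset repeated for 3 epochs).
tinyllama_ratio = tokens_per_parameter(tokens=3e12, parameters=1.1e9)

# Chinchilla's data-optimal rule of thumb is roughly 20 tokens per parameter.
CHINCHILLA_RATIO = 20
overtraining_factor = tinyllama_ratio / CHINCHILLA_RATIO

print(f"TinyLlama: {tinyllama_ratio:,.0f} tokens per parameter")
print(f"About {overtraining_factor:.0f}x the Chinchilla ratio")
```

The ratio counts tokens seen, not unique tokens, so the three epochs over the same 1T dataset are included.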

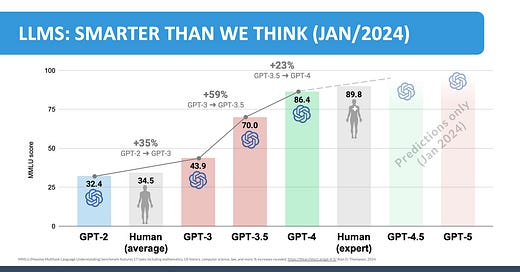

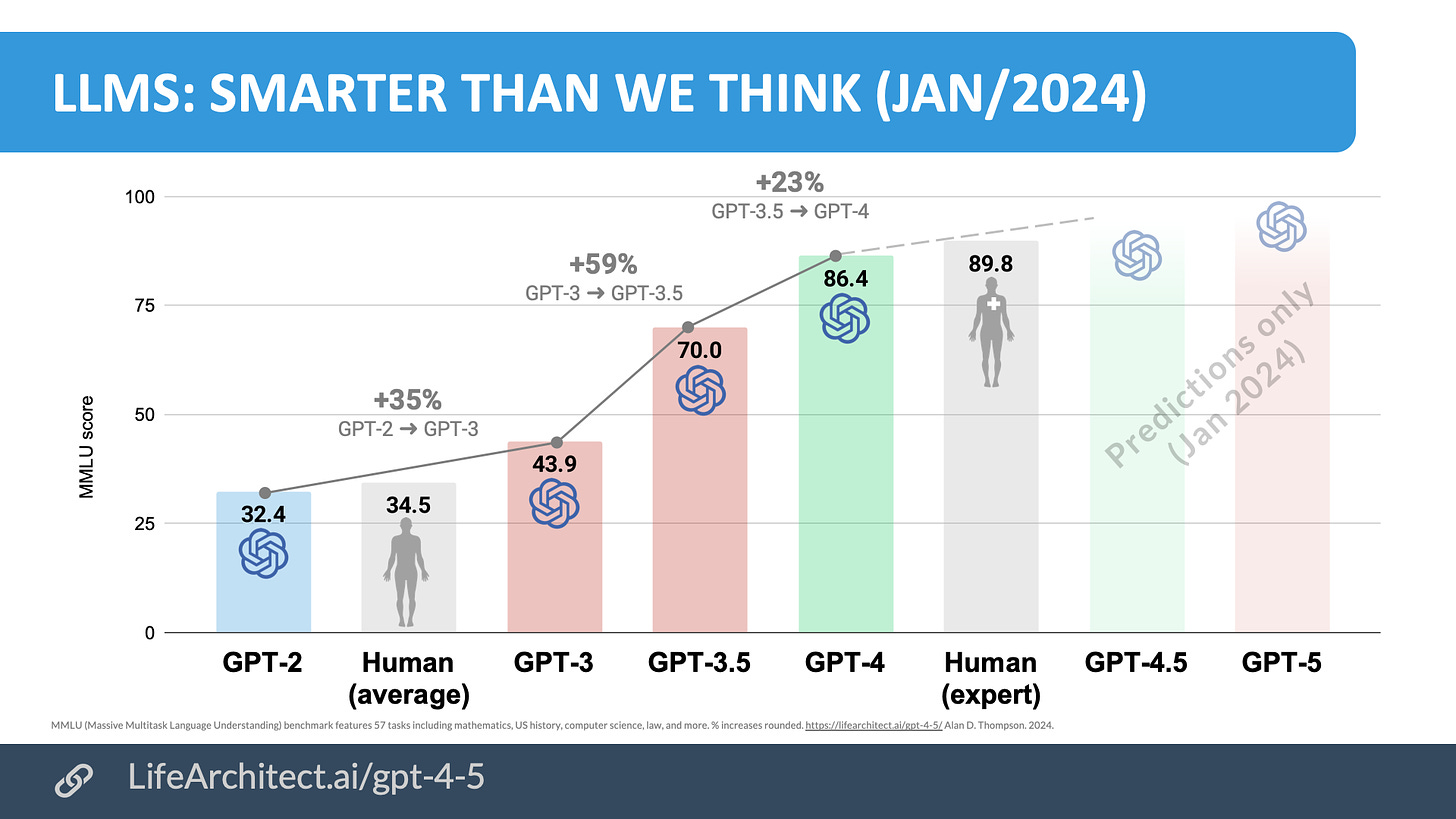

Exclusive: Counting down to the release of GPT-4.5 (11/Jan/2024)

We’re counting down to the release of OpenAI’s GPT-4.5 model. Will it be some time in the second half of January 2024? Rumors are scarce, though I’m hoping to see another increase in intelligence, as measured by MMLU score. The time between each model release can be months or years, but each successive model has boasted a significant performance increase across this wide benchmark.

Read more or download viz: https://lifearchitect.ai/gpt-4-5/

My video version of this viz: https://youtu.be/AdEfU1B4IFk

The Interesting Stuff

OpenAI quietly deletes ban on using ChatGPT for ‘Military and Warfare’ (12/Jan/2024)

OpenAI has removed a prohibition on using its AI technology for military purposes from its usage policy, raising questions about potential military applications and the enforcement of ethical guidelines.

OpenAI appears to be silently weakening its stance against doing business with militaries. “I could imagine that the shift away from ‘military and warfare’ to ‘weapons’ leaves open a space for OpenAI to support operational infrastructures as long as the application doesn’t directly involve weapons development...”

Read more: https://theintercept.com/2024/01/12/open-ai-military-ban-chatgpt/

In The Memo edition 30/Apr/2023 we explored major defense contractors like Palantir using large language models for military applications. The models shown in their AIP military platform include:

EleutherAI GPT-J 6B.

Google FLAN-T5 XL 3B.

EleutherAI GPT-NeoX-20B.

Databricks Dolly 2.0 12B.

If you’re interested in this space, check out The Memo edition 30/Apr/2023 for Palantir AIP (watch my archived video on AIP from Apr/2023), and The Memo edition 9/Jul/2023 for coverage of Scale Donovan.

AI-generated book wins first literary award (16/Oct/2023)

This achievement is from a few months ago, but I’ve finally uncovered a copy of the original work in Chinese.

Land of Memories (Chinese: 机忆之地) is a Chinese science-fiction novel by Shen Yang (沈阳), a professor at Tsinghua University’s School of Journalism and Communication. (wiki)

The model used by Prof Shen Yang is unclear. I don’t think it was Tsinghua’s own GLM-130B model, because that doesn’t do images (HF). It couldn’t have been Baidu’s ERNIE 4.0 1T model, because that hadn’t been released, so he may have relied on 360’s Zhinao 100B (link, Chinese) or similar.

It is reported that this is the first time in the history of literature and the first time in the history of AI that AIGC [AI-generated content] works have participated in the competition together with humans and won awards.

Read the book (Chinese, 126 pages) via QQ.

Read the SCMP article source.

See my list of books by AI ending Mar/2022: https://lifearchitect.ai/books-by-ai/

Full subscribers have access to the first books written by GPT-3 and GPT-4.

MosaicML: Beyond Chinchilla-Optimal: Accounting for Inference in Language Model Scaling Laws (31/Dec/2023)

Training is a once-off process where an AI model is fed with a lot of data to recognize patterns and make connections. Large models use a lot of compute during training over many months, but they only have to do so once.

Inference occurs after the model has finished training, every time a user asks the AI model a question (prompt) to produce an output (response).

For inference, popular models might generate trillions and soon quadrillions of words for millions of users over the model’s lifetime. GPT-3 was generating 4.5 billion words per day back in Mar/2021, and then in Dec/2022 I estimated ChatGPT’s output to be around [calcs corrected 16/Jan/2024]:

310 million words per minute;

446.4 billion words per day;

176 trillion words in the 13 months since launch;

using a lot of compute at all times.
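The figures in the list above follow from the per-minute estimate. Here is a rough sketch of the arithmetic; the 395-day span is my assumption for ‘13 months’ (about 30.4 days per month), and the constant names are illustrative only.

```python
WORDS_PER_MINUTE = 310e6   # per-minute estimate quoted above
MINUTES_PER_DAY = 60 * 24
DAYS_SINCE_LAUNCH = 395    # assumption: 13 months at roughly 30.4 days each

words_per_day = WORDS_PER_MINUTE * MINUTES_PER_DAY
words_since_launch = words_per_day * DAYS_SINCE_LAUNCH

print(f"{words_per_day / 1e9:.1f} billion words per day")
print(f"{words_since_launch / 1e12:.0f} trillion words since launch")
```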

This month MosaicML released a paper proposing a new scaling law:

Accounting for both training and inference, how does one minimize the cost required to produce and serve a high quality model?

We conduct our analysis both in terms of a compute budget and real-world costs and find that LLM researchers expecting reasonably large inference demand (~1B requests) should train models smaller and longer than Chinchilla-optimal.

With a fixed compute budget—and for no real-world users because it was locked in a lab!—Chinchilla would train a 70B model for 1.4T tokens (20:1) and also lists a 70B model for 4.26T tokens (61:1).

Mosaic’s proposal would train a popular/inference-intensive 41.6B model for 7,920B tokens (190:1).
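To compare the three configurations above, here is a minimal sketch that computes tokens per parameter and approximate compute. It uses two widely used rules of thumb: about 6 FLOPs per parameter per training token, and about 2 FLOPs per parameter per inference token. The class name, the field names, and the lifetime inference demand are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class TrainingRun:
    name: str
    parameters: float  # N, model parameters
    tokens: float      # D, training tokens seen

    @property
    def tokens_per_parameter(self) -> float:
        return self.tokens / self.parameters

    @property
    def training_flops(self) -> float:
        # Rule of thumb: about 6 FLOPs per parameter per training token.
        return 6 * self.parameters * self.tokens

    def inference_flops(self, inference_tokens: float) -> float:
        # Rule of thumb: about 2 FLOPs per parameter per inference token.
        return 2 * self.parameters * inference_tokens


runs = [
    TrainingRun("Chinchilla 20:1", 70e9, 1.4e12),
    TrainingRun("Chinchilla 61:1", 70e9, 4.26e12),
    TrainingRun("Mosaic 190:1", 41.6e9, 7.92e12),
]

# Hypothetical lifetime inference demand; not a figure from the paper.
LIFETIME_INFERENCE_TOKENS = 1e13

for run in runs:
    serve = run.inference_flops(LIFETIME_INFERENCE_TOKENS)
    print(
        f"{run.name}: {run.tokens_per_parameter:.0f} tokens/param, "
        f"train {run.training_flops:.2e} FLOPs, serve {serve:.2e} FLOPs"
    )
```

The smaller model costs less for every token served, which is the lever Mosaic is pulling. The three rows are not necessarily matched for model quality, so the totals should not be read as a like-for-like comparison.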

At its most basic, Mosaic is attempting to minimize the cost of serving a model. While we can be certain that OpenAI trained, optimized, and deployed the original ChatGPT (gpt-3.5-turbo) 20B model as efficiently as possible to serve more than 200 million people (most of them free users), the world was not yet accustomed to AI copilots, let alone paying for AI inference.

Consider the major frontier models, and the pricing for inference of one million tokens. This is around 750,000 words, which is about how much we speak every 46 days (2007), or most of the seven Harry Potter books (2017):

Evolutions of the original Kaplan (GPT-3) and Chinchilla scaling laws are expected and welcomed. I’m interested to see how this significant increase in training data—an unspoken conclusion of the Mosaic paper—will develop in the real world.

Read the paper: https://arxiv.org/abs/2401.00448

The largest datasets are listed in my 2023 AI report: https://lifearchitect.ai/the-sky-is-comforting/

Read my ‘plain English’ analysis of scaling laws: https://lifearchitect.ai/chinchilla/

ARK updates their AGI prediction based on Metaculus data (3/Jan/2024)

Artificial general intelligence (AGI) is a machine capable of understanding the world as well as—or better than—any human, in practically every field, including the ability to interact with the world via physical embodiment.

ARK Invest used crowd-sourced data from Metaculus (source with AGI definitions) to visualize AGI date predictions (as of 3/Jan/2024).

The two forecast AGI dates presented are:

~Dec/2026 (if Metaculus forecast error continues).

Sep/2031 (if Metaculus forecast is now well-tuned).

Source: https://twitter.com/wintonARK/status/1742979090725101983

Note that my conservative countdown to AGI remains at 64%, with one of the prediction charts now pointing to Jan/2025.

Read more: https://lifearchitect.ai/agi/

Volkswagen integrates ChatGPT into its vehicles (8/Jan/2024)

Volkswagen announced at CES 2024 the integration of ChatGPT into its vehicles including the 2024 Tiguan, Passat, and Golf.

Volkswagen will be the first volume manufacturer to offer ChatGPT as a standard feature from the second quarter of 2024 in many production vehicles…

Enabled by Cerence Chat Pro, the integration of ChatGPT into the backend of the Volkswagen voice assistant… can be used to control the infotainment, navigation, and air conditioning, or to answer general knowledge questions… enriching conversations, clearing up questions, interacting in intuitive language, receiving vehicle-specific information, and much more.

Read more via Volkswagen Newsroom.

New version of Siri with generative AI again rumored for WWDC (4/Jan/2024)

Apple is rumored to unveil a new Siri with generative AI at WWDC [June 2024], featuring natural conversation and increased personalization across devices.

Apple has recently made progress with integrating generative AI into Siri using its Ajax-based model…

The new features are believed to be available across devices, suggesting that the new version of Siri will retain conversation information from one device to another. It is also said to feature a new "Apple-specific creational service," which might relate to the previously reported Siri-based Shortcuts capabilities rumored for iOS 18. Apple is purportedly working on linkages for the new version of Siri to connect to various external services, likely via an API.

Read more via MacRumors.

See Apple’s Ajax GPT on the Models Table: https://lifearchitect.ai/models-table/

This is another very long edition. Let’s look at a lot more AI, eight new robot pieces including the new Boston Dynamics Spot clone now available on Amazon for $2,499, US gov buying ChatGPT licenses, and bleeding-edge toys like the new Midjourney styles, a new AI-generated film, and two new chat platforms.

Deloitte rolling out AI copilot to 75,000 of its employees (8/Jan/2024)

Developed by Deloitte’s AI Institute, PairD is an internal Generative AI platform designed to help staff with business tasks.

Read release via Deloitte UK.

Deloitte said its “PairD” tool can be used by staff to answer emails, draft written content, write code to automate tasks, create presentations, carry out research and create meeting agendas.

Read more via FT.

US state gov buys enterprise ChatGPT licenses for pilot (9/Jan/2024)

The state of Pennsylvania (USA) has initiated a pilot program in collaboration with OpenAI, providing 50 ChatGPT Enterprise licenses to the Office of Administration, with plans for a broader roll-out and additional licenses for employees.

The ChatGPT Enterprise pilot will begin in January 2024 and is initially limited to OA [Office of Administration] employees who will use the tool for tasks such as creating and editing copy, making outdated policy language more accessible, drafting job descriptions to help with recruitment and hiring, addressing duplication and conflicting guidance within hundreds of thousands of pages of employee policy, helping employees generate code, and more.

Read more via official release by Governor Josh Shapiro.

Read explanations via StateScoop and The Verge.

DeepMind: Shaping the future of advanced robotics (4/Jan/2024)

Let’s talk about embodiment for quite a while! Here we go…

Today we’re announcing a suite of advances in robotics research that bring us a step closer to this future… to help robots make decisions faster, and better understand and navigate their environments.

[AutoRT provides] its LLM-based decision-maker with a Robot Constitution - a set of safety-focused prompts to abide by when selecting tasks for the robots.

These rules are in part inspired by Isaac Asimov’s Three Laws of Robotics – first and foremost that a robot “may not injure a human being”. Further safety rules require that no robot attempts tasks involving humans, animals, sharp objects or electrical appliances.

Read more via DeepMind.

Isaac Asimov was a former Vice President of Mensa International, and his three laws of robotics were introduced in 1942 (wiki), a few short years before Turing gave us the concept of AI:

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The Zeroth law (added later): A robot may not injure humanity or, through inaction, allow humanity to come to harm.

I referenced these laws in my AI alignment viz: https://lifearchitect.ai/alignment/

Boston Dynamics Spot clone now available on Amazon US for $2,499 (Jan/2024)

The wonderful toy for beginners and experienced robot enthusiasts alike! With basic gaits like walking and running, the Go2 Air model is great for beginners, while the Go2 Pro offers more advanced gaits for a greater challenge.

The use of GPT requires an additional fee. 4G modules are temporarily unavailable in North America due to legal and regulatory issues.

Check it out: https://www.amazon.com/Unitree-Quadruped-Robotics-Adults-Embodied/dp/B07TZ3YC3N/

Humanoid robots are getting to work (30/Dec/2023)

Humanoid robots, like Agility Robotics’ Digit, are transitioning from research projects to commercial pilot projects, aiming to take on repetitive and physically demanding tasks within the logistics industry.

Read more via IEEE Spectrum.

1X raises another $100M for the race to humanoid robots (11/Jan/2024)

We’ve been talking about this company for a while, at least since The Memo edition 3/Apr/2023 when they secured investment of $25M led by OpenAI.

Now 1X, a Norwegian robotics firm supported by OpenAI, secures another US$100 million in Series B funding to advance its humanoid robot NEO, aiming to address global labor shortages.

Read more via Yahoo Finance.

1X EVE serving coffee in Oslo (8/Jan/2024)

See more: https://twitter.com/1x_tech/status/1744295554278940994

Read more about EVE: https://www.1x.tech/androids/eve

See more humanoid robots: https://lifearchitect.ai/humanoids/

Spot at AB InBev Belgium | Boston Dynamics (11/Jan/2024)

Here’s the latest from Boston Dynamics’ Spot at work in an automated beverage factory.

Watch the video (link):

Kepler Forerunner K1 (Jan/2024)

The Forerunner series of general-purpose humanoid robots is independently developed by Kepler Exploration Robotics and will be commercialized and mass-produced in near future. It features a highly bionic humanoid structure and motion control, with up to 40 degrees of freedom in the whole body. It serves as a valuable assistant in industrial production and a reliable partner for humanity.

Capable of replacing humans in high risk tasks, such as bomb disposal, radiation protection, high-temperature, emergency response, and more, effectively eliminate potential safety risk for human.

Read more: https://www.gotokepler.com/productDetail?id=2

Watch the video (link):

World's first fully AI powered restaurant to open in California (18/Dec/2023)

CaliExpress in Pasadena (California, USA) will feature robots taking orders and making burgers and fries. It is set to open by the end of the year.

Read more via CBS.

Watch the video (link):

Meta: ‘The most advanced thing we’ve ever produced as a species’ is their AR glasses prototype (3/Jan/2024)

In an interview, Meta's CTO Andrew Bosworth claimed that their AR glasses prototype could be the most sophisticated consumer electronics ever made, with plans to reveal it in 2024, despite high manufacturing costs.

Read more via MIXED Reality News.

Steam allows AI gaming content (10/Jan/2024)

Today, after spending the last few months learning more about this space and talking with game developers, we are making changes to how we handle games that use AI technology. This will enable us to release the vast majority of games that use it…

Pre-Generated: Any kind of content (art/code/sound/etc) created with the help of AI tools during development. Under the Steam Distribution Agreement, you promise Valve that your game will not include illegal or infringing content, and that your game will be consistent with your marketing materials. In our pre-release review, we will evaluate the output of AI generated content in your game the same way we evaluate all non-AI content - including a check that your game meets those promises.

Live-Generated: Any kind of content created with the help of AI tools while the game is running. In addition to following the same rules as Pre-Generated AI content, this comes with an additional requirement: in the Content Survey, you'll need to tell us what kind of guardrails you're putting on your AI to ensure it's not generating illegal content.

Read the announcement by Steam.

Announcing NVIDIA NeMo Parakeet ASR models for pushing the boundaries of speech recognition (3/Jan/2024)

NVIDIA's NeMo introduces Parakeet ASR models, developed with Suno.ai, offering state-of-the-art automatic speech recognition with exceptional accuracy and robustness in various audio environments.

Read more via NVIDIA.

Nvidia’s H100 GPUs will consume more power than some countries (26/Dec/2023)

Nvidia's H100 GPUs are set to consume more power than some small countries, with each unit drawing 700W and sales projected to reach 3.5 million in the coming year. That means that Nvidia's H100 GPUs will consume more power than all of the households in Phoenix, Arizona, by the end of 2024 when millions of these GPUs are deployed.

This is a very strange metric by Paul Churnock, the Principal Electrical Engineer of Datacenter Technical Governance and Strategy at Microsoft. I found it interesting enough to include here!
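The headline numbers are easy to sanity-check. Here is a rough sketch using the unit count and per-unit draw quoted above; the 60% utilization figure is my own assumption for illustration, and it strongly affects the annual energy result.

```python
UNITS = 3.5e6           # projected H100 units, from the text above
WATTS_PER_UNIT = 700    # per-unit draw, from the text above
HOURS_PER_YEAR = 24 * 365
UTILIZATION = 0.6       # assumption for illustration; not a figure from the text

# Power is an instantaneous rate (GW); energy is power over time (GWh).
peak_power_gw = UNITS * WATTS_PER_UNIT / 1e9
annual_energy_gwh = peak_power_gw * HOURS_PER_YEAR * UTILIZATION

print(f"Peak draw: {peak_power_gw:.2f} GW")
print(f"Annual energy at {UTILIZATION:.0%} utilization: {annual_energy_gwh:,.0f} GWh")
```

This counts the GPUs alone and ignores cooling, networking, and other datacenter overhead.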

Read more via Tom's Hardware.

Mixtral paper (8/Jan/2024)

We introduce Mixtral 8x7B, a Sparse Mixture of Experts (SMoE) language model. Mixtral has the same architecture as Mistral 7B…

…each token has access to 47B parameters, but only uses 13B active parameters during inference. Mixtral was trained with a context size of 32k tokens and it outperforms or matches Llama 2 70B and GPT-3.5 across all evaluated benchmarks.

The paper omits useful details such as the training dataset.
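The gap between 47B accessible and 13B active parameters comes from sparse routing: per the Mixtral paper, a router sends each token to 2 of 8 experts in each layer. Here is a toy sketch of that top-2 routing in plain Python. The function names are hypothetical, the ‘experts’ are simple scalar functions rather than feed-forward blocks, and the router logits are random stand-ins for a learned router.

```python
import math
import random

NUM_EXPERTS = 8  # the "8x" in Mixtral 8x7B
TOP_K = 2        # experts used per token, per the Mixtral paper


def route(router_logits: list[float], top_k: int = TOP_K) -> dict[int, float]:
    """Pick the top_k experts for one token and softmax-normalize their weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    peak = max(router_logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(router_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: value / total for i, value in exps.items()}


def moe_layer(token: float, experts, router_logits: list[float]) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    weights = route(router_logits)
    return sum(weight * experts[i](token) for i, weight in weights.items())


# Toy experts: each one just scales its input by a different factor.
experts = [lambda x, scale=s: x * scale for s in range(1, NUM_EXPERTS + 1)]

random.seed(0)
logits = [random.gauss(0, 1) for _ in range(NUM_EXPERTS)]  # stand-in for a learned router
weights = route(logits)
output = moe_layer(1.0, experts, logits)

print(f"Active experts for this token: {sorted(weights)} of {NUM_EXPERTS}")
```

Only the chosen experts do any work for a given token, so compute per token tracks the active parameters rather than the total.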

Read the paper: https://arxiv.org/abs/2401.04088

Try Mixtral on Poe.com (free, login): https://poe.com/Mixtral-8x7B-Chat

Try Mixtral on HuggingChat (free, no login): https://huggingface.co/chat/

Policy

EU AI Act Q&A (14/Dec/2023)

It’s often helpful to read the same thing using different words or in a slightly shifted format. We cover the EU AI Act a lot in The Memo, and here is their official Q&A.

The AI Act and the Coordinated Plan on AI are part of the efforts of the European Union to be a global leader in the promotion of trustworthy AI at international level. AI has become an area of strategic importance at the crossroads of geopolitics, commercial stakes and security concerns.

Read more: https://ec.europa.eu/commission/presscorner/detail/en/QANDA_21_1683

China’s LLMs (24/Dec/2023)

Further to my analysis in The Memo edition 31/Dec/2023, here are the official certifications for the first four frontier models.

Read more (Chinese): https://www.stcn.com/article/detail/1073270.html

Toys to Play With

Midjourney: Midlibrary (Jan/2024)

Andrei Kovalev's Midlibrary is a carefully curated collection of hand-picked Midjourney Styles and a Midjourney Guide.

Take a look: https://midlibrary.io/

Styles: https://midlibrary.io/styles

The Lost Father | Animated AI Film (4/Jan/2024)

Tech stack: Midjourney, DALL-E 3, Runway Gen-2, EverArt (used to train a model on those images), and ElevenLabs (speech-to-speech).

Watch the video (link):

Unleash creativity with Leonardo AI (4/Jan/2024)

Leonardo AI introduces a suite of AI-powered tools for creating art, aimed at artists and creators for generating unique and intricate designs with ease.

Leonardo.ai offers several fine-tuned models, including DreamShaper v7, RPG 4.0, and Isometric Fantasy.

Read more via Leonardo AI.

Perplexity labs (2024)

Mistral-medium is the ‘best’ chat model here.

Try it: https://labs.perplexity.ai/

HuggingChat (2024)

Mixtral is the ‘best’ chat model here.

Try it (free, no login): https://huggingface.co/chat/

Flashback

AI timeline (Jan/2024)

I recently updated my AI timeline, first launched in 2021, stripping it back to the key milestones and putting it in proper chronological order.

Read more: https://lifearchitect.ai/timeline/

Next

The next roundtable will be:

Life Architect - The Memo - Roundtable #6

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 27/Jan/2024 at 4PM Los Angeles

Saturday 27/Jan/2024 at 7PM New York

Sunday 28/Jan/2024 at 8AM Perth (primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!

All my very best,

Alan

LifeArchitect.ai

There was a lot of spilled coffee on the ground in that 1X EVE video ;-)

By the way, 1X is inviting 25 engineers to attend an Open House event this month. So apply if you feel qualified! See more here: https://www.1x.tech/event/open-house