The Memo - 24/Nov/2022

Stable Diffusion 2.0, Meta Galactica 120B, Microsoft/NVIDIA H100 supercomputer, and much more!

FOR IMMEDIATE RELEASE: 24/Nov/2022

Welcome back to The Memo.

This edition features a bunch of exclusive content, including Chinese AI-generated songs, one of which has 100M views. We also hear some reggae music via Jukebox, and play with GPT-3 in Roblox (based on Google SayCan) and a “human or AI” GPT-3 game!

I’ve been experimenting with livestreams to allow more interaction and Q&A during videos. You’re welcome to join the next one. You can click the ‘notify’ button to be pinged when a new livestream begins.

The BIG Stuff

Stable Diffusion 2.0 released (24/Nov/2022)

The new Stable Diffusion 2.0 base model ("SD 2.0") was released two hours ago. It was trained from scratch using the OpenCLIP-ViT/H text encoder and generates 512×512 images, with improvements over previous releases (better FID and CLIP-g scores).

It also features upscaling to 2048×2048 and beyond!

Read the release notes: https://github.com/Stability-AI/StableDiffusion

There is a demo on Hugging Face (HF), or wait for the update to hit mage.space and the official DreamStudio.

The Interesting Stuff

Meta Galactica 120B (16/Nov/2022)

Meta AI has released Galactica, a 120B-parameter model specializing in scientific data. Meta have hit on some very interesting innovations here. Training on prompts is fascinating. Maintaining full reference data is fascinating.

- “Chinchilla scaling laws”… did not take into the account of fresh versus repeated tokens. In this work, we show that we can improve upstream and downstream performance by training on repeated tokens.

- Our corpus consists of 106 billion tokens from papers, reference material, encyclopedias and other scientific sources.

- We train the models for 450 billion tokens.

- For inference Galactica 120B requires a single A100 node.
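As a rough back-of-envelope check on the figures quoted above, this sketch works out how many passes over the corpus 450 billion training tokens implies, and how much memory the 120B weights alone would need. The 2 bytes per parameter (16-bit weights) and the 8 × 80 GB node size are my assumptions for illustration; they are not stated in the quotes.

```python
# Back-of-envelope arithmetic for the Galactica figures quoted above.
CORPUS_TOKENS = 106e9      # corpus size (from the paper quote)
TRAINED_TOKENS = 450e9     # tokens seen during training (from the paper quote)
PARAMETERS = 120e9         # Galactica 120B

# Assumptions (not from the source): 16-bit weights, and a node of 8 GPUs with 80 GB each.
BYTES_PER_PARAM = 2
GPUS_PER_NODE = 8
GB_PER_GPU = 80


def epochs(trained_tokens: float, corpus_tokens: float) -> float:
    """Number of passes over the corpus implied by the token counts."""
    return trained_tokens / corpus_tokens


def weight_memory_gb(parameters: float, bytes_per_param: int) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return parameters * bytes_per_param / 1e9


passes = epochs(TRAINED_TOKENS, CORPUS_TOKENS)
weights_gb = weight_memory_gb(PARAMETERS, BYTES_PER_PARAM)
node_gb = GPUS_PER_NODE * GB_PER_GPU

print(f"Passes over the corpus: {passes:.2f}")
print(f"Weights at 16-bit: {weights_gb:.0f} GB of {node_gb} GB on the assumed node")
```

That works out to a little over four passes over the same data, which is the “repeated tokens” point in the first quote. Under the assumptions above, the weights would also fit on one node with room to spare.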

See my report card: https://lifearchitect.ai/report-card/

Read the paper: https://galactica.org/static/paper.pdf

Play with the demo: https://galactica.org/

Note: The slick demo site was pulled within 72 hours, seemingly for political/sensitivity reasons. Read the update by MIT Technology Review: https://www.technologyreview.com/2022/11/18/1063487/meta-large-language-model-ai-only-survived-three-days-gpt-3-science/

It was reinstated by a Hugging Face user, without the nice interface.

Watch my 1-hour livestream of the model release a few hours before the model demo was suspended:

VectorFusion by UC Berkeley (21/Nov/2022)

Text-to-image for vectors (SVG exports).

Prompt: the Sydney Opera House. minimal flat 2d vector icon. lineal color. on a white background. trending on artstation

MagicVideo by ByteDance (22/Nov/2022)

Efficient text-to-video by Chinese company ByteDance.

Read the paper: https://arxiv.org/abs/2211.11018

View the gallery: https://magicvideo.github.io/

SceneComposer by Johns Hopkins & Adobe (22/Nov/2022)

Text-to-image with optional layout control, by researchers at Johns Hopkins and Adobe.

Read the paper: https://arxiv.org/abs/2211.11742

View the gallery: https://zengyu.me/scenec/

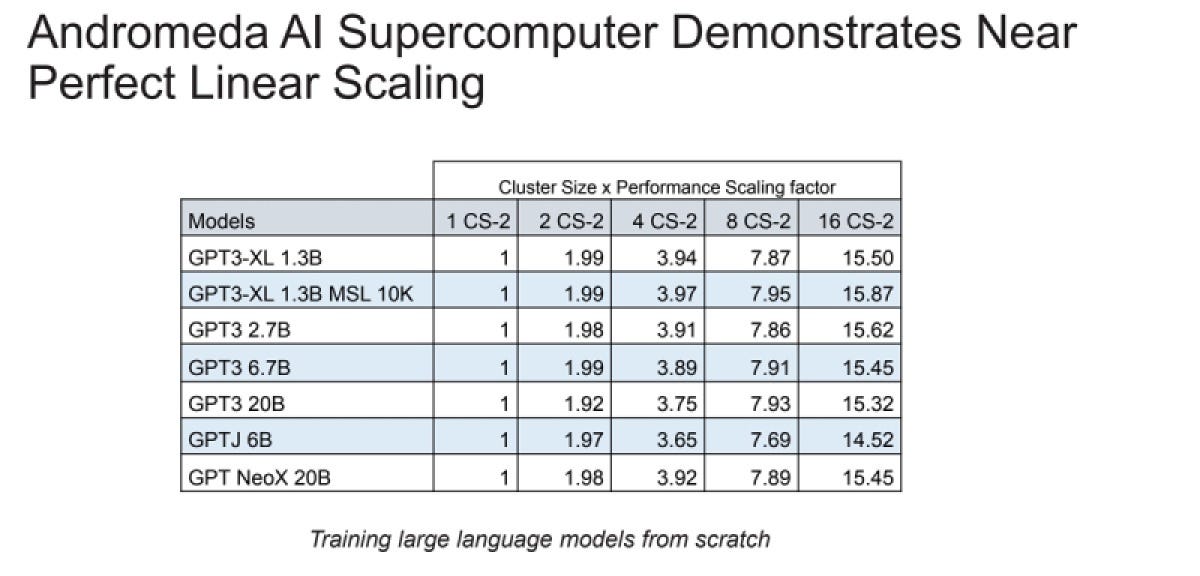

Andromeda: Cerebras’ supercomputer (14/Nov/2022)

Andromeda delivers 13.5 million AI cores and near perfect linear scaling across the largest language models. It is not really comparable to a standard supercomputer with GPUs. Andromeda is deployed in Santa Clara, California.

Read a related article by The Verge.

NVIDIA & Microsoft building a supercomputer based on the H100 (16/Nov/2022)

Back in the Jul/2022 edition of The Memo, we talked about NVIDIA’s newest H100 Hopper GPUs, the fastest AI-specific GPUs, designed for—and by—AI training!

…[H100] Hopper chips, which are up to 6x faster than the A100 chips used to train current AI models in 2021 and 2022:

“We demonstrate that not only can AI learn to design these circuits from scratch, but AI-designed circuits are also smaller and faster than those designed by [humans and even] state-of-the-art electronic design automation (EDA) tools. The latest NVIDIA Hopper GPU architecture has nearly 13,000 instances of AI-designed circuits.” — NVIDIA (8/Jul/2022)

Now, NVIDIA and Microsoft are putting 10,000+ H100s into a new supercomputer.

NVIDIA today announced a multi-year collaboration with Microsoft to build one of the most powerful AI supercomputers in the world, powered by Microsoft Azure’s advanced supercomputing infrastructure combined with NVIDIA GPUs, networking and full stack of AI software to help enterprises train, deploy and scale AI, including large, state-of-the-art models…

Future [Azure instances] will be integrated with NVIDIA Quantum-2 400Gb/s InfiniBand networking and NVIDIA H100 GPUs. Combined with Azure’s advanced compute cloud infrastructure, networking and storage, these AI-optimized offerings will provide scalable peak performance for AI training and deep learning inference workloads of any size.

Read the NVIDIA press release.

Read a related article by Ars.

The Beatles’ Revolver album de-mixed and re-mixed with AI (Nov/2022)

I first addressed this amazing AI milestone one year ago, in my Dec/2021 report The Sky is on Fire. Peter Jackson had hired a crack team of AI scientists to de-mix the single-microphone recordings of The Beatles.

In the last few months, this technology has been used to de-mix those one-microphone recordings into separate tracks, and the Revolver album has now been remixed properly. Producer Giles Martin, son of producer George Martin, says:

“There’s no one who’s getting audio even close as to what Peter Jackson’s guys can do. The funny thing, they won’t let anyone else use it — they may do eventually. But Peter’s such a big Beatles fan, he’s willing to help out. I quite like that in a way, that the Beatles are still using technologies that no one else is using. It’s really groundbreaking. The simplest way I can explain it: It’s like you giving me a cake, and then me going back to you about an hour later with flour, eggs, sugar, and all the ingredients to that cake, that all haven’t got any cake mix left on them.”

“Taxman,” for example, was famously recorded with the drums, bass, and rhythm guitar all on one track. The new tech allows separate tracks for Ringo’s kick drum, toms, hi-hats, etc. Nothing is being altered, obviously — but now we can hear more of what the lads played in the room that day. You can hear details buried way down in the mix, like the acoustic guitar in “For No One,” or the finger snaps in “Here, There, and Everywhere.” — Rolling Stone (Sep/2022) and Vulture (Nov/2022).

Listen to the new multi-track remix in the trailer video:

State of AI report by AI investors Nathan Benaich and Ian Hogarth (Oct/2022)

Key themes in the 2022 Report include:

New independent research labs are rapidly open sourcing the closed source output of major labs. Despite the dogma that AI research would be increasingly centralised among a few large players, the lowered cost of and access to compute has led to state-of-the-art research coming out of much smaller, previously unknown labs. Meanwhile, AI hardware remains strongly consolidated to NVIDIA.

Safety is gaining awareness among major AI research entities, with an estimated 300 safety researchers working at large AI labs, compared to under 100 in last year's report, and the increased recognition of major AI safety academics is a promising sign when it comes to AI safety becoming a mainstream discipline.

The China-US AI research gap has continued to widen, with Chinese institutions producing 4.5 times as many papers than American institutions since 2010, and significantly more than the US, India, UK, and Germany combined. Moreover, China is significantly leading in areas with implications for security and geopolitics, such as surveillance, autonomy, scene understanding, and object detection.

AI-driven scientific research continues to lead to breakthroughs, but major methodological errors like data leakage need to be interrogated further. Even though AI breakthroughs in science continue, researchers warn that methodological errors in AI can leak to these disciplines, leading to a growing reproducibility crisis in AI-based science driven in part by data leakage.

Take a look: https://www.stateof.ai/ (114 slides)

Notion and BundleIQ writing tools integrate new language models (Nov/2022)

Notion: https://www.notion.so/ai

BundleIQ: https://bundleiq.medium.com/bundleiq-an-ai-powered-writing-assistant-6f823138e37e

Midjourney v4 used for commercialized products (7/Nov/2022)

The latest Midjourney v4 (as reported in the previous edition of The Memo) was launched this month, and users are already applying it to business and real products…

Notice how simple the prompts can be in Midjourney v4. They are obviously being expanded during priming.

Prompts:

commercial shot of raspberry, teal background, splashes, juicy --v 4

commercial shot of passionfruit, yellow background, splashes, juicy --v 4

commercial shot of hops, green background, splashes, juicy --v 4
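The three prompts above share one pattern, with only the subject and background colour changing. Here is a minimal sketch of that pattern as a template. The helper name `build_prompt` and the `TEMPLATE` constant are hypothetical, and the code only builds text to paste into Midjourney; it does not call Midjourney itself.

```python
# Hypothetical helper: builds prompts in the same pattern as the three examples above.
TEMPLATE = "commercial shot of {subject}, {colour} background, splashes, juicy --v 4"


def build_prompt(subject: str, colour: str) -> str:
    """Fill the subject and background colour into the shared prompt pattern."""
    return TEMPLATE.format(subject=subject, colour=colour)


# Subject/background pairs taken from the prompts listed above.
PAIRS = [("raspberry", "teal"), ("passionfruit", "yellow"), ("hops", "green")]

prompts = [build_prompt(subject, colour) for subject, colour in PAIRS]
for prompt in prompts:
    print(prompt)
```

Adding another pair to `PAIRS` produces a new prompt in the same style.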

Read more from the reddit thread.

AI at the FIFA World Cup (Nov/2022)

DeepMind’s animation compares the real movements of players during a football match (attackers, dark blue; defenders, dark red) with predictions from a model that forecasts the paths of off-camera players. The grey shaded area is the television camera’s field of view (FOV), which follows the ball (black line). For players outside the FOV, the model predicts the position of attackers (green) and defenders (orange; actual off-camera positions are coloured light blue and pink, respectively).

Through November and December I’ll be checking in on my ‘once every four years’ viewing of the FIFA World Cup. There is certainly some interesting tech being implemented here, related to AI and ML. Outside of the game (and not currently related to FIFA), DeepMind has been working with Liverpool FC.

Karl Tuyls, a computer scientist at DeepMind, says the off-camera modelling work is the first step towards creating a virtual, AI-driven assistant coach that uses real-time data to guide decision-making in football and other sports. “You can imagine the AI looking at the first-half performance and suggesting a change in formation that might do better,” he says.

Read more: Nature (15/Nov/2022).

Inside the FIFA event, ML tech is helping with air-conditioning, crowd movement tracking, security, and in-game tracking of players and the ball.

The new technology uses 12 dedicated tracking cameras mounted underneath the roof of the stadium to track the ball and up to 29 data points of each individual player, 50 times per second, calculating their exact position on the pitch. The 29 collected data points include all limbs and extremities that are relevant for making offside calls…

…adidas’ official match ball for Qatar 2022™, will provide a further vital element for the detection of tight offside incidents as an inertial measurement unit (IMU) sensor will be placed inside the ball. This sensor, positioned in the centre of the ball, sends ball data to the video operation room 500 times per second…
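To get a feel for the data volumes in those two quotes, here is a rough sketch of the arithmetic. The 29 points, 50 updates per second and 500 ball updates per second come from the FIFA text; the 22 players on the pitch and the 90 minutes of play are my assumptions, and the results are upper bounds because the quote says “up to” 29 points.

```python
# Rough data-rate arithmetic for the tracking figures quoted above.
POINTS_PER_PLAYER = 29      # "up to 29 data points" per player (FIFA quote)
CAMERA_HZ = 50              # player tracking updates per second (FIFA quote)
BALL_HZ = 500               # ball sensor updates per second (FIFA quote)

# Assumptions (not from the source): 22 players on the pitch, 90 minutes of play.
PLAYERS_ON_PITCH = 22
MATCH_SECONDS = 90 * 60


def player_points_per_second(players: int, points: int, hz: int) -> int:
    """Upper bound on player data points captured each second."""
    return players * points * hz


per_second = player_points_per_second(PLAYERS_ON_PITCH, POINTS_PER_PLAYER, CAMERA_HZ)
per_match = per_second * MATCH_SECONDS
ball_samples_per_update = BALL_HZ // CAMERA_HZ

print(f"Player data points per second (upper bound): {per_second:,}")
print(f"Player data points per match (upper bound): {per_match:,}")
print(f"Ball sensor samples per player-tracking update: {ball_samples_per_update}")
```

Under these assumptions the ball sensor reports ten times for every player-tracking update, which helps explain why it matters for tight offside calls.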

Read more: FIFA (Nov/2022).

Bonus: The in-stadium AR (on a phone/device) is phenomenal!

Toys to Play With

Exclusive: Chinese songs generated by AI, some with 100M views (Nov/2022)

…by the end of September, TME says it had created and released over 1,000 songs with human-style vocals manufactured by the [AI] Lingyin Engine.

One of those tracks has set the standard for popularity… a version of one song, which appears to be called Today (English translation), “has become the first song by an AI singer to be streamed over 100 million times across the internet”.

…TME’s Executive Chairman, explained to analysts earlier today (November 15) that TME used the Lingyin Engine to “pay tribute” to Anita Mui by “creating an AI code based on her [voice]” for a new track – May You Be Treated Kindly By This World [English translation].

Anita Mui passed away in Dec/2003; this song was generated by AI around Sep/2022:

GPT-3 in VR in Rotterdam (28/Nov/2022)

“Our GPT3 powered VR venue https://quantumbar.ai will be serving engaging AI conversations in SocialVR on its World Premiere at VRDays’ ChurchOfVR in Rotterdam next week - November 28th until December 2nd, 2022.”

Read more: https://quantumbar.ai/

Watch the demo video:

Connect GPT-3 with Google Sheets (21/Nov/2022)

Read my steps: https://lifearchitect.ai/sheets/

Watch my livestream (1 hour):

Reggae music generated by AI (Nov/2022)

The Memo reader Efosa has been using OpenAI’s Jukebox (released around the same time as GPT-3, but largely ignored by the media) to generate new reggae music. The results are astonishing. Track and album names are by GPT-2 (Replika) and GPT-3 (Emerson), and the music is completely generated by the Transformer-based music model, Jukebox. Take a listen to this track, ‘Epic’.

The ‘full length, 13 track reggae album completely improvised by AI on its own’ will be mastered and available soon.

GPT-3 in Roblox based on Google SayCan (23/Nov/2022)

Designed and developed by James Weaver from IBM.

Play it yourself: https://www.roblox.com/games/11462889413/GPT-3-SayCan-NPC

Watch the video:

Text written by human or GPT-3? (Oct/2020)

It’s an older game, but I haven’t mentioned it before. Real or fake text?

How good are you at knowing when text has been written by a computer? How many sentences can a computer write before it no longer has you fooled? Find out for yourself!

Play the game: http://www.roft.io/

Next

End of year report

My end of year report will be available soon, and the first draft will be sent to paid readers of The Memo.

“AI Panic”

I’ve been addressing the very real AI panic that shows up almost by default in members of the public. You may enjoy this older article by Diane Proudfoot, published in IEEE Spectrum five years before GPT-3: https://lifearchitect.ai/ai-panic/

All my very best,

Alan

LifeArchitect.ai

Housekeeping…

Unsubscribe:

Older subscriptions before 17/Jul/2022, please use the older interface or just reply to this email and we’ll stop your payments and take you off the list!

Newer subscriptions from 17/Jul/2022, please use Substack as usual.

Note that the subscription fee for new subscribers will increase from 1/Jan/2023. If you’re a current subscriber, you’ll always be on your old/original rate while you’re subbed.