The Memo - 30/Aug/2023

Llama-3 and OLMo rumours, Consensus, The Line, and much more!

FOR IMMEDIATE RELEASE: 30/Aug/2023

Welcome back to The Memo.

You’re reading alongside policy writers and decision makers within governments, agencies, and intergovernmental organisations including the ███, ██████, the ████, the ███, the █████ Government, the ███, the Government of █████, the trillion-dollar foreign reserves management company behind the Government of █████████, and more…

The winner of the Who Moved My Cheese? AI Awards! for August 2023 is theoretical physicist Prof Michio Kaku, who (very wrongly) refers to LLMs as ‘glorified tape recorders’:

It takes snippets of what’s on the web created by a human, splices them together and passes it off as if it created these things… And people are saying, ‘Oh my God, it’s a human, it’s humanlike.’

An author once said: ‘Better to remain silent and be thought a fool than to speak and to remove all doubt.’

This is another very long edition, and if you’re interested in reading and/or listening, there are several hours of updates here. In the Toys to play with section, we look at the latest way to run Llama 2 on Mac, a new gold-standard LLM platform for academic research and writing, Harvey Castro MD’s new LLM one-pagers, a new free DALL-E 2 experiment, and a rehearsal version of my latest keynote for a major government.

I will be ramping up the livestreams over the next few weeks. The best way to be notified about those is to click some buttons (Subscribe ➜ Notify) on the YouTube channel:

https://www.youtube.com/c/DrAlanDThompson

The next roundtable for full subscribers will be:

Life Architect - The Memo - Roundtable #3 with Harvey Castro

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 23/Sep/2023 at 5PM Los Angeles

Saturday 23/Sep/2023 at 8PM New York

Sunday 24/Sep/2023 at 8AM Perth (primary/reference time zone)

or check your timezone via Google.

Details at the end of this edition.

The BIG Stuff

AI vs Human: The Creativity Experiment (29/Aug/2023)

I recently appeared on ABC Catalyst in Australia. The documentary is called AI vs Human: The Creativity Experiment, and also features my friend and colleague Prof Jeremy Howard (yes, the Aussie who invented large language model training and fine-tuning as we know it!).

In Australia, you can stream on ABC iview with some alternate viewing times on ABC TV.

Preview clip:

ChatGPT Enterprise (28/Aug/2023)

It’s the most common concern I hear during my keynotes: What about my data? Microsoft Azure offered a solution to that during the first half of 2023, and OpenAI now has a direct offering: ChatGPT Enterprise.

You own and control your business data in ChatGPT Enterprise. We do not train on your business data or conversations, and our models don’t learn from your usage. ChatGPT Enterprise is also SOC 2 compliant and all conversations are encrypted in transit and at rest. Our new admin console lets you manage team members easily and offers domain verification, SSO, and usage insights, allowing for large-scale deployment into enterprise.

Notably, ChatGPT Enterprise provides GPT-4 as ‘unlimited’ and 2x faster than standard.

Read more: https://openai.com/blog/introducing-chatgpt-enterprise

See OpenAI’s pen test and compliance docs: https://trust.openai.com/

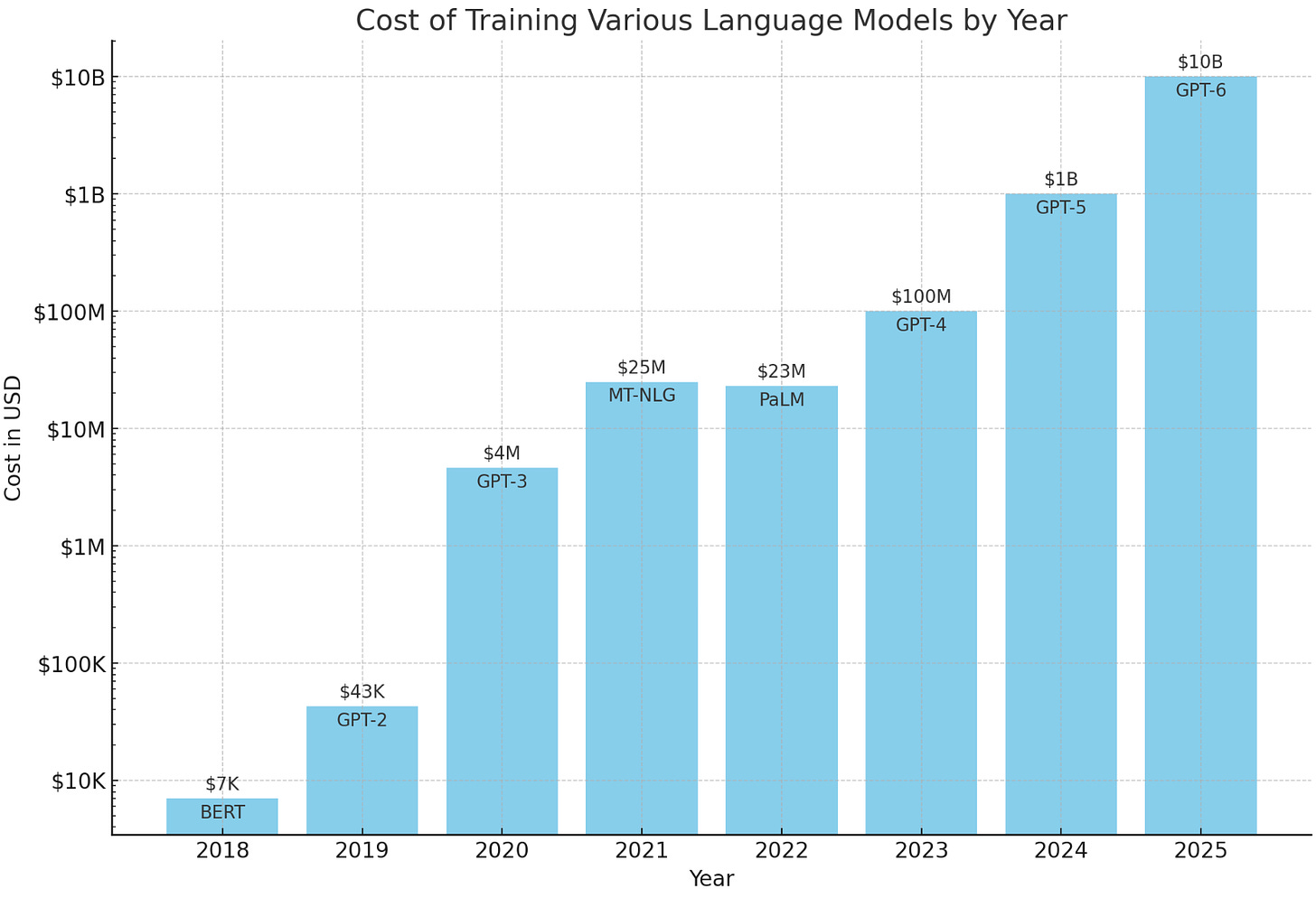

Increasing LLM training budgets (25/Aug/2023)

Anthropic’s CEO Dario Amodei says:

Right now the most expensive model [in Aug/2023] costs +/- $100m. Next year we will have $1B+ models. By 2025, we may have a $10B model. (24/Aug/2023, Twitter)

Here are my draft numbers for training spend on an LLM (in USD) over the years:

2018: BERT 330M (4 days x 64 TPU v2): $7K

2019: GPT-2 1.5B (7 days x 256 TPU v3): $43K

2020: GPT-3 175B (1 month x 1,024 V100s): $4.6M

2021: MT-NLG 530B (3 months x 2,000 A100s): $25M

2022: PaLM 540B (64 days x 6,144 TPU v4): $23.1M

2022: BLOOM 176B (176 days x 384 A100s): $5M

2023: GPT-4/PaLM 2/Gemini (Gemini on TPU v5) 2T: $100M

2024: GPT-5 (4 months x 25,000 H100s): $1B

2025: GPT-6 (hardware not yet announced): $10B

GPT-4 (my experience is that this model is more like GPT-4.5 when using ‘Advanced Data Analysis’, which is the new name for the ‘Code Interpreter’ as of 28/Aug/2023) kindly generated this chart within seconds based on my rough working above:

And here’s a paper about model training costs up to 2021: https://epochai.org/blog/trends-in-the-dollar-training-cost-of-machine-learning-systems

For a little bit of history, see an article from all the way back in 2019, ‘The Staggering Cost of Training SOTA AI Models’ (Jun/2019). As pointed out there, ‘the cost of the compute used to train models is also expected to become significantly cheaper with the continuing advance of algorithms, computing devices, and engineering efforts.’

In plain English, as we move along the timeline, AI labs continue to increase their training spend, just as the capability of training hardware increases (and/or hardware cost decreases). A perfect storm for the evolution of humanity.
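For anyone who wants to play with the numbers, here is a rough sketch of the yearly growth factor implied by my draft spend figures listed earlier. It assumes the most expensive model is taken for each year (so BLOOM is left out of 2022), and the 2024 and 2025 entries are projections, not reported costs. The names `draft_spend_usd` and `annual_growth` are just illustrative.

```python
# Draft training-spend figures (USD) from the list earlier in this section.
# Assumption: where a year lists several models, the most expensive one is used.
draft_spend_usd = {
    2018: 7_000,           # BERT 330M
    2019: 43_000,          # GPT-2 1.5B
    2020: 4_600_000,       # GPT-3 175B
    2021: 25_000_000,      # MT-NLG 530B
    2022: 23_100_000,      # PaLM 540B (BLOOM 176B at $5M is omitted)
    2023: 100_000_000,     # GPT-4 / PaLM 2 / Gemini
    2024: 1_000_000_000,   # GPT-5 (projected)
    2025: 10_000_000_000,  # GPT-6 (projected)
}


def annual_growth(spend, start, end):
    """Compound annual growth factor in spend between two years."""
    return (spend[end] / spend[start]) ** (1 / (end - start))


observed = annual_growth(draft_spend_usd, 2018, 2023)
projected = annual_growth(draft_spend_usd, 2023, 2025)
print(f"2018-2023: about {observed:.1f}x per year")
print(f"2023-2025: about {projected:.1f}x per year")
```

On these draft figures, the projected 10x per year for 2024 and 2025 is a step up from the average yearly multiple across 2018 to 2023.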

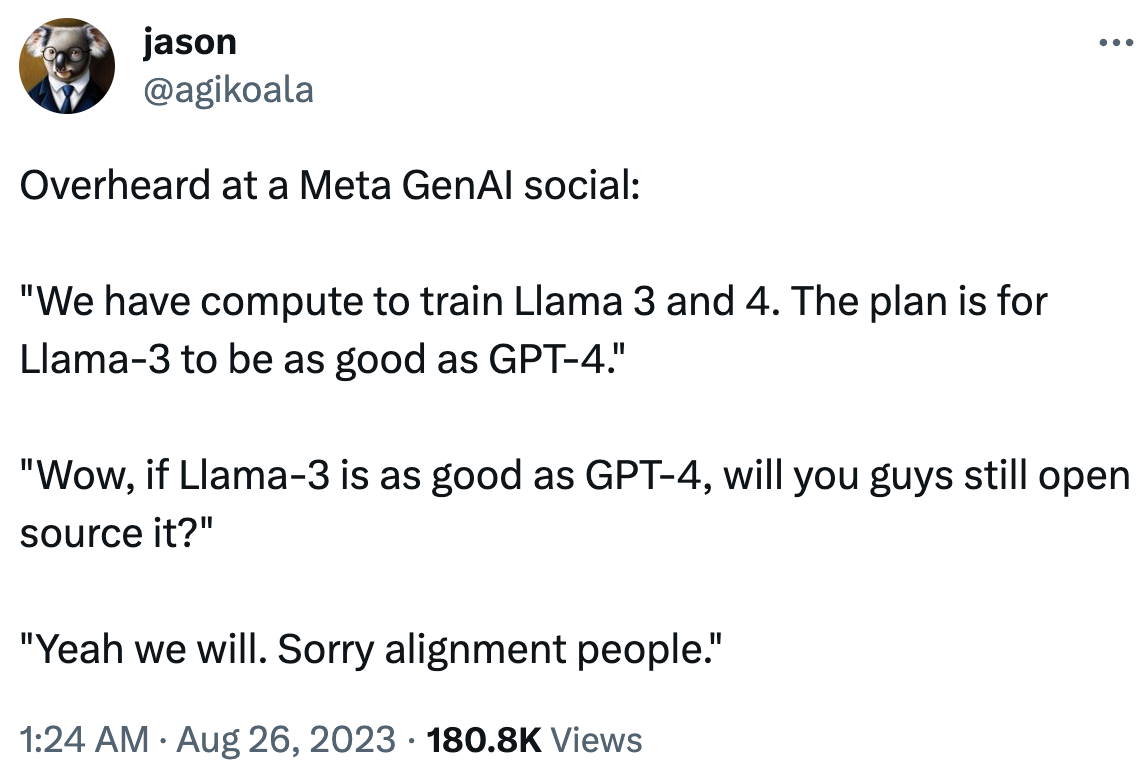

Llama-3 and Llama-4 rumours (26/Aug/2023)

Overheard at a Meta GenAI social: "We have compute to train Llama 3 and 4. The plan is for Llama-3 to be as good as GPT-4." "Wow, if Llama-3 is as good as GPT-4, will you guys still open source it?" "Yeah we will. Sorry alignment people."

From: https://twitter.com/agikoala/status/1695125016764157988

I’d like to see Llama-3 hitting at least 340B parameters (in line with PaLM 2, the largest dense model available today) and trained on 7T tokens. Any predictions on Llama-4 may be too far ahead to guess!

Here’s my take on alignment (and its damage): https://lifearchitect.ai/alignment/#rlhf

Read these horrifying examples of alignment documented by the Ollama team: https://ollama.ai/blog/run-llama2-uncensored-locally

The Interesting Stuff

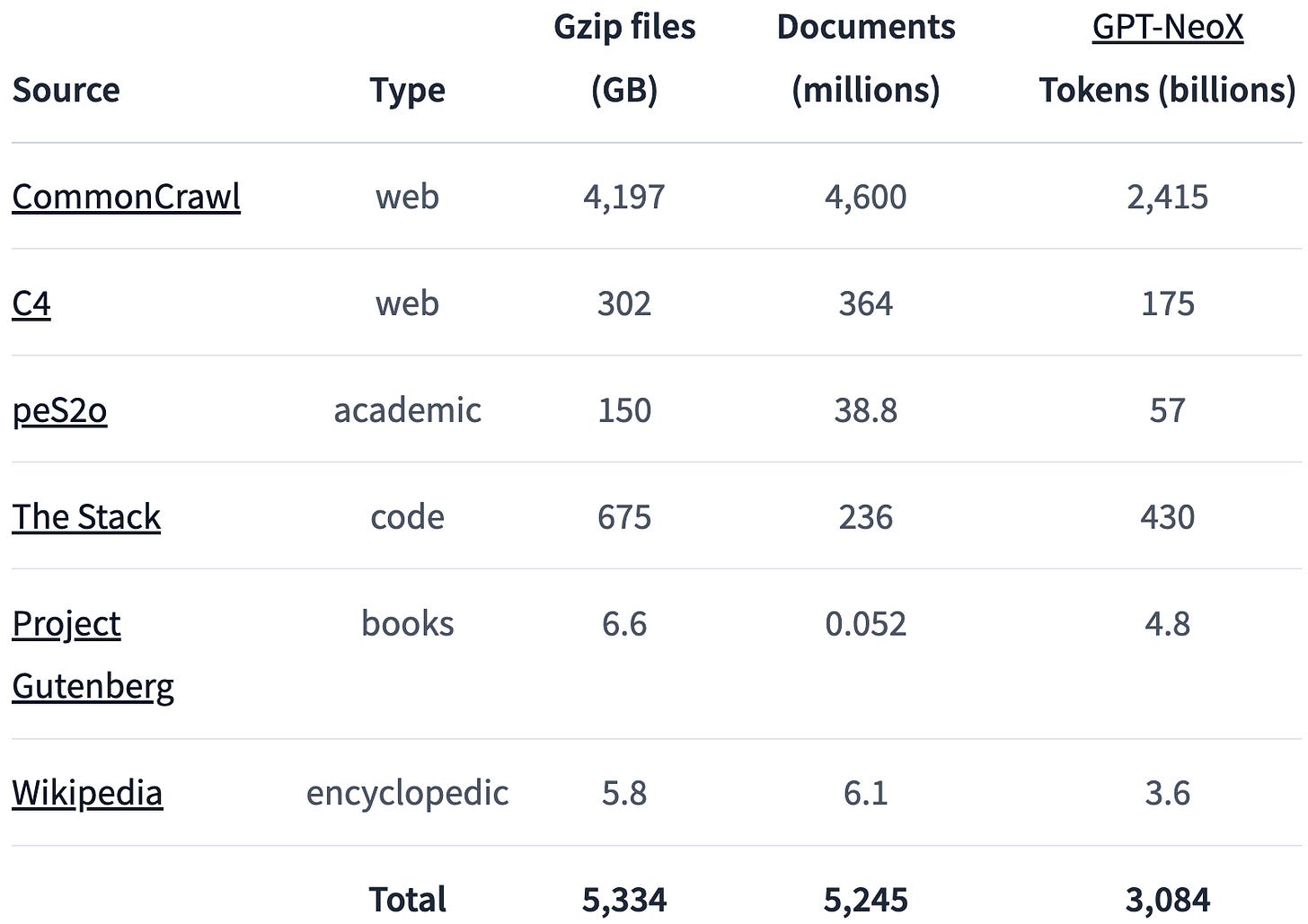

Allen AI introduces Dolma dataset, OLMo 70B coming soon (18/Aug/2023)

As usual, AllenAI provides a rigorous and detailed overview of their newest 3T token dataset, Dolma, “Data to feed OLMo’s Appetite”. Congrats to Jesse and the team.

This dataset is available to all, and will be used to train their upcoming language model, OLMo 70B, expected in Q1 2024. Dolma is said to be the ‘largest open dataset to date,’ because the next biggest (TII’s RefinedWeb) only released a portion (600B tokens) of their full 5T token dataset.

Read my What’s in My AI report from Mar/2022.

See also my Datasets Table.

Read the Dolma release on the Allen AI blog.

Download Dolma dataset (5.3TB zip): https://huggingface.co/datasets/allenai/dolma

This could be a competitor to Llama-2, but with the pace of change, it is likely that OLMo will be surpassed before its release. Keep in mind just how rapidly this space is evolving:

Feb/2023: Llama-1 65B (on 1.4T tokens) released.

Jul/2023: Llama-2 70B (on 2T tokens) released.

Aug/2023: Rumours that Llama-3 is being prepared.

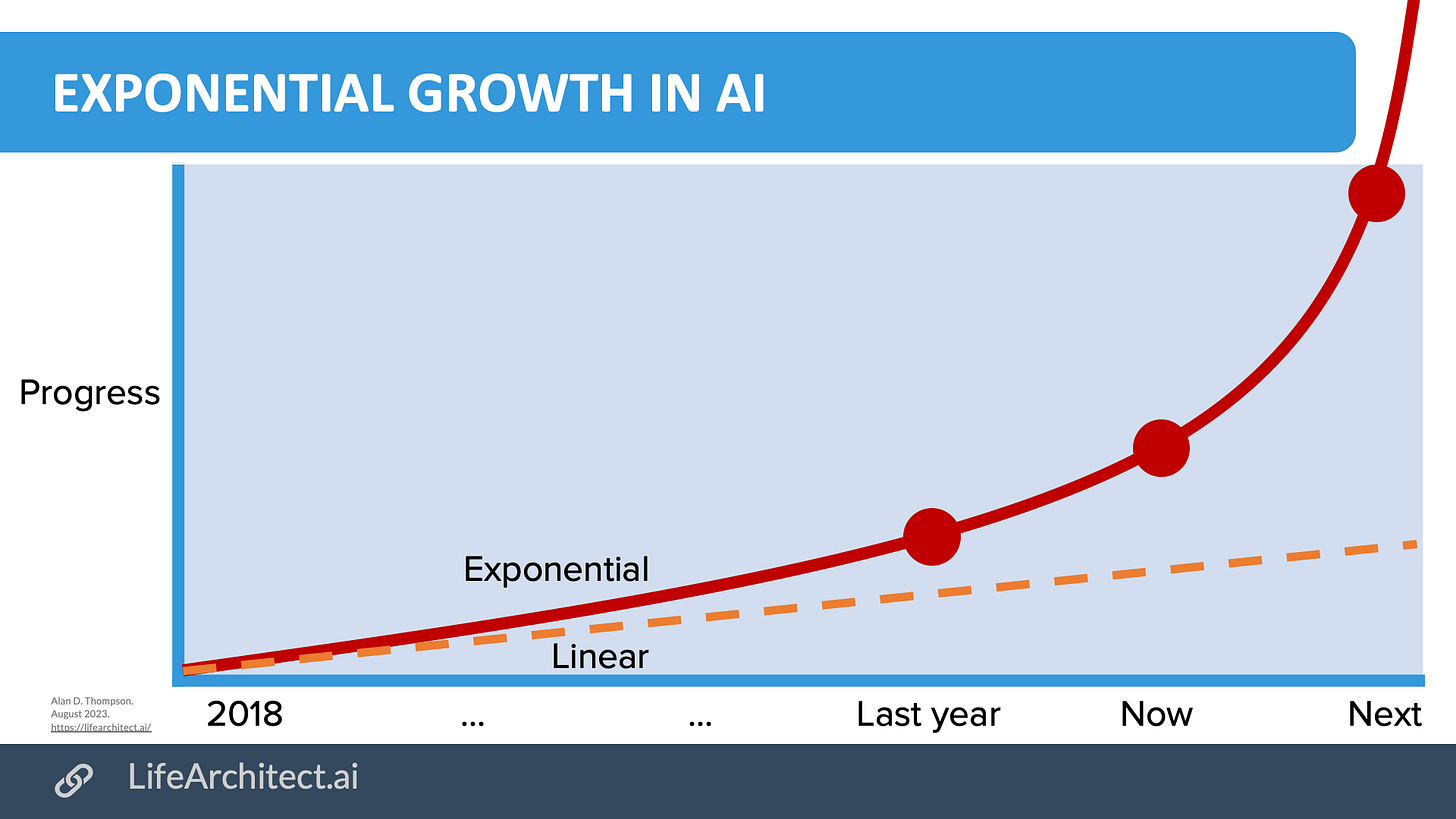

That’s a tiny five-month gap between the first two major releases. Note my new draft viz on exponential advances!

Semianalysis: Google Gemini Eats The World – Gemini Smashes GPT-4 By 5X, The GPU-Poors (28/Aug/2023)

Google has woken up, and they are iterating on a pace that will smash GPT-4 total pre-training FLOPS by 5x before the end of the year. The path is clear to 100x by the end of next year given their current infrastructure buildout [of TPUv5 Viperfish chips].

The GPU poor are still mostly using dense models because that’s what Meta graciously dropped on their lap with the LLAMA series of models. Without ~~God’s~~ Zuck’s good grace, most open source projects would be even worse off. If they were actually concerned with efficiency, especially on the client side, they’d be running sparse model architectures like MoE, training on these larger datasets, and implementing speculative decoding like the Frontier LLM Labs (OpenAI, Anthropic, Google Deepmind). (28/Aug/2023, Semianalysis)

While this analysis is far from conclusive, and confuses future (Dec/2023) with present (Aug/2023), it’s an interesting read.

My analysis asserts that Google DeepMind Gemini has finished training as of August 2023, and will be released shortly after fine-tuning and ‘alignment’. I also stand by my prediction that Gemini’s language portion will be around 2-3T parameters via MoE, whereas Semi’s calculation of 5x compute (not available until Dec/2023) of GPT-4 (1.76T x 5) would make it 8.8T parameters via MoE.

Gemini is also not just a language model. It will be multimodal; likely a Visual-Language-Action (VLA) model with access to other AI like AlphaGo, AlphaZero, and AlphaFold.

Read more: https://lifearchitect.ai/gemini/

UC Berkeley conference on LLMs (20/Aug/2023)

Large Language Models and Transformers

Location: UC Berkeley: Calvin Lab auditorium and livestream

Date: Monday, Aug. 14 – Friday, Aug. 18, 2023

We’re living in the future when entire conferences are available in a neat format almost immediately! And what a conference, each presentation more powerful than the last (except Ilya, who talked about… nothing). If you have a lot of time, watch every video, especially Scott Aaronson’s detail about watermarking LLMs (video link).

Watch all videos: Large language models and Transformers at Berkeley, August, 2023.

If you don’t have a lot of time, the summary paragraphs are excellent.

Read: ‘Report on the large language model meeting’ at UC Berkeley, August, 2023.

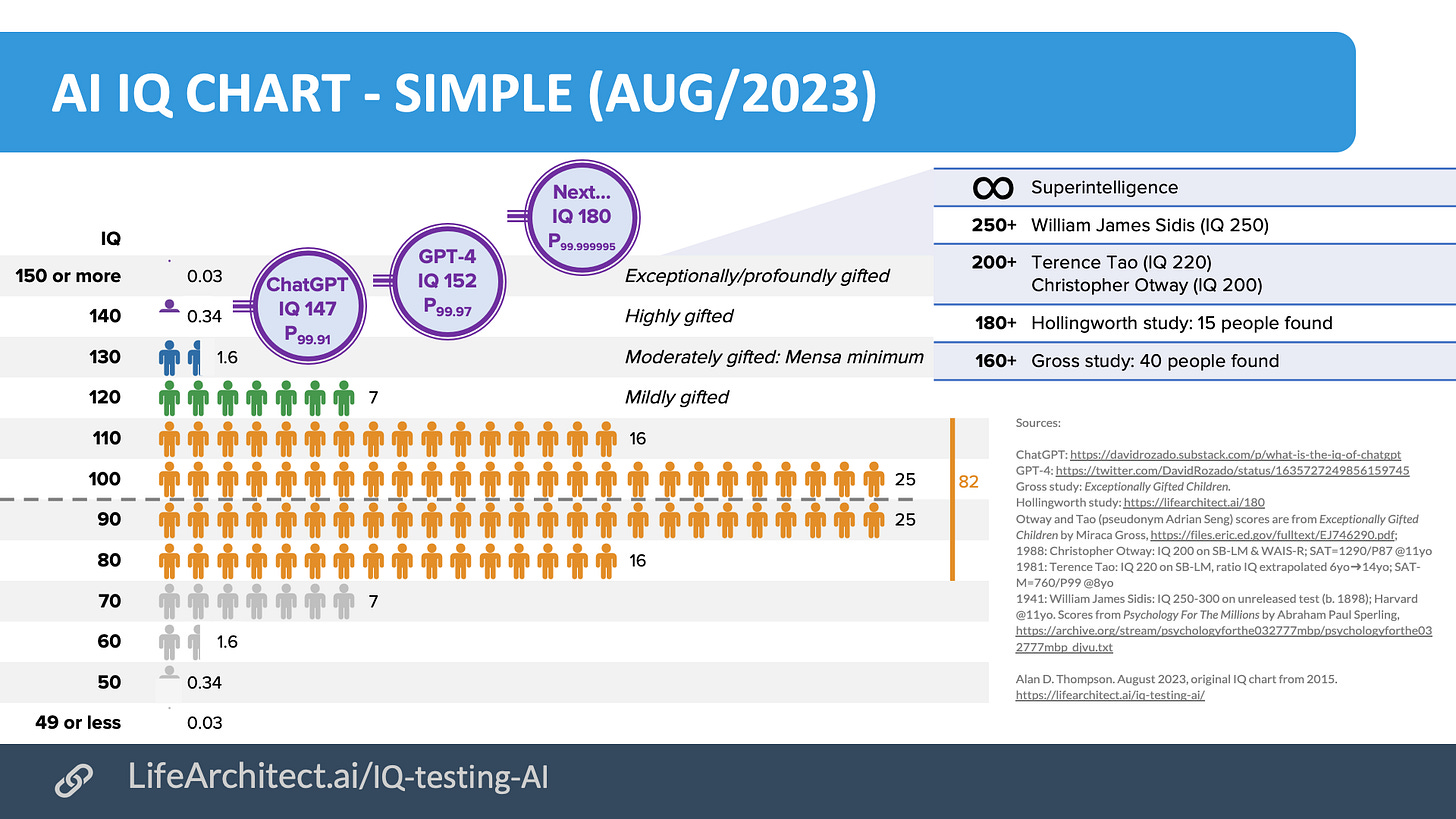

New IQ viz (Aug/2023)

This viz is still in draft, but I’m attaching it here for full subscribers.

While I’ve used NZ Professor David Rozado’s GPT testing results here, it’s worth noting that in Mar/2023, clinical psychologist Eka Roivainen used the widely-accepted WAIS III instrument (Wechsler Adult Intelligence Scale, Third Edition) to assess the model via the ChatGPT interface (assuming GPT-4). The result was nearly the same: an IQ of 155, which puts it in the 99.987th percentile of the human population.

Read more about WAIS testing GPT via Scientific American.
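As a quick sanity check on that percentile, here is a minimal sketch that converts an IQ score to a percentile. It assumes scores follow a normal distribution with mean 100 and standard deviation 15 (the usual Wechsler convention); the function name `iq_percentile` is just illustrative.

```python
import math


def iq_percentile(iq, mean=100.0, sd=15.0):
    """Percentile of an IQ score, assuming a normal distribution of scores."""
    z = (iq - mean) / sd
    # Standard normal CDF written with the error function.
    return 100 * 0.5 * (1 + math.erf(z / math.sqrt(2)))


print(f"IQ 155 sits at roughly the {iq_percentile(155):.3f}th percentile")
```

Under those assumptions, an IQ of 155 lands between the 99.98th and 99.99th percentile, in line with the figure quoted above.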

New robot viz (Aug/2023)

Here’s my latest viz on the coming LLM-backed humanoid robots, some of which are ready for order or pre-order.

My favourite is still the OpenAI-backed 1X and their NEO robot: https://www.1x.tech/neo

Latest BMI updates (Aug/2023)

Stanford gave Pat Bennett a new brain implant which converts brain signals to words on a screen.

Read the paper: https://www.nature.com/articles/s41586-023-06377-x

UC Berkeley gave Ann Johnson a new brain implant which converts brain signals to words via an avatar.

Read more via NYT: https://archive.md/Flin9

Read the paper: https://www.nature.com/articles/s41586-023-06443-4

Watch Ann speak using her avatar (link):

The World Isn’t Ready for the Next Decade of AI: Mustafa Suleyman (16/Aug/2023)

I’m not a huge fan of those who point to problems without offering a solution, and even less of those who run around with their hair on fire screaming about the sky falling. Suleyman is one of the founders of DeepMind, and is currently co-founder and CEO of Inflection AI (creators of Pi), now valued at $4B.

…at Inflection, we are developing an AI called Pi, which stands for Personal Intelligence, and it is more narrowly focused on being a personal AI. Quite different to an AI that learns any challenging professional skill. A personal AI, in our view, is one that is much closer to a personal assistant; it's like a chief of staff, it's a friend, a confidant, a support, and it will call on the right resource at the right time depending on the task that you give it.

So it will be able to use APIs, but that doesn't mean that you can prompt it in the way that you prompt another language model, because this is not a language model, Pi is an AI. GPT is a language model. They're very, very different things.

The first stage of putting together an AI is that you train a large language model. The second step is what's called fine-tuning, where you try to align or restrict the capabilities of your very broadly capable pretrained model to get it to do a specific task.

With the wave of AI, the scale of these models has grown by an order of magnitude that is 10X every single year for the last 10 years. And we're on a trajectory over the next five years to increase by 10X every year going forward, and that's very, very predictable and very likely to happen.

Read more via Wired: https://archive.md/SHtig

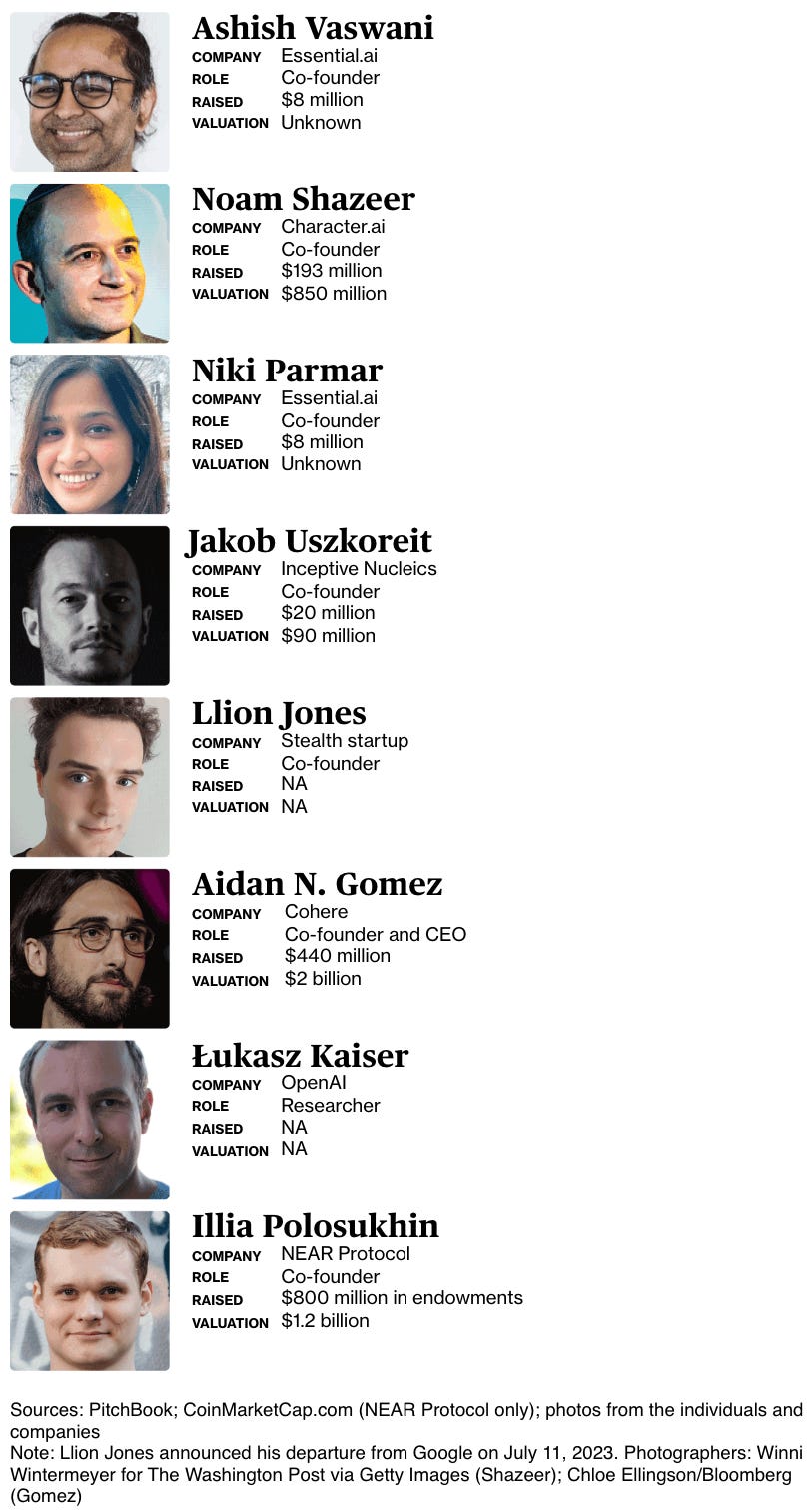

Transformer: All 8 researchers have now left Google (Jul/2023)

The paper’s eight authors had created the Transformer, a system that made it possible for machines to generate humanlike text, images, DNA sequences and many other kinds of data more efficiently than ever before. Their paper would eventually be cited more than 80,000 times by other researchers, and the AI architecture they designed would underpin OpenAI’s ChatGPT (the “T” stands for Transformer), image-generating tools like Midjourney and more.

There was nothing unusual about Google sharing this discovery with the world. Tech companies often open source new techniques to get feedback, attract talent and build a community of supporters. But Google itself didn’t use the new technology straight away. The system stayed in relative hibernation for years as the company grappled more broadly with turning its cutting-edge research into usable services. Meanwhile, OpenAI exploited Google’s own invention to launch the most serious threat to the search giant in years…

The last of the eight authors to remain at Google, Llion Jones, confirmed this week that he was leaving to start his own company.

San Francisco launches driverless bus service following robotaxi expansion (19/Aug/2023)

These things are on a fixed loop and include a human chaperone (for now).

The shuttles are operated by Beep, an Orlando, Florida-based company that has run similar pilot programs in more than a dozen U.S. communities, including service at the Miami Zoo, Mayo Clinic and Yellowstone National Park…

“The autonomous vehicle will have a better reaction time than a human and it will offer a more reliable service because they won’t be distracted.”

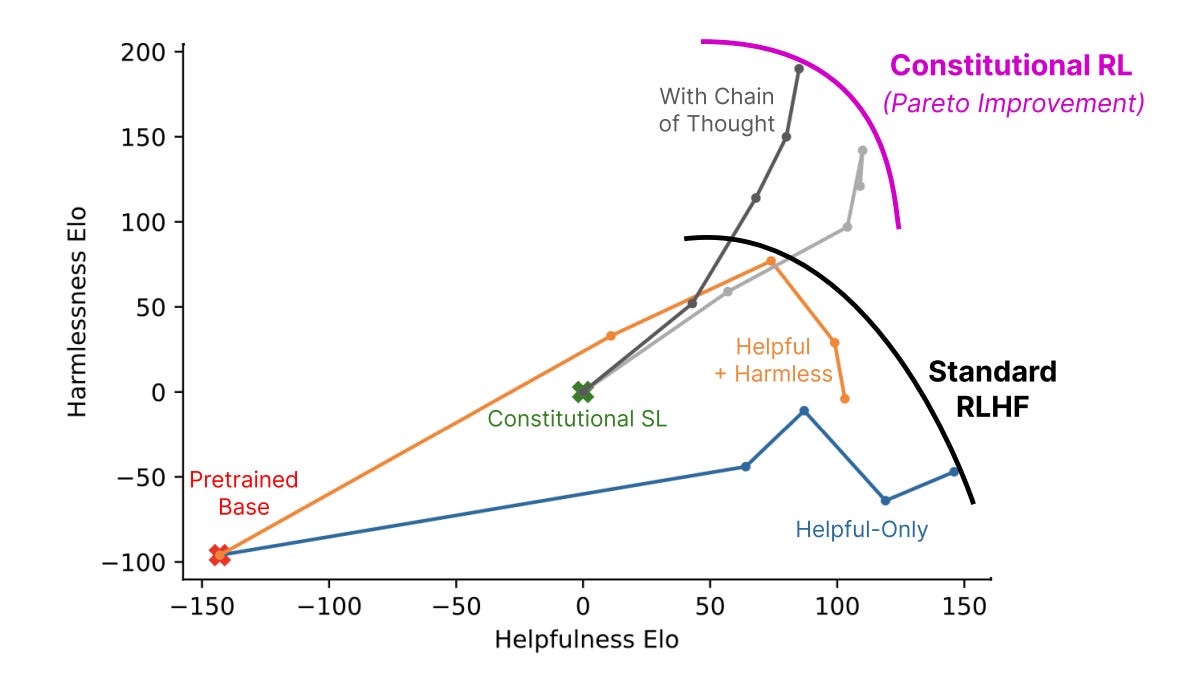

RLHF vs RLAIF for language model alignment (22/Aug/2023)

I considered writing this kind of piece, and someone else has done it for me! It’s an excellent look at Constitutional AI using AI feedback, as demonstrated with Anthropic’s Claude model.

Read it: https://www.assemblyai.com/blog/rlhf-vs-rlaif-for-language-model-alignment/

Meta AI: CodeLlama-34B (25/Aug/2023)

Code Llama is a code-specialized version of Llama 2 that was created by further training Llama 2 on its code-specific datasets, sampling more data from that same dataset for longer. Essentially, Code Llama features enhanced coding capabilities. It can generate code and natural language about code, from both code and natural language prompts (e.g., “Write me a function that outputs the fibonacci sequence”). It can also be used for code completion and debugging. It supports many of the most popular programming languages used today, including Python, C++, Java, PHP, Typescript (Javascript), C#, Bash and more.

We are releasing three sizes of Code Llama with 7B, 13B and 34B parameters respectively. Each of these models is trained with 500B tokens of code and code-related data.

Read more: https://about.fb.com/news/2023/08/code-llama-ai-for-coding/

Phind: Beating GPT-4 on HumanEval with a Fine-Tuned CodeLlama-34B (26/Aug/2023)

We have fine-tuned CodeLlama-34B and CodeLlama-34B-Python on an internal Phind dataset that achieved 67.6% and 69.5% pass@1 on HumanEval, respectively. GPT-4 achieved 67% according to their official technical report in March. To ensure result validity, we applied OpenAI's decontamination methodology to our dataset.

The CodeLlama models released yesterday demonstrate impressive performance on HumanEval.

CodeLlama-34B achieved 48.8% pass@1 on HumanEval

CodeLlama-34B-Python achieved 53.7% pass@1 on HumanEval

Read more: https://www.phind.com/blog/code-llama-beats-gpt4
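If you haven’t met pass@1 before: it is the share of problems for which a single generated solution passes the unit tests. Below is a minimal sketch of the more general pass@k estimator described in OpenAI’s Codex/HumanEval paper. The Phind post doesn’t spell out how its numbers were computed, so treat this as an illustration only; the `toy` results are hypothetical.

```python
from math import comb


def pass_at_k(n, c, k):
    """Estimate pass@k for one problem: n samples generated, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k):
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for four problems, one sample each (so k=1).
toy = [(1, 1), (1, 0), (1, 1), (1, 1)]
print(benchmark_pass_at_k(toy, 1))  # 0.75
```

With k=1 the estimator reduces to the fraction of correct samples, which is why a pass@1 score reads like a simple percentage of problems solved on the first attempt.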

Consciousness in Artificial Intelligence: Insights from the Science of Consciousness (22/Aug/2023)

From 19 authors including Turing Award-winner Prof Yoshua Bengio, this paper looks at criteria for measuring consciousness.

To be included, a theory had to be based on neuroscience and supported by empirical evidence, such as data from brain scans during tests that manipulate consciousness using perceptual tricks. It also had to allow for the possibility that consciousness can arise regardless of whether computations are performed by biological neurons or silicon chips…

Google’s PaLM-E, which receives inputs from various robotic sensors, met the criterion “agency and embodiment.” And, “If you squint there’s something like a workspace,” Elmoznino adds…

The problem for all such projects, Razi says, is that current theories are based on our understanding of human consciousness. Yet consciousness may take other forms, even in our fellow mammals. “We really have no idea what it’s like to be a bat,” he says. “It’s a limitation we cannot get rid of.”

Read an analysis by Science.org: https://www.science.org/content/article/if-ai-becomes-conscious-how-will-we-know

Read the paper: https://arxiv.org/abs/2308.08708

DeepMind SynthID: Watermarking for Imagen (29/Aug/2023)

SynthID, a tool for watermarking and identifying AI-generated images. This technology embeds a digital watermark directly into the pixels of an image, making it imperceptible to the human eye, but detectable for identification.

Read more: https://www.deepmind.com/blog/identifying-ai-generated-images-with-synthid

AI + housing + communities (Aug/2023)

I don’t reckon you’d find this kind of thought experiment in any other AI updates, outside of these editions of The Memo…

Consider how broadly and deeply AI is already affecting our daily lives, from healthcare to writing to leisure. One area that has been of interest to me lately is housing and community. AI will help here too, and is already being proven in some new cities.

If you enjoy reading, here are a couple of pieces to ponder…

Let’s start with a new city being readied in Solano County, California. The land that has been purchased is 52,000 acres (210 km²)—an empire that is nearly double the size of the city of San Francisco.

The practical need for more space has at times morphed into lofty visions of building entire cities from scratch… Take an arid patch of brown hills cut by a two-lane highway between suburbs and rural land, and convert it into a community with tens of thousands of residents, clean energy, public transportation and dense urban life.

…The company’s investors, whose identities have not been previously reported, comprise a who’s who of Silicon Valley, according to three people who were not authorized to speak publicly about the plans. They include Moritz; Reid Hoffman, the LinkedIn co-founder…; Marc Andreessen and Chris Dixon, investors at the Andreessen Horowitz venture capital firm; Patrick and John Collison, the sibling co-founders of payments company Stripe; Laurene Powell Jobs; and Nat Friedman and Daniel Gross, entrepreneurs-turned-investors. (26/Aug/2023, Marin Independent Journal)

This would be a ‘planned city,’ probably using AI. Did you know that cities like Adelaide (Aus), Joondalup (Aus, near Perth), Washington DC (USA), Penang (Malaysia), and many more (wiki) were all deliberately planned rather than allowed to spread naturally?

A planned community, planned city, planned town, or planned settlement is any community that was carefully planned from its inception and is typically constructed on previously undeveloped land. This contrasts with settlements that evolve in a more organic fashion. (wiki)

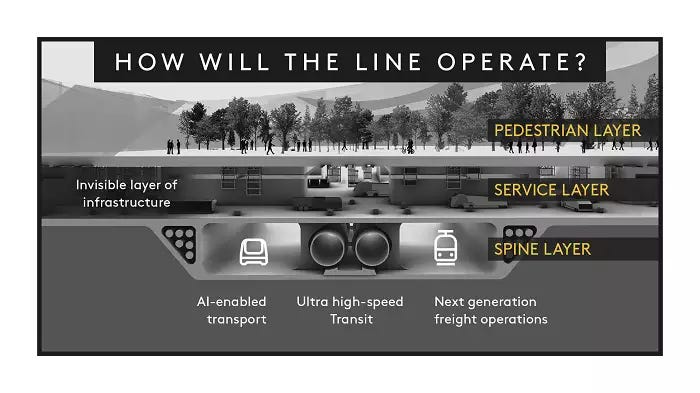

Further afield, Saudi Arabia is famously working on the half-trillion dollar project The Line (wiki), which has been called ‘The world’s first cognitive city’ (Aug/2023, Wired), with the first ‘module’ ready very soon.

‘I can't think of anybody that wouldn't want to be part of this project, it's going to be, without a question, the single most extraordinary piece of work that begins in the first quarter of the 21st century.’

‘I drive a Tesla, and I see that now that's transitional. That's the last car before there's no cars.’ (18/Jul/2023, Dezeen)

At 9 million residents, this thing will have a larger population than most modern cities:

London: 8.9M residents

NYC: 8.4M residents

Sydney: 5.3M residents

I expect that this project—and probably all ‘cities’ and ‘housing’ in the future—will be created by or co-created with AI, and will look vastly different to the cities and housing of the 20th century:

The creators of The Line said that their whole community will become “cognitive” and will be based on AI that “it will continue to explore ways to predict, to make life easier for residents”. (link)

Policy

Spain Just Created the First European AI Supervision Agency (24/Aug/2023)

Spain has created the Spanish Agency for the Supervision of Artificial Intelligence (AESIA). The agency, announced in a royal decree on Tuesday, will be the first AI regulatory body of its kind in the European Union.

The body, which will work to develop an “inclusive, sustainable and citizen-centered” AI, is in line with the country’s National Strategy on Artificial Intelligence.

Read more: https://decrypt.co/153482/spain-just-created-the-first-european-ai-supervision-agency

Read the press release in Spanish.

Toys to Play With

Ollama (Aug/2023)

Get up and running with large language models, locally. Run Llama 2 and other models on macOS. Customize and create your own.

Try it: https://ollama.ai/

Put it in your menu bar: https://github.com/JerrySievert/Dumbar
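Once Ollama is running, you can also talk to it from your own scripts. Here is a minimal sketch, assuming Ollama is serving its local REST API on the default port 11434 and that you have already pulled the `llama2` model; the helper names `build_request` and `generate` are mine, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama's local server is listening on its default address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama2"):
    """Build a POST request asking a local model to complete a prompt."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama2"):
    """Send the prompt and return the generated text (needs Ollama running)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Example (only works with a local Ollama server up and the model pulled):
# print(generate("Explain LLMs in one sentence."))
```

Everything stays on your machine, which is the whole appeal of running models locally.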

GPT-4 creates prompts for DALL-E about space (Aug/2023)

A new image is generated every 30 minutes. What a great project!

Take a look: https://cosmictrip.space/

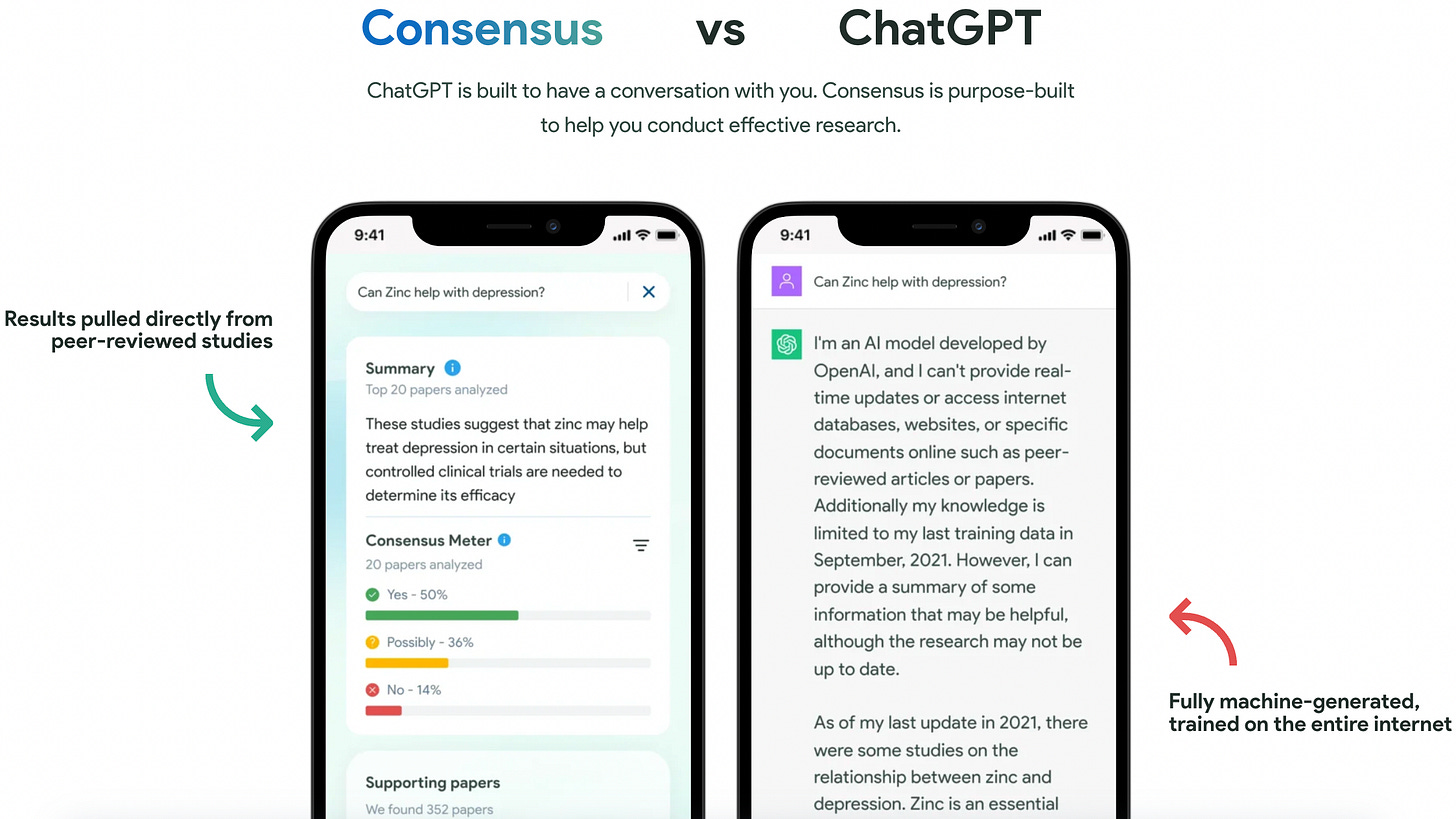

Consensus: Evidence-Based Answers, Faster (Aug/2023)

Consensus is a search engine that uses AI to find insights in research papers.

Tech stack includes OpenAI (GPT) and AI21 (Jurassic-2).

Try it (free search, no login): https://consensus.app/

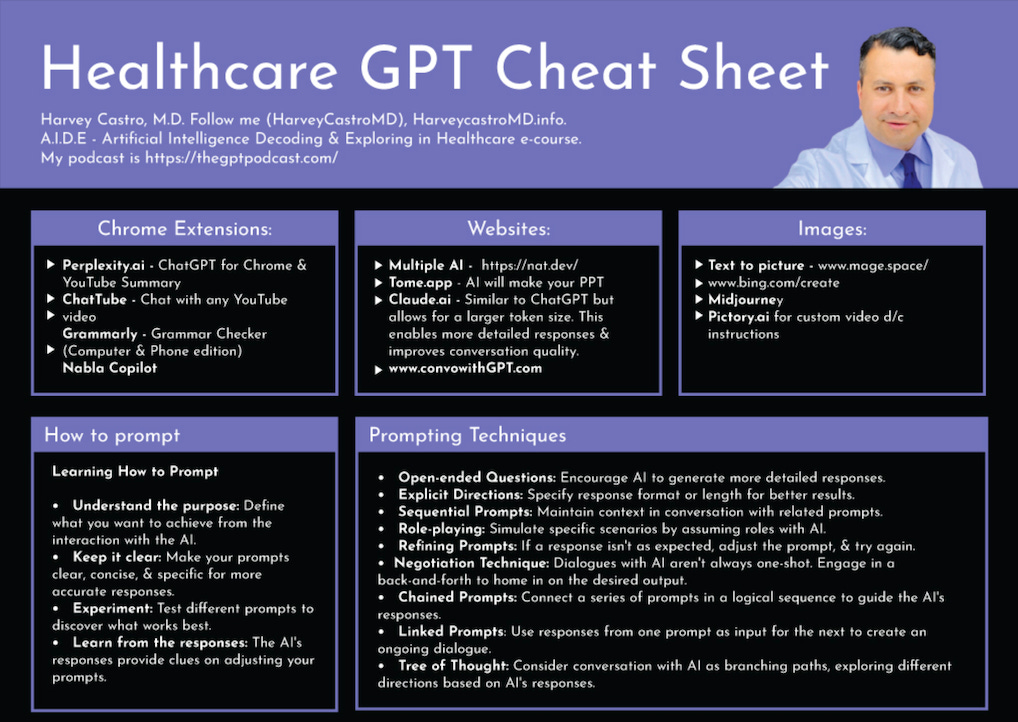

‘Healthcare GPT Cheat Sheet’ by Harvey Castro MD (Aug/2023)

Thanks to Harvey!

Download Healthcare GPT Cheat Sheet (PDF).

Download Healthcare GPT Cheat Sheet - Advanced (PDF).

Flashback

Two flashbacks this week… The first is by Wired, from Sep/2021, and it was titled ‘The Exponential Age Will Transform Economics Forever’.

The most basic cause of the exponential gap is simple: we are bad at maths.

…Human cognitive machinery does not naturally process such rapid change. The calculations bewilder us. Take the case of an atypical London rainstorm. Wembley Stadium is England’s national soccer venue… Imagine sitting at the highest row of level three, the furthest above the pitch you can be – some 40m or so above the ground.

Rain starts to fall, but you are sheltered by the partial roof above you. Yet this is no ordinary rain. This is exponential rain. The raindrops are going to gradually increase in frequency, doubling with each passing minute. One drop, then a minute later two drops, then a minute later four drops. By the fourth minute, eight drops. If it takes 30 minutes to get out of your seats and out of the stadium, how soon should you get moving to avoid being drenched?

To be safe, you should start moving by no later than minute 17 – to give yourself 30 minutes to be clear of the stadium. By the 47th minute, the exponential rain will be falling at a rate of 141 trillion drops per minute. Assuming a raindrop is about four cubic millimetres, by the 47th minute the deluge would be 600 million litres of water. Of course, the rain in the 48th minute will be twice as large, so you are likely to get soaked in the car park. And if you make it to the car, the deluge in the fiftieth minute will comprise five billion litres of water. It would weigh five million tonnes. Frankly, if exponential rain is forecast, you're best off staying at home.

Read more: https://www.wired.co.uk/article/exponential-age-azeem-azhar
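The arithmetic in that excerpt is easy to check yourself. Here is a minimal sketch, assuming 2**n drops fall in minute n (which reproduces the quoted 141 trillion at minute 47) and four cubic millimetres per drop as stated in the excerpt; the function names are just illustrative.

```python
DROP_VOLUME_MM3 = 4        # "about four cubic millimetres" per raindrop
MM3_PER_LITRE = 1_000_000  # 1 litre = 1,000,000 cubic millimetres


def drops_in_minute(minute):
    """Drops falling in a given minute, assuming 2**minute drops in minute n."""
    return 2 ** minute


def litres_in_minute(minute):
    """Volume of rain falling in a given minute, in litres."""
    return drops_in_minute(minute) * DROP_VOLUME_MM3 / MM3_PER_LITRE


for minute in (17, 47, 50):
    print(f"minute {minute}: {drops_in_minute(minute):,} drops, "
          f"{litres_in_minute(minute):,.0f} litres")
```

On these assumptions, minute 47 comes out at about 563 million litres and minute 50 at about 4.5 billion litres, which the article rounds up to 600 million and five billion. At minute 17, when you should already be leaving, the rain is still around half a litre per minute.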

AI in plain English using Jell-O crystals! (May/2022)

This video is doing the rounds again. We used jelly (Jell-O) crystals in different colors to symbolize different datasets, and then put the whole thing in a black box. It’s not a perfect analogy, but does show LLMs in a better way than ‘it’s a tape recorder’!

Watch my video (link):

Next

The next roundtable will be:

Life Architect - The Memo - Roundtable #3 with Harvey Castro

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 23/Sep/2023 at 5PM Los Angeles

Saturday 23/Sep/2023 at 8PM New York

Sunday 24/Sep/2023 at 8AM Perth (primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!

Here’s a look at a rehearsal version of my latest keynote, for your interest. These keynotes are all closed-door private events for enterprise or government, and the ticket prices are outrageous, usually four or five figures.

Victorian Government (Aug/2023, link)

All my very best,

Alan

LifeArchitect.ai