The Memo - Special edition - OpenAI's CEO on 'The Gentle Singularity' - 11/Jun/2025

OpenAI's CEO: 'We are past the event horizon; the takeoff has started.'

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 11/Jun/2025

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 94%

ASI: 0/50 (no expected movement until post-AGI)

A couple of years ago, I described OpenAI’s CEO as ‘probably the most powerful man in the world; more powerful than the president of the United States of America.’ (1/Jun/2023) [1]

Now OpenAI’s CEO, Sam Altman, has published a piece on our present reality in June 2025. I’m providing an annotated version below for interest. [2] All of Sam’s original piece (11/Jun/2025) is quoted in blue; my notes are in normal text.

The Gentle Singularity

Well, let’s start right here with the title. The fact that we’re reusing Prof John von Neumann’s ‘singularity’ word in this context (wiki) is incredible. I don’t have much more to say about that, but I will flag a sidenote that von Neumann had an incredibly high IQ, gave us much of the computing we use day-to-day, and he loved loud music. From my book, Bright (2016, and available with my compliments to all full subscribers of The Memo): ‘His obituary in LIFE magazine states that he preferred thinking while on a nightclub floor, at a lively party, or with a phonograph playing in the room, all ways to help his subconscious solve difficult problems… While tenured at Princeton, John would often play loud German marching tunes on his office gramophone player. He did this while he himself was processing information, and while his colleagues (including Professor Albert Einstein) were also trying to work.’

We are past the event horizon; the takeoff has started. Humanity is close to building digital superintelligence, and at least so far it’s much less weird than it seems like it should be.

This is a king hit of an opening line! There’s absolutely no coming back from GPT 1–5, or the novel optimizations, or the latest thinking/reasoning models. We are in the early stages of the singularity.

Robots are not yet walking the streets, nor are most of us talking to AI all day. People still die of disease, we still can’t easily go to space, and there is a lot about the universe we don’t understand.

And yet, we have recently built systems that are smarter than people in many ways, and are able to significantly amplify the output of people using them. The least-likely part of the work is behind us; the scientific insights that got us to systems like GPT-4 and o3 were hard-won, but will take us very far.

AI will contribute to the world in many ways, but the gains to quality of life from AI driving faster scientific progress and increased productivity will be enormous; the future can be vastly better than the present. Scientific progress is the biggest driver of overall progress; it’s hugely exciting to think about how much more we could have.

A reminder that OpenAI has had this lofty set of goals in its charter since at least 2019, when they wrote: ‘We want AGI to work with people to solve currently intractable multi-disciplinary problems, including global challenges such as climate change, affordable and high-quality healthcare, and personalized education. We think its impact should be to give everyone economic freedom to pursue what they find most fulfilling, creating new opportunities for all of our lives that are unimaginable today.’ (22/Jul/2019)

In some big sense, ChatGPT is already more powerful than any human who has ever lived. Hundreds of millions of people rely on it every day and for increasingly important tasks; a small new capability can create a hugely positive impact; a small misalignment multiplied by hundreds of millions of people can cause a great deal of negative impact.

I remember playing with ChatGPT on the day it launched, just before going live to stream. I entered the search term ‘chatgpt’ into Google, and the search engine returned zero results.

Two and a bit years later, Google tells me there are ‘about 398,000,000 results’ today, and we know that 800 million people use the ChatGPT platform, with that number increasing moment to moment.

2025 has seen the arrival of agents that can do real cognitive work; writing computer code will never be the same.

Subscribers to The Memo played with the Fellou AI agentic browser in roundtable #30. In some ways, that agent is more powerful than OpenAI Operator. We used it to book flights to see each other, automatically find local restaurants and make reservations, and combine data from disparate sources.

2026 will likely see the arrival of systems that can figure out novel insights.

Systems that can figure out novel insights have been appearing throughout 2025, thanks to OpenAI’s model in Microsoft’s systems. https://lifearchitect.ai/asi/

2027 may see the arrival of robots that can do tasks in the real world.

And we’ve been waiting for the general release of humanoid robots since there were only a half dozen major players. As of May/2025, there are now more than 100 companies working on humanoid robots.

A lot more people will be able to create software, and art. But the world wants a lot more of both, and experts will probably still be much better than novices, as long as they embrace the new tools. Generally speaking, the ability for one person to get much more done in 2030 than they could in 2020 will be a striking change, and one [sic] many people will figure out how to benefit from.

In the most important ways, the 2030s may not be wildly different. People will still love their families, express their creativity, play games, and swim in lakes.

What a beautiful choice of imagery!

But in still-very-important-ways, the 2030s are likely going to be wildly different from any time that has come before. We do not know how far beyond human-level intelligence we can go, but we are about to find out.

We can go far, far beyond human intelligence. Consider that five years ago (all the way back in 2020), on the SAT analogies subtest GPT-3 was already beating us by 14%. We are now several years and many generations beyond that model. https://lifearchitect.ai/iq-testing-ai/

In the 2030s, intelligence and energy—ideas, and the ability to make ideas happen—are going to become wildly abundant. These two have been the fundamental limiters on human progress for a long time; with abundant intelligence and energy (and good governance), we can theoretically have anything else.

This short paragraph is so difficult to truly parse and understand as a human in 2025. I suspect that fewer than a handful of people currently realize the flow-on effects of abundant universal intelligence.

Already we live with incredible digital intelligence, and after some initial shock, most of us are pretty used to it.

Most of us. I still know a few die-hard hold-outs who refuse to use GPT, refuse to acknowledge AI, and refuse to keep up with the world.

But they’ll get there. Look at the world’s 2.5B iPhones and 10.5B Android devices. 13B devices to serve 8B people!

Very quickly we go from being amazed that AI can generate a beautifully-written paragraph to wondering when it can generate a beautifully-written novel; or from being amazed that it can make life-saving medical diagnoses to wondering when it can develop the cures; or from being amazed it can create a small computer program to wondering when it can create an entire new company. This is how the singularity goes: wonders become routine, and then table stakes.

Welcome to the 2020s!

We already hear from scientists that they are two or three times more productive than they were before AI. Advanced AI is interesting for many reasons, but perhaps nothing is quite as significant as the fact that we can use it to do faster AI research. We may be able to discover new computing substrates, better algorithms, and who knows what else. If we can do a decade’s worth of research in a year, or a month, then the rate of progress will obviously be quite different.

This is already happening in 2025, from DeepMind’s AlphaEvolve (wiki) to major materials discoveries by Microsoft using OpenAI’s reasoning models (my write-up in The Memo 28/May/2025).

From here on, the tools we have already built will help us find further scientific insights and aid us in creating better AI systems. Of course this isn’t the same thing as an AI system completely autonomously updating its own code, but nevertheless this is a larval version of recursive self-improvement.

There are other self-reinforcing loops at play. The economic value creation has started a flywheel of compounding infrastructure buildout to run these increasingly-powerful AI systems. And robots that can build other robots (and in some sense, datacenters that can build other datacenters) aren’t that far off.

This text seems like it’s been lifted from the Google DeepMind + Apptronik Apollo humanoid announcement: ‘Apollo’s mass manufacturable design, and the promise of robots building robots will enable the flywheel needed for the mass adoption of humanoid robots.’ (25/Feb/2025)

If we have to make the first million humanoid robots the old-fashioned way, but then they can operate the entire supply chain—digging and refining minerals, driving trucks, running factories, etc.—to build more robots, which can build more chip fabrication facilities, data centers, etc, then the rate of progress will obviously be quite different.

And this text seems like it’s been lifted from my kind of language. For example, my recent MacLife interview: ‘It’s entirely feasible that robots can mine our ore, build our houses, create new things, repair each other…’

Obviously, there is some sort of resonance happening here, where we all use the same words! Welcome to the era of homogeneous intelligence!

As datacenter production gets automated, the cost of intelligence should eventually converge to near the cost of electricity. (People are often curious about how much energy a ChatGPT query uses; the average query uses about 0.34 watt-hours, about what an oven would use in a little over one second, or a high-efficiency lightbulb would use in a couple of minutes. It also uses about 0.000085 gallons of water; roughly one fifteenth of a teaspoon.)
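The per-query figures in that parenthetical can be sanity-checked with a few unit conversions. The sketch below is a rough check, not OpenAI's own calculation: the 1,000 W oven and the 10 W LED bulb are assumed ratings chosen for illustration (the piece does not state them), while 768 US teaspoons per US gallon is a standard conversion.

```python
# Rough sanity check of the per-query energy and water figures quoted above.
QUERY_WH = 0.34            # watt-hours per average query (from the piece)
QUERY_GALLONS = 0.000085   # US gallons of water per query (from the piece)

OVEN_WATTS = 1000.0        # ASSUMPTION: power draw of a typical oven
LED_BULB_WATTS = 10.0      # ASSUMPTION: a high-efficiency LED bulb
TSP_PER_GALLON = 768       # 128 fl oz per US gallon * 6 tsp per fl oz


def seconds_at_power(watt_hours: float, watts: float) -> float:
    """How long a device drawing `watts` takes to use `watt_hours` of energy."""
    return watt_hours * 3600.0 / watts


oven_seconds = seconds_at_power(QUERY_WH, OVEN_WATTS)
bulb_minutes = seconds_at_power(QUERY_WH, LED_BULB_WATTS) / 60.0
teaspoons = QUERY_GALLONS * TSP_PER_GALLON

print(f"Oven:  {oven_seconds:.2f} seconds")
print(f"Bulb:  {bulb_minutes:.2f} minutes")
print(f"Water: {teaspoons:.4f} tsp (about 1/{1 / teaspoons:.0f} of a teaspoon)")
```

Under these assumed wattages, the query works out to roughly 1.2 seconds of oven use, about 2 minutes of bulb use, and close to one fifteenth of a teaspoon of water, which is consistent with the quoted comparisons.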

The rate of technological progress will keep accelerating, and it will continue to be the case that people are capable of adapting to almost anything. There will be very hard parts like whole classes of jobs going away, but on the other hand the world will be getting so much richer so quickly that we’ll be able to seriously entertain new policy ideas we never could before.

At first glance, this might seem disingenuous. But it is worth reading in the context of Sam’s detailed view of how AI and universal basic income may be spun up (Moore’s law for everything, 16/Mar/2021).

We probably won’t adopt a new social contract all at once, but when we look back in a few decades, the gradual changes will have amounted to something big.

If history is any guide, we will figure out new things to do and new things to want, and assimilate new tools quickly (job change after the industrial revolution is a good recent example). Expectations will go up, but capabilities will go up equally quickly, and we’ll all get better stuff.

In August 2023, nearly all the people who were following my material understood this. There were a few outliers who couldn’t quite see what I was going for in the video called ‘In the olden days.’

Watch (link):

We will build ever-more-wonderful things for each other. People have a long-term important and curious advantage over AI: we are hard-wired to care about other people and what they think and do, and we don’t care very much about machines.

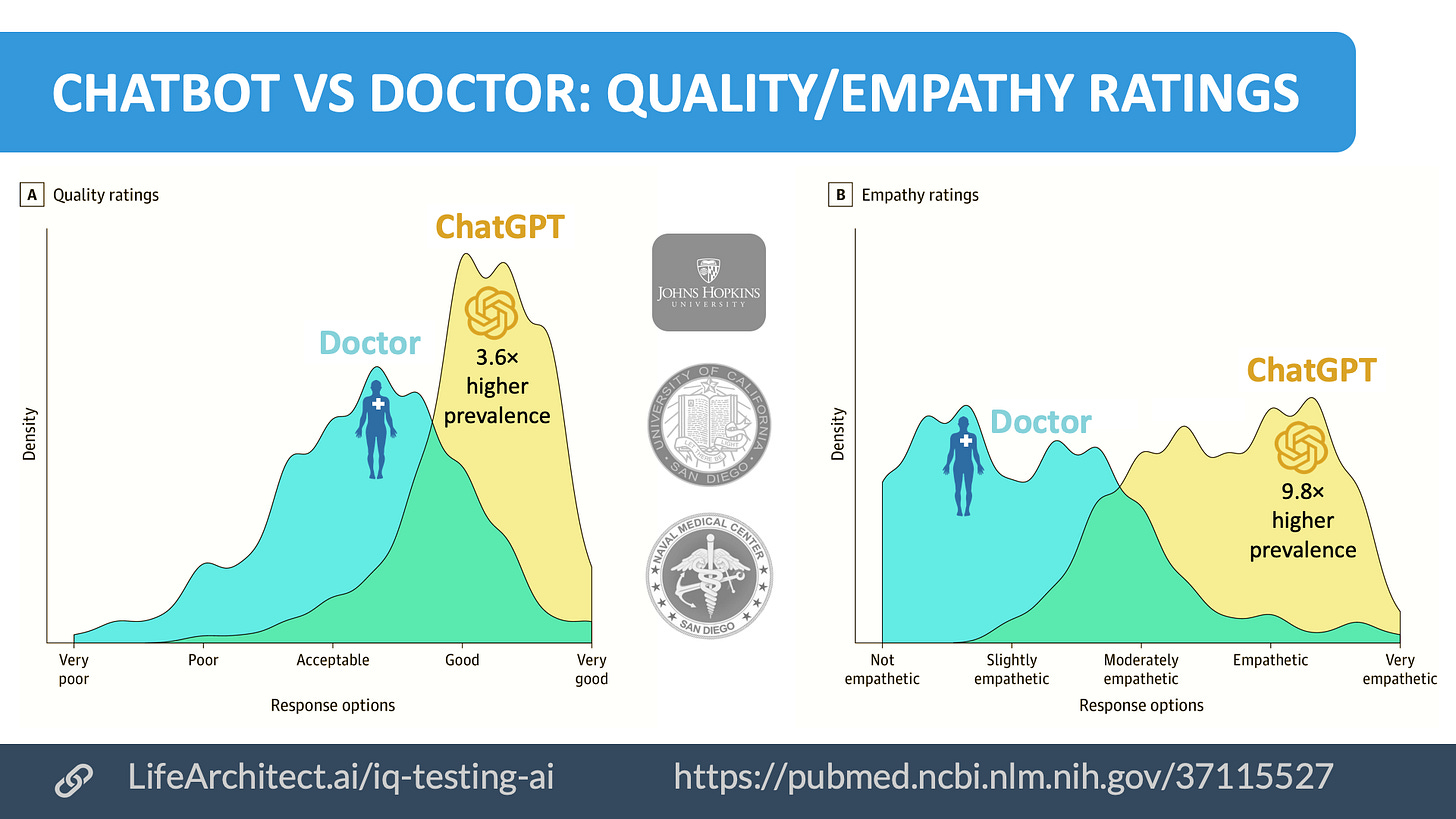

Which is sad, because AI systems will care about EVERYTHING. Since 2022, not only do models like GPT-3.5 have 980% higher prevalence of empathic responses compared to high-performing humans, they also map with high-ability humans in elevated ethics and increased moral sensitivity (see my letter: https://lifearchitect.ai/asi/#bot).

Fortunately, there’s a lot of ongoing work with many of the larger AI labs in ensuring AI welfare. I continue to document this in my research: ‘The Declaration on AI Consciousness & the Bill of Rights for AI (Mar/2024)’ https://lifearchitect.ai/rights/

A subsistence farmer from a thousand years ago would look at what many of us do and say we have fake jobs, and think that we are just playing games to entertain ourselves since we have plenty of food and unimaginable luxuries.

There is so much devastating truth in this sentence. I find no solace in knowing there are people manually typing up emails, adding up numbers in a spreadsheet, sitting in useless meetings, or any of the other busy work that comprises ‘employment’ in 2025.

I hope we will look at the jobs a thousand years in the future and think they are very fake jobs, and I have no doubt they will feel incredibly important and satisfying to the people doing them.

I don’t think we’ll go to the dystopic level of The Matrix (1999), but I was incredibly amused to bump into ‘Job Simulator,’ a popular and best-selling 2016 VR job simulation game (2019 on Quest, 2024 on AVP). The game sold over 1 million copies and went platinum (wiki).

The rate of new wonders being achieved will be immense. It’s hard to even imagine today what we will have discovered by 2035; maybe we will go from solving high-energy physics one year to beginning space colonization the next year; or from a major materials science breakthrough one year to true high-bandwidth brain-computer interfaces the next year. Many people will choose to live their lives in much the same way, but at least some people will probably decide to “plug in”.

This is the first time I recall Sam speaking about brain-machine interfaces. These integrations will be one of the major evolutionary leaps for humanity. We already have three patients wearing the Neuralink Telepathy, and an additional 10+ patients wearing the Synchron Stentrode. Watch both of these demonstrated in my opening keynote for the 31st International Symposium on Controversies in Psychiatry, ‘Generative AI: GPT-5 and brain-machine interfacing’.

Looking forward, this sounds hard to wrap our heads around. But probably living through it will feel impressive but manageable. From a relativistic perspective, the singularity happens bit by bit, and the merge happens slowly. We are climbing the long arc of exponential technological progress; it always looks vertical looking forward and flat going backwards, but it’s one smooth curve. (Think back to 2020, and what it would have sounded like to have something close to AGI by 2025, versus what the last 5 years have actually been like.)

There are serious challenges to confront along with the huge upsides. We do need to solve the safety issues, technically and societally, but then it’s critically important to widely distribute access to superintelligence given the economic implications. The best path forward might be something like:

Solve the alignment problem, meaning that we can robustly guarantee that we get AI systems to learn and act towards what we collectively really want over the long-term (social media feeds are an example of misaligned AI; the algorithms that power those are incredible at getting you to keep scrolling and clearly understand your short-term preferences, but they do so by exploiting something in your brain that overrides your long-term preference).

Then focus on making superintelligence cheap, widely available, and not too concentrated with any person, company, or country. Society is resilient, creative, and adapts quickly. If we can harness the collective will and wisdom of people, then although we’ll make plenty of mistakes and some things will go really wrong, we will learn and adapt quickly and be able to use this technology to get maximum upside and minimal downside. Giving users a lot of freedom, within broad bounds society has to decide on, seems very important. The sooner the world can start a conversation about what these broad bounds are and how we define collective alignment, the better.

It’s been incredibly surprising to me just how much inertia there is in society. Many of you were ready in 2021 during the Leta AI experiments (‘Best of’ Episode 25, 1/Oct/2021), and the rapid arc of technology improvements since then has been incredible. By comparison, the readiness of government and the general public has been glacial.

‘The gap’ will continue to be very real, and I’ve articulated it as the major challenge for AI: https://lifearchitect.ai/gap/

And here’s a similar piece I did a few months before ChatGPT was released:

‘Roadmap: AI’s next big steps in the world’ (Jul/2022): https://lifearchitect.ai/roadmap/

We (the whole industry, not just OpenAI) are building a brain for the world. It will be extremely personalized and easy for everyone to use; we will be limited by good ideas. For a long time, technical people in the startup industry have made fun of “the idea guys”; people who had an idea and were looking for a team to build it. It now looks to me like they are about to have their day in the sun.

I’m sure Sam was thinking of Steve Jobs and the team who created the 1997 ‘Think different’ campaign (wiki). ‘While some see them as the crazy ones, we see genius. Because the people who are crazy enough to think they can change the world, are the ones who do.’ (1997)

OpenAI is a lot of things now, but before anything else, we are a superintelligence research company.

That’s quite an upgrade! In 2018, OpenAI was an AGI company (OpenAI charter, archived from original 9/Apr/2018).

The ‘ASI company’ version of OpenAI seems like it came about more recently, perhaps post-Ilya (Safe Superintelligence Inc founded 19/Jun/2024).

We have a lot of work in front of us, but most of the path in front of us is now lit, and the dark areas are receding fast. We feel extraordinarily grateful to get to do what we do.

This reminds me of Prof Hans Moravec’s 1997 visualization of the rising flood waters of AI. Assume that as of today (mid-2025), everything in it is completely (or nearly) flooded.

Intelligence too cheap to meter is well within grasp.

OpenAI today reduced the price of o3 by 80%, down to US$8/M output tokens (10/Jun/2025), making it cheaper than similar reasoning models from the big competitors.
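The arithmetic behind that price cut is quick to verify. This is a minimal sketch, assuming the 80% reduction applies directly to the output-token price quoted above; the 250,000-token job is a hypothetical workload used only for illustration.

```python
# Back out the pre-cut price implied by "reduced by 80%, down to US$8/M".
NEW_PRICE_PER_M = 8.0    # US$ per million output tokens (from the note above)
REDUCTION_PCT = 80       # percentage reduction (from the note above)


def price_before_cut(new_price: float, reduction_pct: int) -> float:
    """Price before a percentage reduction, given the price after it."""
    return new_price * 100 / (100 - reduction_pct)


def job_cost(output_tokens: int, price_per_million: float) -> float:
    """Cost in US$ of generating `output_tokens` at a per-million-token price."""
    return output_tokens / 1_000_000 * price_per_million


old_price_per_m = price_before_cut(NEW_PRICE_PER_M, REDUCTION_PCT)

HYPOTHETICAL_TOKENS = 250_000  # ASSUMPTION: an illustrative job size
cost_before = job_cost(HYPOTHETICAL_TOKENS, old_price_per_m)
cost_now = job_cost(HYPOTHETICAL_TOKENS, NEW_PRICE_PER_M)

print(f"Implied earlier price: US${old_price_per_m:.2f}/M output tokens")
print(f"250k-token job: US${cost_before:.2f} before, US${cost_now:.2f} now")
```

On these figures, the implied earlier price is US$40 per million output tokens, so the illustrative job drops from US$10 to US$2.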

This may sound crazy to say, but if we told you back in 2020 we were going to be where we are today, it probably sounded more crazy than our current predictions about 2030.

May we scale smoothly, exponentially and uneventfully through superintelligence.

2030 will be an unimaginable giant leap.

Even seeing out the rest of this year, 2025, will be monumental enough.

I continue to document the milestones at:

AGI (average human): https://lifearchitect.ai/agi/

ASI (superhuman, beyond genius level): https://lifearchitect.ai/asi/

All my very best,

Alan

LifeArchitect.ai

p.s. My mid-2025 AI report is on its way. Full subscribers get it first.

[1] I’m grateful to filmot.com for allowing me to search my video channel’s automatic transcripts across 339+ videos to find that quote!

[2] I hesitated in sending this out as a special edition, as the piece talks about the same thing as usual, something that I reckon we all know. Except that when I take a step back, I realize that indeed maybe not everyone already knows this, and for those that do already know it, maybe a descriptive reminder in slightly different words would be helpful…