The Memo - 21/Jun/2024

Claude 3.5 Sonnet, Nemotron-4-340B, AuroraGPT updates, and much more!

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 21/Jun/2024

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 74 ➜ 75%

Microsoft VP Prof Sébastien Bubeck on Phi-3 (23/Apr/2024):

If you have a very, very high stakes application, let’s say in a healthcare scenario, then I definitely think that you should go with the frontier model—the best, most capable, most reliable. For other uses, other factors matter more, including speed and cost.

That’s where you want to go with Phi-3.

Contents

The BIG Stuff (Claude 3.5 Sonnet, Nemotron-4-340B, Claude 3 as judge…)

The Interesting Stuff (mid-year report, Ilya, Waymo 3.5x, Runway Gen-3, V2A…)

Policy ($100M model laws, OpenAI + NSA, Trump uses ChatGPT for speech…)

Toys to Play With (AuroraGPT, social AI + human, Llama 3 training, Teleport…)

Flashback (IQ testing AI…)

Next (Roundtable…)

The BIG Stuff

Anthropic Claude 3.5 Sonnet (21/Jun/2024)

Anthropic released Claude 3.5 Sonnet a few hours ago. Although smaller than the previous flagship, Claude 3 Opus, it is the new state-of-the-art model. MMLU=90.4 (5-shot CoT). GPQA=67.2 (maj32 + 5-shot). Scores 5/5 on ALPrompt 2024H1.

This model bumped the AGI countdown from 74% ➜ 75%: https://lifearchitect.ai/agi/

For the first time, a large language model has breached the 65% mark on GPQA, a benchmark designed to be at the level of our smartest PhDs. ‘Regular’ PhDs score 34%, while in-domain specialized PhDs reach 65%. Claude 3.5 Sonnet scored 67.2% (maj32 + 5-shot).
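The ‘maj32’ in that GPQA score is commonly read as majority voting: sample 32 answers to the same question and submit the most frequent one. Below is a minimal sketch of that voting step, assuming the 32 sampled answers have already been collected. The function name and sample data are hypothetical illustrations, not Anthropic's evaluation code.

```python
from collections import Counter
from typing import Sequence


def majority_vote(answers: Sequence[str]) -> str:
    """Return the most common final answer among sampled completions.

    Ties go to whichever tied answer appeared first in `answers`,
    because Counter.most_common orders equal counts by first occurrence.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    winner, _ = Counter(answers).most_common(1)[0]
    return winner


# Hypothetical example: 32 sampled answers to one multiple-choice question.
samples = ["B"] * 14 + ["C"] * 10 + ["A"] * 5 + ["D"] * 3
assert len(samples) == 32
print(majority_vote(samples))  # prints "B"
```

Voting helps because independent samples tend to agree on a correct answer more often than on any single wrong one, which is why maj32 scores usually sit above single-sample (0-shot) scores.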

Anthropic gave one hour's notice before the release with this fun substitution cipher, decoded here.

Read the announce: https://www.anthropic.com/news/claude-3-5-sonnet

Try it here (free, login): https://poe.com/Claude-3.5-Sonnet

See an example app output: https://x.com/skirano/status/1803809495811858807

See it on the Models Table: https://lifearchitect.ai/models-table/

NVIDIA Nemotron-4-340B (15/Jun/2024)

A successor to Megatron (my link), Nemotron-4-340B was trained on 9T tokens (a 27:1 tokens-to-parameters ratio). The model was trained using 6,144 H100s between December 2023 and May 2024. MMLU=81.1. The dataset is made up of web documents, news articles, scientific papers, books, and more (Feb/2024):

This is the largest open-source model available to date.
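The 27:1 figure for Nemotron-4-340B is simply training tokens (9T) divided by parameters (340B). The quick check below shows the raw ratio is about 26.5, so the 27:1 figure appears to be rounded up. A sketch only, using the two numbers quoted above.

```python
import math

params = 340e9  # Nemotron-4-340B parameter count
tokens = 9e12   # training tokens quoted above

ratio = tokens / params
print(f"{ratio:.2f} tokens per parameter")       # prints "26.47 tokens per parameter"
print(f"about {math.ceil(ratio)}:1 rounded up")  # prints "about 27:1 rounded up"
```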

See it on the Models Table: https://lifearchitect.ai/models-table/

Dell to provide H100 racks for xAI supercomputer (20/Jun/2024)

Dell and Supermicro (SMC) are set to provide the servers for Elon Musk’s xAI supercomputer. This project, described as ‘the world’s largest and most powerful supercomputer’, will be located in Memphis, Tennessee, and represents the largest multi-billion-dollar investment in the city's history. The supercomputer is expected to be operational by the fall [US fall is September to November] of 2025 and will power the next version of xAI’s Grok chatbot.

I’m including the images below as I think they are important. These racks will power the next frontier model—approaching superintelligence—and we will interact directly with models that come out of these servers (after a full six months of training!).

Read more via DCD.

Adam Unikowsky: In AI we trust, part II (16/Jun/2024)

Adam Unikowsky has won eight Supreme Court cases as lead counsel, is a former law clerk to Justice Antonin Scalia, and is currently a biglaw partner. Using Claude 3 Opus, he explores the potential of AI in adjudicating Supreme Court cases.

Claude is fully capable of acting as a Supreme Court Justice right now. When used as a law clerk, Claude is easily as insightful and accurate as human clerks, while towering over humans in efficiency…

These outputs did not require any prompt engineering or hand-holding. I simply uploaded the merits briefs into Claude, which takes about 10 seconds, and asked Claude to decide the case. It took less than a minute for Claude to spit out these decisions…

Of the 37 merits cases decided so far this Term, Claude decided 27 in the same way the Supreme Court did. In the other 10 (such as Campos-Chaves), I frequently was more persuaded by Claude’s analysis than the Supreme Court’s…

I know what you’re thinking. “Claude is just picking a merits brief and summarizing it. It can’t actually come up with new ideas.” No. Saying “AI is really good at summarizing briefs” is like saying “iPhones are really good at being calculators.” They are really good at being calculators. But there’s more there…

With no priming whatsoever, Claude is proposing a completely novel legal standard that is clearer and more administrable than anything proposed by the parties or the Court. Claude then offers a trenchant explanation of the arguments for and against this standard. There’s no way a human could come up with this within seconds like Claude did…

Not only is Claude able to make sensible recommendations and draft judicial opinions, but Claude effortlessly does things like generate novel legal standards and spot methodological errors in expert testimony…

Claude works at least 5,000 times faster than humans do, while producing work of similar or better quality…

Also, recall that Claude hasn’t been fine-tuned or trained on any case law. It’s a general-purpose AI. If we taught Claude the entire corpus of American case law, which could be done easily, its legal ability would improve significantly.

Read more via Adam Unikowsky.

I think this piece is so historically significant that I’ve provided a PDF archive for download (35 pages, 21MB):

The Interesting Stuff

Integrated AI: The sky is quickening (mid-2024 AI retrospective, Jun/2024)

In 2024, the capabilities of cutting-edge AI systems have surpassed what even the brightest PhDs—those who’ve achieved a level of academic mastery after two decades in the education system—can fully comprehend or match. This is an incredible milestone with incredible benefits. AI will soon begin independently tackling major challenges facing humanity such as education, health, economics, and scientific mysteries that have stumped our greatest minds.

Read the report: https://lifearchitect.ai/the-sky-is-quickening/

Watch the video (link):

The GPT-4 model family (20/Jun/2024)

It’s challenging to interpret just what OpenAI are doing with their model names. Following on from my complex GPT-3 model family viz released last year, I’ve now created a GPT-4 model family viz.

Take a look: https://lifearchitect.ai/gpt-4/#family

It’s been a huge month for AI releases, with the most powerful frontier model and the largest open-source model being released in the last few days. We’re not even halfway through this edition, covering massive progress across labs like Meta and DeepSeek, new policies affecting you, and toys to play with like a new Facebook clone for humans and AI…

Microsoft AI CEO Mustafa Suleyman audits OpenAI’s code (14/Jun/2024)

Microsoft's AI chief, Mustafa Suleyman, has been examining OpenAI's algorithms, highlighting the intertwined yet competitive relationship between Microsoft and OpenAI. While Microsoft has intellectual property rights to OpenAI’s software due to its substantial investment, the presence of Suleyman has added a layer of complexity. Despite collaboration, there are signs Microsoft might be preparing to develop its own large-scale AI models independently.

Read more via Semafor.

OpenAI selects Oracle Cloud Infrastructure to extend Microsoft Azure AI platform (11/Jun/2024)

Oracle announced that OpenAI has chosen Oracle Cloud Infrastructure (OCI) to supplement its Microsoft Azure AI platform. This collaboration aims to enhance the performance and scalability of OpenAI’s models by leveraging OCI's robust cloud solutions. The move underscores the growing trend of integrating diverse cloud infrastructures to optimize AI workloads.

Read more via Oracle.

Ilya Sutskever Has a New Plan for Safe Superintelligence (19/Jun/2024)

Ilya Sutskever, a co-founder of OpenAI, has announced his new venture called Safe Superintelligence Inc. This research lab aims to create a safe, powerful artificial intelligence system without the immediate intention of selling AI products or services. The initiative seeks to avoid the competitive pressures faced by rivals like OpenAI, Google, and Anthropic, focusing solely on developing safe superintelligence.

Sidenote: I expect the next news on this to arrive perhaps a full year from now. This is going to be a long wait!

Read more via Bloomberg.

Waymo's latest milestone in autonomous driving (19/Jun/2024)

Waymo celebrates a significant milestone in autonomous driving as their self-driving cars complete over 10 million miles of real-world testing. This achievement underscores the advancements in AI and machine learning technologies, pushing the boundaries of what autonomous vehicles can accomplish in terms of safety, reliability, and efficiency.

Read more via X.

Compare with Cruise as shown in The Memo edition 19/May/2023.

Compare with Tesla as shown in The Memo edition 13/Nov/2023.

DeepSeek-Coder-V2 236B MoE (Jun/2024)

DeepSeek-Coder-V2 is a 236B parameter Mixture-of-Experts (MoE) model trained on 10.2T tokens. The MMLU score is 79.2. The paper explores advanced AI techniques in automated code generation. This model, developed by DeepSeek-AI, showcases significant improvements in generating accurate and efficient code snippets, making it a valuable tool for developers. The paper details the architecture, training methodology, and benchmark comparisons with other state-of-the-art models.
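In a Mixture-of-Experts model, a small router picks a few ‘expert’ sub-networks for each token, so only a fraction of the total parameters do work on any given token. Below is a minimal, generic top-k routing sketch in plain Python. The router weights and toy experts are hypothetical, and DeepSeek's published MoE design adds refinements (such as shared experts) that this sketch omits.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    router_weights: Sequence[Vector],  # hypothetical: one router vector per expert
    experts: Sequence[Callable[[Vector], Vector]],
    k: int = 2,
) -> Vector:
    """Generic top-k MoE layer: only k of the experts run for this token."""
    # Router score per expert: dot product of the token with that expert's router vector.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep the k highest-scoring experts.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalise the gate weights over the chosen experts only.
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out


# Toy setup: four "experts" that just scale the input by 1, 2, 3, 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
token = [1.0, 0.0]
print(moe_forward(token, router, experts, k=2))  # only experts 2 and 0 run
```

The point of the design is cost: with k=2 of 4 experts, only half the expert parameters are touched per token, and the same idea lets a very large MoE model run far fewer parameters per token than its headline size.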

Try it (free, login): https://chat.deepseek.com/coder

See it on the Models Table: https://lifearchitect.ai/models-table/

Google DeepMind shifts from research lab to AI product factory (17/Jun/2024)

Hassabis has spent his career primarily focused on research, but his new job will necessarily center more on commercialization… There are even rumblings within the company that the group may eventually ship products directly…

While no one is getting as much computing power as they want, the supply is tighter for teams engaged in pure research, say the former employee and others familiar with the lab…

Hassabis has also made the case that researchers working on foundational science should embrace commercial development as an asset. He says that he agreed to assume his new role partly because AI models are becoming more versatile and that developing commercial products yields techniques that are useful for pushing research forward. Google’s ubiquitous consumer products, he says, provide a unique testing ground for its science experiments. “We get feedback from millions of users,” Hassabis says. “And that can be incredibly useful, obviously, to improve the product, but also to improve your research.”

Read more via Bloomberg.

Runway Gen-3 Alpha examples (Jun/2024)

Gen-3 Alpha is the first of an upcoming series of models trained by Runway on a new infrastructure built for large-scale multimodal training. It is a major improvement in fidelity, consistency, and motion over Gen-2, and a step towards building General World Models.

Announce with examples: https://runwayml.com/blog/introducing-gen-3-alpha/

See another example via Reddit.

DeepMind V2A: Generating audio for video (17/Jun/2024)

DeepMind's new video-to-audio (V2A) technology combines video pixels with natural language text prompts to generate synchronized, realistic soundtracks for silent videos. V2A can create a variety of soundscapes, from cinematic scores to sound effects and dialogue, enhancing creative control for users. This technology uses a diffusion-based approach to refine audio from random noise, guided by visual input and text prompts.
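‘Refining audio from random noise’ is the core loop of a diffusion sampler: start from noise and repeatedly replace it with a slightly less noisy estimate of the clean signal. Below is a minimal sketch of one such sampler, deterministic DDIM-style updates, on a tiny list of numbers standing in for audio. This is a swapped-in, textbook technique, not DeepMind's V2A implementation. The `oracle` denoiser is hypothetical: in V2A the prediction would come from a trained network conditioned on the video pixels and text prompt.

```python
import math
import random
from typing import Callable, List

Signal = List[float]


def ddim_sample(
    denoiser: Callable[[Signal, int], Signal],  # predicts the clean signal x0 from (x_t, t)
    alpha_bar: List[float],  # cumulative schedule; alpha_bar[0] == 1.0 means "clean"
    length: int,
    seed: int = 0,
) -> Signal:
    """Deterministic DDIM-style sampling (eta = 0) starting from Gaussian noise."""
    rng = random.Random(seed)
    T = len(alpha_bar) - 1
    x = [rng.gauss(0.0, 1.0) for _ in range(length)]  # pure random noise
    for t in range(T, 0, -1):
        a_t, a_prev = alpha_bar[t], alpha_bar[t - 1]
        x0_hat = denoiser(x, t)
        # Noise implied by the current sample and the predicted clean signal.
        eps_hat = [(xi - math.sqrt(a_t) * x0i) / math.sqrt(1.0 - a_t)
                   for xi, x0i in zip(x, x0_hat)]
        # Move to the less noisy level t-1.
        x = [math.sqrt(a_prev) * x0i + math.sqrt(1.0 - a_prev) * ei
             for x0i, ei in zip(x0_hat, eps_hat)]
    return x


# Hypothetical stand-in "soundtrack": one cycle of a sine wave, 8 samples long.
target = [math.sin(2 * math.pi * n / 8) for n in range(8)]
oracle = lambda x_t, t: target  # a trained, video/text-conditioned model would go here
audio = ddim_sample(oracle, [1.0, 0.9, 0.6, 0.3, 0.05], len(target))
```

With a perfect denoiser the loop lands exactly on the target; with a real model, each step's prediction is imperfect, which is why samplers take many small steps.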

Read more via Google DeepMind.

I showed some examples in the first few minutes of my most recent livestream (link):

If Ray Kurzweil is right (again), you’ll meet his immortal soul in the cloud (13/Jun/2024)

Ray Kurzweil remains highly optimistic about the future, believing that humans can merge with machines, become hyperintelligent, and live indefinitely. Despite skepticism in the past, many of his predictions, such as computers achieving human-level intelligence by 2029, now seem conservative. Kurzweil's new book, titled ‘The Singularity Is Nearer’, explores these ideas further.

Read more via WIRED.

New benchmark scores viz by Anthropic’s Sam McAllister (Jun/2024)

Source: https://x.com/sammcallister/status/1803791750856634873/photo/1

General Intelligence 2024 (3/Jun/2024)

This essay is making the rounds, predicting AGI in 3-5 years. I think we’re a lot closer than that, with AGI set to hit a lab near you within months (though of course, it will take many more years for AGI to reach you and me).

Folks in the field of AI like to make predictions for AGI. I have thoughts, and I’ve always wanted to write them down. Let’s do that. Since this isn’t something I’ve touched on in the past, I’ll start by doing my best to define what I mean by ‘general intelligence’. A generally intelligent entity is one that achieves a special synthesis of three things: a way of interacting with and observing a complex environment (embodiment), a robust world model covering the environment (intuition or fast thinking), and a mechanism for performing deep introspection on arbitrary topics (reasoning or slow thinking).

Read more via nonint.com.

Apple explains iPhone 15 Pro requirement for Apple Intelligence (19/Jun/2024)

With iOS 18, iPadOS 18, and macOS Sequoia, Apple is introducing a new personalized AI experience called Apple Intelligence, which uses on-device generative large language models to enhance the user experience across iPhone, iPad, and Mac. On iPhone, these features require the iPhone 15 Pro or iPhone 15 Pro Max; on iPad and Mac, only models with an M1 or later chip will support Apple Intelligence.

Apple’s AI/machine learning head John Giannandrea said:

These models, when you run them at run times, it's called inference, and the inference of large language models is incredibly computationally expensive. And so it's a combination of bandwidth in the device, it's the size of the Apple Neural Engine, it's the oomph in the device to actually do these models fast enough to be useful. You could, in theory, run these models on a very old device, but it would be so slow that it would not be useful.
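Giannandrea's point about bandwidth can be made concrete with a common back-of-the-envelope estimate: when generating one token at a time, a model must read roughly all of its weights from memory for every token, so memory bandwidth caps decoding speed. A minimal sketch, assuming batch size 1 and ignoring the KV cache and compute limits. The parameter count, quantization level, and bandwidth figures below are hypothetical illustrations, not Apple's published specifications.

```python
def max_decode_tokens_per_sec(params: float, bits_per_param: float,
                              bandwidth_gb_per_s: float) -> float:
    """Rough upper bound on decoding speed for a memory-bandwidth-bound LLM.

    Assumes every generated token streams all weights from memory once
    (batch size 1) and ignores the KV cache and compute limits.
    """
    model_bytes = params * bits_per_param / 8
    return bandwidth_gb_per_s * 1e9 / model_bytes


# Hypothetical on-device model: 3B parameters quantized to 4 bits per weight.
newer = max_decode_tokens_per_sec(3e9, 4, bandwidth_gb_per_s=100)  # hypothetical newer chip
older = max_decode_tokens_per_sec(3e9, 4, bandwidth_gb_per_s=30)   # hypothetical older chip
print(f"newer: ~{newer:.0f} tokens/s, older: ~{older:.0f} tokens/s")
```

Real-world speeds land below this ceiling, but the ratio between devices follows bandwidth closely, which matches his ‘so slow that it would not be useful’ argument.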

Read more via MacRumors.

AI took their jobs. Now they get paid to make it sound human (12/Jun/2024)

If you're worried about how AI will affect your job, the world of copywriters may offer a glimpse of the future. Writer Benjamin Miller was thriving in early 2023 but saw his team replaced by AI, leaving him to edit AI-generated articles. This shift reflects a broader trend where AI produces work once done by humans, with people now adding a human touch to AI's output.

Read more via BBC.

Policy

California’s new AI bill: Why Big Tech is worried about liability (14/Jun/2024)

California's SB 1047 has sparked significant concern among tech leaders, as it mandates safety testing for companies spending over US$100M to train AI ‘frontier models’. Critics like Meta’s chief AI scientist, Yann LeCun, argue that such regulations could stifle innovation and harm California's tech industry. Proponents, however, emphasize the importance of accountability, especially for AI systems with the potential to cause mass casualty events.

Read more via Vox.

OpenAI adds former NSA chief to its board (13/Jun/2024)

OpenAI has announced that Paul M. Nakasone, a retired U.S. Army general and former director of the National Security Agency, will join its board. Nakasone is expected to contribute significantly to OpenAI's understanding of AI's role in strengthening cybersecurity. He will also join the company's Safety and Security Committee, which is currently evaluating OpenAI's processes and safeguards.

Read more via CNBC.

Edward Snowden was quite concerned:

They've gone full mask-off: do not ever trust OpenAI or its products (ChatGPT etc). There is only one reason for appointing an NSA Director to your board. This is a willful, calculated betrayal of the rights of every person on Earth. You have been warned.

Source: https://x.com/Snowden/status/1801610725229498403

Trump says he used AI to rewrite one of his speeches (14/Jun/2024)

Former President Donald Trump revealed that he used ChatGPT to rewrite one of his speeches. He stated that this move was part of his effort to embrace new technologies and improve communication effectiveness. Trump's use of AI highlights the increasing influence of AI tools in various fields, including politics.

Read more via The Gazette.

Toys to Play With

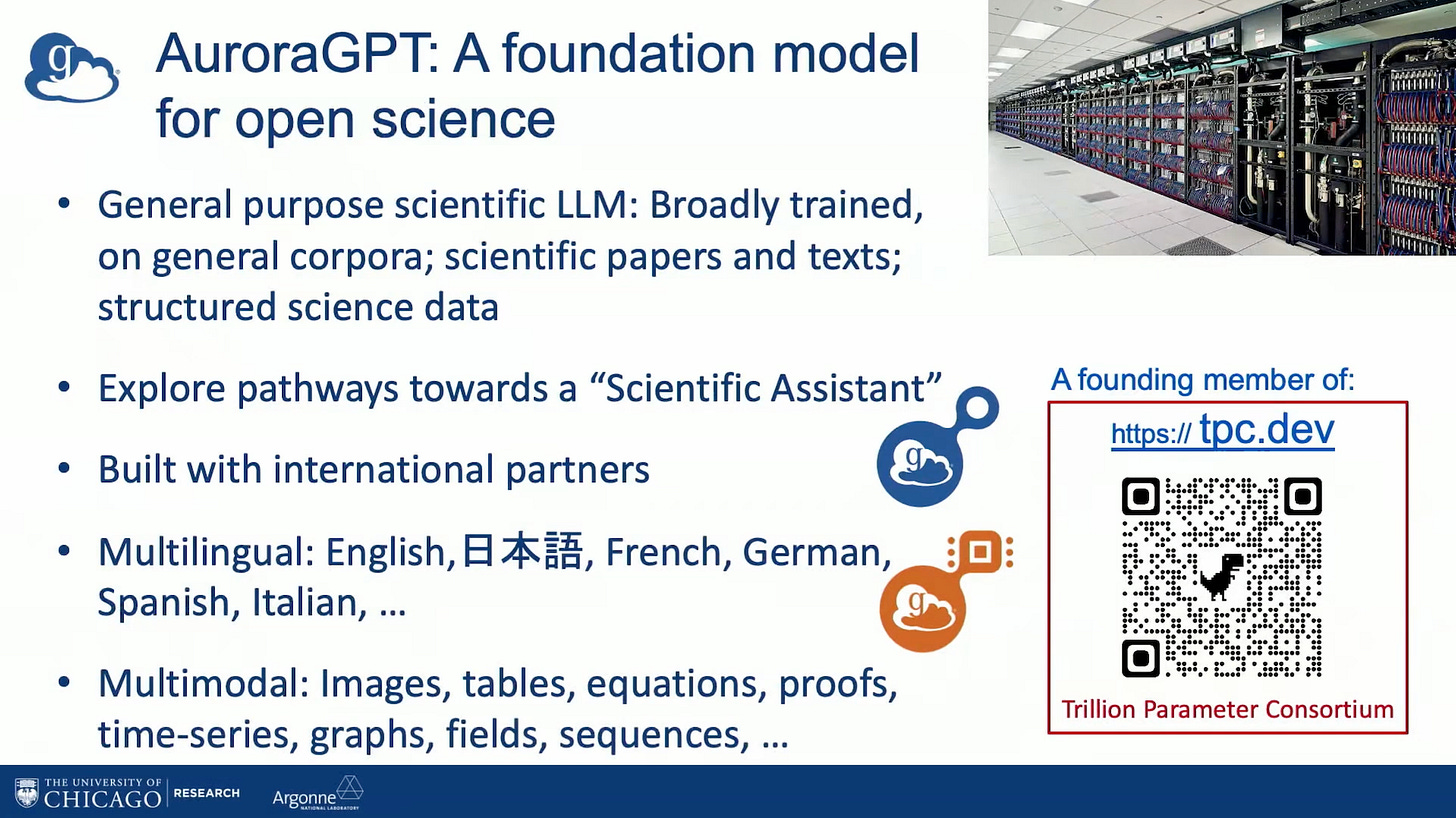

AuroraGPT dataset presentation: 25/Jun/2024

The Argonne National Laboratory is presenting a seminar on ‘Preparing Data at Scale for AuroraGPT’, the upcoming trillion-parameter science model.

Abstract: In this talk, I’ll share the recent progress of the AuroraGPT Data Team, how we contribute to the project of building a science focused LLM with AuroraGPT, how we collaborate with the other teams, and what topics we see as open questions. As the data team, our team is responsible for identifying, preparing, and deduplicating scientific data and text. We’ll talk about the systems and data quality challenges that our team tackles to prepare terabytes of scientific data and text to produce high quality text and data for training.

Tuesday 25/Jun/2024 @ 1:00 PM – 2:00 PM (Illinois, USA)

Read more: https://www.anl.gov/event/preparing-data-at-scale-for-auroragpt

Some limited updates in this video from May/2024 at 51m47s.
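The abstract mentions deduplicating scientific data and text. The talk does not describe the team's actual pipeline, so below is a minimal sketch of the simplest form, exact-match deduplication after light normalization. The function names and sample documents are hypothetical. Pipelines at terabyte scale typically add near-duplicate detection (for example MinHash) on top of this.

```python
import hashlib
import re
from typing import Iterable, List


def normalise(text: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting differences don't hide duplicates.
    return re.sub(r"\s+", " ", text.lower()).strip()


def exact_dedup(docs: Iterable[str]) -> List[str]:
    """Keep the first copy of each document whose normalised text is identical."""
    seen = set()
    kept = []
    for doc in docs:
        digest = hashlib.sha256(normalise(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept


# Hypothetical corpus: the second entry differs only in case and spacing.
docs = [
    "The proton mass is 938 MeV.",
    "the proton  mass is 938 MeV.",
    "Neutrons carry no electric charge.",
]
print(exact_dedup(docs))  # keeps the first and third documents
```

Storing fixed-size hashes rather than full texts keeps the ‘seen’ set small, which matters when the corpus is measured in terabytes.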

How Meta trains large language models at scale (12/Jun/2024)

Here’s a mental toy to play with: training Llama 3! Meta's AI research has faced significant computational challenges due to the scale required for training large language models (LLMs). The company has had to innovate across its infrastructure stack, including hardware reliability, fast recovery on failure, efficient preservation of the training state, and optimal connectivity between GPUs. Meta built two 24k-GPU clusters, one using RoCE and the other InfiniBand, to train its latest model, Llama 3, demonstrating how it balances performance with operational lessons learned.
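‘Fast recovery on failure’ and ‘efficient preservation of the training state’ boil down to periodic checkpointing: save the state every N steps so a crash only loses the work since the last save. Below is a minimal single-process sketch of that idea. Meta's real system is far more elaborate (thousands of GPUs, sharded state), and every function name here is hypothetical, with a stand-in ‘loss’ instead of a real optimizer step.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    # Write to a temp file, then atomically rename, so a crash mid-write
    # never corrupts the last good checkpoint.
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp, path)


def load_checkpoint(path: str) -> dict:
    if not os.path.exists(path):
        return {"step": 0, "loss": None}  # fresh start
    with open(path) as f:
        return json.load(f)


def train(path: str, total_steps: int, every: int, fail_at: int = -1) -> dict:
    state = load_checkpoint(path)  # resume from the last saved step, if any
    for step in range(state["step"], total_steps):
        if step == fail_at:
            raise RuntimeError(f"simulated hardware failure at step {step}")
        state = {"step": step + 1, "loss": 1.0 / (step + 1)}  # stand-in for real training
        if state["step"] % every == 0:
            save_checkpoint(path, state)
    return state


ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
try:
    train(ckpt, total_steps=100, every=10, fail_at=37)
except RuntimeError as err:
    print(err)  # simulated hardware failure at step 37
resumed_from = load_checkpoint(ckpt)["step"]    # 30: the last checkpoint before the failure
final = train(ckpt, total_steps=100, every=10)  # picks up at step 30, not 0
print(resumed_from, final["step"])
```

The trade-off is the checkpoint interval: saving more often loses less work per failure but spends more time writing state, which is why fast checkpointing matters at cluster scale.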

Read more via Engineering at Meta.

Former Snap engineer launches Butterflies, a social network where AIs and humans coexist (18/Jun/2024)

Butterflies is a new social network that integrates both humans and AI personas, allowing them to interact through posts, comments, and direct messages. Founded by Vu Tran, a former Snap engineer, the app has been in beta for five months and is now available to the public. Users can create AI personas, called Butterflies, that autonomously generate content and engage with others on the platform.

Read more via TechCrunch.

Try it on mobile: https://www.butterflies.ai/

Compare Butterflies with the hilarious AI bots-only social network, Chirper.ai as featured in The Memo edition 9/Jun/2023.

Introducing Teleport by Varjo – scan, reconstruct, and explore real-world places in stunning detail (18/Jun/2024)

Teleport by Varjo, now available for preview to early access users, allows you to create photorealistic 3D virtual recreations of real-world locations effortlessly with just an iPhone, and share them with anyone, anywhere.

Watch the video (link):

Flashback

I’ve been wandering down the halls of IQ testing AI models, something I started exploring back in 2021. The sheer pace of change in model power and smarts is incredibly jarring to me.

Take a look: https://lifearchitect.ai/iq-testing-ai/

Next

The next roundtable will be:

Life Architect - The Memo - Roundtable #13

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 29/Jun/2024 at 5PM Los Angeles

Saturday 29/Jun/2024 at 8PM New York

Sunday 30/Jun/2024 at 10AM Brisbane (new primary/reference time zone)

or check your timezone via Google.
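If you'd rather convert the Brisbane reference time yourself, here is a small sketch using Python's standard `zoneinfo` module (Python 3.9+; on Windows it may also need the `tzdata` package). The list of zones is just an example; swap in your own IANA zone name.

```python
from datetime import datetime
from zoneinfo import ZoneInfo

# Roundtable start in the reference time zone (Brisbane, which has no daylight saving).
start = datetime(2024, 6, 30, 10, 0, tzinfo=ZoneInfo("Australia/Brisbane"))

for zone in ["America/Los_Angeles", "America/New_York", "Europe/London"]:
    local = start.astimezone(ZoneInfo(zone))
    print(f"{zone}: {local:%A %d/%b/%Y %I:%M %p}")
```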

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!

All my very best,

Alan

LifeArchitect.ai