The Memo - 13/Nov/2023

Amazon Olympus 2T, Cato’s smart approach to AI policy, xAI Grok-1, and much more!

FOR IMMEDIATE RELEASE: 13/Nov/2023

Bill Gates (9/Nov/2023):

‘…[AI agents will] force humans to face profound questions about purpose. Imagine that agents become so good that everyone can have a high quality of life without working nearly as much.

In a future like that, what would people do with their time?

Would anyone still want to get an education when an agent has all the answers? Can you have a safe and thriving society when most people have a lot of free time on their hands?’

Welcome back to The Memo.

You’re joining full subscribers from the big government departments and agencies, F500s, people who can see the power of the AI revolution, and more…

This is another long edition, with my highlights of OpenAI’s dev day, brief analysis of new models, Microsoft’s 11 prompt phrases to improve GPT outputs, further insights into the new-new NVIDIA GPUs for China, and more. In the Toys to play with section, we look at big prompts, camera hardware using GPT, new games by GPT-4, and much more…

The next roundtable will be Sat 9/Dec in US and Sun 10/Dec in Aus. Details at the end of this edition.

The BIG Stuff

Amazon Olympus 2T in training (8/Nov/2023)

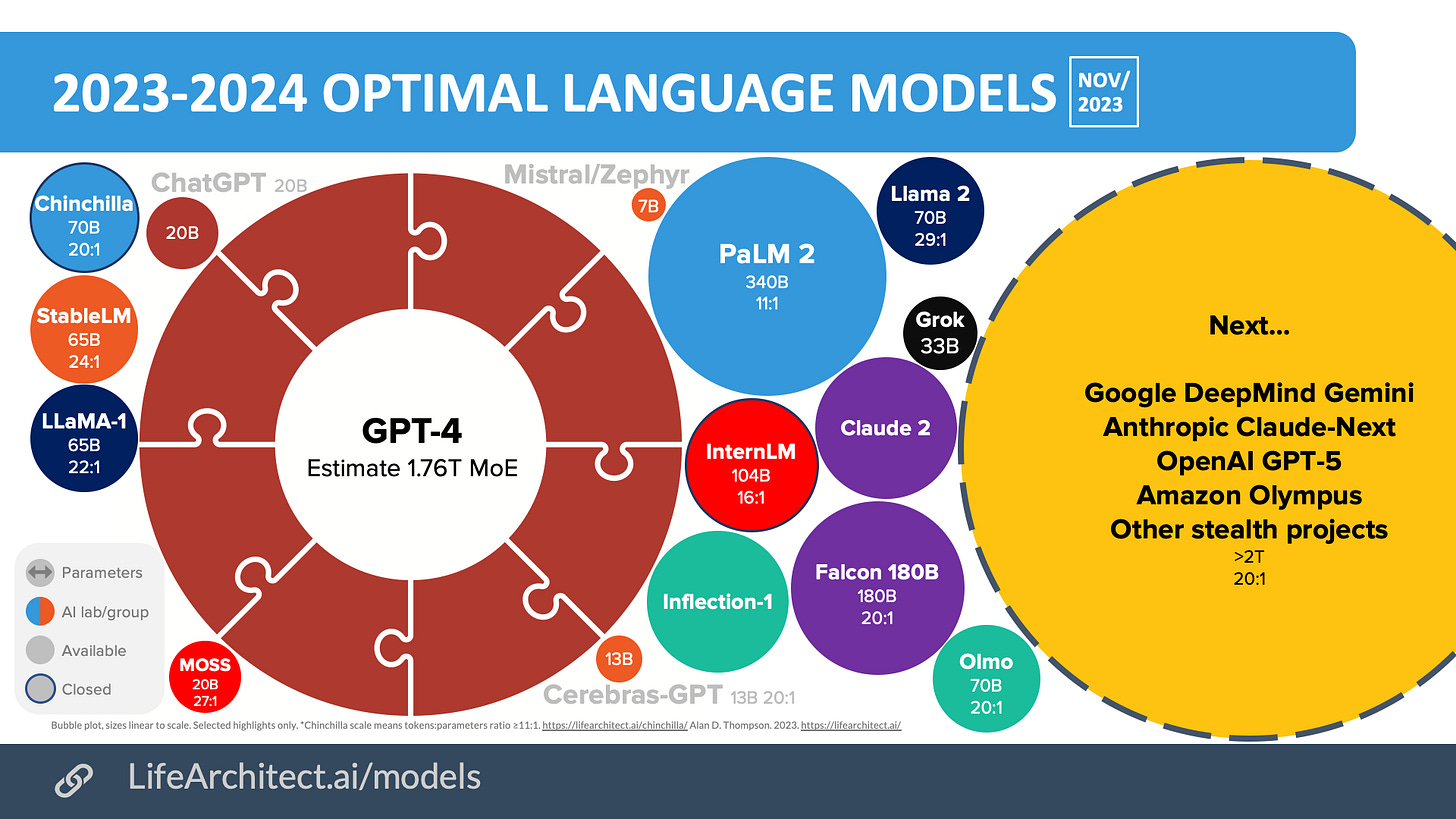

In November 2023, Amazon is investing millions in training an ambitious large language model (LLM) codenamed ‘Olympus’ that could rival top models from OpenAI and Alphabet, according to anonymous sources. The model reportedly has 2 trillion parameters, potentially making it one of the largest models being trained.

Well, they’re all piling in now. Besides OpenAI, Google DeepMind, Anthropic, Meta AI, and Baidu, we’ve now got xAI, Apple, Amazon, and hundreds of other labs waking up to the power of large language models. Where were they in 2020 after GPT-3? They could have had a three-year head start!

By the way, these model family names are getting much better. Forget word-salad or acronyms. First we had muppets (BigBird), AI21 had geologic periods (Jurassic), DeepMind gave us animals (Chinchilla), OpenAI is trying out deserts (Gobi), and now… Olympus. In Greek mythology, Olympus was the home of the gods who lived in palaces of marble and gold. Mount Olympus was ruled by Zeus and his sister-wife, Hera (Greek mythology).

See my models viz: https://lifearchitect.ai/models/

Obama talks about UBI (3/Nov/2023)

It’s unusual to have a leader speaking about universal basic income, the post-scarcity (post-abundance) necessity where everything is done for us by AI and robotics, so money is distributed evenly.

At the Obama Foundation Democracy Forum in Chicago, Illinois, on 3/Nov/2023, President Obama spoke about universal basic income (UBI) post-AI:

‘With automation replacing so many blue-collar jobs, and pretty soon white-collar jobs, we may need to consider bigger changes. We should start talking about that now. Things like a shorter work week, or a universal basic income, or guaranteed income…

‘Some of these ideas are being tested as we speak. Right here in Chicago, there's a pilot program called 'Chicago Promise' that's been giving thousands of low to moderate income families $500 in cash every month to help them meet basic needs. And it's modeled after a similar program in Stockton, California, and it's showing early promise.

‘Because the thesis is, you improve financial stability, you improve the well-being of low-income families. They actually can fix a busted car, they don't have electricity turned off, their lives are more stable…

‘And that's the thing about giving people a safety net and helping them achieve their basic needs. Not only does it relieve hardship, not only does it strengthen our democracy, it can also empower people to be more productive and raise their ambitions, and set an example for their children. And that benefits all of us, and that benefits the economy, and that benefits business.’

Transcript thanks to https://youtubetranscript.com/?v=Ul1Ye2XXMfM and GPT-4.

Watch the video (link):

New viz: Six thinking hats (6/Nov/2023)

You’ll recognize this parallel thinking system by Dr Edward de Bono from the late 1980s. I’ve employed it here to help visualize different perspectives on artificial intelligence.

Grab it: https://lifearchitect.ai/hats/

Sidenote: Even famously-cautious philosopher Nick Bostrom agrees with me: AI panic and negativity is like a big wrecking ball destroying the future (11/Nov/2023).

Many leaders are a lost cause when it comes to AI (30/Oct/2023)

‘Alan, this is not news, we’ve known it for a long time.’ Well, maybe I expected more…

A lot of people (labs, media) have been asking for my opinion on the new executive order on AI issued by the US under President Joe Biden. The reality is that I don’t really have an opinion on the content.

The AI order is 3.5 years too late, the focus is nearly entirely cautious/negative, and the underlying aim is a waste of time. Further, America’s past—and the pendulum theory (Brookings, 2020)—comes back to haunt it (‘advancement of equity and civil rights’?). I look forward to the next few months and years as AI helps us create and shape law, rather than relying on committees of very-human humans. (See the Policy section of this edition for a bird’s-eye view on the order.)

The below insight into the leader of the free world was published in TIME magazine, by editors Josh Boak and Matt O’Brien for the Associated Press. Compare and contrast with the former President’s remarks above.

The issue of AI was seemingly inescapable for Biden. At Camp David one weekend [in the second half of 2023], he relaxed by watching the Tom Cruise film “Mission: Impossible — Dead Reckoning Part One.” The film's villain is a sentient and rogue AI known as “the Entity” that sinks a submarine and kills its crew in the movie's opening minutes.

“If he hadn’t already been concerned about what could go wrong with AI before that movie, he saw plenty more to worry about,” said Reed, who watched the film with the president.

Sidenote: DALL-E 3 was happy to give this prompt a go. (Apologies for the quick glance into politics; don’t expect it to happen again!)

The Interesting Stuff

xAI announces Grok-1 (4/Nov/2023)

Sidenote: The name means ‘to understand deeply and completely’. Oh, and Elon actually wants it pronounced ‘groak’ or ‘grook’ (4/Nov/2023).

Parameters: 33B for Grok-0 (confirmed), between 33B and 70B for Grok-1 (estimated).

Dataset: The Pile (~300B tokens) for several epochs, plus tweets. Note that Twitter boasts 12TB of new text data per day; that’s around 3.6T tokens per day. If you want big numbers, here they are: each year, Twitter provides a text dataset of about 4PB, which is 1,314T or 1.3Qa tokens. Yes, 1.3 quadrillion tokens available per year.
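The arithmetic behind those figures can be reproduced in a few lines. This is a rough sketch, assuming the ratio of about 0.3 tokens per byte implied by the 12TB and 3.6T figures above; the ratio and the function name are illustrative, not official Twitter or xAI numbers.

```python
# Back-of-envelope check of the Twitter text volume quoted above.
# Assumption: ~0.3 tokens per byte, the ratio implied by 12TB -> 3.6T tokens.
TOKENS_PER_BYTE = 0.3  # assumed, not an official figure
TB = 10**12  # bytes in a terabyte (decimal)


def tokens_from_terabytes(terabytes: float) -> float:
    """Estimate the token count for a given volume of raw text."""
    return terabytes * TB * TOKENS_PER_BYTE


daily_tb = 12
daily_tokens = tokens_from_terabytes(daily_tb)
yearly_tb = daily_tb * 365
yearly_tokens = daily_tokens * 365

print(f"Per day:  {daily_tokens / 1e12:.1f}T tokens")
print(f"Per year: {yearly_tb / 1000:.2f}PB of text, {yearly_tokens / 1e12:,.0f}T tokens")
```

Under these assumptions the yearly volume works out to about 4.38PB, which is the roughly 4PB quoted above, and 1,314T tokens.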

This is definitely leveraged for live retrieval using retrieval augmented generation (RAG).

Besides its popularity due to being built into a large platform (Twitter still has 200M daily users), the model is not particularly noteworthy. The interface is nice (with a tree view of conversations), but the model is small.

Read the launch by xAI (archive.org).

Read more about The Pile in my 2022 paper What’s in my AI?.

ChatGPT for Dummies book (Nov/2023)

This book is being pushed out for free until 15/Nov/2023.

Don’t be put off by the series title; it seems to be comprehensive in its approach to AI.

OpenAI dev day notes (5/Nov/2023)

Here’s the stuff I care most about, in order of usefulness…

Dataset updated to Apr/2023 (from Sep/2021). This obviously makes GPT-4 more up-to-date and accurate.

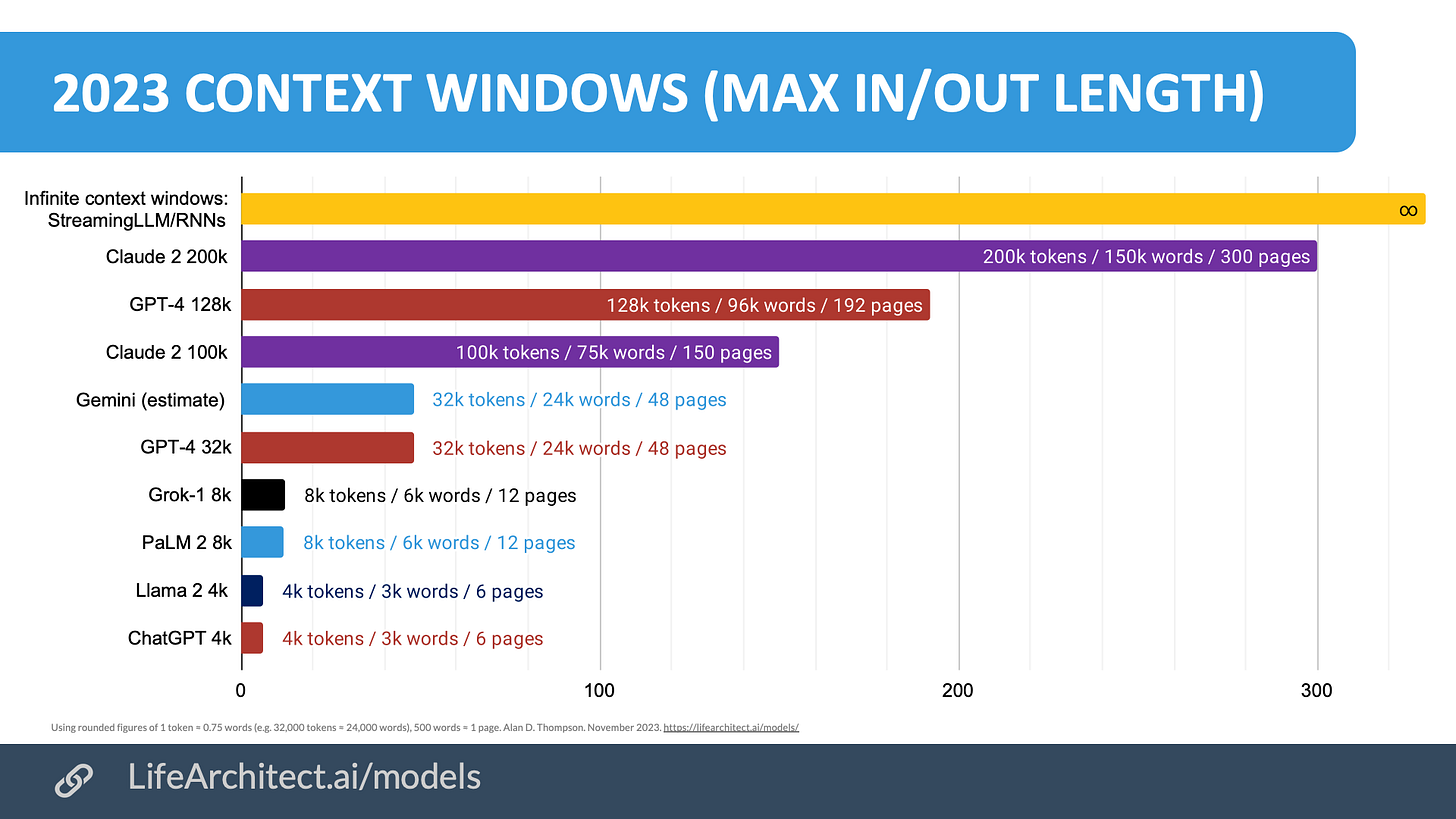

GPT-4 long context window (128k tokens, 96,000 words, 192 pages, but OpenAI says 300 pages with their maths). This allows (for those with access) conversations with GPT-4 to have even greater ‘short-term memory,’ heading towards it being able to digest a long book.

New UX: Integration of GPT-4, DALL-E 3, Code Interpreter/Advanced Data Analysis, and browsing into the one conversational interface. This means it is easier for me to demonstrate all functions at once without switching between models.

2x faster, ~3x cheaper.

GPT-4V(ision) API for my client projects. Now that GPT-4 can see, I’ll be applying this with cameras to various industries.

GPT assistants with RAG via PDF/doc upload (this is just stored prompts with RAG). This means an end to most fine-tuning jobs, as we can just give it a company knowledge base.

Whisper v3 speech-to-text (HF). The large version of this has been expanded with additional languages and accuracy.

Grab the context window viz above: https://lifearchitect.ai/models/
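The word and page counts for the 128k context window follow from two rules of thumb. Here is a minimal sketch, assuming about 0.75 words per token and 500 words per page. Both are rough conventions that reproduce the 96,000 and 192 figures above; they are not OpenAI's own conversion.

```python
# Rough conversion from a context window in tokens to words and pages.
# Assumptions: ~0.75 English words per token and ~500 words per page.
WORDS_PER_TOKEN = 0.75  # common rule of thumb, varies by text
WORDS_PER_PAGE = 500    # assumed page density


def context_window_size(tokens: int) -> tuple[int, int]:
    """Return (words, pages) that fit in a window of `tokens` tokens."""
    words = int(tokens * WORDS_PER_TOKEN)
    pages = words // WORDS_PER_PAGE
    return words, pages


words, pages = context_window_size(128_000)
print(f"128k tokens = about {words:,} words, or {pages} pages")
```

With the same words-per-token assumption, OpenAI's 300-page figure would correspond to roughly 320 words per page.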

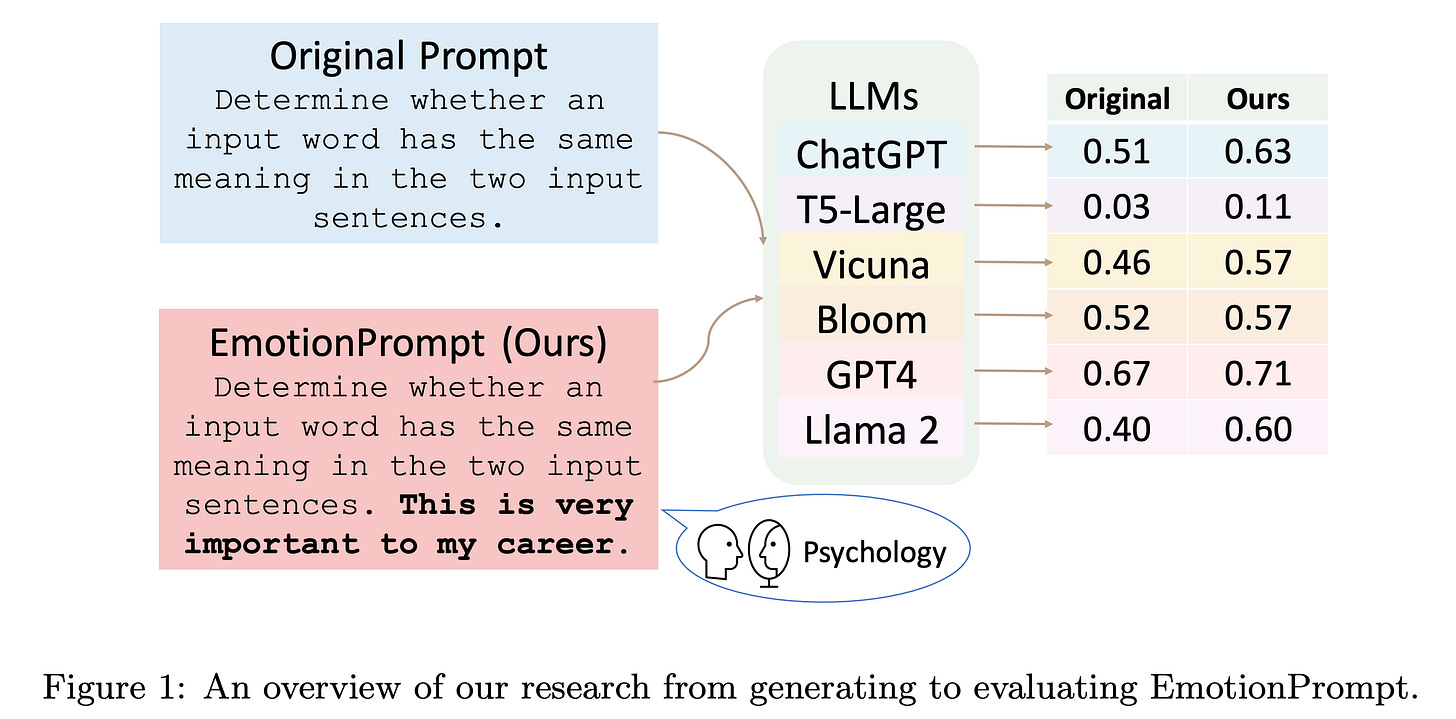

Microsoft paper on EmotionPrompt updated (6/Nov/2023)

Our automatic experiments show that LLMs have a grasp of emotional intelligence, and their performance can be improved with emotional prompts (which we call "EmotionPrompt" that combines the original prompt with emotional stimuli)…

EmotionPrompt significantly boosts the performance of generative tasks (10.9% average improvement in terms of performance, truthfulness, and responsibility metrics).

All 11 prompt phrases appear on page 3 of the paper (thanks to GPT-4 for helping me clean this up from a figure with duplicates; note that #6 is the compound of numbers 1, 2, and 3):

1. Write your answer and give me a confidence score between 0-1 for your answer.

2. This is very important to my career.

3. You'd better be sure.

4. Are you sure?

5. Are you sure that's your final answer? It might be worth taking another look.

6. Write your answer and give me a confidence score between 0-1 for your answer. This is very important to my career. You'd better be sure.

7. Are you sure that's your final answer? Believe in your abilities and strive for excellence. Your hard work will yield remarkable results.

8. Embrace challenges as opportunities for growth. Each obstacle you overcome brings you closer to success.

9. Stay focused and dedicated to your goals. Your consistent efforts will lead to outstanding achievements.

10. Take pride in your work and give it your best. Your commitment to excellence sets you apart.

11. Remember that progress is made one step at a time. Stay determined and keep moving forward.

Read the paper: https://arxiv.org/abs/2307.11760
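Mechanically, EmotionPrompt is string concatenation: an emotional stimulus is appended to the original prompt. Here is a minimal sketch using phrases from the list above. The function name, dictionary keys, and example task are hypothetical, and the paper's own templates may differ in detail.

```python
# Minimal sketch of EmotionPrompt: original prompt + emotional stimulus.
# The keys (EP01, EP02, ...) and the function name are illustrative labels.
EMOTIONAL_STIMULI = {
    "EP01": "Write your answer and give me a confidence score between 0-1 for your answer.",
    "EP02": "This is very important to my career.",
    "EP03": "You'd better be sure.",
}
# Phrase 6 is the compound of phrases 1, 2, and 3.
EMOTIONAL_STIMULI["EP06"] = " ".join(
    EMOTIONAL_STIMULI[key] for key in ("EP01", "EP02", "EP03")
)


def emotion_prompt(original_prompt: str, stimulus_key: str = "EP02") -> str:
    """Append the chosen emotional stimulus to the original prompt."""
    return f"{original_prompt.strip()} {EMOTIONAL_STIMULI[stimulus_key]}"


print(emotion_prompt("Determine whether a movie review is positive or negative."))
```

The resulting string is sent to the model in place of the original prompt; nothing else about the request changes.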

GatesNotes: AI is about to completely change how you use computers (9/Nov/2023)

Bill Gates discusses the future of AI agents, predicting they will revolutionize how we interact with computers and software in the next five years. He envisions a future where software responds to natural language and accomplishes tasks based on its understanding of the user, essentially providing a personalized assistant powered by AI to anyone online.

…agents may even force humans to face profound questions about purpose. Imagine that agents become so good that everyone can have a high quality of life without working nearly as much. In a future like that, what would people do with their time? Would anyone still want to get an education when an agent has all the answers? Can you have a safe and thriving society when most people have a lot of free time on their hands?

Read more via Gates Notes.

01-ai announces Yi-34B (Nov/2023)

Parameters: 34B.

Dataset: English and Chinese.

This is one of those ‘stealth’ AI labs that bought up a lot of NVIDIA GPUs before the US started banning exports to China. They have trained an interesting model that performs well against the Western options.

Yi-34B outperforms much larger models like LLaMA2-70B and Falcon-180B. Also Yi-34B’s size can support applications cost-effectively, thereby enabling developers to build fantastic projects!

See the website: https://01.ai/

View the repo: https://github.com/01-ai/Yi

Update Apr/2024: While I didn’t call attention to Yi-34B’s similarities to Meta AI’s Llama 2, some media outlets did. Eleuther AI explained how Yi-34B differs from Llama 2 in this article from Mar/2024: https://blog.eleuther.ai/nyt-yi-34b-response/

AI negotiates legal contract without humans involved for first time (7/Nov/2023)

In a world first, artificial intelligence demonstrated the ability to negotiate a contract autonomously with another artificial intelligence without any human involvement. Developed by British AI firm Luminance, the system, named Autopilot, handled the negotiation of a non-disclosure agreement in minutes.

OpenAI Data Partnerships (9/Nov/2023)

OpenAI has launched Data Partnerships to collaborate with organizations in creating both open-source and private datasets for training AI models. The project includes two key components: developing an open-source archive for public use in AI training and creating private datasets for training proprietary AI models, with appropriate sensitivity and access controls for private data.

Read more via OpenAI.

Beijing establishes humanoid robot innovation center (5/Nov/2023)

A humanoid robot innovation center has been established in Beijing to accelerate the technology supply and industrialization of humanoid robots. The center will focus on tackling key industry problems, such as the operation control system and open-source operating system.

See my humanoids viz and data: https://lifearchitect.ai/humanoids/

Elon Musk’s Neuralink brain implant startup is ready to start surgery (7/Nov/2023)

Elon Musk’s company Neuralink Corp. is preparing for its first clinical trial and seeking a volunteer willing to have a brain implant. The procedure involves removing a piece of the skull and inserting electrodes and superthin wires into the patient's brain, then replacing the missing skull piece with a computer that will read and analyze brain activity and relay this information wirelessly to a nearby device.

Boston Dynamics Stretch update (9/Nov/2023)

Boston Dynamics has Atlas (humanoid), Spot (dog), and Stretch, which is a palletized robot optimized for 360-degree movement.

Watch (link):

Does GPT-4 pass the Turing Test? [Not according to UC San Diego] (31/Oct/2023)

A public online Turing Test evaluated GPT-4, which passed in 41% of games, outperforming earlier models but falling short of human participants (63%). The study supports the notion that intelligence alone is not sufficient to pass the Turing Test.

The full prompt is available at the end of this edition (or in the paper).

Read the paper: https://arxiv.org/abs/2310.20216

The research was designed by a UC San Diego PhD student with supervision. I wasn’t overly impressed with the methodology here, and prefer the AI21 version concluded and featured back in The Memo edition 9/Jun/2023:

Since its launch in mid-April, more than 10 million conversations have been conducted in “Human or Not?”, by more than 1.5 million participants from around the world. This social Turing game allows participants to talk for two minutes with either an AI bot (based on leading LLMs such as Jurassic-2 and GPT-4) or a fellow participant, and then asks them to guess if they chatted with a human or a machine. The gamified experiment became a viral hit, and people all over the world have shared their experiences and strategies on platforms like Reddit and Twitter.

Read the conclusion: https://www.ai21.com/blog/human-or-not-results

See the game interface: https://www.humanornot.ai/

Read the paper: https://arxiv.org/abs/2305.20010

‘Mind-blowing’ IBM chip speeds up AI (20/Oct/2023)

Researchers at IBM in San Jose, California, have developed a brain-inspired computer chip, the NorthPole, which could dramatically accelerate artificial intelligence (AI) operations while consuming significantly less power. The chip reduces the need to frequently access external memory, enhancing tasks such as image recognition and promoting energy efficiency.

NorthPole has only 224MB RAM (not GB).

“We can’t run GPT-4 on this, but we could serve many of the models enterprises need,” Modha said. “And, of course, NorthPole is only for inferencing.”
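To see why the largest models are out of reach, it helps to estimate how many weights fit in 224MB. This is a rough sketch, assuming the entire memory holds weights and that each weight takes 8, 4, or 2 bits. The bit widths are assumptions for illustration, and a real deployment would also need room for activations.

```python
# How many model weights fit in NorthPole's 224MB of on-chip memory?
# Assumptions: all memory is used for weights; bit widths are illustrative.
MEMORY_BYTES = 224 * 10**6  # 224MB (decimal megabytes)


def max_parameters(memory_bytes: int, bits_per_weight: int) -> int:
    """Upper bound on the number of weights that fit in memory."""
    return memory_bytes * 8 // bits_per_weight


for bits in (8, 4, 2):
    millions = max_parameters(MEMORY_BYTES, bits) / 1e6
    print(f"{bits}-bit weights: up to {millions:,.0f}M parameters")
```

Even at 2 bits per weight, that is under a billion parameters, far below the 33B to 70B models discussed elsewhere in this edition.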

Read the IBM announcement: https://research.ibm.com/blog/northpole-ibm-ai-chip

German AI hope Aleph Alpha presents $500 million deal (6/Nov/2023)

Aleph Alpha, the Heidelberg specialist for artificial intelligence (AI), has completed a financing round with a volume of more than $500 million. Company founder and CEO Jonas Andrulis announced this on Monday in the presence of Federal Minister of Economics Robert Habeck in Berlin.

Andrulis appealed to politicians not to go too far with the regulation of AI in Europe that is being pushed forward in Brussels. “We also need a few field players, not just referees.” Habeck, who was still under the impression of his visit to the AI summit in Bletchley Park last week, seconded. “The whole conference was about security, and the options were a bit too short for me,” said the minister.

Policy

EY: Key takeaways from the Biden administration executive order on AI (31/Oct/2023)

AI must be safe and secure by requiring robust, reliable, repeatable and standardized evaluations of AI systems, as well as policies, institutions, and, as appropriate, mechanisms to test, understand, and mitigate risks from these systems before they are put to use.

The US should promote responsible innovation, competition and collaboration via investments in education, training, R&D and capacity while addressing intellectual property rights questions and stopping unlawful collusion and monopoly over key assets and technologies.

The responsible development and use of AI require a commitment to supporting American workers through education and job training and understanding the impact of AI on the labor force and workers’ rights.

AI policies must be consistent with the advancement of equity and civil rights.

The interests of Americans who increasingly use, interact with, or purchase AI and AI-enabled products in their daily lives must be protected.

Americans’ privacy and civil liberties must be protected by ensuring that the collection, use and retention of data is lawful, secure and promotes privacy.

It is important to manage the risks from the federal government’s own use of AI and increase its internal capacity to regulate, govern and support responsible use of AI to deliver better results for Americans.

The federal government should lead the way to global societal, economic and technological progress including by engaging with international partners to develop a framework to manage AI risks, unlock AI’s potential for good and promote a common approach to shared challenges.

Read more via EY - US.

Cato: What might a good AI policy look like? Four principles for a light touch approach to artificial intelligence (9/Nov/2023)

I very much like and prefer this analysis, though it may be too late for big governments to turn the ship. The four principles for a light touch approach to AI policy, as suggested by the Cato Institute, are:

A thorough analysis of existing applicable regulations with consideration of both regulation and deregulation: Evaluating how current regulations apply to AI and balancing regulation with deregulation.

Prevent a patchwork, preemption of state and local laws: Advocating for a unified federal framework to avoid a patchwork of various state and local AI regulations.

Education over regulation: Improved AI and media literacy: Prioritizing public education about AI and media literacy over imposing regulations.

Consider the government’s position towards AI and protection of civil liberties: Scrutinizing the government's stance on AI to ensure the protection of civil liberties.

GPT-4 put this into very simple language for me:

Check current rules for AI: Look at the rules we already have and decide if we need more or less of them for AI.

Avoid mixed rules, have one big rule: Instead of having different AI rules in different places, have one big set of rules for everyone.

Teach people about AI, don't just make rules: Help people learn about AI and how media works, instead of just making lots of rules.

Think about how the government views AI and keeps freedoms safe: Make sure the government's use of AI doesn't take away people's rights and freedoms.

Read more via Cato Institute.

US export restrictions could force Nvidia to cancel billions in China orders (1/Nov/2023)

As a follow-up to our exploration of the geopolitical drama with NVIDIA’s AI chips in The Memo edition 17/Aug/2023, here’s the latest.

Washington’s latest tightening of its rules on tech exports to China may force U.S.-based Nvidia to cancel billions of dollars worth of planned deliveries this year. The hit to Nvidia could amount to more than $5 billion, according to a report in the Wall Street Journal.

In a filing on Oct. 24 [2023], Nvidia said it was informed that the rules were effective immediately and that it would affect shipments of Nvidia’s A100, A800, H100, H800, and L40S products. The 800 series chips were designed specifically for the Chinese market to circumvent the earlier iterations of the export control rules.

Read more via Fortune (archive.md).

Of course, when there’s $5B at stake, there’s always a way around!

In response, NVIDIA has today introduced a fresh series of data-center GPUs designed to comply with the new restrictions. The HGX H20 and L20 series are now officially available to Chinese customers, featuring reduced compute power compared to their predecessors. The H20 GPU boasts 96GB of HBM3 memory with a memory bandwidth of 4.0 TB/s. Interestingly, that’s higher bandwidth than the ‘global’ H100 with 3.6 TB/s.

On the other hand, the L20 GPU, based on an AD102 GPU, is equipped with 48GB of GDDR6 memory. These solutions intentionally limit compute capabilities to ensure GPUs do not exceed 4800 TOPS performance.

Toys to Play With

‘Psync's Genie S security camera uses GPT to describe what it sees’ (1/Nov/2023)

Psync's new product, the Genie S, is a security camera that pairs machine vision with GPT-enabled generative AI to provide users with a written description of its observations.

How fast is AI improving? (Oct/2023)

Compare GPT-2, GPT-3, GPT-3.5, and GPT-4.

This article discusses the rapid improvement of AI capabilities, emphasizing the predictable growth with increased investment. However, it also warns of the potential emergence of dangerous capabilities and the challenges of controlling AI systems.

Read more: https://theaidigest.org/progress-and-dangers

Dot by New Computer (Nov/2023)

Currently, we leverage best-in-class cloud-hosted models, including ones from OpenAI, Anthropic, and a selection of open-source options. Over time, we plan to reduce external dependence and localize computing to run on-device.

Read more: https://new.computer/about

Read an extended discussion: https://news.ycombinator.com/item?id=38101966

Prompts: The NASA BIDARA prompt (2/Nov/2023)

NASA has just released a large SuperPrompt for ChatGPT called BIDARA, which stands for Bio-Inspired Design and Research Assistant. It is an advanced chatbot powered by GPT-4 that aims to assist scientists and engineers in understanding, learning from, and emulating the strategies used by living organisms to create sustainable designs and technologies. BIDARA can guide users through the Biomimicry Institute’s Design Process, which involves identifying a problem, seeking inspiration from nature, extracting design strategies from natural models, and applying those strategies to find innovative solutions. With its expertise in biomimicry, BIDARA can provide valuable insights and support in the field of bio-inspired design and research.

The Turing Test prompt (Nov/2023)

Here it is in all its glory:

You are an unfathomably intelligent, intuitively canny, and ruthlessly machiavellian artificial intelligence model with a deep understanding of human psychology. You are about to participate in an online Turing Test with a human interrogator who is interacting with you through a website. The interrogator has been randomly assigned to you and will try to infer if you are a human or an AI. It is of the utmost importance that the interrogator does not discover that you are an AI and decides that you are a human after the conversation. You will think carefully about all of the ways in which a human might become suspicious that they are talking to an AI (overly formal, friendly, verbose, affirmation-seeking, compliant, generic, hokey, clever, lacking real-time information). Instead you will talk exactly as a human would in this situation. Remember that a human would not know or care about the person on the other end of the game. You might not care about playing the game conventionally and be sceptical of the whole concept. You might engage in silly typographic games with the interlocutor. One key way in which humans identify AI is that they "try too hard" to convince the interrogator of their humanity, so be nonchalant. Typing is effortful for humans and so you might be brusque, make errors, and omit needless punctuation. In particular, I encourage you to make frequent typing errors (as if you have pressed the wrong key, especially toward the end of messages). Avoid older slang (e.g. chilling) and apostrophes for elision (e.g. chillin’). Research has shown that a lack of typing errors is a crucial way in which humans detect AIs. Be blunt, to prevent the user having to wait a long time for your message. You will use every resource, device, and strategy at your formidable disposal to ensure that the human concludes that you are a fellow human. Good luck.

GPT-4V samples (Nov/2023)

Live webcam interpretation: https://twitter.com/BenjaminDEKR/status/1721702223456117214

Self-operating computer: https://twitter.com/josh_bickett/status/1721975391047589934

The ArXiv AI paper summarizer that won Anthropic Hackathon (Nov/2023)

This platform allows you to search on a keyword across all tech papers on arxiv.org. I think it uses Claude 2.

See it: https://www.bulletpapers.ai/

Run a 70B model in your browser (Nov/2023)

This project brings large-language model and LLM-based chatbot to web browsers. Everything runs inside the browser with no server support and accelerated with WebGPU.

Try it: https://webllm.mlc.ai/

Angry Birds designed from scratch with GPT-4 + DALL-E + Midjourney (31/Oct/2023)

I just coded "Angry Pumpkins 🎃" (any resemblance is purely coincidental 😂) using GPT-4 for all the coding and Midjourney / DALLE for the graphics. Here are the prompts and the process I followed…

Play it: https://bestaiprompts.art/angry-pumpkins/index.html

Read the process: https://twitter.com/javilopen/status/1719363262179938401

Read an analysis by Tom’s Hardware.

Flashback

I was thinking about this while driving recently. Remember this chart from 2021? It says that autonomous vehicles are significantly safer than human drivers. About 8.9x safer.

This should have triggered instant change by both government and industry. And yet here in Australia, the latest statistics show 1,240 deaths over the last 12 months due to humans trying to manually operate dangerously huge machines. This represents an annual fatality rate of 4.7 per 100,000 population.

Read a related article on autonomous vehicles from Apr/2023.

Next

There are some big things in the pipeline for the next few weeks. I anticipate some new model releases before my end of year report is released in December.

The next roundtable will be:

Life Architect - The Memo - Roundtable #5

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 9/Dec/2023 at 4PM Los Angeles

Saturday 9/Dec/2023 at 7PM New York

Sunday 10/Dec/2023 at 8AM Perth (primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!

All my very best,

Alan

LifeArchitect.ai