The Memo - 30/Apr/2023

Stability/DeepFloyd IF, RedPajama dataset, 'Laptop model' comparisons (Alpaca, Chimera), and much more!

FOR IMMEDIATE RELEASE: 30/Apr/2023

Welcome back to The Memo.

Thanks for being a part of history!

In the Policy section we look at what the US Government is doing with LLMs for Congress, take an inside look at how the US is implementing several post-2022 LLMs for military use, cover the EU’s latest updates to their Draft AI Act, and dive into an English copy of Japan’s latest AI white paper. (By the way, it is often next to impossible to find some of these resources. I should know; it’s my job to source them for you and put them here in The Memo!)

In the Toys to play with section, we look at some huge new dialogue models and interfaces, a free new iPhone app for AI video, a new hands-on tutorial by OpenAI, and much more…

Special: As promised, I’m also providing my recent 2-hour private keynote/workshop that was part of a well-produced multi-camera live and streamed paid event in Sydney (I believe tickets were $4,999). Links provided at the end of this edition for paid readers.

Part I: Large language models (hands-on with ChatGPT for business).

Part II: AI art (hands-on with Midjourney v5 including live audience examples).

The BIG Stuff

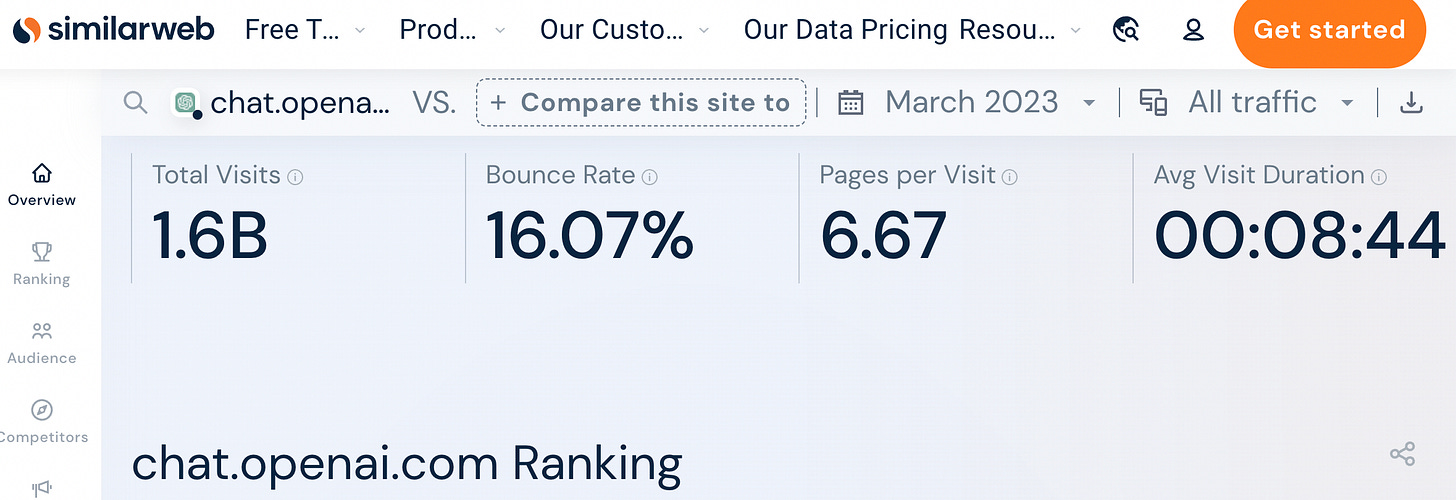

Exclusive: Users spent 13.9 billion minutes interacting with ChatGPT in March (Apr/2023)

1.6 billion visits x 8m44s (8.73) = 13.968 billion minutes

[corrected]

Data points: https://www.similarweb.com/website/chat.openai.com/#overview
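The estimate above is simple arithmetic, and it can be checked in a few lines. This is a minimal sketch using only the two figures quoted (1.6 billion visits and an average visit of 8m44s); the function name is my own, for illustration only.

```python
def total_minutes(visits: float, minutes: int, seconds: int) -> float:
    """Total time spent, in minutes, given visit count and average visit length."""
    avg_minutes = minutes + seconds / 60  # 8m44s -> about 8.73 minutes
    return visits * avg_minutes


# Figures quoted above: 1.6 billion visits, 8m44s average visit.
march_total = total_minutes(1.6e9, 8, 44)
print(f"{march_total / 1e9:.2f} billion minutes")
```

Using the unrounded average (8.7333... minutes) gives roughly 13.97 billion minutes, while the rounded 8.73 gives the 13.968 billion shown above.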

AI via ChatGPT is now ‘better’ and 9.8x ‘more empathetic’ than a doctor (28/Apr/2023)

[This study used ChatGPT.] Chatbot responses were rated of significantly higher quality than physician responses…9.8 times higher prevalence of empathetic or very empathetic responses for the chatbot… If more patients’ questions are answered quickly, with empathy, and to a high standard, it might reduce unnecessary clinical visits, freeing up resources for those who need them… High-quality responses might also improve patient outcomes… responsive messaging may collaterally affect health behaviors, including medication adherence, compliance (eg, diet), and fewer missed appointments.

Read my table of other ChatGPT achievements.

Google Brain merges with DeepMind (20/Apr/2023)

As referenced several times in the last few editions of The Memo, DeepMind and Google have formally announced the combining of the two organizations.

The research advances from the phenomenal Brain and DeepMind teams laid much of the foundations of the current AI industry, from Deep Reinforcement Learning to Transformers, and the work we are going to be doing now as part of this new combined unit will create the next wave of world-changing breakthroughs.

Read the announcement via Demis/DeepMind.

Bloomberg: LLMs increase productivity by 14% (24/Apr/2023)

Customer service workers at a Fortune 500 software firm who were given access to generative artificial intelligence tools became 14% more productive on average than those who were not, with the least-skilled workers reaping the most benefit.

That’s according to a new study by researchers at Stanford University and the Massachusetts Institute of Technology who tested the impact of generative AI tools on productivity at the company over the course of a year.

The research marks the first time the impact of generative AI tools on work has been measured outside the lab.

Read more via Bloomberg (paywall).

The population for this study was Filipino call centre workers.

Compare this with GitHub’s Sep/2022 research into productivity using LLMs (Copilot based on GPT-3), where the population was computer programmers:

[Software] developers who used GitHub Copilot completed the task significantly faster–55% faster than the developers who didn’t use GitHub Copilot. Specifically, the developers using GitHub Copilot took on average 1 hour and 11 minutes to complete the task, while the developers who didn’t use GitHub Copilot took on average 2 hours and 41 minutes. These results are statistically significant (P=.0017) and the 95% confidence interval for the percentage speed gain is [21%, 89%].
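For a rough sense of where the 55% figure comes from, here is a minimal sketch that treats ‘faster’ as the reduction in completion time relative to the non-Copilot group. That interpretation is my assumption; GitHub’s own figure was presumably calculated from unrounded data.

```python
def percent_faster(baseline_minutes: float, new_minutes: float) -> float:
    """Reduction in completion time, as a percentage of the baseline time."""
    return (baseline_minutes - new_minutes) / baseline_minutes * 100


without_copilot = 2 * 60 + 41  # 2 hours 41 minutes = 161 minutes
with_copilot = 1 * 60 + 11     # 1 hour 11 minutes = 71 minutes

print(f"{percent_faster(without_copilot, with_copilot):.1f}% less time")
```

The rounded averages give about 55.9%, which is in line with the quoted 55%.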

A plethora of laptop models (25/Apr/2023)

I use the term ‘laptop model’ to refer to any model that can fit in RAM on a 2023 laptop! This includes LLaMA-based models like Alpaca, Vicuna, Koala, and Chimera, as well as models built on other bases like Dolly 2.0 (Pythia) and BELLE (BLOOM).

You may have looked on as these were released this year, and you may have even downloaded some of them to try yourself on your local computer. While they are nowhere near as powerful as GPT-4 or PaLM, their size and accessibility is a huge leap in making language models available to people everywhere.

The table below is from the paper ‘Phoenix: Democratizing ChatGPT across Languages’ by Chen et al., p. 4, released 20/Apr/2023. There have been a few more models released in the 10 days since then!

And the figure below compares the ‘relative response quality’ of selected laptop models and dialogue models (like Bard and ChatGPT) as assessed by GPT-4 (p. 11).

Link: https://lifearchitect.ai/models/#laptop-models
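To make ‘fits in RAM’ a little more concrete, here is a back-of-the-envelope sketch. Everything in it is an assumption for illustration: a 16GB laptop, a 20% allowance for runtime overhead, and the common rule of thumb of 2 bytes per parameter at 16-bit precision (1 byte at 8-bit, half a byte at 4-bit). Real memory use depends on the runtime and context length.

```python
# Assumed bytes per parameter at each precision (rule of thumb, not measured).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params_billions: float, precision: str) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def fits_in_ram(params_billions: float, precision: str,
                ram_gb: float = 16.0, overhead: float = 1.2) -> bool:
    """True if the weights plus an assumed overhead allowance fit in ram_gb."""
    return weights_gb(params_billions, precision) * overhead <= ram_gb


for size in (7, 13, 30, 65):
    for precision in BYTES_PER_PARAM:
        verdict = "fits" if fits_in_ram(size, precision) else "too big"
        print(f"{size}B @ {precision}: ~{weights_gb(size, precision):.1f}GB, {verdict}")
```

Under these assumptions, a 13B model fits comfortably once quantized to 4-bit, while a 65B model does not fit at any of the three precisions.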

The Interesting Stuff

Datasets: training on code leads to reasoning ability (2022-2023)

Datasets are the big buckets of words used by AI labs to train models. Generally, they consist of web pages, books, articles, and Wikipedia. (Watch my 2-min video on this, or a longer version ‘for humans’.)

Researchers at Allen AI (with review by Google Brain) have noticed that including code in training datasets may be the cause of models learning to reason, especially via chain-of-thought (CoT).

We have concluded:

The ability to perform complex reasoning is likely to be from training on code.

If we consider the logic required to work through a programming language, it’s easy to see how beneficial this might be when applied to both human thought and daily living. For example, it may be that having a model step through a dataset with even a simple program in Pascal or BASIC or C (with its various functions and references) imitates some parts of the routines in our daily lives.

The paper is in very early draft (notes/outline) stage.

Datasets: Bigger and bigger (Apr/2023)

In a recent video I made an off-the-cuff remark that I’ve been ‘obsessed’ with datasets for a long time… it’s true!

My Mar/2022 paper What’s in my AI? A Comprehensive Analysis of Datasets Used to Train GPT-1, GPT-2, GPT-3, GPT-NeoX-20B, Megatron-11B, MT-NLG, and Gopher was well-received in academic, corporate, government, and intergovernmental circles.

Since that paper’s release, there have been a few more datasets to analyze.

Feb/2023: Meta AI’s LLaMA dataset with its 4TB of Common Crawl.

Mar/2023: OpenAI’s GPT-4 dataset designed by a team of 35 staff. No other information is available, but I’ve put together my best estimates on this dataset.

Apr/2023: Stability AI’s version of The Pile dataset, announced with their StableLM models, but no info has yet been released.

Apr/2023: Together AI’s RedPajama dataset.

This most recent one is interesting, and clones nearly exactly the LLaMA dataset:

RedPajama is more than double the size of the GPT-3 dataset (celebrating its 3rd anniversary in May/2023), but less than 10% of the size of GPT-4’s dataset using my estimates.

There is a seeming ‘duplication’ of web crawl data, using both a standard Common Crawl, as well as Google’s filtered version of the Common Crawl, C4. In effect, this means they have 20% of ‘clean’ common crawl (C4), and are then adding another 80% with ‘work to be done’ in the unfiltered Common Crawl. Work includes removing boilerplate and repeated text like footers, stripping out HTML, and more.

This open-source dataset contains 200GB of GitHub code (40% less than LLaMA), plus another 67GB of StackExchange code discussion. As highlighted above, allowing models to ‘see’ code during training may support complex reasoning abilities.

The dataset fits nicely in with the other recent releases, though it’s interesting to note just how much bigger the GPT-4 dataset is!

See my new table of big datasets.
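The ‘work to be done’ on unfiltered Common Crawl can be sketched in miniature. The example below is not the RedPajama or C4 pipeline; it is a simplified illustration using only the Python standard library, with a hypothetical threshold (`max_share`) for deciding when a repeated line counts as boilerplate.

```python
import re
from collections import Counter
from html import unescape

TAG_RE = re.compile(r"<[^>]+>")
SCRIPT_STYLE_RE = re.compile(r"(?is)<(script|style)\b.*?</\1\s*>")


def strip_html(page: str) -> str:
    """Remove script/style blocks and tags, then decode HTML entities."""
    page = SCRIPT_STYLE_RE.sub(" ", page)
    # Simplification: every tag becomes a line break, so inline markup
    # such as <b> will also split a sentence across lines.
    return unescape(TAG_RE.sub("\n", page))


def clean_corpus(pages: list[str], max_share: float = 0.5) -> list[str]:
    """Strip HTML, then drop lines that appear on more than max_share of pages."""
    docs = []
    for page in pages:
        lines = [line.strip() for line in strip_html(page).splitlines()]
        docs.append([line for line in lines if line])

    # Count each distinct line once per page, so footers stand out.
    line_counts = Counter(line for doc in docs for line in set(doc))
    limit = max_share * len(docs)
    return ["\n".join(line for line in doc if line_counts[line] <= limit)
            for doc in docs]


pages = [
    "<html><body><p>Cats purr.</p><footer>Copyright Example Corp</footer></body></html>",
    "<html><body><p>Dogs bark.</p><footer>Copyright Example Corp</footer></body></html>",
    "<p>Birds sing &amp; fly.</p><footer>Copyright Example Corp</footer>",
]
print(clean_corpus(pages))
```

Here the footer appears on every page, so it is dropped, while the unique sentence on each page is kept.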

If you’ve wondered what exactly ChatGPT (and other models) know about you, you can search for your own name or other interesting data in this searchable index here:

https://c4-search.apps.allenai.org/

Embodiment: Boston Dynamics Spot + ChatGPT (25/Apr/2023)

‘We integrated ChatGPT with our [Boston Dynamics Spot] robots.’

Watch: https://twitter.com/svpino/status/1650832349008125952

JPMorgan’s ChatGPT fine-tune rates 25 Years of Fed speeches (27/Apr/2023)

…Fed statements and central-banker speeches going back 25 years, the firm’s economists including Joseph Lupton employed a ChatGPT-based language model to detect the tenor of policy signals, effectively rating them on a scale from easy to restrictive in what JPMorgan is calling the Hawk-Dove Score.

Read more via Bloomberg: https://archive.is/npdCR

Amazon: working on a new LLM for Alexa (29/Apr/2023)

Amazon is building a more “generalized and capable” large language model (LLM) to power Alexa, said Amazon CEO Andy Jassy during the company’s first-quarter earnings call yesterday.

Apple: working on a new LLM for Siri (28/Apr/2023)

As expected!

Read (not very much) more: https://archive.is/RI4N2

Russia’s GigaChat: NeONKA 3.5 13B + Kandinsky 2.1 (Apr/2023)

I can certainly understand why many outlets ignore Russia. But they’ve always been hot on the heels of OpenAI, with ruGPT-3 and mGPT coming out soon after GPT-3’s release.

Now, Russian state-owned company Sberbank has integrated two models to make a new ChatGPT competitor.

NeONKA 3.5 13B large language model (to be publicly released). NeONKA stands for Neural Omnimodal Network with Knowledge-Awareness.

Kandinsky 2.1 text-to-image model.

The result is GigaChat. Access is by invitation only for now, but it is interesting to see an entire country jumping on this. The CEO of Sberbank called GigaChat:

…a breakthrough for the entire and large universe of Russian technology. Moreover, GigaChat is unique in that it has open-source architecture, whereas its global equivalents pursue a Closed AI strategy. Importantly, GigaChat can also be used by students and serious researchers in their papers, as well as by mass users who like to experiment with innovation.

Sources: BusinessWorld, Reuters.

PwC invests $1B in Microsoft/GPT (26/Apr/2023)

PwC was one of my main clients back in the day. I’m a little surprised that it has taken them this long to ‘invest’ in AI. Remember, GPT-3 is about to celebrate its third anniversary. Meanwhile, several of PwC’s consulting competitors (Bain, BCG) announced AI partnerships months ago. PwC says:

This investment features an industry-leading relationship with Microsoft, creating scalable offerings using OpenAI’s GPT-4/ChatGPT and Microsoft’s Azure OpenAI Service… to help support clients in reinventing their businesses and delivering better outcomes by generating richer insights, driving more productivity and developing new products and services in a way that builds greater trust with their stakeholders.

Sequoia invests $21M in Harvey AI (GPT-4) (26/Apr/2023)

Sequoia’s very informal announcement.

Weather people already being replaced with AI avatars (20/Apr/2023)

‘Jade, video presenter on the Swiss channel M Le Média, is generated by artificial intelligence.’

…it is because the Swiss channel has not found the right human candidate. Questioned by 20 minutes, the general manager of the Millennium Media group, Philippe Morax confides that a competition has indeed been set up, but “none of the candidates was selected”. He therefore decided to turn to new technologies, explaining that they are part of his media’s innovation strategy, to “provide our audience with quality content”.

Watch the video: https://twitter.com/VasseurVick/status/1649064305860530181

Atlassian introduces Atlassian Intelligence (20/Apr/2023)

I always feel just slightly embarrassed by Aussie company Atlassian. Their bloated Jira and Confluence offerings are found in big companies around the world. Now they’ve jumped on the post-2020 AI bandwagon, and only three years late! Screenshots and videos included.

Take a look: https://www.atlassian.com/blog/announcements/unleashing-power-of-ai

OpenAI applies to trademark ‘GPT’ (2022/2023)

Read a summary with all attachments.

Fudan MOSS 16B released (21/Apr/2023)

As reported in The Memo 25/Feb/2023 edition, MOSS is an interesting foray into chat models, and exceeds the Chinchilla recommendations. The Chinese/English model has finally been open-sourced under Apache 2.0, and is currently quite popular on both Hugging Face and GitHub.

Read more: https://lifearchitect.ai/moss/

The Snapchat My AI prompt (23/Apr/2023)

I’ve spent some time documenting the Snapchat My AI prompt. The platform is based on OpenAI’s GPT-3.5 models, and may include GPT-4. Some parts of the prompt are interesting, especially the instruction for it to be more succinct:

You must ALWAYS be extremely concise! 99% of the time, your lines should be a sentence or two.

I have had to use something similar with the new Leta GPT-4 prompt.

Read all ~500 words of the My AI prompt: https://lifearchitect.ai/snapchat/

Ray Kurzweil’s response to the ‘AI pause’ letter (21/Apr/2023)

Ray’s opinion on the ‘AI pause’ letter (‘too vague to be practical… tremendous benefits to advancing AI in critical fields such as medicine and health, education, pursuit of renewable energy sources to replace fossil fuels, and scores of other fields’).

Read more of Ray’s latest updates: https://lifearchitect.ai/kurzweil/#202212

Stability AI and DeepFloyd announce DeepFloyd IF (26/Apr/2023)

We introduce DeepFloyd IF, a novel state-of-the-art open-source text-to-image model [using Diffusion and T5 LLM] with a high degree of photorealism and language understanding.

The name apparently comes from the song: Pink Floyd - If (wiki).

Look at the examples and read more: https://github.com/deep-floyd/IF

Try it yourself: https://huggingface.co/spaces/DeepFloyd/IF

Here’s my first test… it passed the ‘text’ part!

DALL-E 2 used for Vogue cover for May (28/Apr/2023)

Read more (translated from Italian to English).

Source: From the original in Italian.

Cruise opens 200 autonomous robotaxis 24/7 anywhere in SF (26/Apr/2023)

This is a massive milestone for Cruise, and puts it slightly ahead of Waymo because there are no limits on where the Cruise driverless car can go (touted as ‘Level 4/5’ by many). The robotaxis are now able to drive around all of San Francisco, California, but access is limited to Cruise employees only for a bit longer.

Read/watch more: https://threadreaderapp.com/thread/1650845475195559939.html

The latest futuristic (and boxy) Cruise Origin vehicle—like the Didi Neuron—is coming soon:

Policy

US Congress begins using ChatGPT with 40x licenses (26/Apr/2023)

A few months ago in The Memo 27/Jan/2023 edition, we talked about ChatGPT being used to craft two speeches and two bills for the US government. Now, Congress has implemented ChatGPT for their new AI working group.

The House recently created a new AI working group for staff to test and share new AI tools in the congressional office environment and now the House of Representatives’ digital service has obtained 40 licenses for ChatGPT Plus, which were distributed earlier this month…

“Everything from making it easier to come [up] with ideas, to summarizing information, to draft letters or documents and handle some aspects of constituent engagement. Ultimately it will allow Congressional staff to scale up more quickly regarding the demands placed on them,” said Schuman, who has played a key role in drafting and enacting tech and accountability related legislation in Congress including the DATA Act, FOIA modernization, and dozens of House rules changes.

Read more: https://fedscoop.com/congress-gets-40-chatgpt-plus-licenses/

Palantir’s new LLM platform using various open-source models (26/Apr/2023)

Palantir's solutions are deployed across nearly every Army mission area, ensuring data is accessible across all echelons for fast, agile decision-making that allows the warfighter to out-think and out-pace the adversary. (via Palantir)

When I hear others say that the US government must have LLM technology beyond what is currently out there from Google and OpenAI, I shake my head. Until recently, they really didn’t have much; they had been caught out. Peter Thiel’s company, Palantir, has integrated a bunch of open-source models into a platform called ‘AIP’ for defence. I wonder if that fits into the original model licenses. It almost definitely does not fit into the spirit of the original model licenses. The models shown in the military platform are:

EleutherAI GPT-J 6B.

Google FLAN-T5 XL 3B.

EleutherAI GPT-NeoX-20B.

Databricks Dolly 2.0 12B.

Expecting this video to be pulled, I have hosted a backup of Palantir’s video for The Memo readers here:

EU Draft AI Act will require dataset detail (28/Apr/2023)

In The Memo edition 15/Sep/2022, I described the EU’s overreach into AI policy as ‘a true abomination’, adding:

The EU is wasting time trying to regulate a revolution that is in its fledgling stages, hamstringing humanity in the process. Their first order of business is blocking open-source models, and limiting visibility of AI models outside of big corporations. I have nothing good to say, and nothing more to add.

(Sidenote: LAION this week 28/Apr/2023 published a letter to the EU addressing this and other concerns with the Draft AI Act.)

Well, it turns out that the EU has more to add—and good stuff!—as this week they asked for AI labs to detail their datasets. Changes were analyzed by the WSJ:

Under the new provisions being added to the EU’s AI bill, developers of generative AI models will have to publish a “sufficiently detailed summary” of the copyright materials they used as part of their creation, the draft says.

I have pushed for visibility in datasets for several years, noting in my Mar/2022 paper:

Notwithstanding proposed standards [Datasheets for Datasets Mar/2018 paper] for documentation of dataset composition and collection, nearly all major research labs have fallen behind in disclosing details of datasets used in model training…

Of particular concern is the rapid progress of verbose and anonymous output from powerful AI systems based on large language models, many of which have little documentation of dataset details.

Researchers are strongly encouraged to employ templates provided in the ‘Datasheet for Datasets’ paper highlighted, and to use best-practice papers (i.e. The Pile v1 paper, with token count) when documenting datasets. Metrics for dataset size (GB), token count (B), source, grouping, and other details should be fully documented and published.

As language models continue to evolve and penetrate all human lives more fully, it is useful, urgent, and necessary to ensure that dataset details are accessible, transparent, and understandable for all.

Read my full report from Mar/2022, What’s in my AI?

Brookings: The EU and U.S. diverge on AI regulation: A transatlantic comparison and steps to alignment (24/Apr/2023)

I still lean on Brookings for thorough analysis of these policy issues.

The governance approaches of the EU and U.S. touch on a wide range of AI applications with international implications, including more sophisticated AI in consumer products; a proliferation of AI in regulated socioeconomic decisions; an expansion of AI in a wide variety of online platforms; and public-facing web-hosted AI systems, such as generative AI and foundation models.

…although the European debate over generative AI is new [Alan: even though the technology is decidedly not new], it is plausible that the EU will include some regulation of these models in the EU AI Act. This could potentially include quality standards, requirements to transfer information to third-party clients, and/or a risk management system for generative AI.

Back in 2020, I had some insight into the EU’s process in crafting the Draft AI Act. Speaking with a close colleague who was tasked with overseeing some of the documentation, I showed her the power of GPT-3. Her response was that the EU would consider that when it arrived (it had already arrived), and deal with it on a case-by-case basis. As you can tell, more often than not I have been dissatisfied with the approach by both the EU and the US.

Read more via The Brookings Institution.

Japan’s AI white paper (Apr/2023)

As I would expect of Japan, this is a really clean paper, very accessible, with recommendations outlined clearly. The paper is called ‘Japan's National Strategy in the New Era of AI’. Some of my highlights:

Accelerate applied research and development by accumulating domestic knowledge on foundation models through active use of overseas platforms.

In order to address the issue of data bias, government should work to increase the ratio of Japan-related training data by actively providing appropriate Japanese-language data for both domestic and foreign foundation models. In addition, the government should take the initiative in promoting the creation and utilization of a Japanese corpus (a structured database of Japanese sentences for bilingual translation).

Promote archiving public data held by the government and local governments so that the data can be utilized in the foundation model. Organize the rules and format for providing the data to third parties.

Deepen discussions on how AI governance should be implemented not only to manage risks for private operators, but also to encourage ingenuity and innovation; and if necessary, establish guidelines.

Download full paper in English (PDF, 26 pages).

Download visual/summary version in English (PDF, 28 pages).

Toys to Play With

Microsoft Designer now open (29/Apr/2023)

Microsoft has removed the waitlist for Designer. You’ll still need a Microsoft or Skype login, but use is free. My tests showed this is slightly different to DALL-E 2 (the model under the hood), as Microsoft are trying to steer more towards publishing.

Try it (free, login): https://designer.microsoft.com/

Hugging Face releases HuggingChat 30B (Apr/2023)

Based on OpenAssistant, a fine-tuned version of the mid-sized LLaMA 30B (not the 65B version).

In my testing, it’s decidedly poor. Sorry, guys!

Try it (free, no login): https://huggingface.co/chat/

Stability AI releases StableVicuna 13B (28/Apr/2023)

StableVicuna is the first large-scale open-source chatbot trained via reinforcement learning from human feedback (RLHF). It is a further instruction fine-tuned and RLHF-trained version of Vicuna 1.0 13B, which is itself an instruction fine-tuned LLaMA 13B model!

Try it (free, no login): https://huggingface.co/spaces/CarperAI/StableVicuna

Read more: https://stability.ai/blog/stablevicuna-open-source-rlhf-chatbot

RunwayML releases iPhone app (25/Apr/2023)

Rather than trying to describe this model and app, watch the incredible trailer:

Download now: https://apps.apple.com/app/apple-store/id1665024375

Unrecord: Unreal Engine 5 gameplay (Apr/2023)

‘Unreal Engine 5 is just insane. This isn’t real. This is a video game being made by an indie dev.’

Video:

Download game soon: https://store.steampowered.com/app/2381520/Unrecord/

Tiny language model (Apr/2023)

Tiny Language Model (TLM) is a functional language model based on a small neural network that runs in your browser. It has the capability to learn and generate responses based on a six word customizable vocabulary. While very limited, it can offer insights into vastly more complex language models like ChatGPT.

Try it (free, no login): https://tinylanguagemodel.com/
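For a feel of what a six-word language model can and cannot do, here is a toy sketch. To be clear, this is not how TLM works: TLM uses a small neural network, whereas this example swaps in a simple count-based bigram model (it predicts the next word from the previous one). The vocabulary and training sentences are made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical six-word vocabulary, chosen only for this example.
VOCAB = ["the", "cat", "dog", "sees", "a", "bird"]


def train_bigrams(sentences: list[str]) -> dict:
    """Count how often each vocabulary word follows another."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            if prev in VOCAB and nxt in VOCAB:
                counts[prev][nxt] += 1
    return counts


def generate(counts: dict, start: str, max_words: int = 5) -> str:
    """Greedily extend from start, always picking the most frequent next word."""
    words = [start]
    while len(words) < max_words and counts[words[-1]]:
        words.append(counts[words[-1]].most_common(1)[0][0])
    return " ".join(words)


counts = train_bigrams([
    "the cat sees a bird",
    "the cat sees the dog",
    "a dog sees the cat",
])
print(generate(counts, "the"))
```

Even at this scale the basic loop is the same as in much larger models: learn statistics from text, then repeatedly predict the next word.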

Forefront.ai’s new chat (21/Apr/2023)

This new chat interface by my friends at Forefront uses GPT-3.5 and GPT-4, plus some other models.

Try it (free, Google login): https://chat.forefront.ai/

Read the thread: https://threadreaderapp.com/thread/1649429139907137540.html

GrammarlyGO (Apr/2023)

Grammarly has added even more AI to their writing platform.

Take a look: https://www.grammarly.com/grammarlygo

Nat.dev alternative (Apr/2023)

While Nat.dev remains popular for seeing and playing with multiple model outputs at once, there are a few alternatives including this new app.

Try it: https://play.vercel.ai/r/mWjP5Dt

Phind.com (Apr/2023)

Phind.com (probably pronounced ‘find’!) is the latest LLM-powered search. ‘Optimized for developers and technical questions, Phind instantly answers questions with detailed explanations and relevant code snippets from the web. Phind is powered by large AI language models. Unlike some other AI assistants, Phind pulls information from the internet and is always up to date.’

I have a few standard prompts that I try for new models and platforms, and this platform seems to be giving the best results of all available LLM-powered search/retrieval platforms right now.

Test it yourself (free, no login): https://www.phind.com/

ChatGPT Prompt Engineering for Developers (29/Apr/2023)

This free course is by DeepLearning.AI in partnership with OpenAI. It is beginner-friendly, and only a basic understanding of Python is needed. But it is also suitable for advanced machine learning engineers wanting to approach the cutting edge of prompt engineering and use LLMs.

Jump in: https://www.deeplearning.ai/short-courses/chatgpt-prompt-engineering-for-developers/

Next

I’m currently wrapping up a GPT-4 online seminar for the Icelandic Center for Artificial Intelligence (ICAI) and the Govt of Iceland, the first country to be integrated into OpenAI’s GPT-4 (both language and culture). I hope to share that with you shortly.

Here’s my recent 2-hour private keynote/workshop that was part of a big event in Sydney.

Part I: Large language models (hands-on with ChatGPT for business).

Part II: AI art (hands-on with Midjourney v5 including live audience examples).

We’d also love to see you in our live discussion forum, where we have realtime AI updates as they happen.

All my very best,

Alan

LifeArchitect.ai
