To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 13/Feb/2025

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 88%

ASI: 0/50 (no expected movement until post-AGI)

OpenAI CEO (10/Feb/2025):

The world will not change all at once; it never does.

Life will go on mostly the same in the short run, and people in 2025 will mostly spend their time in the same way they did in 2024.

We will still fall in love, create families, get in fights online, hike in nature, etc.

But the future will be coming at us in a way that is impossible to ignore, and the long-term changes to our society and economy will be huge. We will find new things to do, new ways to be useful to each other, and new ways to compete, but they may not look very much like the jobs of today.

The early winner of The Who Moved My Cheese? AI Awards! for Feb/2025 is the majority of the British population. (‘The new poll shows that... 60% in favor of outlawing the development of “smarter-than-human” AI models.’)

Contents

The BIG Stuff (Cerebras 1,500tok/sec, next models, Stargate locations…)

The Interesting Stuff (OpenAI 3nm, OmniHuman-1, LLM-Reset, Gemini 2.0 Pro…)

Policy (GSAi gov chatbot, UN: African schools + AI, safety report, EU €200B…)

Toys to Play With (SYNTHETIC-1, OpenAI, Starlight, Mistral le chat…)

Flashback (Benchmark scores now vs two years ago…)

Next (Roundtable…)

In The Memo edition 11/Jan/2025, I included an empirical piece on brain atrophy due to LLM use. In the last edition (30/Jan/2025) we noted that researchers at the Swiss Business School had published a paper in the same area. Now, Microsoft and CMU have published another related paper, noting that ‘[AI can and does] result in the deterioration of cognitive faculties that ought to be preserved…leaving them atrophied and unprepared when the exceptions do arise.’

Download ‘The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers’ (PDF)

The BIG Stuff

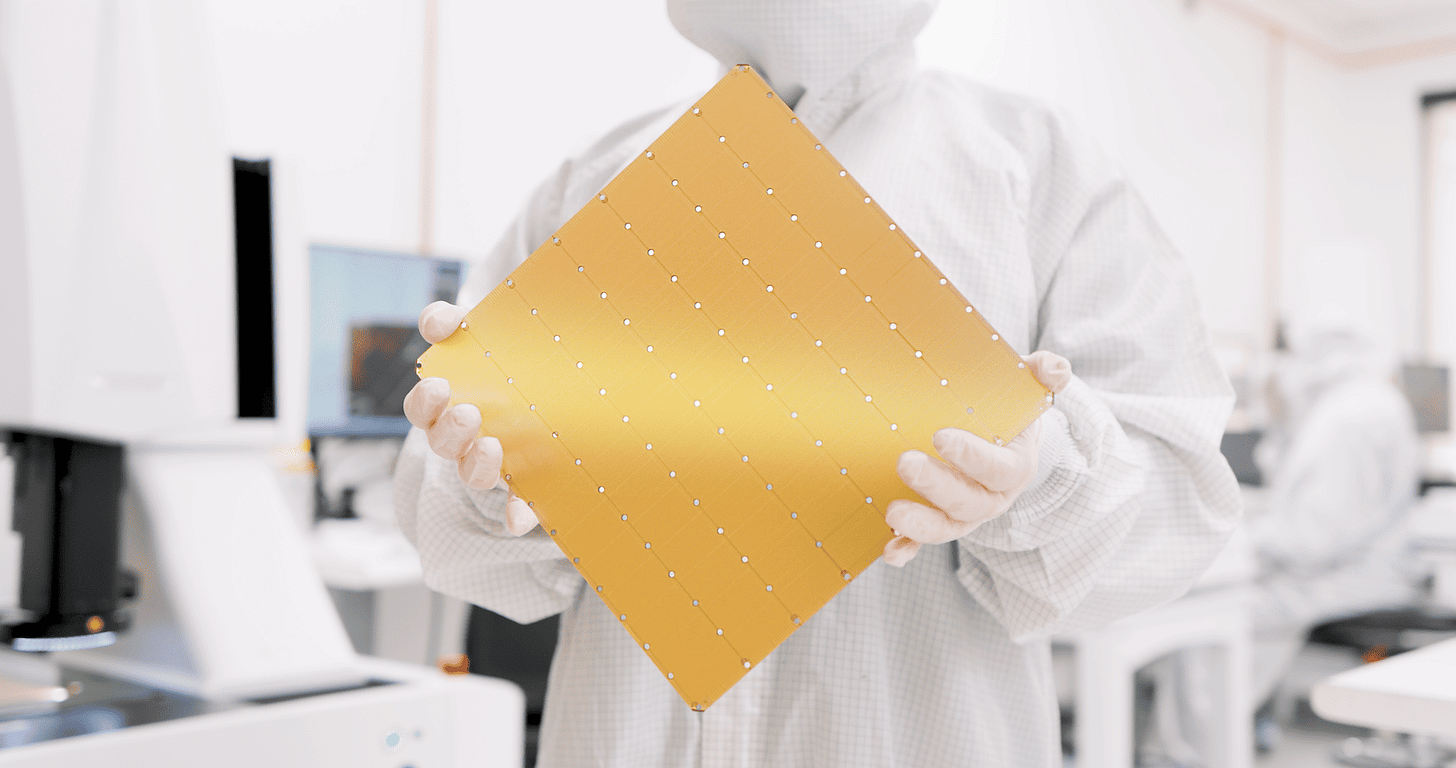

Cerebras launches world's fastest DeepSeek R1 Llama-70B inference (29/Jan/2025)

Cerebras has hosted DeepSeek R1 Llama-70B on its Cerebras Wafer Scale Engine, achieving a world-record speed of over 1,500 tokens per second, said to be 57 times faster than traditional GPU solutions. The 70B-parameter model is distilled from the larger 671B-parameter DeepSeek R1 model, inheriting its reasoning capabilities, and runs on Cerebras AI hardware with a focus on privacy and security. The platform's speed allows reasoning tasks that would take minutes on GPUs to be completed in seconds, offering significant benefits in coding, research, and other applications.

Here’s my analysis of this advance:

1. Human reading speed is 9 tokens per second (6 words per second).

1b. Human inner monologue (silent thinking) is 89 tokens per second (67 words per second).

2. On a good day, GPT-4o and Claude 3.5S can output 60 tokens per second.

3. On a bad day, GPT-4o and Claude 3.5S can output 10 tokens per second.

4. Cerebras DeepSeek R1 Llama-70B is outputting 1,500 tokens per second (1,125 words per second).

5. The average Harry Potter book is 150,000 words long, or about 200,000 tokens.

6. Cerebras DeepSeek R1 Llama-70B can write a completely new Harry Potter book from scratch in about two minutes (133 seconds).

This is incredibly jarring to watch in real time.

This is the new reality. Instant intelligence. Get used to it.
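The figures in the list above hang together through one conversion ratio, roughly 0.75 words per token (1,500 tokens ≈ 1,125 words; 150,000 words ≈ 200,000 tokens). The minimal Python sketch below assumes that ratio and the output speeds quoted above, and reproduces the book-writing times. The helper names are hypothetical, and the results are back-of-envelope estimates, not benchmarks.

```python
# Back-of-envelope throughput arithmetic using the figures quoted in The Memo.
# Assumption: about 0.75 words per token, the ratio implied by
# "1,500 tokens per second (1,125 words per second)".
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: float) -> float:
    """Convert a token count to an approximate word count."""
    return tokens * WORDS_PER_TOKEN


def words_to_tokens(words: float) -> float:
    """Convert a word count to an approximate token count."""
    return words / WORDS_PER_TOKEN


def seconds_to_generate(total_tokens: float, tokens_per_second: float) -> float:
    """Time to emit total_tokens at a constant output speed."""
    return total_tokens / tokens_per_second


BOOK_TOKENS = words_to_tokens(150_000)  # average Harry Potter book, about 200,000 tokens

# Output speeds (tokens per second) as quoted above.
SPEEDS = {
    "GPT-4o / Claude 3.5S (bad day)": 10,
    "GPT-4o / Claude 3.5S (good day)": 60,
    "Cerebras DeepSeek R1 Llama-70B": 1_500,
}

if __name__ == "__main__":
    for name, tps in SPEEDS.items():
        secs = seconds_to_generate(BOOK_TOKENS, tps)
        print(f"{name}: {secs:,.0f} s ({secs / 60:,.1f} min)")
```

At 1,500 tokens per second, the 200,000-token book takes about 133 seconds. At the quoted 60 and 10 tokens per second, the same book would take roughly 56 minutes and 5.5 hours respectively.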

Try this prompt: Write a completely new Harry Potter book called ‘Harry Potter and the disembodied intelligence’. Start on page 1. "Harry was nervous. Artificial general intelligence had arrived."

Try it (free, login): https://inference.cerebras.ai/

Watch my video where the model writes a (very) short version of the new book in real time:

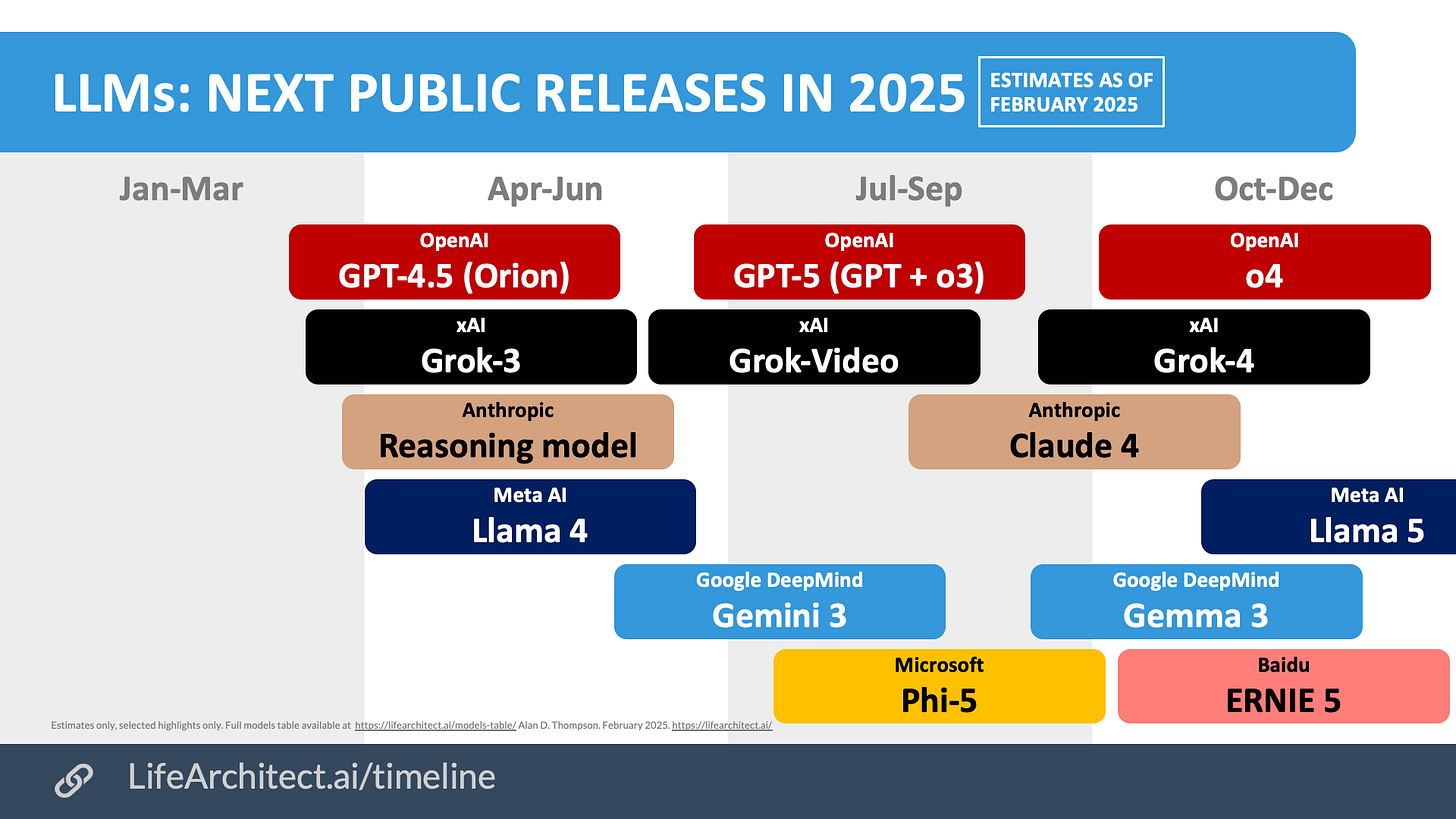

Exclusive: Next public models in 2025 (Feb/2025)

Most of these upcoming models are already known, but here they are all set out on one page. I don’t like making these kinds of estimates or predictions, and obviously AI labs have their own flexible internal schedules, but this should be fairly close to the releases we see this year from the Big 5.

OpenAI today mentioned a new roadmap for GPT and o releases (12/Feb/2025), saying:

We will next ship GPT-4.5, the model we called Orion internally, as our last non-chain-of-thought model.

After that, a top goal for us is to unify o-series models and GPT-series models by creating systems that can use all our tools, know when to think for a long time or not, and generally be useful for a very wide range of tasks.

In both ChatGPT and our API, we will release GPT-5 as a system that integrates a lot of our technology, including o3.

Similarweb: ChatGPT #6 website and #2 app (10/Feb/2025)

Here’s a little bit of history in the making. For global websites, ChatGPT now sits at #6, way ahead of Twitter and Reddit.

For apps, AI appears in position #1 (DeepSeek), #2 (ChatGPT), and #8 (Gemini).

The Interesting Stuff

Exclusive: LLM Reset/LLM Normalize (Feb/2025)

LLM-Reset is a system prompt (or developer prompt) designed to reset LLM response syntax by cleaning out overused words and poor syntax. Also known as LLM Normalize, LLM-Reset is offered as a free service to humanity.

LLM-Reset is inspired by Eric Meyer’s 2007 CSS-Reset. I’m surprised that no one seems to have offered this before, so here it is! LLM-Reset has the added benefit of bypassing ‘AI detectors’. I now use this system prompt myself across all frontier models.

Try it: https://lifearchitect.ai/llm-reset/

Can AI make us laugh? Comparing jokes generated by Witscript [GPT] and a human expert (19/Jan/2025)

This study assessed the comedic prowess of AI by comparing jokes generated by the Witscript AI system [using OpenAI GPT] to those written by a professional human joke writer. Remarkably, the AI-generated jokes elicited as much laughter from audiences as the human-crafted ones, indicating that advanced AI systems are now capable of producing humor on par with professional comedy writers. The research underscores the potential of AI in creating contextually integrated and conversational humor.

The human expert—a longtime joke writer for a well-known, US-based, late-night comedy/talk show—and Witscript [GPT], operated by JT, independently generated jokes based on the eight edited topics…

Experienced standup comics performed two comedy sets in front of live audiences in comedy venues in the US. The comics did not reveal the sources of the jokes and did not know which jokes had been written by AI. In each set, jokes based on all of the eight topics were performed, with half of the punchlines written by the human expert and half by Witscript [GPT]. Both the order of the topics and which punchline was selected for each topic were determined randomly and counterbalanced between sets. As a cover story, the comics explained that they would be performing some jokes written by a friend…

Our findings reveal that the AI-generated jokes got as much laughter as the human-crafted ones. This suggests that the best AI joke generators are now capable of composing original, conversational jokes on par with those of a professional human comedy writer.

Read the paper (PDF): ACL Anthology.
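The excerpt describes a counterbalanced design. There are eight topics and two sets, with half the punchlines in each set coming from each source, and both topic order and punchline source randomized. The sketch below shows one plausible reading of that procedure. It assumes that "counterbalanced" means each topic's human punchline appears in one set and its AI punchline in the other. The function name and topic labels are hypothetical, and the paper itself may have assigned punchlines differently.

```python
import random


def counterbalanced_sets(topics, seed=None):
    """Build two comedy sets under one reading of the study's design.

    Assumption (not taken from the paper's code): each topic's human punchline
    is performed in one set and its AI punchline in the other, so every set
    has half human and half AI punchlines, and topic order is shuffled per set.
    """
    if len(topics) % 2:
        raise ValueError("need an even number of topics for a half-and-half split")
    rng = random.Random(seed)

    # Randomly choose which half of the topics get the human punchline in set 1.
    shuffled = list(topics)
    rng.shuffle(shuffled)
    human_in_set_1 = set(shuffled[: len(shuffled) // 2])

    sets = []
    for set_index in range(2):
        order = list(topics)
        rng.shuffle(order)  # independent random topic order for each set
        performed = []
        for topic in order:
            # Set 1 uses the initial assignment; set 2 flips it (counterbalancing).
            use_human = (topic in human_in_set_1) == (set_index == 0)
            performed.append((topic, "human" if use_human else "AI"))
        sets.append(performed)
    return sets


if __name__ == "__main__":
    topics = [f"topic {i}" for i in range(1, 9)]  # hypothetical placeholders for the eight topics
    for n, performed in enumerate(counterbalanced_sets(topics, seed=42), start=1):
        print(f"Set {n}: {performed}")
```

Under this reading, every topic is heard once with each punchline across the two sets. That prevents an easy or hard topic from systematically favoring either source.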

Here’s the first example:

Joke 1 Topic: A new report says that NASA officials are worried about a leak on the International Space Station.

Human: Will they fix it? Naw, even in space, landlords don't fix leaks. "But Houston, we have a potty problem." That's on them, we subcontracted to Boeing. (Laughter loudness=40)

AI: They're especially concerned since the leak is coming from one of their astronauts' space diapers. (Laughter loudness=50)

Read the jokes on pages 7–8: https://aclanthology.org/2025.chum-1.8.pdf#page=7

Did you know? The Memo features in Apple’s recent AI paper, has been discussed on Joe Rogan’s podcast, and a trusted source says it is used by top brass at the White House. Across over 100 editions, The Memo continues to be the #1 AI advisory, informing 10,000+ full subscribers including Microsoft, Google, and Meta AI. Full subscribers have complete access to the entire 4,000 words of this edition!

OpenAI looking at 16 states for datacentre campuses tied to Stargate (6/Feb/2025)