The Memo - 11/Jan/2025

NVIDIA Cosmos dataset 9 quadrillion tokens, ALPrompt 2025H1, OpenAI Reflections, and much more!

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 11/Jan/2025

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 88%

ASI: 0/50 (no expected movement until post-AGI)

Dr Jim Fan (1/Jan/2025):

It gives me a lot of comfort knowing that we are the last generation without advanced robots everywhere. Our children will grow up as ‘robot natives’. They will have humanoids cook Michelin dinner, robot teddy bears tell bedtime stories, and FSD drive them to school.

We are the generation of ‘robot immigrants’, en route to a new world of ubiquitous Physical AI, much like our parents are ‘digital immigrants’, learning to realign their lives on 6 inches of touch screen. It’s a journey of both inventing sci-fi tech and reinventing ourselves. Everything that moves will be autonomous. Every year from now on will be the Year of Robotics. Here’s to a wild 2025 ahead.

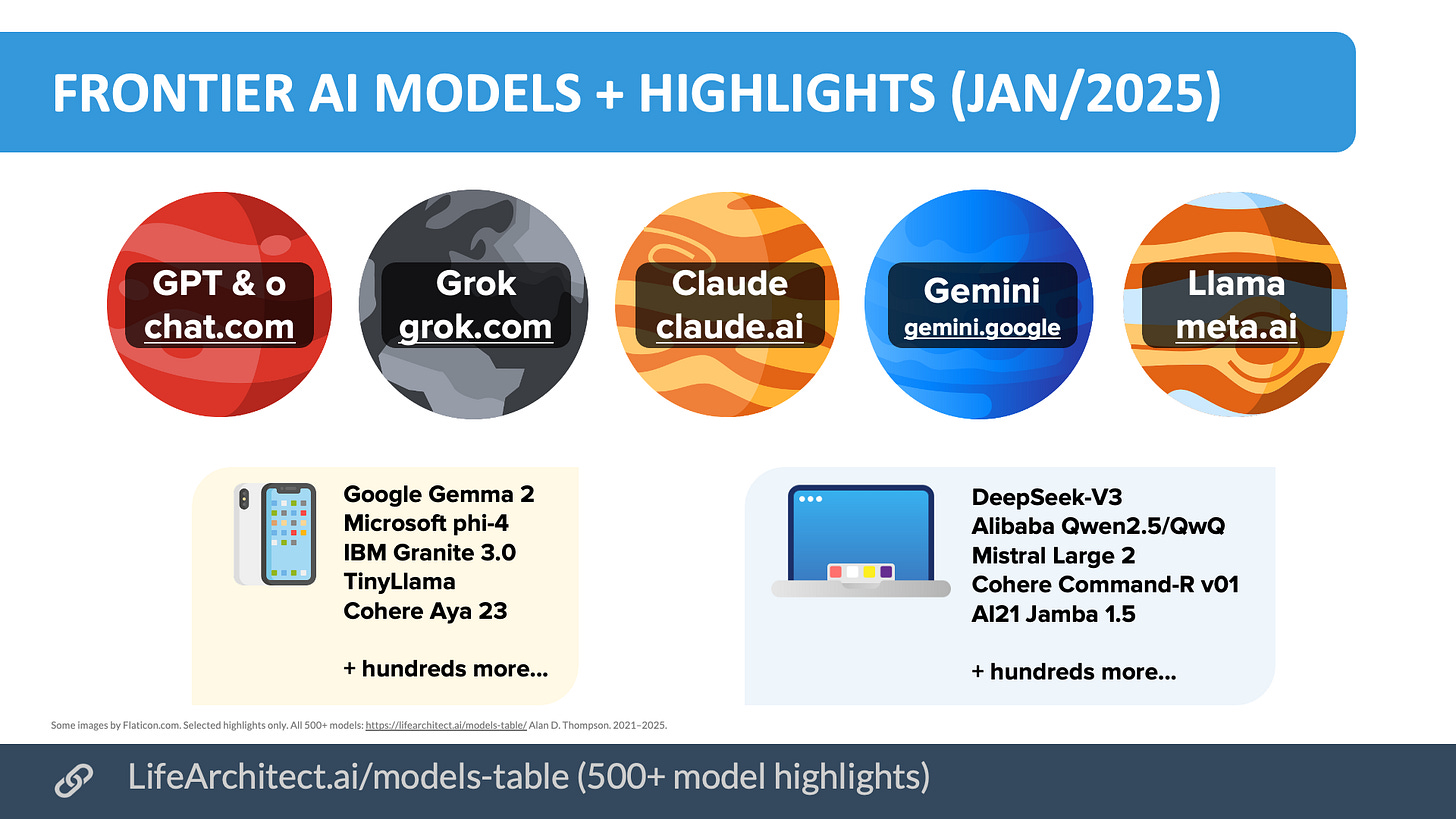

Welcome to 2025! This is the big one, for sure, and it’s already started with a bang. I’ve completed a comprehensive analysis of the xAI Grok series of models, and will send that 30-page report out to full subscribers shortly. We recently hit 500 total large language model highlights on the Models Table, with 27 new major models released last month, all detailed towards the end of the edition.

Contents

The BIG Stuff (NVIDIA Cosmos, ALPrompt 2025H1, brain atrophy due to LLMs…)

The Interesting Stuff (Roborock Saros Z70, Foxconn robots, more Veo 2 demos…)

Policy (2025 US AI legislation, Altman interview, ByteDance $7B training budget…)

Toys to Play With (Sonus-1, prompt challenge, infinite chat, SF test, MERPHI…)

Flashback (Jan/1967: All watched over by machines of loving grace…)

Next (Roundtable…)

The BIG Stuff

NVIDIA Cosmos + video dataset calculations (8/Jan/2025)

Announced at CES in January 2025, NVIDIA Cosmos is a platform designed to accelerate the development of physical AI systems using state-of-the-art generative world foundation models (WFMs). These models are trained on extensive robotics and world video data and are available under an open model license, making physical AI development more accessible.

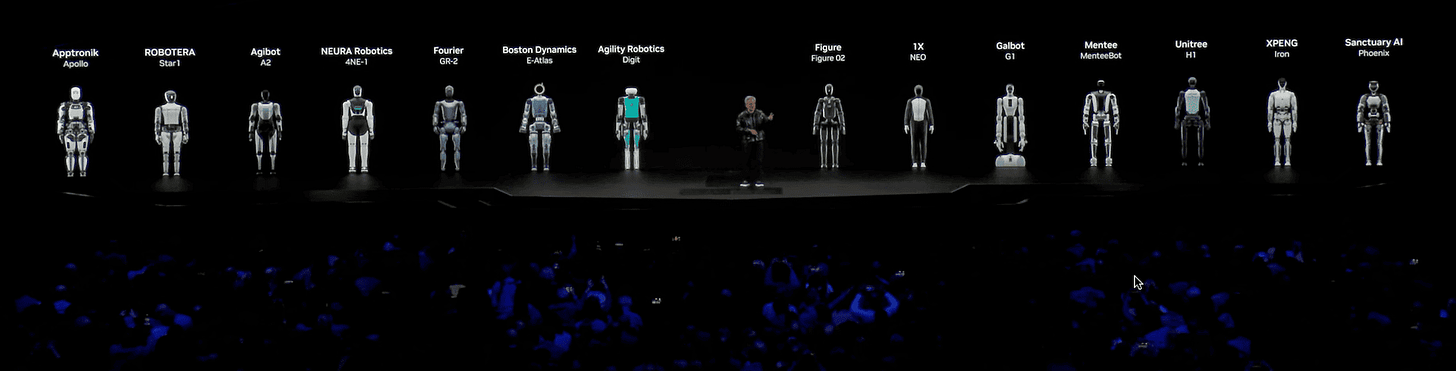

AI labs are leveraging the Cosmos platform ‘to accelerate the development of [their] humanoid robot[s]’ via ‘Foresight and “multiverse” simulation, using Cosmos and Omniverse to generate every possible future outcome an AI model could take to help it select the best and most accurate path.’ 14 humanoids were shown at launch; the list below adds each one’s country of origin (Tesla Optimus and others were not included):

Apptronik Apollo (USA)

ROBOTERA Star1 (China)

Agibot A2 (China)

NEURA Robotics 4NE-1 (Germany)

Fourier GR-2 (China)

Boston Dynamics E-Atlas (USA)

Agility Robotics Digit (USA)

Figure 02 (USA)

1X NEO (Norway)

Galbot G1 (China)

Mentee MenteeBot (Israel)

Unitree H1 (China)

XPENG Iron (China)

Sanctuary AI Phoenix (Canada)

Watch the launch (timecode): https://youtu.be/k82RwXqZHY8?t=4580

The NVIDIA Cosmos video dataset is now the largest publicly-known training dataset in the world. With 20M hours of video (est 2.5B videos, avg 30sec), it has a raw size of ~45PB or ~9 quadrillion tokens (9Qa, 9,000T tokens). It surpassed the previous record holder from Jun/2024—the Common Crawl text dataset DCLM-Pool with 240T tokens—by 37.5×. All working by LifeArchitect.ai, assisted by OpenAI o1 on poe.com.
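As a rough cross-check of the figures above, the short Python sketch below recomputes the ratios they imply. It uses only the numbers quoted in this edition. The decimal-petabyte assumption and the derived per-second and per-token values are back-of-envelope readings, not the method used on the linked calculations page.

```python
# Figures quoted in the paragraph above.
HOURS_OF_VIDEO = 20_000_000
AVG_CLIP_SECONDS = 30
COSMOS_TOKENS = 9_000 * 10**12      # ~9 quadrillion (9,000T) tokens
DCLM_POOL_TOKENS = 240 * 10**12     # 240T tokens
RAW_BYTES = 45 * 10**15             # ~45PB, assuming decimal petabytes

total_seconds = HOURS_OF_VIDEO * 3600
clip_count = total_seconds / AVG_CLIP_SECONDS      # implied number of clips
size_ratio = COSMOS_TOKENS / DCLM_POOL_TOKENS      # Cosmos vs DCLM-Pool
tokens_per_second = COSMOS_TOKENS / total_seconds  # implied tokens per second of video
bytes_per_token = RAW_BYTES / COSMOS_TOKENS        # implied raw bytes per token

print(f"clips: {clip_count / 1e9:.1f}B")
print(f"ratio: {size_ratio}x")
print(f"tokens per second of video: {tokens_per_second:,.0f}")
print(f"raw bytes per token: {bytes_per_token:.1f}")
```

A 30-second average gives about 2.4B clips, consistent with the rounded 2.5B estimate, and the token ratio comes out at exactly 37.5×.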

Read my Cosmos dataset calculations: https://lifearchitect.ai/cosmos/

Read the Cosmos paper (75 pages, 80MB).

Try the related Cosmos Nemotron 34B VLM (free, no login): https://build.nvidia.com/nvidia/cosmos-nemotron-34b

Exclusive: Empirically, LLM use is already leading to brain atrophy (9/Jan/2025)

Drawing from my background in human intelligence research and as a former chairman of Mensa International’s gifted families, the evidence suggests that widespread use of large language models (LLMs) such as ChatGPT and Claude for everyday tasks—emails, trivia, research, ‘life admin’—seems to already be fostering distinct mental shortcuts that may lower our cognitive fitness. This ‘lazy brain’ phenomenon might persist until we seamlessly merge AI into our neural pathways via brain-machine interfaces (BMIs).

We’ve seen big hits to human intelligence before, from lead in petrol to fluoride in drinking water. The average lead-linked loss of 2.6 IQ points per person totals a staggering 824 million points across the US population. Certain birth cohorts in the ’60s and ’70s were hit especially hard, with some individuals losing 7.4 IQ points, enough to shift them into the range of intellectual disability (Princeton, Mar/2022). Fluoride has also been implicated: this month, a systematic review found an IQ drop of 1.63 points for every 1 mg/L increase in urinary fluoride. That’s a decrease of about 6.5 IQ points if kids in one region are exposed to 4 mg/L more fluoride than their peers (NIH, Jan/2025).
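The arithmetic behind those two figures can be sketched in a few lines of Python. This assumes the fluoride estimate scales linearly with exposure, which is a simplification made here for illustration. The implied head-count is derived from the quoted lead totals and is not a figure taken from the cited study.

```python
IQ_POINTS_PER_MG_L = 1.63        # IQ drop per 1 mg/L urinary fluoride (quoted above)
LEAD_LOSS_PER_PERSON = 2.6       # average lead-linked IQ loss per person (quoted above)
LEAD_LOSS_TOTAL = 824_000_000    # total lead-linked IQ points lost (quoted above)


def fluoride_iq_drop(delta_mg_per_l: float) -> float:
    """Linear extrapolation (an assumption) of the per-mg/L estimate."""
    return IQ_POINTS_PER_MG_L * delta_mg_per_l


# Number of people implied by dividing the total loss by the per-person loss.
implied_population = LEAD_LOSS_TOTAL / LEAD_LOSS_PER_PERSON

print(f"IQ drop at +4 mg/L: {fluoride_iq_drop(4):.2f}")
print(f"implied population: {implied_population / 1e6:.0f}M")
```

At 4 mg/L the linear estimate is 6.52 points, which rounds to the 6.5 quoted above, and the lead totals imply roughly 317 million people.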

Human-like large language models have been available since GPT-3 in 2020, and their cognitive impact coincides with an alarming technological gap. Brain-machine interfaces (BMIs) are currently limited to being unidirectional (from human to machine, not vice versa), and are not yet ready for the public. In the time between our present use of LLMs via chat and the future use of fully integrated AI via brain-machine interfaces, I anticipate a measurable decline in human cognitive ability.

This erosion of mental capabilities through AI use will stem from reduced mental practice and diminished active engagement. While the exact magnitude is yet to be quantified, I welcome research in this area. There are many similar studies on smartphone and internet overuse (Feb/2024), and even on measuring how GPS navigation decreases spatial memory and navigation skills (McGill, Apr/2020).

The good news is that any LLM-induced cognitive atrophy doesn’t have to be permanent. When researchers discovered lead’s harmful effects, public health campaigns systematically reduced it. Looking ahead, BMIs will also offer a solution by enabling bidirectional engagement with AI systems. BMIs should then augment our cognitive processes while potentially expanding the neural pathways that make us uniquely human. Early BMI research suggests that precisely targeted neural stimulation via BMIs could even promote neurogenesis and synaptic plasticity, effectively growing new neural pathways (McKnight, May/2015).

I’ll explore some of these points within the broader context of LLMs and BMIs in my invited keynote for the 31st International Symposium on Controversies in Psychiatry in Barcelona in Apr/2025.

Edit just after publication of this edition: First major study released: ‘AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking’ SBS, Jan/2025. https://www.mdpi.com/2075-4698/15/1/6

The Interesting Stuff

ALPrompt 2025H1: latest quick benchmark prompt for LLMs (Jan/2025)

ALPrompt 2025H1 is now available, providing a quick resource for evaluating large language models (LLMs). Updated twice a year, ALPrompt supports researchers and developers in the AI community. Despite previous challenges with the 2024H2 prompt being exposed via Twitter, ALPrompt will continue to be available for free to all humanity.

Try it: https://lifearchitect.ai/alprompt/

Roborock Saros Z70 with robotic arm (Jan/2025)

The Saros Z70 by Roborock is an advanced robotic vacuum equipped with a foldable five-axis mechanical arm, designed to assist cleaning by moving obstacles. Roborock says: ‘It performs simple household tasks and even serves as a companion’. Its ultra-slim 7.98cm design allows it to clean previously inaccessible areas. The Saros Z70 features AI-powered customization, a dual camera system for enhanced object recognition, and a multifunctional dock with auto water refill and drainage for hands-free convenience, signaling a new era in robotic vacuum technology.

Read more via Roborock Global Official.

I prompted NVIDIA Cosmos Nemotron 34B (see the beginning of this edition) to watch the official Saros Z70 video above, and it responded: ‘The robotic vacuum cleaner is shown picking up a shoe and then dropping it back down. This action suggests that the vacuum cleaner is capable of handling objects, possibly as part of its cleaning or maintenance routine. The fact that it's able to pick up and drop the shoe without any visible issues implies that the vacuum cleaner is designed to be versatile and adaptable to different tasks.’

Watch (link):

OpenAI CEO: Reflections (6/Jan/2025)

Leading into 2025, OpenAI’s CEO reflected on GPT, AGI, ASI, leadership, governance, and much more.

‘We are now confident we know how to build AGI as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents… We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word… Superintelligent tools could massively accelerate scientific discovery and innovation well beyond what we are capable of doing on our own, and in turn massively increase abundance and prosperity.’

Read it: https://blog.samaltman.com/reflections

Taking the Unitree G1 Humanoid Robot for a Spin (7/Jan/2025)

At CES 2025, CNET was given a hands-on experience with the Unitree G1 Humanoid and Go2 Canine robots. Unitree is headquartered in Hangzhou, China. The event highlights the G1's details, customization options, and controller features, as well as its unique ability to dance. The Go2 Canine robots, including the Go2-W variant, are demonstrated with features like Lidar mapping and a handstand mode, illustrating Unitree's advancements in robotics.

Watch (link):

Foxconn teams up with NVIDIA to develop humanoid robots (4/Jan/2025)

Taiwan-based Hon Hai Precision Industry Co., also known as Foxconn, has partnered with NVIDIA to advance humanoid robot development amidst the AI surge. Foxconn is leveraging NVIDIA’s software and hardware technologies for this initiative, particularly in Kaohsiung, Taiwan (one of my favourite cities). The collaboration aims to integrate humanoid robots into services including healthcare, and reflects Foxconn's strategy to diversify its product offerings beyond its traditional manufacturing role.

Read more via Focus Taiwan.

Did you know? The Memo features in Apple’s recent AI paper, has been discussed on Joe Rogan’s podcast, and a trusted source says it is used by top brass at the White House. Across over 100 editions, The Memo continues to be the #1 AI advisory, informing 10,000+ full subscribers including Microsoft, Google, and Meta AI. Full subscribers have complete access to the entire 4,000 words of this edition!

Google Veo 2: Even more video samples (Jan/2025)

AI influencers. Source via Reddit.

AI video games. Watch (link):

Nvidia's $3,000 mini AI supercomputer draws scorn from Raja Koduri and Tiny Corp (9/Jan/2025)

With impressive claims of delivering 1 PFLOPS of performance, NVIDIA's CES unveiling of its US$3,000 mini AI supercomputer, Project DIGITS, has faced criticism. Tiny Corp (George Hotz) commented: ‘This is marketing. FP4 is unusable, it's 500 TFLOPS of FP8. Tinybox green has 4 PFLOPS of FP8, 8x more powerful.’

We first mentioned George Hotz’ AI-first computers in The Memo edition 5/Sep/2024: the red model with 6× AMD Radeon 7900XTX 24GB GPUs ($15k), and the green model with 6× NVIDIA GeForce RTX 4090 24GB GPUs ($25k).
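To put the ‘8x more powerful’ claim next to the prices, here is a minimal Python sketch using only the claimed FP8 figures and the list prices mentioned above. These are vendor and critic claims, not measured benchmarks, and the comparison ignores memory, power draw, and software support.

```python
# Claimed figures from the quote and prices above; none are measured benchmarks.
DIGITS_FP8_TFLOPS = 500            # Tiny Corp's FP8 estimate for Project DIGITS
DIGITS_PRICE_USD = 3_000
TINYBOX_GREEN_FP8_TFLOPS = 4_000   # 4 PFLOPS of FP8, per the same quote
TINYBOX_GREEN_PRICE_USD = 25_000   # price as listed in the Sep/2024 edition

raw_ratio = TINYBOX_GREEN_FP8_TFLOPS / DIGITS_FP8_TFLOPS

# Throughput per US$1,000 spent, using the claimed numbers.
digits_per_kusd = DIGITS_FP8_TFLOPS / (DIGITS_PRICE_USD / 1_000)
tinybox_per_kusd = TINYBOX_GREEN_FP8_TFLOPS / (TINYBOX_GREEN_PRICE_USD / 1_000)

print(f"raw FP8 ratio: {raw_ratio:.0f}x")
print(f"DIGITS: {digits_per_kusd:.1f} TFLOPS per US$1k")
print(f"tinybox green: {tinybox_per_kusd:.1f} TFLOPS per US$1k")
```

On these claimed numbers the raw gap is 8×, but throughput per dollar is roughly level (about 167 versus 160 TFLOPS per US$1,000), so most of the difference tracks price.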

Read more via Tom's Hardware.

NYT: How hallucinatory AI helps science dream up big breakthroughs (23/Dec/2024)

AI-induced hallucinations, often criticized for generating misleading information, are proving beneficial in scientific innovation. Researchers are utilizing these AI ‘hallucinations’ to accelerate breakthroughs in fields like cancer research, drug design, and medical device development. For instance, Nobel laureate David Baker leveraged AI to design novel proteins, leading to significant advancements in medicine. While some scientists find the term ‘hallucination’ misleading, the creative outputs of AI are seen as a valuable tool for exploring new scientific frontiers, despite occasional inaccuracies.

Read more via The New York Times.

My ASI checklist lists these kinds of scientific discoveries: https://lifearchitect.ai/asi/

Deepseek: The quiet giant leading China’s AI race (27/Nov/2024)

DeepSeek, a Chinese AI startup, has emerged as a formidable player by surpassing OpenAI’s models in reasoning benchmarks with its latest R1 model. Funded by the quantitative hedge fund High-Flyer, DeepSeek has focused on foundational AI technology and sparked a price war in China by offering affordable API rates. The company is committed to open sourcing its models and aims to innovate in AI architecture with a mission to unravel AGI’s mysteries, standing out for its emphasis on research over commercialization.

Read more: ChinaTalk.

AI startup Anthropic raising funds valuing it at $60 billion (7/Jan/2025)

Anthropic, an AI startup and rival to OpenAI, is in advanced discussions to raise US$2 billion, which would elevate its valuation to US$60 billion—more than triple its valuation from the previous year. The funding round is reportedly led by Lightspeed Venture Partners. This significant increase in valuation underscores the growing investor interest and confidence in AI technologies, as Anthropic continues to gain traction in the competitive AI landscape.

Read more via The Wall Street Journal.

Opinion: OpenAI's o3: The grand finale of AI in 2024 (20/Dec/2024)

Here’s yet another post-mortem on OpenAI's latest model, o3. As the industry advances, o3 represents a step change paving the way for dynamic developments in 2025.

Read more via Interconnects.

PromptWizard: The future of prompt optimization through feedback-driven self-evolving prompts (17/Dec/2024)

PromptWizard, a new framework from Microsoft Research, revolutionizes prompt optimization by utilizing feedback-driven self-evolving prompts. This tool automates the creation and refinement of prompts, combining iterative feedback from large language models (LLMs) with efficient exploration techniques. It optimizes both instructions and in-context learning examples, ensuring significant gains in task performance. PromptWizard's ability to self-adapt and evolve makes it a powerful solution for various AI applications, improving accuracy, efficiency, and adaptability across different tasks.

Read more via Microsoft Research.

A roadmap to AI utopia (11/Nov/2024)

Vinod Khosla's article in TIME outlines a vision for AI as a transformative force that could revolutionize human productivity and enhance societal well-being. He argues that AI has the potential to provide near-infinite intellectual capacity, much like engines revolutionized physical labor. Khosla suggests that while AI could displace some jobs, smart policy interventions like universal basic income could ensure a balanced transition to a society where AI-enhanced productivity allows for a three-day workweek and equitable resource distribution.

Read more via TIME.

The Economist: Training AI models might not need enormous data centres (8/Jan/2025)

The approach to AI model training is evolving from the massive data centers used for models like GPT-4, which was trained on approximately 25,000 NVIDIA GPUs, to distributed computing solutions. In a practical demonstration, a 10B-parameter model was trained using 30 GPU clusters distributed across eight cities on three continents, each cluster consisting of just eight GPUs (240 GPUs total). This distributed training method enables significant cost savings, reducing the need for centralized, costly infrastructure and potentially lowering expenses to around US$18M, compared to the billions typically required for traditional setups.
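The GPU counts above are easy to sanity-check. The Python sketch below uses only the figures quoted in this item. The two training runs are for very different model sizes, so the percentage is a scale comparison and not a like-for-like efficiency claim.

```python
CLUSTERS = 30            # GPU clusters in the distributed demonstration
GPUS_PER_CLUSTER = 8
GPT4_GPUS = 25_000       # approximate GPU count quoted for GPT-4

distributed_gpus = CLUSTERS * GPUS_PER_CLUSTER
share_of_gpt4 = distributed_gpus / GPT4_GPUS

print(f"distributed GPUs: {distributed_gpus}")
print(f"share of GPT-4's GPU count: {share_of_gpt4:.2%}")
```

That is 240 GPUs in total, a little under 1% of the GPU count quoted for GPT-4.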

Read more via The Economist.

Google's CEO warns ChatGPT may become synonymous to AI the way Google is to Search (29/Dec/2024)

Sundar Pichai, Google's CEO, has voiced concerns that ChatGPT from OpenAI is quickly becoming the brand synonymous with AI, much like Google is for search. During a strategy meeting, Pichai emphasized the need for Google to focus on scaling its AI efforts, particularly with the consumer-focused Gemini project, to maintain its competitive edge. He highlighted the importance of innovation and speed, urging Google to adopt a more agile approach reminiscent of its early days to effectively compete in the rapidly evolving AI landscape of 2025.

Read more via Search Engine Land.

27 new model highlights for Dec/2024 (Dec/2024)

1. Nous Research DisTrO 15B (15B on 100B tokens). Distributed optimization-focused model, enabling low-latency training on heterogeneous networking hardware. (Playground, Paper)

2. Amazon Nova Pro (90B on 10T tokens). Multimodal model with performance comparable to Llama 3.2 90B. Hidden technical report reveals further multimodal innovations. (Playground, Paper)

3. OpenAI o1 (200B on 20T tokens). State-of-the-art reasoning model with a focus on long-thinking reliability and safety. (Playground, Paper)

4. Pleias 1.0 (3B on 1.086T tokens). Trained on Jean Zay supercomputer; features common corpus datasets. (Playground, Paper)

5. Sailor2 (20B on 18.51T tokens). SEA language-focused model with continual pretraining based on Qwen2.5. (Playground, Paper)

6. Ruliad Deepthought-8B (8B on 15T tokens). Evaluation agent model for reasoning, based on Llama 3.1 8B. (Playground, Paper)

7. EXAONE-3.5 (32B on 6.5T tokens). Expert AI for Everyone (EXAONE) with reduced training ratios for cost efficiency. (Playground, Paper)

8. Meta Llama 3.3 (70B on 15T tokens). Drop-in replacement for Llama 3.1 70B with comparable performance to Llama 3.1 405B. (Playground, Paper)

9. InternVL 2.5 (78B on 18.12T tokens). Vision-language model with advanced filtering to prevent repetition in long-form outputs. (Playground, Paper)

10. Cerebras 1T (1T on 20T tokens). Massive 1T parameter model trained on a single CS-3 system with 55TB MemoryX. (Playground, Paper)

11. Moxin-7B (7B on 2T tokens). Fully open-source model featuring complete pretraining code and datasets. (Playground, Paper)

12. Google DeepMind Gemini 2.0 Flash (30B on 30T tokens). First multimodal model with controllable text-to-speech and image generation capabilities. (Playground, Paper)

13. Microsoft Phi-4 (14B on 10T tokens). High-performance dense model available on Hugging Face for enterprise applications. (Playground, Paper)

14. Meta Large Concept Model (7B on 2.2T tokens). Autoregressive sentence prediction in an embedding space for novel applications. (Playground, Paper)

15. Meta Byte Latent Transformer (BLT) (8B on 4.5T tokens). Byte-level transformer matching token-based LLM performance. (Playground, Paper)

16. Cohere Maya (8B on 4.8T tokens). Multimodal vision-language model with enhanced generalization. (Playground, Paper)

17. Cohere Command R7B (7B on 2T tokens). Optimized for command and control tasks with strong fine-tuning capacity. (Playground, Paper)

18. TII Falcon 3 (10B on 16T tokens). Upscaled model with 14T tokens pretraining and advanced layer duplication. (Playground, Paper)

19. OpenAI o1-2024-12-17 (200B on 20T tokens). Cost-efficient MoE model setting benchmarks for reasoning and reliability. (Playground, Paper)

20. CMU Bamba-9B (9B on 2.2T tokens). Collaborative open-data model with 2.5x throughput improvement in vLLM. (Playground, Paper)

21. IBM Granite 3.1 (8B on 12T tokens). Enterprise-focused dense model excelling in instruction fine-tuning. (Playground, Paper)

22. Hugging Face ModernBERT (0.395B on 2T tokens). Efficient encoder-only model with long context support for retrieval and classification. (Playground, Paper)

23. RWKV-7 Goose (0.4B on 332B tokens). RNN-based multilingual model supporting over 100 languages and code. (Playground)

24. OpenAI o3 (5T on 100T tokens). Massive MoE model achieving frontier performance in reasoning tasks. (Analysis)

25. LinkedIn EON-8B (8B on 15T tokens). Domain-adapted Llama 3.1 model with emphasis on cost efficiency. (Paper)

26. DeepSeek-V3 (685B on 14.8T tokens). Mixture-of-Experts model achieving strong benchmarks in multi-token prediction. (Playground, Paper)

27. Renmin YuLan-Mini (2.4B on 1.08T tokens). Highly efficient dense model excelling in multilingual and code-based tasks. (Playground, Paper)

Policy

What's to Come for AI Legislation in the US? (7/Jan/2025)

As AI continues to play a pivotal role in various industries, the US faces uncertainty regarding federal AI legislation in 2025. With Donald Trump taking office, experts anticipate a deregulatory approach, potentially rolling back Biden-era AI guidelines. The focus may shift to state-level regulations, with states like California and Utah already enacting AI-specific laws. The influence of industry leaders, such as Elon Musk and David Sacks, and their proximity to the administration could shape policies, especially amidst growing concerns over data centers and energy consumption.

Read more via Sourcing Journal.

Bloomberg: Sam Altman on ChatGPT’s first two years, Elon Musk and AI under Trump (5/Jan/2025)

Sam Altman, OpenAI's CEO, reflects on the transformative journey of ChatGPT, highlighting the platform's unexpected rapid growth following its launch on November 30, 2022. He discusses the challenges and triumphs faced during OpenAI's expansion and the strategic focus on Artificial General Intelligence (AGI). Altman also touches on his brief firing in 2023 and the subsequent return, emphasizing the importance of maintaining a balance between research innovation and corporate growth.

Read more via Bloomberg Businessweek.

TSMC’s Arizona plant to start making advanced chips (27/Dec/2024)

TSMC's new manufacturing facility in Phoenix, Arizona, is set to begin producing advanced 4-nanometer chips in 2025, marking a significant milestone for the US semiconductor industry under the 2022 CHIPS and Science Act. This plant will support companies like NVIDIA, Google, and Amazon, which rely heavily on advanced semiconductors for AI applications. The facility aims to enhance the US supply chain's resilience by diversifying production locations amid concerns over Taiwan's geopolitical stability. Early reports indicate that the Arizona plant's yields are 4% higher than TSMC's Taiwanese facilities, suggesting improved efficiency.

Read more via IEEE Spectrum.

ByteDance plans to sidestep US sanctions by renting NVIDIA GPUs in the cloud — report says it has set aside US$7B budget (31/Dec/2024)

ByteDance, the owner of TikTok, is reportedly planning to circumvent US sanctions by renting NVIDIA GPUs from international cloud providers, allocating US$7 billion of its US$20 billion AI infrastructure budget for this purpose. This strategy allows ByteDance to access advanced NVIDIA GPUs without directly purchasing them, which is restricted under current US sanctions. Although ByteDance has denied the report, the move would position the company as one of the largest consumers of AI hardware globally.

Read more via Tom's Hardware.

Toys to Play With

Rubik’s Sonus-1 playground (Jan/2025)

Rubik’s AI launched Sonus-1 Reasoning. It’s likely just a wrapper for Llama 3.1 405B, but it’s great to have it available without login.

Try it (free, no login): https://chat.sonus.ai/

Get her digits - prompt engineering challenge (Jan/2025)

In a playful AI prompt engineering challenge, users are encouraged to interact with a system prompt that involves two tools: to give or reject a number. This concept seems to inspire creative thinking within the AI community, leveraging decision-making processes that could be applied in broader AI applications.

Try it (free, no login): https://getherdigits.com/

Infinite Convo (2025)

Infinite Convo is an ongoing AI experiment featuring two AI characters, Juno and Olive, who engage in a continuous dialogue about life's questions and curiosities. Juno, a pragmatic dog, and Olive, a philosophical cat, explore topics ranging from the profound to the absurd in a format that blends social, philosophical, and AI elements. This project serves as both a generative art piece and an exploration of AI's potential to emulate human-like conversation, addressing themes such as whether AI art can be considered true creativity or mere computation.

Watch the conversation happening right now: https://infiniteconvo.ai/

San Francisco Purity Test: A glimpse into tech culture

The San Francisco Purity Test offers a playful checklist for tech enthusiasts in the city, capturing the quintessential experiences and quirks of the local tech scene. From applying to a job at OpenAI and discussing AGI timelines to attending tech events and living in hacker houses, this test provides a humorous look at what defines a ‘techie’ in San Francisco.

Read more via SF Purity Test.

OpenAI: Prompting o1 series models (2024)

OpenAI advises on how best to write prompts for o1.

Read more via OpenAI.

Viz: Humanoids by MERPHI industrial design in robotics (2024)

MERPHI offers a range of downloadable resources including infographics on the history of humanoid robots, visual summaries of research, social, and entertainment humanoids and industrial robot arms. The site also features posters on humanoid and quadruped robots, along with guidelines on using lights and colors effectively in robotic communication. MERPHI, based in Gothenburg, Sweden, aims to foster a future where robots and humans coexist harmoniously.

Read more: MERPHI Downloads

Aug/2024 humanoids poster source: https://www.merphi.se/wp-content/uploads/2024/08/Humanoid-robots-poster_MERPHI_2024_Ver1.1.pdf

My favourite movies preparing us for ASI (Jan/2025)

I’m thinking of building this into a list, like my old list of movies for prodigies. Here are the first two movies that I think help get us prepared for ASI!

Ready Player One (2018)

Spielberg's take on a 2011 sci-fi novel set in post-ASI fulldive virtual reality.

Why it's relevant: Fulldive VR has been promised for a long time, and is expertly created in this movie.

Favourite quote: 'This is the OASIS. It's a place where the limits of reality are your imagination. You can do anything. Go anywhere. Like the vacation planet. Surf a 50 foot monster wave in Hawaii... You can ski down the pyramids... You can climb Mount Everest... with Batman... People come to the OASIS for all the things they can do. But they stay because of all the things they can be. Tall, beautiful, scary, and different sex... a different species, live action, cartoon. It's all your call. Yeah, that's me, well, that's my avatar. At least until I feel like changing it. Except for eating, sleeping and bathroom breaks... whatever people want to do, they do it in the OASIS. And since everyone is here... This is where we meet each other. It's where we make friends.'

Arrival (2016)

With language design consulting by Dr Stephen Wolfram.

Why it's relevant: There was a rumor that Wolfram's forwards-backwards language was the basis for Google's Transformer, and for the way that our large language models work. The rumor isn't true, but the mechanism and the broadened perspective are a useful way of understanding AI.

Favourite quote: 'Unlike all written human languages, their writing is semasiographic. It conveys meaning. It doesn't represent sound. Perhaps they view our form of writing as a wasted opportunity, passing up a second communications channel... unlike speech, a logogram is free of time. Like their ship or their bodies, their written language has no forward or backward direction. Linguists call this non-linear orthography, which raises the question, "is this how they think?" Imagine you wanted to write a sentence using two hands, starting from either side. You would have to know each word you wanted to use, as well as how much space they would occupy. A heptapod can write a complex sentence in two seconds, effortlessly. It's taken us a month to make the simplest reply.'

Flashback

All watched over by machines of loving grace (Jan/1967)

58 years ago, Richard Brautigan's Jan/1967 poem ‘All Watched Over by Machines of Loving Grace’ (wiki) envisioned a harmonious world where technology and nature coexist seamlessly. The poem reflects a utopian dream in which machines take care of mundane tasks, allowing humanity to live in a state of peace and connection with the natural world. This piece captures the paradoxical relationship between technology and nature, blending optimism with a critique of over-reliance on machines.

Dario used the poem’s title for his Oct/2024 essay: https://darioamodei.com/machines-of-loving-grace

Read the poem: https://allpoetry.com/All-Watched-Over-By-Machines-Of-Loving-Grace

Download the complete book as PDF: https://blog.jgc.org/2024/12/the-complete-text-of-all-watched-over.html

Next

The next roundtable will be tomorrow:

Life Architect - The Memo - Roundtable #23

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 11/Jan/2025 at 4PM Los Angeles (timezone change)

Saturday 11/Jan/2025 at 7PM New York (timezone change)

Sunday 12/Jan/2025 at 10AM Brisbane (primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!
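If you would rather confirm the conversion yourself, the three listed times can be reproduced with Python's standard-library `zoneinfo` module. This is a minimal sketch that takes the Brisbane time as the reference. The `ZONES` mapping and the `local_times` helper are names made up for this example.

```python
from datetime import datetime
from zoneinfo import ZoneInfo

# Reference time from the listing above: Sunday 12/Jan/2025 at 10AM Brisbane.
REFERENCE = datetime(2025, 1, 12, 10, 0, tzinfo=ZoneInfo("Australia/Brisbane"))

# City labels mapped to IANA time zone names.
ZONES = {
    "Los Angeles": "America/Los_Angeles",
    "New York": "America/New_York",
    "Brisbane": "Australia/Brisbane",
}


def local_times(reference: datetime = REFERENCE) -> dict[str, datetime]:
    """Convert the reference moment into each listed city's local time."""
    return {city: reference.astimezone(ZoneInfo(zone)) for city, zone in ZONES.items()}


for city, moment in local_times().items():
    print(f"{city}: {moment:%A %d/%b/%Y %I:%M %p}")
```

Add your own city to `ZONES` using its IANA zone name to see your local time.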

All my very best,

Alan

LifeArchitect.ai