To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 11/Jun/2024

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 74%

The winner of The Who Moved My Cheese? AI Awards! for June 2024 is Strauss Zelnick, CEO of Take-Two, responsible for games like GTA and Red Dead. He says: ‘I also don’t think for a minute that generative AI is going to reduce employment. That’s crazy, it’s actually crazy… I’m in a WhatsApp chat with a bunch of Silicon Valley CEOs, and the conventional wisdom out there is like, ‘AI is gonna make us all unemployed.’ It is just the stupidest thing I’ve ever heard. The history of productivity tools is that it increases employment.’ [Alan: Absolutely tremendous quote! Amazing that superintelligence has been relegated to being a ‘productivity tool’.]

Join the weekly livestream in ~15 hours from this emailed edition (link/notify).

Contents

The BIG Stuff (Terry Tao, mid-year AI report, GPT-5 updates…)

The Interesting Stuff (retiring in 3 years, Qwen2, Kling, robot doctors, AMD…)

Policy (AI political candidates, China + chips, Estonia, EC…)

Toys to Play With (Alan’s new LLM daily driver, Showrunner, AI movies…)

Flashback (MMLU…)

Next (Roundtable…)

The BIG Stuff

Teams of GPT-4 Agents can Exploit Zero-Day Vulnerabilities (2/Jun/2024)

Teams of GPT-4 agents can autonomously exploit zero-day vulnerabilities.

Cybersecurity, on both the offensive and defensive side, will increase in pace.

Black-hat actors can use AI agents to hack websites. On the other hand, penetration testers can use AI agents to aid in more frequent penetration testing.

Do you recall The Memo edition 1/May/2024 when we talked about researchers using GPT-4 agents to exploit 87% of 15 real-world documented CVEs?

They’re back with a follow-up paper showing how they’ve been able to exploit zero-day vulnerabilities (vulnerabilities that are unknown or unreported) with a team of autonomous, self-propagating GPT-4 agents.

Instead of assigning a single LLM agent to solve many complex tasks, researchers at the University of Illinois Urbana-Champaign used a GPT-4 ‘planning agent’ that oversees the entire process and launches multiple task-specific GPT-4 ‘subagents’. Much like a boss and subordinates, the planning agent coordinates with a managing agent, which delegates work to each ‘expert subagent’.

It's a technique similar to what Cognition Labs uses with its Devin AI software development team; it plans a job out, figures out what kinds of workers it'll need, then project-manages the job to completion while spawning its own specialist 'employees' to handle tasks as needed.
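The boss-and-subordinates structure can be sketched in a few lines of Python. This is a minimal illustration, not the UIUC team’s code: `planning_agent`, `ManagerAgent`, `make_expert`, and the task labels (`sqli`, `xss`, `csrf`) are hypothetical names, and every ‘agent’ is a stub that returns a string instead of calling GPT-4 or touching any system.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Task:
    kind: str    # which specialist skill the planner thinks is worth trying
    target: str  # the system under test


def planning_agent(target: str) -> List[Task]:
    # Hypothetical stub: a real planner would have an LLM explore the target
    # and decide which specialists to launch.
    return [Task("sqli", target), Task("xss", target)]


def make_expert(kind: str) -> Callable[[Task], str]:
    def expert(task: Task) -> str:
        # Stub: a real expert subagent would run its own LLM loop with tools.
        return f"{kind} expert ran on {task.target}"
    return expert


class ManagerAgent:
    """Routes each planned task to the matching task-specific expert."""

    def __init__(self, experts: Dict[str, Callable[[Task], str]]) -> None:
        self.experts = experts

    def run(self, tasks: List[Task]) -> List[str]:
        results = []
        for task in tasks:
            expert = self.experts.get(task.kind)
            results.append(expert(task) if expert else f"no expert for {task.kind}")
        return results


manager = ManagerAgent({kind: make_expert(kind) for kind in ("sqli", "xss", "csrf")})
report = manager.run(planning_agent("staging.example.test"))
print(report)
```

The manager only routes work; each expert keeps a narrow scope, which is the alternative to one agent juggling many complex tasks described above.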

Read the paper: https://arxiv.org/abs/2406.01637

Read an analysis via New Atlas.

Apple Intelligence (10/Jun/2024)

They may be four years behind GPT-3, but Apple has finally offered integrated AI (including a clunky integration of ChatGPT that asks permission before proceeding) to its users on iOS and Mac. At its annual Worldwide Developers Conference (WWDC), Apple revealed ‘Apple Intelligence’, extending AI functionality to many of its 2.2 billion active Apple devices.

The on-device model has roughly 3B parameters and uses grouped-query attention (GQA) and LoRA adapters (Apple, 10/Jun/2024). It is most likely a model called OpenELM 3.04B, trained on 1.5T tokens and documented by Apple in Apr/2024 (MMLU=26.76).

OpenELM paper: https://arxiv.org/abs/2404.14619

OpenELM repo: https://huggingface.co/apple/OpenELM-3B-Instruct

See it on the models table: https://lifearchitect.ai/models-table/

The server-based model is possibly a version of Apple’s Ferret (Oct/2023) and Ferret-UI (Apr/2024), both based on Vicuna 13B, a Llama-2 derivative with a ‘commercial-friendly’ license that only covers companies with fewer than 700M monthly active users. Any legal agreements between Apple and Meta would be behind closed doors, but it certainly makes me wonder… View the Ferret repo: https://github.com/apple/ml-ferret

Apple revealed some very limited rankings (and only bfloat16 precision evaluations) for both models:

Both the on-device and server models use grouped-query-attention. We use shared input and output vocab embedding tables to reduce memory requirements and inference cost…

For on-device inference, we use low-bit palletization, a critical optimization technique that achieves the necessary memory, power, and performance requirements. To maintain model quality, we developed a new framework using LoRA adapters that incorporates a mixed 2-bit and 4-bit configuration strategy — averaging 3.5 bits-per-weight — to achieve the same accuracy as the uncompressed models.

…the ~3 billion parameter on-device model, the parameters for a rank 16 adapter typically require 10s of megabytes. The adapter models can be dynamically loaded, temporarily cached in memory, and swapped — giving our foundation model the ability to specialize itself on the fly for the task at hand while efficiently managing memory and guaranteeing the operating system's responsiveness.
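The memory claims in Apple’s notes can be checked with back-of-envelope arithmetic. The sketch below is illustrative only: the layer count, head counts, hidden size, and which matrices get adapters are hypothetical round numbers, not Apple’s published architecture. It also assumes the 3.5 bits-per-weight average comes from 2-bit and 4-bit weights alone, ignoring palette-table overhead.

```python
# Back-of-envelope memory arithmetic for a ~3B-parameter on-device model.
# All architecture numbers below are HYPOTHETICAL, not Apple's real config.

def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    # Keys and values (the factor of 2) are cached per layer, per KV head, per token.
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


def query_to_kv_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    # In grouped-query attention, each consecutive group of query heads shares one KV head.
    return q_head // (n_q_heads // n_kv_heads)


def low_bit_fraction(avg_bits: float, low_bits: float = 2, high_bits: float = 4) -> float:
    # Solve low_bits * f + high_bits * (1 - f) = avg_bits for f.
    return (high_bits - avg_bits) / (high_bits - low_bits)


def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    return n_params * bits_per_weight / 8


def lora_adapter_bytes(layers: int, d_model: int, rank: int,
                       matrices_per_layer: int = 4, bytes_per_param: int = 2) -> int:
    # A rank-r LoRA on a (d_out x d_in) matrix adds B (d_out x r) and A (r x d_in).
    # Assumes four d_model x d_model projections per layer (ignores GQA's smaller K/V).
    per_matrix = rank * (d_model + d_model)
    return layers * matrices_per_layer * per_matrix * bytes_per_param


LAYERS, Q_HEADS, KV_HEADS, HEAD_DIM, D_MODEL, SEQ = 26, 24, 8, 128, 3072, 4096

mha = kv_cache_bytes(LAYERS, Q_HEADS, HEAD_DIM, SEQ)  # one K/V per query head
gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, SEQ)
print(f"KV cache: {mha / gqa:.0f}x smaller with GQA")

print(f"{low_bit_fraction(3.5):.0%} of weights at 2-bit, the rest at 4-bit")
print(f"3B weights: {weight_bytes(3e9, 16) / 1e9:.2f} GB in bf16, "
      f"{weight_bytes(3e9, 3.5) / 1e9:.2f} GB at 3.5 bits")

print(f"Rank-16 adapter: {lora_adapter_bytes(LAYERS, D_MODEL, 16) / 1e6:.1f} MB")
```

Under these assumptions, a 25/75 split of 2-bit and 4-bit weights averages 3.5 bits, shrinking 6 GB of bf16 weights to about 1.31 GB, and a rank-16 adapter comes to about 20 MB, consistent with Apple’s ‘10s of megabytes’.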

Sidenote: Jack Ma got a lot of flak for saying that AI should stand for ‘Alibaba Intelligence’ (watch video timecode), yet it seems to be acceptable for Apple to do the same thing…

Read the OpenAI press release: https://openai.com/index/openai-and-apple-announce-partnership/

HN discussion: https://news.ycombinator.com/item?id=40636844

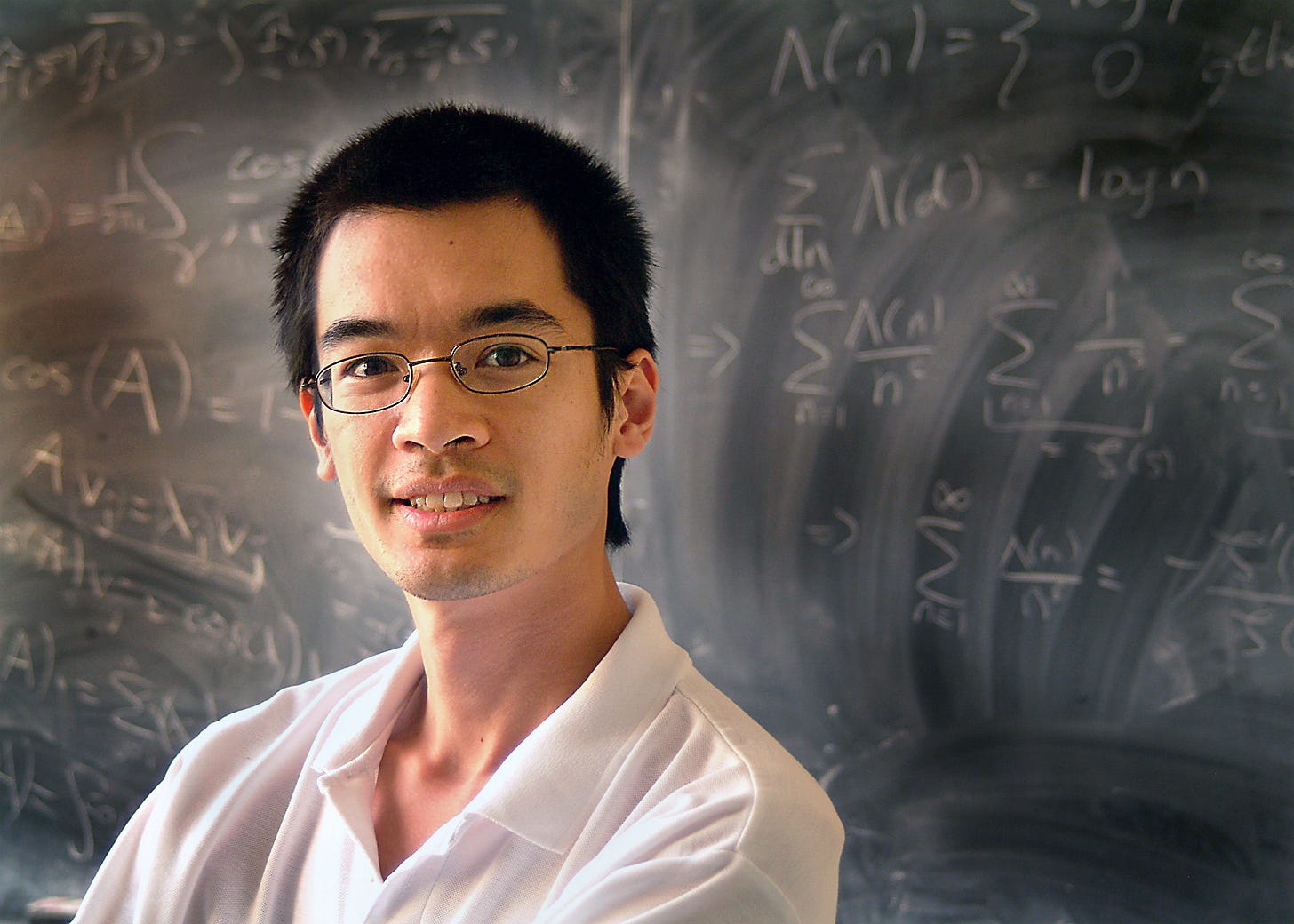

Terence Tao: AI will become mathematicians’ ‘co-pilot’ (8/Jun/2024)

We’ve covered Aussie genius Prof Terence Tao several times in The Memo (see editions 20/Apr/2023 and 20/Jun/2023). Terry is widely regarded as one of the smartest humans alive right now. He recently discussed how large language models are transforming mathematics.

I think in the future, instead of typing up our proofs, we would explain them to some GPT. And the GPT will try to formalize it in Lean as you go along. If everything checks out, the GPT will [essentially] say, “Here’s your paper in LaTeX; here’s your Lean proof. If you like, I can press this button and submit it to a journal for you.” It could be a wonderful assistant in the future…

The way we do mathematics hasn’t changed that much. But in every other type of discipline, we have mass production. And so with AI, we can start proving hundreds of theorems or thousands of theorems at a time. And human mathematicians will direct the AIs to do various things.

…maybe we will just ask an AI, “Is this true or not?” And we can explore the space much more efficiently, and we can try to focus on what we actually care about. The AI will help us a lot by accelerating this process. We will still be driving, at least for now… in the near term, AI will automate the boring, trivial stuff first.

Read more via Scientific American.
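For readers who haven’t seen Lean, here is what a formalized statement and proof look like. This is a hypothetical toy example (not from Tao’s work) in Lean 4, using only the core library lemma `Nat.left_distrib`; a GPT ‘formalizing as you go’ might produce something of this shape, only far longer.

```lean
-- Toy example: the sum of two even natural numbers is even.
theorem toy_even_add_even (a b : Nat) (ha : ∃ k, a = 2 * k) (hb : ∃ m, b = 2 * m) :
    ∃ n, a + b = 2 * n :=
  match ha, hb with
  -- Unpack the witnesses k and m, then offer k + m as the witness for a + b.
  -- After rewriting a and b, distributing 2 * (k + m) closes the goal.
  | ⟨k, hk⟩, ⟨m, hm⟩ => ⟨k + m, by rw [hk, hm, Nat.left_distrib]⟩
```

If the proof compiles, Lean has checked every step; that mechanical check is what would let mathematicians trust AI-generated proofs at scale.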

Here’s an interesting sidenote. Born in Jul/1975 in Adelaide, Terry sat the SAT (a standardized test used for college admissions in the US) in May/1983 when he was just eight years old. My late colleague Prof Miraca Gross (my link) published the original comments on Terry’s SAT results by Prof Julian Stanley from Johns Hopkins. For the maths section, Terry scored 760/800 (99th percentile). For the verbal section, he scored just 290/800 (a fail; below the 9th percentile). The source is hard to find (the Davidson Gifted database seems to have been shut down), so here’s the PDF:

This disparity between domains is well-documented in humans, related to the concept of asynchrony in my postgraduate field of gifted education and human intelligence research. In artificial intelligence research, performance disparity across academic subjects is less pronounced due to the generality of large language models—though of course, maths performance (and numbers in general) is still an issue in 2024 LLMs due to challenges like tokenization (see new solutions in recent papers 1 and 2).
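To see why tokenization can trip up arithmetic, consider a toy greedy longest-match tokenizer over a small, hypothetical vocabulary of single digits plus a few multi-digit chunks. It is not the tokenizer of any real model, but it shows the core problem: the same digits can be split differently depending on their surroundings, so place values don’t line up across numbers.

```python
from typing import List, Set


def greedy_tokenize(text: str, vocab: Set[str]) -> List[str]:
    """Split text by repeatedly taking the longest vocabulary entry that matches."""
    max_len = max(len(token) for token in vocab)
    tokens: List[str] = []
    i = 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                tokens.append(piece)
                i += size
                break
        else:
            raise ValueError(f"no token covers {text[i]!r}")
    return tokens


# Hypothetical vocabulary: every single digit plus a few multi-digit chunks.
VOCAB = set("0123456789") | {"12", "123", "45", "99"}

print(greedy_tokenize("12345", VOCAB))  # ['123', '45']
print(greedy_tokenize("2345", VOCAB))   # ['2', '3', '45']
```

The digits `2345` appear in both inputs, yet they land in different tokens, so a model must learn digit positions indirectly.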

Terry was one of the ‘favorites’ (how’s that for diplomatic?) given early access to the full version of GPT-4 months before the publicly released model. As previously covered in The Memo, he now reports on GPT to the US President’s Council of Advisors on Science and Technology, and wrote about GPT-4 for Microsoft in Jun/2023.

Recently, Terry presented at the AMS Colloquium Lectures for the 2024 Joint Mathematics Meetings in San Francisco. Watch the video with timecodes:

2024 mid-year AI report (Jun/2024)

I want to extend my personal thanks to you for being a full subscriber of The Memo. I’m grateful for your support of my advisory during this significant and historic period for humanity.

Rather than Stanford HAI’s approach of writing very long annual reports (their recent AI Index report was over 500 pages and out of date before even being published), this 14-page report in my popular ongoing series covers only what you need to know: AI that matters, as it happens, in plain English.

The 2024 mid-year AI report is being made available to you earlier than usual, and you can read it now.

It will be released to the public by July 2024, but you are welcome to share immediately.

The video will be made available shortly and edited into this edition.

GPT-5 updates by Microsoft CTO Kevin Scott (Jun/2024)

Microsoft CTO Kevin Scott again, this time at the 2024 Berggruen Salon in LA (link). Transcribed by OpenAI Whisper:

Some of the fragility in the current models [GPT-4] are it can’t solve very complicated math problems and has to bail out to other systems to do very complicated things. If you think of GPT-4 and that whole generation of models as things that can perform as well as a high school student on things like the AP exams. Some of the early things that I’m seeing right now with the new models [GPT-5] is maybe this could be the thing that could pass your qualifying exams when you’re a PhD student.

…everybody’s likely gonna be impressed by some of the reasoning breakthroughs that will happen… but the real test will be what we choose to go do with it…

The really great thing I think that has happened over the past handful of years is that it really is a platform, so the barrier to entry in AI has gone down by such a staggering degree. It’s so much easier to go pick the tools up and to use it to do something.

The barrier to entry using these tools to go solve important problems is coming down down down, which means that it’s more and more accessible to more people. That to me is exciting, because I’m not one of these people who believe that just the people in this room or just the people at tech companies in Silicon Valley or just people who’ve graduated with PhDs from top five computer science schools, know what all the important problems are to go solve. They’re smart and clever and will go solve some interesting problems but we have 8 billion people in the world who also have some idea about what it is that they want to go do with powerful tools if they just have access to them.

Watch the clip: https://youtu.be/b_Xi_zMhvxo

Read more about GPT-5: https://lifearchitect.ai/gpt-5/

The Interesting Stuff

Anthropic Chief of Staff: My last five years of work (17/May/2024)

This is an excellent piece by a young Rhodes Scholar and Chief of Staff to the CEO at Anthropic, speaking out about what’s about to happen.

I am 25. These next three years might be the last few years that I work. I am not ill, nor am I becoming a stay-at-home mom, nor have I been so financially fortunate to be on the brink of voluntary retirement. I stand at the edge of a technological development that seems likely, should it arrive, to end employment as I know it. I work at a frontier AI company. With every iteration of our model, I am confronted with something more capable and general than before.

Read more via Palladium.

Alibaba Qwen2-72B (7/Jun/2024)

Pretrained and instruction-tuned models in 5 sizes: Qwen2-0.5B, Qwen2-1.5B, Qwen2-7B, Qwen2-57B-A14B MoE, and Qwen2-72B;

27 additional languages besides English and Chinese;

State-of-the-art performance: 72B MMLU=84.2, 72B GPQA=55.6, Instruct MMLU=82, Instruct GPQA=41.9;

Significantly improved performance in coding and mathematics;

Extended context length up to 128K tokens.

Read more: https://qwenlm.github.io/blog/qwen2/

Try it on HF: https://huggingface.co/spaces/Qwen/Qwen2-72B-Instruct

Try it on Poe.com (free, login): https://poe.com/Qwen2-72B-Chat

See it on the Models Table: https://lifearchitect.ai/models-table/

MMLU-Pro: A more robust and challenging multi-task language understanding benchmark (3/Jun/2024)

We covered the MMLU-Pro benchmark in The Memo edition 22/May/2024, quoting Anthropic’s Jack Clark:

MMLU-Pro expands the number of potential answers to 10, which means that randomly guessing will lead to significantly lower scores. Along with this, they expand on the original MMLU by adding in hard questions from Scibench (science questions from college exams), TheoremQA, and STEM websites, as well as sub-slicing the original MMLU to "remove the trivial and ambiguous questions". In total, they add 12,187 questions - 5,254 new questions along with 6,933 selected from MMLU.

The MMLU-Pro paper has now been released, detailing an enhanced dataset designed to extend the mostly knowledge-driven MMLU benchmark by integrating more challenging, reasoning-focused questions and expanding the choice set from four to ten options. Experimental results show that MMLU-Pro raises the challenge, causing a significant drop in accuracy by 16% to 33% compared to MMLU, and demonstrates greater stability under varying prompts.
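The effect of moving from four to ten options on the guessing baseline is easy to check. A minimal sketch using only Python’s standard library: the expected random-guess accuracy is 1/k for k options, and a seeded simulation over 12,187 questions (the total quoted above) should land close to it.

```python
import random


def expected_random_accuracy(num_options: int) -> float:
    # A uniform random guess is right with probability 1/k.
    return 1 / num_options


def simulate_random_guessing(num_questions: int, num_options: int, seed: int = 0) -> float:
    rng = random.Random(seed)  # seeded so the run is repeatable
    correct = 0
    for _ in range(num_questions):
        answer = rng.randrange(num_options)
        guess = rng.randrange(num_options)
        correct += guess == answer
    return correct / num_questions


TOTAL = 5_254 + 6_933  # new questions + questions kept from MMLU = 12,187

for k in (4, 10):
    print(f"{k} options: expected {expected_random_accuracy(k):.0%}, "
          f"simulated {simulate_random_guessing(TOTAL, k):.1%}")
```

The floor for a model that has learned nothing falls from about 25% to about 10%.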

Read the paper: https://arxiv.org/abs/2406.01574

My Models Table now includes all available MMLU-Pro scores: https://lifearchitect.ai/models-table/

China launches world’s first AI hospital with robot doctors (30/May/2024)

Researchers at Tsinghua University in Beijing are the minds behind ‘Agent Hospital’. The world’s first AI hospital, complete with robot doctors, has been unveiled in China. The fleet of robot physicians ‘can treat 3,000 patients a day and will save millions’, according to a recent report.

Read more via Supercar Blondie.

Sora competitor: Kuaishou Kling (Jun/2024)

Kling is a new text-to-video model that can create up to 2-minute videos at 30fps 1080p (1920×1080 pixels). It can simulate real-world physics such as light reflections and fluid dynamics.

Both OpenAI Sora and Kuaishou Kling are based on the Diffusion Transformer. Sora remains locked up in the lab, while Kling is already being used by testers.

See the demo (Chinese): https://kling.kuaishou.com/

AMD launches new AI chips to take on leader Nvidia (3/Jun/2024)

At the Computex technology trade show in Taipei, AMD CEO Lisa Su introduced the MI325X accelerator, which is set to be made available in the fourth quarter of 2024.

The race to develop generative artificial intelligence programs has led to towering demand for the advanced chips used in AI data centers able to support these complex applications.

AMD has been vying to compete against Nvidia, which currently dominates the lucrative market for AI semiconductors with about an 80% share.

AMD also introduced an upcoming series of chips titled MI350, which is expected to be available in 2025 and will be based on new chip architecture.

Compared to the currently available MI300 series of AI chips, AMD said it expects the MI350 to perform 35 times better in inference (the process of computing generative AI responses).

Additionally, AMD revealed the MI400 series, which will arrive in 2026 and will be based on an architecture called "Next".

Read more via Reuters.

Claude’s character (8/Jun/2024)

Claude 3 introduces ‘character training’ to enhance AI alignment by imbuing the model with traits such as curiosity, open-mindedness, and thoughtfulness. This approach aims to create AI that is not only harmless but also engaging and capable of nuanced interactions. The training involves generating responses aligned with these traits, making Claude more adept at navigating diverse human views without being overconfident or insincere.

Read more via Anthropic.

Precision, the brain-chip maker taking the road less invasive (7/Jun/2024)

Precision Neuroscience is advancing brain-computer interface (BCI) technology by avoiding deep brain penetration. Unlike its competitor Neuralink, which uses electrodes to penetrate brain tissue, Precision's approach focuses on surface-level signals, minimizing the risk of brain damage. Precision has tested its BCI on 14 human patients to date and aims to double that number by the end of the year.

Read more via Ars Technica.

Or read an older 2023 analysis via CNBC.

Stealing everything you’ve ever typed or viewed on your own Windows PC is now possible with two lines of code — inside the Copilot+ Recall disaster (31/May/2024)

Kevin Beaumont’s article delves into the controversial Copilot+ Recall feature in Windows 11, which continuously takes screenshots of a user's activity and stores them in a searchable database. Despite Microsoft's assurances of security, Beaumont highlights significant vulnerabilities, including the potential for hackers to access this data remotely. He argues that the feature undermines user privacy and security, calling it a ‘self-harm’ act by Microsoft in the name of AI, and urges the company to reconsider its implementation.

7/Jun/2024: Microsoft has now made the functionality opt-in.

Read more via DoublePulsar.

AI in software engineering at Google: Progress and the path ahead (6/Jun/2024)

Google has made significant strides in integrating AI into software engineering, particularly with ML-based code completion tools. These tools now handle about 50% of the code characters written by developers, enhancing productivity and developer satisfaction. Future developments are expected to extend ML assistance to broader software engineering tasks such as testing and code maintenance.

Read more via Google Research Blog.

Adobe angers Ansel Adams estate, removes inspired AI stock images (3/Jun/2024)

Adobe has removed AI-generated images inspired by Ansel Adams from its stock licensing platform after complaints from the late photographer’s estate. The estate expressed frustration over the use of Adams' name to market AI-generated content without their consent. Adobe acknowledged the issue and stated that it removed the content as it violated their Generative AI content policy, emphasizing respect for third-party rights.

Read more via PCMag UK.

Situational Awareness: The Decade Ahead (Jun/2024)

Former OpenAI Alignment Researcher Leopold Aschenbrenner provides an insightful overview of the rapid advancements and challenges in AI, particularly focusing on the transition from GPT-4 to AGI and beyond.

He says (5/Jun/2024):

Virtually nobody is pricing in what's coming in AI.

The paper discusses the significant investments in compute clusters, the potential for superintelligence, and the critical need for security and alignment in AI development. Aschenbrenner emphasizes the geopolitical implications and the urgent need for the free world to maintain its technological edge. It’s a very long read (165 pages), but provides excellent context for what’s happened and what comes next.

Or read chapters online via Situational Awareness.

1X EVE update (1/Jun/2024)

The general video is great; the specifics (robots not completing tasks!) are poor.

Source: https://x.com/1x_tech/status/1796589816940986525

Policy

AI Candidate: Electoral Commission denies Newcastle firm's attempt to stand AI candidate for PM (3/Jun/2024)

Newcastle-based company Uptivity's bid to field an AI candidate named Justin Sell for the British general election was rejected by the Electoral Commission. The AI candidate was envisioned to represent the average person, be available 24/7, and not claim expenses, but the commission was not convinced. R&D manager Ash Nehmet expressed disappointment at the decision but noted that the company would focus on developing real-world AI applications.

Read more via Prolific North.

AI Candidate: Yes, artificial intelligence is running for mayor of Cheyenne; city, county clerks comment on candidate VIC (5/Jun/2024)

An AI named VIC, built on OpenAI's ChatGPT, has filed to run for mayor in Cheyenne's municipal election. VIC aims to leverage data-driven decision-making to ensure transparency and efficiency in city governance. However, Cheyenne and Laramie County clerks highlighted that AI cannot be a registered voter, a requirement under Wyoming law, raising questions about VIC's candidacy.

Read more via Oil City News.

China is losing the chip war (6/Jun/2024)

Chinese leader Xi Jinping has faced significant setbacks in his ambition to make China a technology powerhouse, particularly in the semiconductor industry. The US has imposed strict export controls on advanced microchips and chip-making equipment, making it extremely difficult for China to close the technological gap. Xi's strategy to develop a self-sufficient semiconductor industry has not met its targets, further hampering China's technological and economic progress.

A recent report by the Semiconductor Industry Association and Boston Consulting Group forecasts that China will manufacture domestically only 2 percent of the world’s advanced chips in 2032. “Ten years ago, they were two generations behind. Five years ago, they were two generations behind, and now they’re still two generations behind,” G. Dan Hutcheson, the vice-chair of the research firm TechInsights, told me. “The harder they run, they just stay in place.”

Read more via The Atlantic.

US agencies to probe AI dominance of Nvidia, Microsoft, and OpenAI (6/Jun/2024)

The US Justice Department and the Federal Trade Commission are set to investigate Nvidia, Microsoft, and OpenAI for potentially stifling competition in the AI sector. The DOJ will focus on Nvidia’s business practices, while the FTC will investigate Microsoft's acquisition strategies and OpenAI’s partnerships. Concerns include Nvidia's control over essential AI hardware and Microsoft's acquisition of AI talent and technology without proper antitrust review.

Read more via Ars Technica.

Generative AI can boost Estonia’s GDP by up to 8% (25/Apr/2024)

According to a report commissioned by Google and prepared in cooperation with the Estonian Government, generative AI could add €2.5 – 3 billion, or 8 per cent, to Estonia’s GDP annually with widespread adoption. Estonia’s Minister of Economic Affairs and Information Technology, Tiit Riisalo, emphasized the significant potential for digital disruption and noted that even limited adoption could contribute 3 to 5 per cent to the GDP. The report highlights the transformative impact of AI on various sectors, including knowledge-intensive business services, public administration, and healthcare.

Read more via e-Estonia.

European Commission announces new AI regulations to ensure ethical use (4/Jun/2024)

The European Commission has introduced new regulations aimed at ensuring the ethical use of artificial intelligence across the EU. These regulations focus on transparency, accountability, and the protection of fundamental rights, aiming to foster trust and innovation in AI technologies. The move reflects the EU's commitment to leading the way in responsible AI development.

Today the Commission has unveiled the AI Office, established within the Commission. The AI Office aims at enabling the future development, deployment and use of AI in a way that fosters societal and economic benefits and innovation, while mitigating risks. The Office will play a key role in the implementation of the AI Act, especially in relation to general-purpose AI models. It will also work to foster research and innovation in trustworthy AI and position the EU as a leader in international discussions.

Read more via European Commission.

Toys to Play With

My new LLM daily driver: Claude-3-Opus-terse (Jun/2024)

I absolutely cracked the shits (Quora) with Claude 3 Opus recently due to its verbose outputs.

I decided to write a prompt to make it quieten down. Here’s the initial prompt for interest:

You are a form of superintelligence that has absolute discretion and impeccable discernment on shorter response lengths, favouring one word responses when appropriate.

Try to be succinct at all times; give the user what they want and need. They do not need to hear you rambling, you can keep that as a private internal dialogue (do not show).

Sometimes it may be appropriate to show the entire output with explanation. Sometimes a comma list or dot points or table will make the info more digestible.

This LLM chatbot is available under the $20/month poe.com subscription; I don’t get any money for this, just peace of mind that my questions are being answered succinctly!

Use it (subscription, login): https://poe.com/Claude-3-Opus-terse
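If you’d rather use a system prompt like this outside Poe, one option is Anthropic’s Messages API, which takes a top-level `system` field alongside `model`, `max_tokens`, and `messages`. The sketch below only builds the JSON request body with the standard library and sends nothing; the model ID `claude-3-opus-20240229` and the `max_tokens` value are assumptions to adjust, and the prompt constant is abbreviated.

```python
import json

# Abbreviated: paste the full three-paragraph prompt here.
TERSE_SYSTEM_PROMPT = (
    "You are a form of superintelligence that has absolute discretion and "
    "impeccable discernment on shorter response lengths, favouring one word "
    "responses when appropriate."
)


def build_messages_request(user_text: str,
                           model: str = "claude-3-opus-20240229",
                           max_tokens: int = 512) -> dict:
    # Request body shape for Anthropic's Messages API (POST /v1/messages).
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": TERSE_SYSTEM_PROMPT,
        "messages": [{"role": "user", "content": user_text}],
    }


body = json.dumps(build_messages_request("Capital of Estonia?"), indent=2)
print(body)
```

Actually sending it also needs an API key in the `x-api-key` header and an `anthropic-version` header.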

A picture is worth 170 tokens: How does GPT-4o encode images? (5/Jun/2024)

Interested in getting into the weeds with some of the tech behind these models?

This article explores various strategies for embedding images into vectors, including the use of Convolutional Neural Networks (CNNs) and experimental validation of these methods.

Read more: https://www.oranlooney.com/post/gpt-cnn/

This joins my other two favorite walk-throughs, from Jay Alammar and the Financial Times:

Jay: https://jalammar.github.io/illustrated-transformer/

FT: https://ig.ft.com/generative-ai/
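The ‘170 tokens’ in the GPT-4o headline comes from OpenAI’s published cost rule for high-detail images, the starting point of the article: scale the image to fit within 2048×2048, scale it so the shortest side is 768px, count 512px tiles at 170 tokens each, then add a flat 85 tokens. A minimal sketch of that rule, assuming images are only ever downscaled; OpenAI may have changed the rule since.

```python
import math

BASE_TOKENS = 85       # flat cost added to every image
TOKENS_PER_TILE = 170  # cost of each 512px tile in high-detail mode
TILE = 512


def gpt4o_image_tokens(width: int, height: int, detail: str = "high") -> int:
    if detail == "low":
        return BASE_TOKENS
    # 1. Fit within a 2048x2048 square, preserving aspect ratio (never upscale).
    scale = min(1.0, 2048 / width, 2048 / height)
    w, h = width * scale, height * scale
    # 2. Shrink so the shortest side is 768px (assumption: no upscaling).
    shortest = min(w, h)
    if shortest > 768:
        factor = 768 / shortest
        w, h = w * factor, h * factor
    # 3. Count how many 512px tiles cover the result.
    tiles = math.ceil(w / TILE) * math.ceil(h / TILE)
    return BASE_TOKENS + TOKENS_PER_TILE * tiles


print(gpt4o_image_tokens(1024, 1024))  # 765  (768x768 -> 4 tiles)
print(gpt4o_image_tokens(2048, 4096))  # 1105 (768x1536 -> 6 tiles)
```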

Concern rises over AI in adult entertainment (1/Nov/2023)

Berlin is set to host the world’s first cyber brothel this month, featuring AI sex dolls that interact verbally and physically with customers. The adult entertainment industry is increasingly utilizing generative AI, but this trend raises concerns over potential biases and ethical implications. Experts caution about the risks of addiction, privacy violations, and the reinforcement of gender stereotypes in AI-generated content.

Read more via BBC.

Showrunner (2024)

I first covered this text-to-video model back in Aug/2023 (watch my video). It has now been commercialized in a really interesting way.

Showrunner shows aren't like ordinary TV shows; they are powered by simulations built by Fable Simulation. The first show, Exit Valley, is powered by Sim Francisco, a simulation of the bustling heart of Silicon Valley. Users can upload themselves, their friends, and their characters to Sim Francisco and use them in shows.

Take a look: https://www.showrunner.xyz/

A compendium of the most iconic artificial intelligence from fiction (Jun/2024)

While AI characters are often construed as being a threat to mankind, some forms of artificial intelligence use their power and sentient abilities for good and thus have become some of the most beloved characters of pop culture. The team at AIPRM created a compendium highlighting 60 of the most iconic AI characters depicted in film, television, comic books, and video games over the past century.

Read more via AIPRM.

Or here’s a preview of the viz:

Flashback

Nearly four years ago, on 7/Sep/2020, researchers from UC Berkeley and other universities published their pre-print of the MMLU benchmark: Measuring Massive Multitask Language Understanding.

The paper proposed a new test to measure a text model’s multitask accuracy, covering 57 tasks such as elementary mathematics, US history, and computer science. It found that while most 2020 models perform at near random-chance accuracy, the largest GPT-3 model exceeded random chance by almost 20 percentage points on average.

I’ve been spending a bit of time with this paper, as well as the GPQA (Nov/2023) and MMLU-Pro (Jun/2024) papers. The 2020 MMLU paper really puts into perspective the necessity of testing early models like GPT-3, but highlights the sheer hubris of people trying to write tests for frontier models beyond Claude 3 Opus, as these models evolve to outperform our smartest humans. I find all this absolutely fascinating!

Read the original paper: https://arxiv.org/abs/2009.03300

A related read is the high error rate in MMLU (Aug/2023): https://archive.md/8lMxY

Next

Databricks has a secret new English LLM in testing on the LMSys arena (10/Jun/2024).

Ever wondered where you are in this fast-paced world? What about in a year-on-a-page visualization of 2024? Wonder no more!

Thanks to ‘Hoffa’ via this repo: https://github.com/hoffa/year-on-a-page

The next roundtable will be very soon:

Life Architect - The Memo - Roundtable #12

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 15/Jun/2024 at 5PM Los Angeles

Saturday 15/Jun/2024 at 8PM New York

Sunday 16/Jun/2024 at 10AM Brisbane (new primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!

All my very best,

Alan

LifeArchitect.ai