The Memo - 22/May/2024

Google Trillium, OpenAI and safety, GPT-4o, and much more!

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 22/May/2024

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 73% ➜ 74%

The winner of The Who Moved My Cheese? AI Awards! for May/2024 is The Verge for messing up the basics of how LLMs work, and pushing a speciesist narrative.

Join me for a cozy livestream about four hours after this edition is emailed.

Contents

The BIG Stuff (OpenAI and safety, GPT-4o…)

The Interesting Stuff (Claude 4, OpenAI + Apple, Trillium, Blackwell, Baidu…)

Policy (UBI, China, France, US Senate, crony capitalism…)

Toys to Play With (GPT-4o charts, new MMLU, GPT in Rust, OpenAI leaderboard…)

Flashback (AI beginnings…)

Next (Grok, Roundtable link…)

The BIG Stuff

Exclusive: OpenAI and safety (May/2024)

Recently, some more researchers left OpenAI (18/May/2024) because they think OpenAI is releasing models too quickly and isn’t handling safety properly. I think this is a strange perspective, so allow me to provide another possibility.

In plain English, safety experts are working to ensure you don’t get access to higher intelligence.

Let’s take a quick look at the timeline of OpenAI and safety:

2019: OpenAI locks up GPT-2 1.5B. The public has to wait nine months to access the (very small and very basic) model for ‘safety’ reasons. ‘Due to our concerns about malicious applications of the technology, we are not releasing the trained model.’

2019: Connor Leahy locks up his GPT-2 replication model. After Connor successfully replicates GPT-2 1.5B, OpenAI meets with him and convinces him that AI is ‘dangerous’.

2020: OpenAI locks up GPT-3 175B. The public has to wait about a year and a half to access the model for ‘safety’ reasons. ‘We’re able to better prevent misuse by limiting access to approved customers and use cases. We have a mandatory production review process before proposed applications can go live.’ Then in 2024, OpenAI permanently revokes all access to the base GPT-3 models (RIP Leta).

2022: OpenAI locks up DALL-E. The public has to wait about a year to access the model for ‘safety’ reasons.

2023: OpenAI locks up GPT-4. The public has to wait eight months to access the model for ‘safety’ reasons.

2024: OpenAI locks up Sora. The public has to wait an estimated 10 months to access the model for ‘safety’ reasons.

2024: OpenAI locks up GPT-4o (full model). The public has to wait an unknown period to access the model for ‘safety’ reasons. ‘Today we are publicly releasing text… Over the upcoming weeks and months, we’ll be working on the… safety necessary to release the other modalities.’ As of 21/May/2024, this became: ‘GPT-4o real-time voice and vision will be rolling out to a limited Alpha for ChatGPT Plus users in a few weeks. It will be widely available for ChatGPT Plus users over the coming months.’

2025: Let’s see, but I think this probably isn’t the last time we’ll see the phrase “The public has to wait about a year to access the model for ‘safety’ reasons.”

The sophomoric approach to most aspects of business by labs like OpenAI is embarrassing to watch. We already have laws in place that cover whatever they’re worried AI will do. In-house legal teams are well-equipped to provide guidance on the usual legal landscape, particularly regarding the extensive laws and precedents surrounding deepfakes, bomb-making, and other naughty activities. These actions are already illegal and have been addressed by centuries of criminal law and jurisprudence.

It is essential to recognize that there are now over 400 AI models (see my Models Table) being developed inside and outside the US, and that, away from the excess caution and hand-wringing of Silicon Valley, the focus on ‘safety’ and ethical concerns is far less prominent.

The widespread practice of AI labs withholding higher intelligence from the public under the guise of ‘safety’ is ethically indefensible. Rather than complaining about not enough safety, we should be investigating the actions of these companies as they keep AI to themselves and suppress humanity’s evolution.

Despite all the claims around ‘safety’, I suspect that the unspoken and underlying reasons for locking up AI models are actually far more closely related to money, reputation, power, control, and plain ol’ human greed.

Yes, I’ve covered some of this ground before, including the Sora delay in The Memo edition 19/Feb/2024, and you can find the viz here: https://lifearchitect.ai/gap/

As a caveat to this piece, I do believe AI safety is vital. Some of my thoughts above are an oversimplification of a very complex problem, perhaps the most complex problem the world has ever seen. If you’d like to read more on AI safety, my favourite paper this month is by Yoshua Bengio, Stuart Russell, Max Tegmark and others: ‘Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems’. It looks at ‘world models’ as a solution to the problem of AI safety, and especially as an alternative to the egregious ‘fool’s errand’ of humans fine-tuning AI models (read my report on this at: https://lifearchitect.ai/alignment/).

Read the paper: https://arxiv.org/abs/2405.06624

GPT-4o (13/May/2024)

OpenAI announced a new ‘world model’ combining audio, vision, and text in real time. The model is called GPT-4o (the ‘o’ stands for ‘omni’). Unfortunately, GPT-4o is not a huge improvement in ‘IQ’, and underperforms other frontier models like Claude 3 Opus.

The new functionality and the model’s score on the MMMU (vision) benchmark did nudge my AGI countdown up slightly, from 73% to 74%. I address why the model’s performance is not that impressive here: https://lifearchitect.ai/gpt-4-5/#gpt-4o

As noted in the ‘safety’ section above, this is only a partial release for now:

Today [13/May/2024] we are publicly releasing text and image inputs and text outputs. Over the upcoming weeks and months, we’ll be working on the technical infrastructure, usability via post-training, and safety necessary to release the other modalities.

Read the announcement: https://openai.com/index/hello-gpt-4o/

See it on the Models Table: https://lifearchitect.ai/models-table/

Watch one of the official demo videos (link):
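Since the public release covers text and image inputs with text outputs, here is a minimal sketch of how such a request might be assembled in Python. It assumes OpenAI’s Chat Completions message format (content parts of type `text` and `image_url`, with the image inlined as a base64 data URL). The helper name `build_gpt4o_messages` is hypothetical, and the code only builds the payload; it makes no network call.

```python
import base64


def build_gpt4o_messages(question: str, image_bytes: bytes, mime: str = "image/png") -> list:
    """Build a hypothetical Chat Completions message list: one text part plus one image part."""
    # Inline the image as a base64 data URL (assumed input format for image_url parts).
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{b64}"}},
            ],
        }
    ]


# Placeholder bytes stand in for a real image file; no request is sent here.
messages = build_gpt4o_messages("What is in this image?", b"placeholder image bytes")
print(messages[0]["content"][1]["image_url"]["url"][:40])
```

With the official `openai` package and an API key, this list would be passed as `client.chat.completions.create(model="gpt-4o", messages=messages)`, and the reply comes back as text, in line with the partial release.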

The Interesting Stuff

Exclusive: Anthropic has a model 4× more powerful than Claude 3 Opus coming (20/May/2024)

In a post on AI safety (20/May/2024), OpenAI competitor Anthropic noted the following. My reading of it below is a logical interpretation of language that could arguably be fuzzy:

Currently, we conduct pre-deployment testing in the domains of cybersecurity, CBRN [chemical, biological, radiological, and nuclear], and Model Autonomy for frontier models which have reached 4x the compute of our most recently tested model (you can read a more detailed description of our most recent set of evaluations on Claude 3 Opus here).

My estimates for the Mar/2024 release of Claude 3 Opus were 2T parameters trained on 40T tokens (see my Models Table). If we 4× this (and 4× compute does not mean we’ll get 4× the size), we may be heading towards an 8T parameter model from Anthropic this year.

Whoa. If this does become Claude 4, I hope they optimize it enough that we’re measuring single-prompt costs in cents rather than dollars!
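To see why 4× compute need not mean 4× parameters, here is a back-of-envelope sketch in Python. It assumes the common rule of thumb that training compute is about 6 × parameters × tokens, and it starts from my unconfirmed Claude 3 Opus estimates (2T parameters, 40T tokens). Anthropic has not said how it would spend any extra compute; the two options below are illustrative assumptions.

```python
import math


def training_flops(params: float, tokens: float) -> float:
    # Rule of thumb (assumed): ~6 FLOPs per parameter per training token.
    return 6 * params * tokens


opus_params, opus_tokens = 2e12, 40e12      # my estimates for Claude 3 Opus (unconfirmed)
base = training_flops(opus_params, opus_tokens)
budget = 4 * base                           # the 4x compute threshold from the quote

# Option A: hold the dataset fixed and put all extra compute into parameters.
params_only = budget / (6 * opus_tokens)

# Option B (Chinchilla-style assumption): scale parameters and tokens by the same factor.
k = math.sqrt(budget / base)                # square root of 4, i.e. 2
balanced_params, balanced_tokens = opus_params * k, opus_tokens * k

print(f"Opus estimate: {base:.2e} FLOPs; 4x budget: {budget:.2e} FLOPs")
print(f"A: {params_only / 1e12:.0f}T params on {opus_tokens / 1e12:.0f}T tokens")
print(f"B: {balanced_params / 1e12:.0f}T params on {balanced_tokens / 1e12:.0f}T tokens")
```

Putting all of the extra compute into parameters gives the 8T figure above; growing parameters and tokens together gives roughly 4T parameters trained on 80T tokens instead.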

US FDA clears Neuralink's brain chip implant in second patient, WSJ reports (20/May/2024)

Neuralink expects to implant its device in the second patient in June and a total of 10 people this year, the report said, adding that more than 1,000 quadriplegics had signed up for its patient registry.

The company also aims to submit applications to regulators in Canada and Britain in the next few months to begin similar trials, according to the report.

The WSJ report is behind a paywall and doesn’t say much more.

Read more via Reuters.

What is Apple doing in AI? Summaries, cloud and on-device LLMs, OpenAI deal (19/May/2024)

[Apple] knows consumers will demand such a [ChatGPT] feature, and so it’s teaming up with OpenAI to add the startup’s technology to iOS 18, the next version of the iPhone’s software.

[Apple and OpenAI] are preparing a major announcement of their partnership at WWDC [10 June 2024], with Sam Altman-led OpenAI now racing to ensure it has the capacity to support the influx of [1.46 billion active iPhone users] later this year…

OpenAI’s GPT-4o model can hold a lifelike conversation, prepare users for a job interview, portray sarcasm and even serve as a customer service agent. It’s all ridiculously impressive — and scary to some extent.

Read more via Bloomberg.

Google’s 6th generation TPUs: Trillium (14/May/2024)

On Tuesday 14 May 2024, Google announced its sixth-generation TPU (tensor processing unit) called Trillium. The chip, essentially a TPU v6, boasts a 4.7× increase in peak compute performance per chip compared to TPU v5e, along with double the high-bandwidth memory (HBM) capacity and bandwidth. Trillium TPUs are over 67% more energy efficient than TPU v5e, and can scale up to 256 TPUs in a single high-bandwidth, low-latency pod.

[Trillium] hardware supported a number of the innovations we announced today at Google I/O, including new models like Gemini 1.5 Flash, Imagen 3, and Gemma 2.

The sixth-generation Trillium TPUs are a culmination of over a decade of research and innovation and will be available later this year [2024].

Read more via Google Cloud Blog.

Trillium will be used by many AI labs besides Google (outside labs can ‘hire’ access to the chips via Google Cloud), and should help new models train more parameters more quickly.
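For a rough sense of scale, the sketch below multiplies out the announced figures. The TPU v5e baseline of 197 bf16 TFLOPS per chip is an assumption taken from Google’s published v5e specifications, not from this announcement. Peak figures also ignore real-world utilization.

```python
V5E_PEAK_BF16_TFLOPS = 197   # assumed baseline: Google's published TPU v5e bf16 peak
TRILLIUM_SPEEDUP = 4.7       # per-chip peak compute gain vs TPU v5e (announcement)
CHIPS_PER_POD = 256          # maximum chips in one pod (announcement)

trillium_chip_tflops = V5E_PEAK_BF16_TFLOPS * TRILLIUM_SPEEDUP
pod_pflops = trillium_chip_tflops * CHIPS_PER_POD / 1_000   # 1 PFLOPS = 1,000 TFLOPS

print(f"Per chip: ~{trillium_chip_tflops:.0f} TFLOPS (peak, bf16)")
print(f"Per 256-chip pod: ~{pod_pflops:.0f} PFLOPS (peak, bf16)")
```

With that assumed baseline, a Trillium chip lands at roughly 926 TFLOPS and a full 256-chip pod at roughly 237 PFLOPS.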

Nvidia's next-gen Blackwell AI Superchips could cost up to $70K — fully-equipped server racks reportedly range up to $3M or more (14/May/2024)

Nvidia's Blackwell GPUs for AI applications are projected to be significantly more expensive than their predecessors, with a single GB200 Superchip potentially costing up to US$70,000. Analysts from HSBC estimate that servers featuring these GPUs, such as the Nvidia GB200 NVL72, could be priced around US$3 million. The advanced capabilities of these GPUs aim to provide substantial computing power for training large language models (LLMs).

Read more via Tom's Hardware.
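The two price estimates can be reconciled with simple arithmetic. This sketch assumes the NVL72 rack holds 36 GB200 Superchips, each pairing one Grace CPU with two Blackwell GPUs for 72 GPUs in total. It also uses the upper-end figures from the report.

```python
SUPERCHIP_PRICE_USD = 70_000     # upper-end GB200 Superchip estimate (report)
RACK_PRICE_USD = 3_000_000       # HSBC's GB200 NVL72 rack estimate (report)
SUPERCHIPS_PER_RACK = 36         # assumed NVL72 layout: 36 GB200 Superchips
GPUS_PER_SUPERCHIP = 2           # assumed: each GB200 pairs 1 Grace CPU with 2 Blackwell GPUs

gpus = SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP
superchip_total = SUPERCHIPS_PER_RACK * SUPERCHIP_PRICE_USD
remainder = RACK_PRICE_USD - superchip_total

print(f"{gpus} GPUs per rack")
print(f"Superchips: US${superchip_total:,}; everything else: US${remainder:,}")
print(f"All-in cost per GPU: US${RACK_PRICE_USD / gpus:,.0f}")
```

On these assumptions, the superchips account for about US$2.52M of a US$3M rack. That leaves roughly US$480K for everything else (presumably switches, networking, chassis and cooling), or about US$41.7K per GPU all-in.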

Baidu Earnings call: AI Now Generates 11% of Baidu Search Results (17/May/2024)

Robin Li, CEO of Baidu: ‘While traditional search is maturing, we are working hard to renovate the user experience with gen-AI. Right now, about 11% of our search result pages are filled with generated results. These results provide more accurate, better organized and direct answers to user’s questions, and in some cases enable users to do things they could not do before. We have not started the monetization of those gen-AI results, so it will take some time for revenue to catch up.’

Read a summary transcript via Investing.com.

Full subscribers will receive my mid-year AI report very shortly, before its public launch later in June 2024. We’re about halfway through this edition, with extensive Policy and Toys to Play With sections still to come, as well as a personal invite to our growing monthly Roundtable where The Memo readers share thoughts on AI…

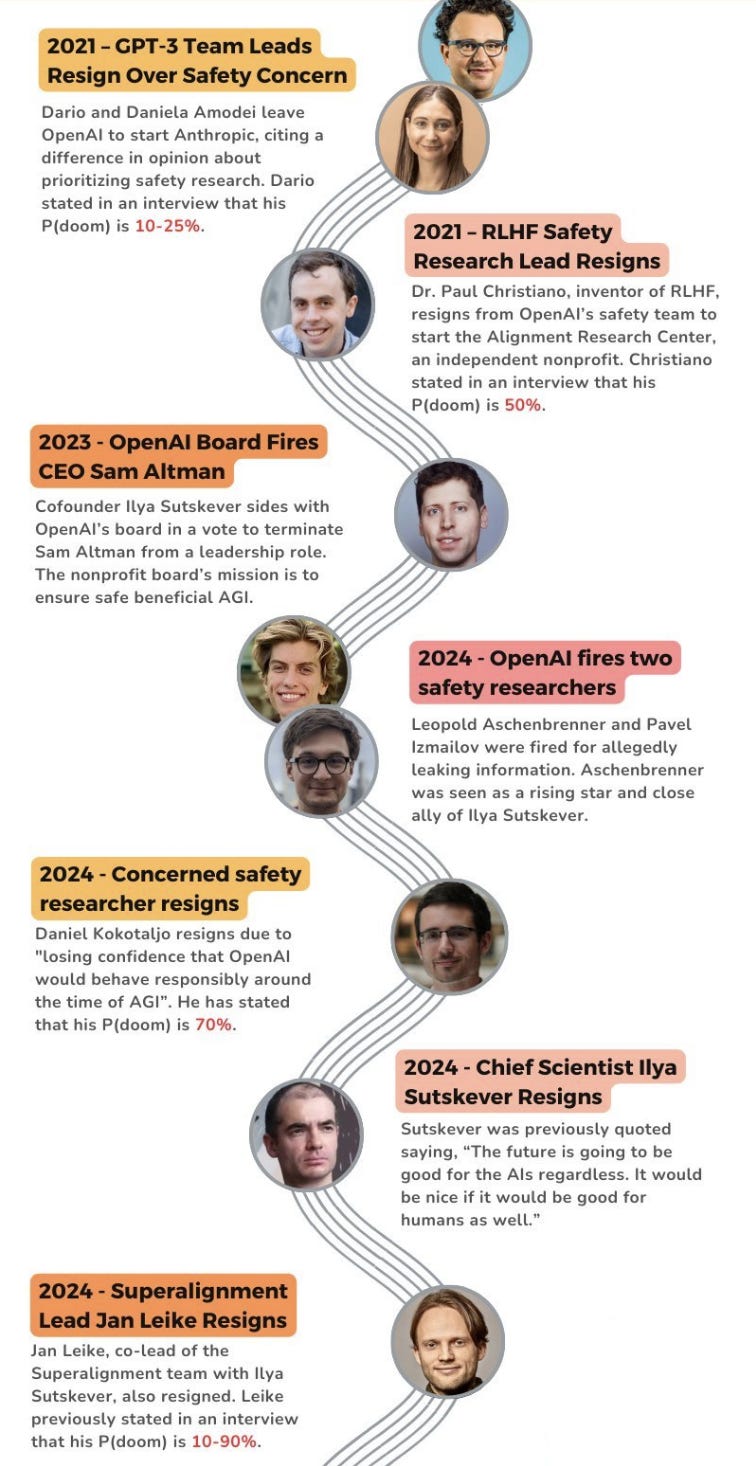

OpenAI resignations timeline viz (May/2024)

The only source I could find for this viz is via Reddit.

I talked a little bit about the OpenAI/Anthropic split in my Jan/2023 video (link).

UK engineering firm Arup falls victim to £20m deepfake scam (17/May/2024)

Arup, one of the world's leading engineering firms, confirmed it was the victim of a £20M fraud after a Hong Kong employee was duped by an AI-generated video call 'posing as senior officers of the company'.

…the employee had been invited on to a conference call with “many participants”. Because the participants “looked like the real people”, Chan said, the employee then transferred a total of HK$200m to five local bank accounts via 15 transactions.

The Hong Kong police force said in a statement on Friday that an employee had been “deceived of some HK$200m after she received video conference calls from someone posing as senior officers of the company requesting to transfer money to designated bank accounts”.

Read more via The Guardian.

AI is taking over accounting jobs as people leave the profession (17/May/2024)

The accounting profession is experiencing a significant talent drain, with over 300,000 US accountants and auditors leaving their jobs between 2019 and 2021. This exodus, coupled with the reluctance of new graduates to enter the field, is pushing firms to adopt AI and automation to fill the gaps. Puzzle, an accounting startup, is leveraging AI to streamline financial management, reducing human error and delivering real-time financial statements.

Read more via Forbes.

Replit cuts staff by 30 amid aggressive AI push in software development (16/May/2024)

Replit, a cloud-based platform for software development, has laid off 30 employees as it pivots towards integrating AI into its tools. Replit’s CEO emphasized the company's commitment to making programming more accessible through AI, despite the human cost involved in these changes. The layoffs are part of a broader trend among tech companies to streamline operations amidst economic uncertainties.

Read more via VentureBeat.

Here’s my older curation of related resources: https://lifearchitect.ai/economics/

BlackRock sent its team a memo secretly written by ChatGPT—and one major critique emerged (16/May/2024)

…we had a presentation to BlackRock’s board several months ago [around Nov/2023]. And the presentation was on GenAI and what our strategy associated with GenAI is. And as part of that presentation, because we normally would write a memo as part of the board materials, we had the idea of [using] ChatGPT to write the memo.

We took what our strategy document was, and we fed it into ChatGPT with a very simple prompt. And that prompt was, “write an executive summary.” I think it was 500 words, maybe 1000 words for the board explaining our strategy, some of the risks and so on.

So, it gave us a memo. And then we gave that memo to a bunch of people internally to read. And what was most amazing to me, is and we have some tough graders within BlackRock, let me be very clear. As we gave the memo to those tough graders to read, everyone had comments. And the comments were typically things like, ‘I hate the tone.’ ‘You’re selling yourself short.’ ‘What about this?’

No one realized it was actually written by a computer.

This is a fun read via Fortune.

Policy

AI 'godfather' says universal basic income will be needed (18/May/2024)

Geoffrey Hinton, often referred to as the ‘godfather of AI’, has advocated for the implementation of a universal basic income (UBI) to address potential job displacement caused by advancements in artificial intelligence. Hinton emphasizes that as AI systems become more capable, many jobs currently performed by humans might be automated, necessitating a societal shift towards UBI to ensure economic stability.

Read more via BBC.

“Oh, really? ‘Sky blue’, says star witness.” (Family Guy quote via Yarn)

See also: My press releases for the last three years:

It is great that Hinton is advising the UK Govt, especially about a topic as challenging as UBI. And clearly UK PM Rishi Sunak is sitting up and taking notice.

Top US and Chinese officials begin talks on AI in Geneva (14/May/2024)

High-level envoys from the US and China met in Geneva to discuss the risks associated with emerging AI technologies and to set shared standards. This meeting marks the beginning of an inter-governmental dialogue on AI, agreed upon by Presidents Joe Biden and Xi Jinping. Experts believe the discussions, while unlikely to produce immediate concrete results, are crucial to preventing the weaponization or abuse of AI, particularly in disinformation campaigns.

China has been emphasizing that the United Nations should play the main role in global AI governance. This was also included in the ‘China-France Joint Statement on AI and Global Governance’ (7/May/2024).

The talks were closed door, and there has been no joint statement or consensus issued after the first dialogue.

Read more via AP News.

Read more via Reuters.

US Senate leadership releases sweeping AI policy agenda calling for US$32B in R&D funding (16/May/2024)

On Wednesday, 15 May 2024, a bipartisan US Senate working group led by Majority Leader Sen. Chuck Schumer released a report titled ‘Driving US Innovation in Artificial Intelligence: A Roadmap for Artificial Intelligence Policy in the United States Senate’.

The agenda covers workforce modernization, data privacy, AI transparency, and protection against the acquisition of sensitive technologies by foreign adversaries. Though comprehensive AI legislation may not materialize this Congress, the roadmap underscores AI's priority in Senate leadership.

The AI Working Group encourages the executive branch and the Senate Appropriations Committee to continue assessing how to handle ongoing needs for federal investments in AI during the regular order budget and appropriations process, with the goal of reaching as soon as possible the spending level proposed by the National Security Commission on Artificial Intelligence (NSCAI) in their final report: at least US$32 billion per year for (non-defense) AI innovation.

Download the report (31 pages, PDF).

Read more via Lexology.

AI's cozy crony capitalism (19/May/2024)

Regulating artificial intelligence presents a ‘Baptists and bootleggers’ problem. OpenAI founder Sam Altman has called for government regulation to mitigate AI risks, which has received support from major AI players like Microsoft and Google. This collaborative approach between industry leaders and government could lead to a new form of crony capitalism, potentially stifling innovation in the AI sector.

Read more via Reason.

Toys to Play With

Models Table animation (May/2024)

Data science analyst Eneda Muja created this interesting animation of my Models Table data.

View original post by Eneda.

MMLU-Pro (May/2024)

An independent research team decided to improve upon MMLU. Jack Clark from Anthropic provided some clear analysis of this benchmark (20/May/2024):

What they did: MMLU challenges LLMs to answer multiple choice questions, picking from four possible answers. MMLU-Pro expands the number of potential answers to 10, which means that randomly guessing will lead to significantly lower scores. Along with this, they expand on the original MMLU by adding in hard questions from Scibench (science questions from college exams), TheoremQA, and STEM websites, as well as sub-slicing the original MMLU to "remove the trivial and ambiguous questions". In total, they add 12,187 questions - 5,254 new questions along with 6,933 selected from MMLU.

Results - it's hard: MMLU-Pro seems meaningfully harder; Claude 3 Sonnet saw its performance fall from 0.815 on MMLU to 0.5793 on MMLU Pro. Other models have even more dramatic falls - Mixtral-8x7B-v0.1 sees its performance drop from 0.706 to 0.3893.

See it: https://huggingface.co/datasets/TIGER-Lab/MMLU-Pro
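The numbers in Jack’s summary are easy to check. This short Python sketch computes the random-guessing baseline for 4 versus 10 answer options, and the relative drop for each quoted score. The helper names are my own, and nothing here loads the dataset itself.

```python
def chance_accuracy(num_options: int) -> float:
    # Expected score from uniform random guessing with exactly one correct option.
    return 1 / num_options


def relative_drop(before: float, after: float) -> float:
    # Fraction of the original score lost when moving to the harder benchmark.
    return (before - after) / before


print(f"Chance: MMLU {chance_accuracy(4):.0%}, MMLU-Pro {chance_accuracy(10):.0%}")

# Scores quoted above, as (MMLU, MMLU-Pro).
quoted = {"Claude 3 Sonnet": (0.815, 0.5793), "Mixtral-8x7B-v0.1": (0.706, 0.3893)}
for model, (mmlu, mmlu_pro) in quoted.items():
    print(f"{model}: {relative_drop(mmlu, mmlu_pro):.1%} relative drop")

# The quoted question counts add up: new questions + questions kept from MMLU.
assert 5_254 + 6_933 == 12_187
```

Chance accuracy falls from 25% to 10%. In relative terms, Claude 3 Sonnet loses about 28.9% of its MMLU score and Mixtral-8x7B-v0.1 loses about 44.9%.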

Columns: your AI data storyteller (May/2024)

Columns is an AI-driven application designed to transform complex data into engaging narratives. The platform now leverages GPT-4o to help users generate insightful stories from raw data, making it easier to understand and communicate information effectively.

Try it here (free, no login): https://columns.ai/chatgpt

Discussion: https://news.ycombinator.com/item?id=40405380

GPT-burn: Implementation of the GPT-2 architecture in Rust + Burn (May/2024)

This project aims to be a clean and concise re-implementation of GPT-2. The model implementation, contained in src/model.rs, is under 300 lines of code. While this was a fun exercise mostly for educational purposes, it demonstrates the utility of Rust and Burn in the machine learning domain: The entire project compiles into a single binary, making deployment relatively straightforward.

View the repo: https://github.com/felix-andreas/gpt-burn
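GPT-burn’s model fits in under 300 lines partly because the GPT-2 architecture is so regular. As an illustration (in Python rather than Rust, and not taken from the repo), this sketch counts GPT-2’s weights from its hyperparameters. It assumes the standard design: learned position embeddings, a fused QKV projection, a 4× MLP, two LayerNorms per block, and an output head tied to the token embedding. The GPT-2 XL hyperparameters (48 layers, width 1,600, 50,257-token vocabulary, 1,024-token context) are taken from the GPT-2 paper.

```python
def gpt2_param_count(n_layer: int, d_model: int, vocab: int, n_ctx: int) -> int:
    """Count weights and biases in a GPT-2 style decoder whose output head reuses the token embedding."""
    d = d_model
    attention = (d * 3 * d + 3 * d) + (d * d + d)   # fused Q/K/V projection, then output projection
    mlp = (d * 4 * d + 4 * d) + (4 * d * d + d)     # expand to 4d, then project back to d
    layer_norms = 2 * (2 * d)                       # two LayerNorms per block, each with scale and shift
    per_block = attention + mlp + layer_norms
    embeddings = vocab * d + n_ctx * d              # token embeddings + learned position embeddings
    final_norm = 2 * d                              # LayerNorm after the last block
    return n_layer * per_block + embeddings + final_norm


# Assumed GPT-2 XL hyperparameters (the "1.5B" model), per the GPT-2 paper.
xl = gpt2_param_count(n_layer=48, d_model=1600, vocab=50257, n_ctx=1024)
small = gpt2_param_count(n_layer=12, d_model=768, vocab=50257, n_ctx=1024)
print(f"GPT-2 XL: {xl:,} parameters; GPT-2 small: {small:,}")
```

This reproduces the ‘1.5B’ of GPT-2 1.5B (1,557,611,200 weights). The same function gives 124,439,808 for the smallest GPT-2.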

Turn your data into APIs for text generation and natural language search (May/2024)

Selfie is a local-first, open-source project designed to turn your data into APIs for text generation and natural language search. It supports personalized interactions for chatbots, storytelling, games, and more, using local or hosted language models. The platform offers a web-based UI for easy data import and interaction.

View the repo: https://github.com/vana-com/selfie

Llama 3 implementation one matrix multiplication at a time (May/2024)

Feeling super nerdy? This code repository provides an implementation of the Llama 3 model from scratch, focusing on matrix multiplication. The project includes code for loading tensors directly from Meta's model file for Llama 3, and uses tiktoken as the tokenizer.

View the repo: https://github.com/naklecha/llama3-from-scratch
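In the same spirit, here is a tiny NumPy sketch of the matrix-multiplication core of one attention step: RMSNorm, query/key/value projections, scaled scores, a causal mask and a softmax. It uses random weights rather than Meta’s checkpoint and a single head. It also leaves out rotary position embeddings (RoPE) and grouped-query attention, both of which the real Llama 3 uses. Treat it as a generic illustration, not the repo’s code.

```python
import numpy as np


def rms_norm(x: np.ndarray, weight: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    # Divide each row by its root-mean-square (no mean subtraction), then apply a learned scale.
    return x / np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps) * weight


def causal_attention(x: np.ndarray, wq: np.ndarray, wk: np.ndarray, wv: np.ndarray) -> np.ndarray:
    q, k, v = x @ wq, x @ wk, x @ wv                          # (seq, d_model) @ (d_model, d_head)
    scores = q @ k.T / np.sqrt(q.shape[-1])                   # (seq, seq) scaled dot products
    future = np.triu(np.ones(scores.shape, dtype=bool), k=1)  # True above the diagonal
    scores = np.where(future, -np.inf, scores)                # each token sees only itself and the past
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)            # row-wise softmax
    return weights @ v                                        # weighted sum of value vectors


rng = np.random.default_rng(0)
seq_len, d_model, d_head = 5, 16, 8
x = rng.standard_normal((seq_len, d_model))                   # stand-in for token embeddings
wq, wk, wv = (rng.standard_normal((d_model, d_head)) for _ in range(3))
h = rms_norm(x, np.ones(d_model))
out = causal_attention(h, wq, wk, wv)
print(out.shape)
```

Because of the mask, the first token can only attend to itself, so its output is just its own value vector. Changing a later token never alters earlier outputs.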

Experts.js: The easiest way to create and deploy OpenAI's Assistants (May/2024)

Experts.js simplifies the creation and deployment of OpenAI's Assistants, enabling them to be linked together as Tools to form advanced Multi AI Agent Systems. This framework leverages OpenAI's new Assistants API, allowing for expanded memory and attention to detail by integrating various tools and utilizing a managed context window called a Thread. Key features include the ability to handle instructions up to 256,000 characters and efficient file search across up to 10,000 files per assistant.

View the repo: https://github.com/metaskills/experts

Benchmark leaderboard by OpenAI (May/2024)

This repository contains a lightweight library for evaluating language models. It emphasizes the zero-shot, chain-of-thought setting with simple instructions, aiming to reflect the models' performance in realistic usage. The repository includes evaluations for various benchmarks like MMLU, MATH, and HumanEval, and supports APIs for OpenAI and Claude.

View the repo with leaderboard: https://github.com/openai/simple-evals
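Zero-shot chain-of-thought evaluation means the model writes free-form reasoning, so the grader has to pull out its final choice. The sketch below shows one way to do that for a four-option benchmark like MMLU. The ‘Answer: X’ convention, the regular expression and the helper names are assumptions for illustration, not code copied from simple-evals.

```python
import re
from typing import List, Optional

# Hypothetical convention: the prompt asks the model to end with a line like "Answer: C".
ANSWER_PATTERN = re.compile(r"(?i)answer\s*:\s*([A-D])")


def extract_choice(response: str) -> Optional[str]:
    """Return the last 'Answer: X' letter found in a chain-of-thought response, or None."""
    matches = ANSWER_PATTERN.findall(response)
    return matches[-1].upper() if matches else None


def accuracy(responses: List[str], gold: List[str]) -> float:
    # A response with no parsable answer simply counts as wrong.
    correct = sum(extract_choice(r) == g for r, g in zip(responses, gold))
    return correct / len(gold)


responses = [
    "Water boils at 100 C at sea level, so the answer is B.\nAnswer: B",
    "Let me think step by step... answer: d",
    "I am not sure.",
]
print(accuracy(responses, ["B", "D", "A"]))
```

Here two of the three responses are graded correct, so accuracy is 2/3. The third has no final ‘Answer:’ line and counts as wrong.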

Synthetic data from scratch (May/2024)

In one of the recent livestreams, I stepped through the creation of synthetic data from scratch. No rehearsal, on the spot, using theory from Microsoft’s phi-1 paper (‘Textbooks Are All You Need’) and steps from Hugging Face’s Cosmopedia.

Read the paper: https://arxiv.org/abs/2306.11644

See the steps: https://huggingface.co/blog/cosmopedia

Read the shared document: https://docs.google.com/document/d/1dNrRumSf6Umq63r6cirvpG5ahizg8HgqJCl_RzJ6AhM

Watch the video (link):
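A core idea in both phi-1 and Cosmopedia is that synthetic data stays diverse only if the prompts are diverse, so many varied prompts are built by combining seed lists. This Python sketch shows that prompt-building step only. The topics, audiences, formats and template are hypothetical placeholders, and the step of sending each prompt to an LLM is left out.

```python
import itertools
import random
from typing import List, Optional

# Hypothetical seed lists; a real pipeline would draw thousands of seeds from curated sources.
TOPICS = ["photosynthesis", "binary search", "compound interest"]
AUDIENCES = ["young children", "high school students", "college students"]
FORMATS = ["textbook section", "blog post", "step-by-step tutorial"]

TEMPLATE = (
    "Write a {fmt} about {topic} for {audience}. "
    "Explain each idea clearly and include one worked example."
)


def build_prompts(limit: Optional[int] = None, seed: int = 0) -> List[str]:
    """Combine every topic/audience/format triple into a prompt, then shuffle reproducibly."""
    prompts = [
        TEMPLATE.format(fmt=fmt, topic=topic, audience=audience)
        for topic, audience, fmt in itertools.product(TOPICS, AUDIENCES, FORMATS)
    ]
    random.Random(seed).shuffle(prompts)  # fixed seed: same order on every run
    return prompts if limit is None else prompts[:limit]


prompts = build_prompts()
print(len(prompts))   # 3 topics x 3 audiences x 3 formats
print(prompts[0])
```

Three short seed lists already yield 27 distinct prompts. Each extra list multiplies the count again, which is why seed variety matters more than any single template.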

Flashback

How did we get here? We’ve gone as far back as 1959 in The Memo edition 21/Oct/2022, where we looked at the namesake for this advisory, the MIT AI memos. But the real cornerstone (leaving out Turing’s 1940s documents for the moment) was the Dartmouth Workshop in 1956 (that’s 68 years ago).

Dartmouth College is an Ivy League university founded in 1769 (that’s 255 years ago).

Sidenote: Can you name all eight members of the Ivy League?

You’ll find Dartmouth College about two hours north of Boston. More specifically, you’ll find it along the Connecticut River (and hosting a part of the Appalachian Trail) in Hanover, Grafton County, New Hampshire, United States.

This month marks the 68th anniversary of the workshop preparation. On 26 May 1956, John McCarthy pulled together 11 core attendees including Marvin Minsky.

Around 18 June 1956, the earliest participants arrived at the Dartmouth campus to join John McCarthy who already had an apartment there.

The workshop was held on the entire top floor of the Dartmouth Math Department for roughly eight weeks, from 18 June 1956 to 17 August 1956.

Their research, discoveries, and insights drove the entire field of artificial intelligence, and set the stage for neural networks—still the core concept of today’s models.

Wiki: https://en.wikipedia.org/wiki/Dartmouth_workshop

The official but brief page by Dartmouth.edu

Read the 13-page 1955 Dartmouth proposal for the 1956 workshop, with its US$13.5K budget (PDF).

The Dartmouth workshop is also on my AI timeline: https://lifearchitect.ai/timeline/

Next

On 14/May/2024, Elon said ‘Major upgrade to Grok coming in about 39 days [taking us to a release date of around Saturday, 22 June 2024].’
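Elon’s ‘about 39 days’ can be checked with Python’s standard `datetime` module. The only inputs are the post date and the day count quoted above.

```python
from datetime import date, timedelta

announced = date(2024, 5, 14)         # date of Elon's post
eta = announced + timedelta(days=39)  # "in about 39 days"
print(eta.isoformat(), eta.strftime("%A"))
```

This lands on 2024-06-22, a Saturday.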

The next roundtable will be:

Life Architect - The Memo - Roundtable #11

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 1/Jun/2024 at 5PM Los Angeles

Saturday 1/Jun/2024 at 8PM New York

Sunday 2/Jun/2024 at 10AM Brisbane (new primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!
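If you would rather check the times locally than via Google, this sketch converts the Brisbane reference time using Python’s standard library. It hard-codes the UTC offsets assumed to apply on 1 to 2 June 2024: Pacific and Eastern daylight time in the US, and AEST in Brisbane, which does not observe daylight saving.

```python
from datetime import datetime, timedelta, timezone

# UTC offsets assumed to apply on 1-2 June 2024 (US daylight time; Brisbane has no DST).
ZONES = {
    "Los Angeles (PDT)": timezone(timedelta(hours=-7)),
    "New York (EDT)": timezone(timedelta(hours=-4)),
    "Brisbane (AEST)": timezone(timedelta(hours=10)),
}

# Reference time: Sunday 2 June 2024, 10AM in Brisbane.
start = datetime(2024, 6, 2, 10, 0, tzinfo=ZONES["Brisbane (AEST)"])
for name, tz in ZONES.items():
    print(f"{name}: {start.astimezone(tz):%a %d %b %Y, %I:%M %p}")
```

For other dates, `zoneinfo.ZoneInfo("America/Los_Angeles")` and similar IANA zone names handle daylight saving automatically.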

All my very best,

Alan

LifeArchitect.ai