The Memo - 20/Apr/2023

The smartest person in the world uses GPT-4 as an assistant, Stability AI's StableLM 7B & 65B, China's new AI laws, and much more!

FOR IMMEDIATE RELEASE: 20/Apr/2023

OpenAI President Greg Brockman (13/Apr/2023):

The upcoming transformative technological change of AI is something that is simultaneously cause for optimism and concern — the whole range of emotions is justified and is shared by people within OpenAI, too.

It’s a special opportunity and obligation for us all to be alive at this time,

to have a chance to design the future together.

Welcome back to The Memo.

This is another very long edition, including policy analysis of Beijing’s strict new AI measures, and the EFF’s legal summary of text-to-image models and datasets.

In the Toys to play with section, we look at GPT-4 generated food menus, my favourite macOS tool to use ChatGPT/GPT-4 with a shortcut key, and a new Whisper/GPT-4 language app for Android, 20+ interfaces for ChatGPT(!), film by GPT-4/Midjourney/Kaiber, and much more…

The BIG Stuff

Stability AI releases StableLM 7B, 65B coming soon (20/Apr/2023)

As forecast in editions of The Memo for the last few weeks, Stability AI (the people behind Stable Diffusion) have finished training the 3B and 7B versions of their StableLM language models, with a 65B-parameter version due shortly. Trained on up to 1.5T tokens (>24:1), these models are very close to the scale of DeepMind Chinchilla 70B announced this time last year (Apr/2022). Instruct-tuned versions are also available ‘using a combination of five recent datasets for conversational agents: Stanford's Alpaca, Nomic-AI's gpt4all, RyokoAI's ShareGPT52K datasets, Databricks labs' Dolly, and Anthropic's HH’.

The models are licensed under Creative Commons CC BY-SA-4.0. My testing showed that the chat version of StableLM 7B seems to be pretty poor, though like Chinchilla 70B I do expect the StableLM 65B model to outperform GPT-3 175B on some benchmarks.

Demo: https://huggingface.co/spaces/stabilityai/stablelm-tuned-alpha-chat

Announcement by Stability AI with example screenshots.

Repo: https://github.com/stability-AI/stableLM/

View my timeline of language models: https://lifearchitect.ai/timeline/

Exclusive: The smartest person in the world uses GPT-4 as an assistant (13/Apr/2023)

Many of you know of my former work in the human intelligence and gifted education fields. But did you know that I worked closely with Professor Miraca Gross, the woman responsible for finding and nurturing child prodigy Terence Tao back in the 1980s?

(Bonus + sidenote: Terry was also invited to write a foreword to my 2016 book, Bright. That book went on to be featured at Elon Musk’s school, at major universities, and was even sent to the Moon. Readers will find a complimentary copy at the end of this edition.)

Terry has an IQ above 220. The numbers get a bit fuzzy above IQ 200, but statistically he is in the >99.9999999995th percentile; or 1 in >190 billion people. He attended Flinders University in Adelaide (my alma mater) when he was 9 years old, and became a Professor at UCLA by the age of 24. You can read more about him on wiki or briefly in my article 98, 99… finding the right classroom for your child.

As perhaps the quantifiably smartest person in the world, Terry is a great yardstick for human vs machine intelligence and ‘integrated AI’. He recently told a maths forum:

Today was the first day that I could definitively say that #GPT4 has saved me a significant amount of tedious work. As part of my responsibilities as chair of the ICM Structure Committee, I needed to gather various statistics on the speakers at the previous ICM (for instance, how many speakers there were for each section, taking into account that some speakers were jointly assigned to multiple sections). The raw data (involving about 200 speakers) was not available to me in spreadsheet form, but instead in a number of tables in web pages and PDFs. In the past I would have resigned myself to the tedious task of first manually entering the data into a spreadsheet and then looking up various spreadsheet functions to work out how to calculate exactly what I needed; but both tasks were easily accomplished in a few minutes by GPT4, and the process was even somewhat enjoyable (with the only tedious aspect being the cut-and-paste between the raw data, GPT4, and the spreadsheet).

Am now looking forward to native integration of AI into the various software tools that I use, so that even the cut-and-paste step can be omitted. (Just being able to resolve >90% of LaTeX compilation issues automatically would be wonderful...)

Here is one of the prompts that Terry used:

Read Terry’s post: https://mathstodon.xyz/@tao/110172426733603359

Exclusive: Sunsetting large language models: AI21, OpenAI (Mar/2023)

When Israeli AI lab AI21 released Jurassic-2 178B on 9/Mar/2023, they quietly noted in their online docs that the existing ‘Jurassic-1 [178B and other] models will be deprecated on June 1st, 2023’.

In Mar/2023, OpenAI also gave just a few days notice before quietly removing the GPT-3.5 base model. According to many, this was the most powerful model: the non-instruction tuned (raw) code-davinci-002 model. The removal was discussed on Twitter and in the OpenAI forums, and you can visualize the model’s place in my GPT-3 family chart of models. (Note that the model is still available via Microsoft Azure. It should also be noted that OpenAI’s older GPT-3 models are still available, including GPT-3 davinci 175B which celebrates its 3-year anniversary on 28/May/2023.)

Some of the reasons for removing the powerful code-davinci-002 model may include:

Cost. The base model may have been more expensive when running inference. But users are prepared to pay for the base model.

Competition. Model outputs can be used to (illegally) train new instruction-tuned models like Stanford’s Alpaca. But Stanford used the instruction-tuned text-davinci-003.

Safety. Keep users within guardrails for social and political reasons. But why is the base version of davinci still available?

Proprietary/enterprise use. As noted, Microsoft Azure still provides the model via their enterprise offerings, allowing higher revenues from large corporations.

The issue of AI labs opening and then later removing models is of grave concern, and should serve as a reminder to organizations that they need to introduce redundancy into their AI setup. While the maxim ‘not your server, not your data’ rings true, APIs to models running on managed servers are still the best way to access powerful intelligence, and local or on-premise inference runs a distant second. I’ve previously compared this with leasing a Boeing 787 Dreamliner (GPT-4) versus building your own little Cessna (Flan-T5-xxl), or worse, a paper airplane (Alpaca, GPT4All, and the current crop of laptop models)!

Read the unrolled thread about OpenAI removing access to code-davinci-002.

Here’s the related viz:

Evolving models (13/Apr/2023)

Further to the OpenAI President’s quote at the beginning of this edition, he also mentioned that:

…there are creative ideas people don’t often discuss which can improve the safety landscape in surprising ways — for example, it’s easy to create a continuum of incrementally-better AIs (such as by deploying subsequent checkpoints of a given training run), which presents a safety opportunity very unlike our historical approach of infrequent major model upgrades.

It is likely that the concept of rolling out a new version of a language model (like GPT-2, GPT-3, GPT-4) every year or two is a thing of the past. Expect to see ‘evolving’ models soon, and then ‘continuous learning’ models—those that can learn from users and data in realtime—next.

Apple mixed-reality headset due in a few weeks (Apr/2023)

The rumour mill has packed more and more substance behind the news: Apple will release a new ‘mixed-reality’ (AR/VR) headset in Jun/2023. Bloomberg has the details (16/Apr/2023). I believe that this headset will be a perfect platform for easy interaction with large language models. Consider these use cases:

Personalized chatbot backed by GPT-n.

Learning and ‘injecting’ personalized knowledge via private tutors.

Socialisation and conversation (including emails) via large language models: consolidate/simplify writing, and consolidate/simplify reading.

Creating documents (doc, xls, ppt) rapidly via large language models.

Creating text-to-video (or speech-to-video) instantly (see video below).

Creating entire worlds and universes via text or speech.

Using imagination to solve any problem that can be solved using language…

Most of this functionality is already available, it is just that this will now be strapped to our head and available to our eyeballs without pulling a phone from a pocket. Here’s a 10-second rendered video I put together using The Memo header image and Kaiber.ai:

The Interesting Stuff

Alibaba Tongyi Qianwen chatbot embedded in smart speakers (13/Apr/2023)

[Alibaba] unveiled Tongyi Qianwen, a large language model that will be embedded in its Tmall Genie smart speakers and workplace messaging platform DingTalk.

“We are at a technological watershed moment, driven by generative AI and cloud computing,” Zhang said.

Take a look (right-click > translate in your browser): https://tongyi.aliyun.com/

GPT-4 and PaLM now serving patients in the medical field (Apr/2023)

In my Dec/2021 report to the UN, I wrote extensively about how large language models will benefit the world. One of the fields I highlighted was health and wellbeing. We’re now seeing powerful and capable large language models writing messages to patients, and analyzing medical records via both OpenAI GPT-4 and Google PaLM 540B.

On Monday, Microsoft and Epic Systems announced that they are bringing OpenAI's GPT-4 AI language model into health care for use in drafting message responses from health care workers to patients and for use in analyzing medical records while looking for trends.

Read more about GPT-4 and Epic via Ars.

Read limited preview of the exclusive PaLM announce via Stat: https://archive.is/AZTbz

Drew Carey used ChatGPT and AI voice for his radio show (31/Mar/2023)

Carey used an artificially generated version of his voice [via ElevenLabs] to handle most of his DJ work, reading a script written by ChatGPT. His AI voice kicked off the show, introduced upcoming songs and recapped what listeners were hearing. As an experiment to see just how far AI could go on the radio, the episode was mostly a success.

Listen to a 1-min clip of the episode via SoundCloud. (Backup mp3)

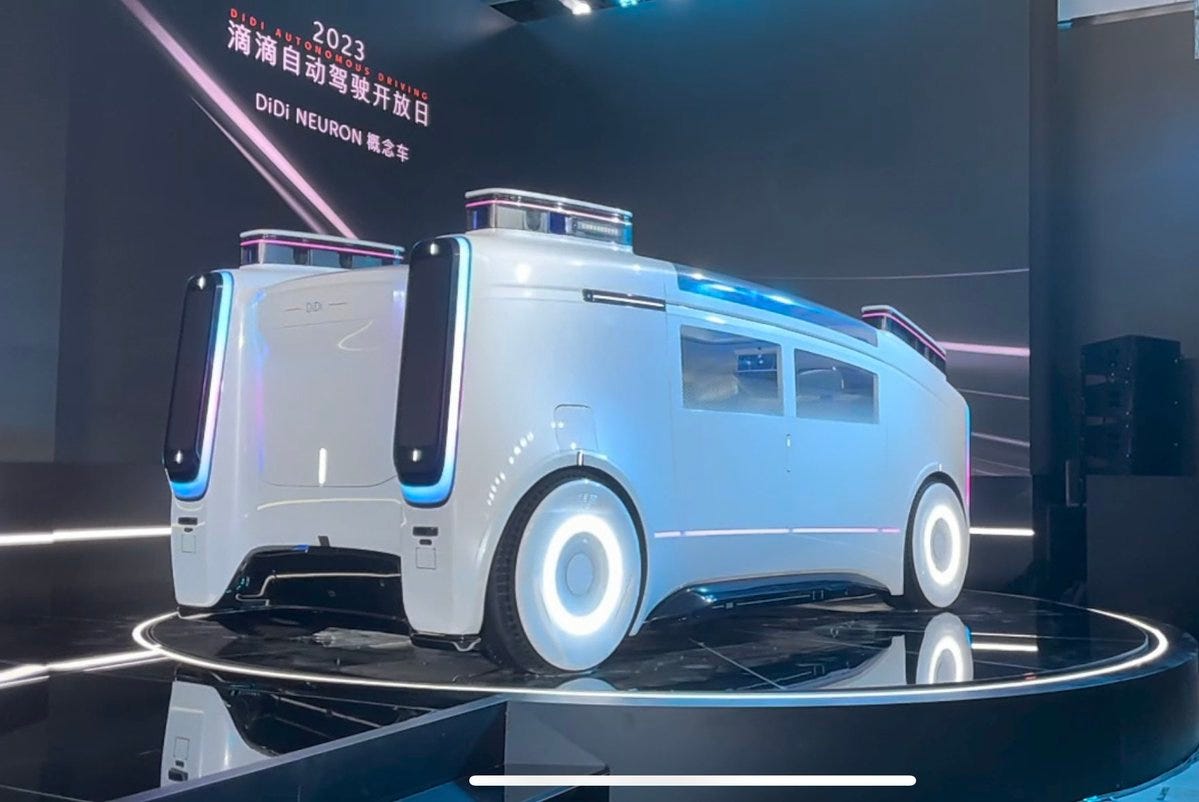

Didi Neuron self-driving robotaxi launching soon (14/Apr/2023)

[Didi will] deploy self-developed robotaxis to the public on a 24/7 basis by 2025 [Alan: in about 20 months from this edition], which will complement its network of millions of drivered cars… Like most robotaxi companies, Didi opted to partner with OEMs to build its autonomous fleets. It’s so far used vehicles from Lincoln, BYD, Nissan and Volvo [in use for the Neuron].

…a spacious rectangular van with no steering wheel or driver’s seat (shown above). Instead, “Neuron”, what the car is called, features a large in-car infotainment screen and has a robotic arm that can pick up suitcases and hand passengers a bottle of water.

Elon Musk Creates New Artificial Intelligence Company X.AI + TruthGPT (15/Apr/2023)

As part of his AI ambitions, Mr. Musk has spent the past few months recruiting researchers with the goal of creating a rival effort to OpenAI, the artificial intelligence company that launched the viral chatbot ChatGPT in November, according to researchers familiar with the outreach. OpenAI has set off a fever pitch of investor interest in the sector…

[Elon] recently recruited Igor Babuschkin, a scientist at artificial intelligence lab DeepMind, owned by Alphabet Inc., to helm the new effort. He has also tried to recruit employees at OpenAI to join the new lab…

Read more via WSJ: https://archive.is/qzbbb

Elon is also talking about training a new model called ‘TruthGPT’. I remain unconvinced, but if you’d like to hear a little more of his thesis, read the article here via The Verge.

AI-generated image wins Sony World Photography Awards 2023 (13/Mar/2023)

REFUSAL OF THE PRIZE of the Sony World Photography Awards, Open Competition / Creative Category at the London Award ceremony.

“Thank you for selecting my image and making this a historic moment, as it is the first AI generated image to win in a prestigious international PHOTOGRAPHY competition.

How many of you knew or suspected that it was AI generated? Something about this doesn’t feel right, does it? AI images and photography should not compete with each other in an award like this. They are different entities. AI is not photography. Therefore I will not accept the award.I applied as a cheeky monkey, to find out, if the competitions are prepared for AI images to enter. They are not… Many thanks. Boris.”

…

“The work [Sony] has chosen is the result of a complex interplay of prompt engineering, inpainting and outpainting [via OpenAI DALL-E 2] that draws on my wealth of photographic knowledge. For me, working with AI image generators is a co-creation, in which I am the director.”

The photo and award were later removed by Sony.

Read Boris’ take: https://www.eldagsen.com/sony-world-photography-awards-2023

The AI Alignment Problem (15/Apr/2023)

I had a great time speaking with Philosopher John Patrick Morgan about the AI Alignment Problem.

Read more: https://lifearchitect.ai/alignment/

Watch the video (49mins):

Of The Memo’s thousands of readers, many paid subscribers come from Google, using various pseudonyms. Now I’m sure it’s just a coincidence, but a few days after the release of this video, Google’s CEO echoed some of the statements in the video, saying:

“[Regarding AI alignment,] It’s not for a company to decide,” Pichai said. “This is why I think the development of this needs to include not just engineers but social scientists, ethicists, philosophers and so on.” - via CNBC (17/Apr/2023)

OpenAI leapfrogs Diffusion models with ‘Consistency models’ (2/Mar/2023)

OpenAI have published a paper on Consistency models for text-to-image generation.

Diffusion models have made significant breakthroughs in image, audio, and video generation, but they depend on an iterative generation process that causes slow sampling speed and caps their potential for real-time applications. To overcome this limitation, we propose consistency models, a new family of generative models that achieve high sample quality without adversarial training. They support fast one-step generation by design, while still allowing for few-step sampling to trade compute for sample quality. They also support zero-shot data editing, like image inpainting, colorization, and super-resolution, without requiring explicit training on these tasks.

Given the implications of this and our exponential timelines, I expect to see Consistency models overtake Diffusion (and Autoregressive) very soon.

And I wonder if Stable Diffusion will rename itself to Stable Consistency…

Read the paper: https://arxiv.org/abs/2303.01469

(Note: It is a very good thing that OpenAI are still publishing real papers with real maths versus their nearly-useless ‘technical report’ about GPT-4.)

View the repo: https://github.com/openai/consistency_models

LAION Open Assistant released (15/Apr/2023)

Based on Pythia-12B, with a version of LLaMA-30B also available.

View the repo: https://github.com/LAION-AI/Open-Assistant/tree/main/model/model_training

Read the paper: https://www.ykilcher.com/OA_Paper_2023_04_15.pdf

A note on ‘autonomous’ looping scripts like Auto-GPT (Apr/2023)

You may be wondering why I haven’t spent much time analyzing new ‘autonomous agents’ like BabyAGI, Auto-GPT, and AgentGPT…

These looping scripts are just not as fascinating (to me) as they are being made out to be. Their functionality is the equivalent of a goto statement from 1980s BASIC. There are bigger and better things available right now, some of which haven’t even been discovered in the existing models.

However, I will provide a high-level view of these scripts and compelling use cases in a future edition of The Memo.

Policy

EFF says training AI models on datasets is ‘fair use’ (3/Apr/2023)

The Electronic Frontier Foundation (EFF) is an international non-profit digital rights group based in San Francisco, California. They are highly respected in the intersection of law and technology. Their latest analysis of AI models is very long, and worth reading.

Like copying to create search engines or other analytical uses, downloading images to analyze and index them in service of creating new, noninfringing images is very likely to be fair use…

The Stable Diffusion model makes four gigabytes of observations regarding more than five billion images. That means that its model contains less than one byte of information per image analyzed (a byte is just eight bits—a zero or a one).

The complaint against Stable Diffusion characterizes this as “compressing” (and thus storing) the training images, but that’s just wrong. With few exceptions, there is no way to recreate the images used in the model based on the facts about them that are stored… Mathematically speaking, Stable Diffusion cannot be storing copies of all of its training images…

The class-action lawsuit against Stable Diffusion doesn’t focus on the “outputs,” (the actual images that the model produces in response to text input). Instead, the artists allege that the system itself is a derivative work. But, as discussed, it's no more illegal for the model to learn a style from existing work than for human artists to do the same in a class, or individually, to make some of the same creative choices as artists they admire…

Done right, copyright law is supposed to encourage new creativity. Stretching it to outlaw tools like AI image generators—or to effectively put them in the exclusive hands of powerful economic actors who already use that economic muscle to squeeze creators—would have the opposite effect.

Read the summary: https://www.eff.org/deeplinks/2023/04/how-we-think-about-copyright-and-ai-art-0

China’s first pass on tough new AI laws (13/Apr/2023)

This is a strict and hostile policy. Here’s a short translated extract of Beijing’s draft:

Those using the AI to provide services to the public… will be required to undertake a security assessment first before their offerings go live. Those providers then become responsible for the outcome, and any leak of personal information or IP infringement. They must… also clearly label the content as being AI generated and handle any complaints, as well as ensure against users becoming addicted to or dependent on the tool.

Read the related article: https://www.theregister.com/2023/04/12/china_ai_regulation/

I also recommend that you read a full analysis of Beijing’s policy by Jeremy Daum. The article is called Overview of Draft Measures on Generative AI (14/Apr/2023).

Obligations

Security Assessment to be conducted before making services publicly available in accordance with Provisions on the Security Assessment of Internet Information Services that have Public Opinion Properties or the Capacity for Social Mobilization (Art. 6)

Filing Algorithms in accordance with Provisions on the Management of Algorithmic Recommendations in Internet Information Services (Art. 6)

Ensuring the sources of training data are lawful (Art. 7)

Developing detailed rules for manual data tagging (Art. 8)

Implementing Real-name verification system (Art. 9)

Specifying intended users and uses (Art. 10)

Taking measures to prevent over-reliance and addiction (Art. 10) and guide proper use (Art. 18)

Protecting information entered by users (Art. 11)

Non-discriminatory output (Art. 12)

Accept user complaints and correct information that infringes user rights (Art. 13)

Provide safe and stable services (Art. 14)

Prevent illegal content through screening and retraining of the model. (Art. 15)

Label generated content (Art. 17)

Suspend services to stop user violations (Art. 19)

Liability

Responsible for generated content as if a producer of the content (Art. 5)

Responsible for handling of personal information (Art. 5)

Punishment in accordance with other law, or where laws are silent, penalties of warnings, public criticism, ordering corrections, stopping services, or fines of between 10,000 and 100,000 RMB

Via Jeremy Daum - Overview of Draft Measures on Generative AI (14/Apr/2023).

ChatGPT embraced by the education department in Slovenia (16/Apr/2023)

With AI, adaptive learning, personalization and different learning styles come to the fore. By using artificial intelligence in education, the current approach based on a one-size-fits-all solution is replaced by learning adapted to the individual student. Students will be able to learn at their own pace. [AI] offers opportunities in the form of new tools that will help teachers and students.

Toys to Play With

Keynote for Bond University - Law (from Sep/2022, remastered Apr/2023)

This is a pre-ChatGPT, pre-GPT-4 keynote that acted as a rehearsal for my Devoxx keynote a few days later in October 2022. You may recognize some of the content, and then there are some very niche rabbit holes for the legal entrepreneurs and academics in the audience!

Audio remastered by AI using Adobe Enhance (also used for Leta Episodes 0-5).

I’m making it available here for members only.

Watch my video (45mins):

GPT-4 generated food menus (11/Apr/2023)

Please note that grand total prices exclude the cost of wine due to significant market fluctuations. Additionally, GPT-4's knowledge is based on data up to September 2021, which may lead to biases and overestimations for wine prices in 2023.

Take a look: https://exquisite-menus.thefrenchartist.dev/

Midjourney guide book (Apr/2023)

GPT4 + ElevenLabs + Midjourney + Kaiber: A short film narrated by David Attenborough (18/Apr/2023)

Tech stack: GPT-4 (script), ElevenLabs (voice), Midjourney v5 (visuals), Kaiber.ai (image-to-video).

SimplySpeak for Android (Apr/2023)

Thanks to The Memo reader, Kin, for this one!

Android app SimplySpeak enables users to speak in 99 different languages and convert their speech into well-written English texts. It also offers different writing styles. The public testing link is provided below, and the app also offers a 30-day trial period.

Tech stack: OpenAI Whisper + GPT-4.

Try it: https://play.google.com/store/apps/details?id=com.simplyspeak

Nomic GPT4All Chat: Local version for your computer (18/Apr/2023)

A new one-click installer for the GPT4All (-J) model. In some ways, this model is better than Alpaca or Koala. As with my viz above, it is still a paper airplane, based on a GPT-2-like model released in 2021 '(Eleuther AI’s GPT-J 6B, with fine-tuning via ChatGPT). I loved their quote:

You are downloading a 3GB file that has baked into it all human-historical knowledge placed onto the internet. We appreciate your patience and understanding - maybe take some time during the download to appreciate the gravity of how far we as humans have come and pat yourself on the back.

Download for macOS, Windows, Linux: https://gpt4all.io/index.html

View the repo: https://github.com/nomic-ai/gpt4all-chat/

20+ interfaces for ChatGPT (Apr/2023)

Here’s the full list: https://github.com/billmei/every-chatgpt-gui

My favourite so far is Koala.sh (web, free, no login, powered by ChatGPT): https://koala.sh/chat

ChatGPT interface for macOS (Apr/2023)

This macOS app has been around for a few weeks and is in the list above, but I wanted to put it back at your fingertips (or menubar!). It is a free app, but billing for GPT is via your OpenAI account. You can select the GPT-4 chat model in the dropdown for this app. As with my viz above, if you use GPT-4, you’re effectively leasing a Boeing 787!

Download it: https://github.com/vincelwt/chatgpt-mac

After install, it sits in your menu bar. The two main commands are:

⌘-Shift-G: Start typing to ChatGPT (or GPT-4).

⌘-R: Refresh (new chat).

Next

As promised, here is a complimentary copy of my book, Bright (2016), for any device.

All my very best,

Alan

LifeArchitect.ai