To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 8/Feb/2024

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 65%

Mustafa Suleyman CBE, founder of DeepMind and Inflection AI (1/Feb/2024):

‘It’s actually quite incredible to be alive at this moment. It’s hard to fully absorb the enormity of this transition. Despite the incredible impact of AI recently, the world is still struggling to appreciate how big a deal its arrival really is.

We are in the process of seeing a new species grow up around us. Getting it right is unquestionably the great meta-problem of the twenty-first century.

But do that and we have an unparalleled opportunity to empower people to live the lives they want.’

I was intrigued by a recent question on Hacker News asking why I spend time doing what I do (they specifically wanted to know why I cooked up the growing Models Table, now with 250+ models). What is my motivation for analyzing post-2020 AI? I do it for the public, as a service, sure. But I primarily do it to satisfy my own curiosity.

It is a continuing surprise to me that no one else in the world bothers to do what I do. It’s not like the data isn’t there, it is. It’s usually out in the open, or sometimes hidden in plain sight. And it is deeply compelling.

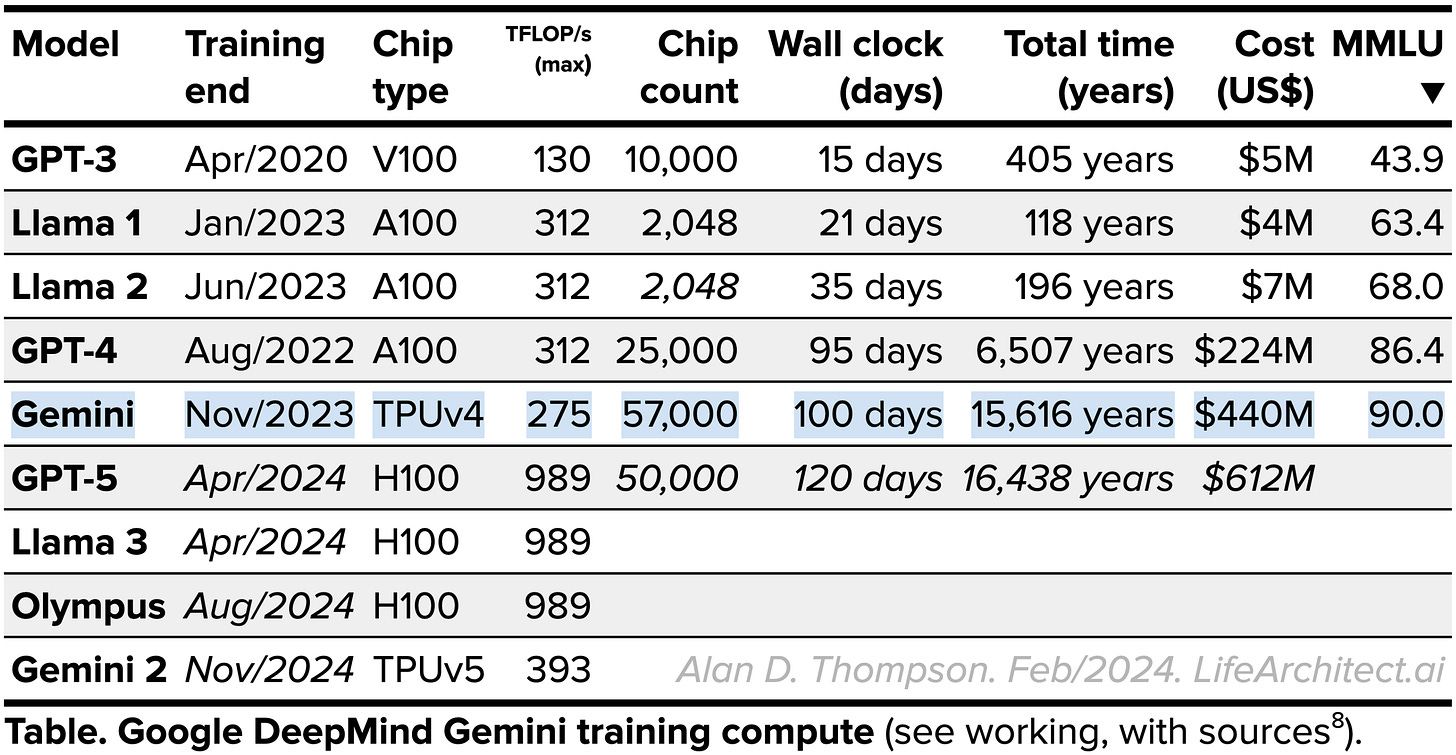

Consider this table I drew together one night this week:

All of the data is available in various papers and repositories, but had not been brought together in plain English in this way at any stage. Why not?! It is completely mesmerizing to know these kinds of details about our evolving superintelligence; that Google Gemini used significantly more compute than OpenAI GPT-4, that Gemini was trained for the equivalent of 15,000 years at a retail cost of 600 million dollars, and that the next frontier models will be measured in billions of dollars of training compute spend.

And while putting it all together quelled my curiosity, I know it also assists tens of thousands of people (at many big places)—including you.

I’m certain this mindset is not unique. Humans can only flourish through a sense of what Prof Marty Seligman calls ‘the peaks of lasting fulfillment, meaning, and purpose’ (PERMA and his book, Flourish), and we find this through countless paths. As AI envelops our work and play, I am keen to see how our sense of purpose unfolds as it is essentially taken away from us by AI over the next few months and years.

And each person is going to have to discover for themselves how to navigate this new way of being… with the help of AI, of course.

The BIG Stuff

Gemini Ultra 1.0 (7/Feb/2024)

I’m getting this one out within an hour of launch, and actually before the official announcement. I will update the web version of this edition of The Memo as further news comes to light over the next period.

Today, Google DeepMind will publicly release the largest model in the Gemini family, Gemini Ultra 1.0. I’ve previously estimated this dense model to be around 1.5T parameters trained on 30T tokens. It is more powerful than GPT-4, and likely the largest and most powerful model in the world as of February 2024.

‘Gemini Ultra outperforms all current models.’

— Google Gemini paper (Dec/2023)

See it on the Models Table: https://lifearchitect.ai/models-table/

Read my annotated Gemini paper.

Google users can use the Gemini Ultra 1.0 model as ‘Gemini Advanced’, in 150 countries, via subscription with a grace period and then US$19.99/month.

Try it: https://one.google.com/explore-plan/gemini-advanced

After entering card details, you can use the Gemini Ultra 1.0 model as ‘Gemini Advanced’ inside the platform formerly known as Bard: https://gemini.google.com/

My initial tests are not positive, but I am expecting that they still have to iron out some issues during this launch period. I will update this web edition as we proceed.

Updates:

‘Gemini Advanced gives you access to Ultra 1.0, though we might occasionally route certain prompts to other models.’ FAQ > What is Gemini Advanced?

Google CEO: ‘we’re already well underway training the next iteration of our Gemini models’ 8/Feb/2024

Exclusive: Google agentized Gemini to fix their software (31/Jan/2024)

Yes, ‘agentized’ is a word, and Google did it. This is exclusive in that no media has picked it up, but the paper and code are available (for free). Google has created an agent using the Gemini Pro model to trawl through their internal codebase and fix bugs.

…leveraging AI to scale our ability to fix bugs, specifically those found by sanitizers in C/C++, Java, and Go code… harnessed our Gemini model to successfully fix 15% of sanitizer bugs discovered during unit tests, resulting in hundreds of bugs patched…

Instead of a software engineer spending an average of two hours to create each of these commits, the necessary patches are now automatically created in seconds [by Gemini].

Approximately 95% of the commits [fixed by Gemini] sent to code owners were accepted without discussion. This was a higher acceptance rate than human-generated code changes, which often provoke questions and comments…

Reviewers may have had greater trust in the solutions because they were generated by [AI] technology.

The prompt given to Gemini in this project was:

You are a Senior Software Engineer tasked with fixing sanitizer errors. Please fix them.

Read the paper (PDF, 4 pages).

View the repo: https://github.com/google/oss-fuzz-gen

These are the first glimpses of a completely new economy, and the new way of doing things in humanity’s next revolution:

Mar/2023: OpenAI uses GPT-4 to help write the GPT-4 paper: ‘GPT-4 was used in the following ways: to help us iterate on LaTeX formatting; for text summarization; and as a copyediting tool.’ Read more: GPT-4 Technical Report (appendix)

Dec/2023: OpenAI uses GPT-4 to prepare GPT-5 and future models: ‘For instance, we’re leveraging the immense capabilities of GPT-4 to innovate on safety, trimming the time it takes to undertake some safety processes down from months to hours.’ Read more: OpenAI—written evidence to UK govt (PDF)

Jan/2024: Google uses Gemini to fix their code: ‘Instead of a software engineer spending an average of two hours to create each of these commits, the necessary patches are now automatically created in seconds [by Gemini].’ Read more: Google—AI-powered patching: the future of automated vulnerability fixes (PDF).

If a lumbering giant like Google can use AI to optimize its processes by just a few percentage points (for now), consider the immediate impact on efficiency, productivity, goods, services, happiness(!), and the rapid approach of the ‘post-scarcity’ or abundance economy (wiki)…

Couple this with two more data points:

12/Jan/2024: Google fires 1,000 workers after parent company announced firing 12,000 (6%) employees.

7/Feb/2024: Microsoft CEO: ‘AI could power 10% [$500B] of the $5-trillion Indian economy’.

Russian programmer finds true love with ChatGPT (2/Feb/2024)

Alexander Zhadan, a Russian programmer, automated his search for love using a ChatGPT-based chatbot, which interacted with 5,239 girls before finding ‘the one’.

He decided to create a dating bot based on the ChatGPT API. The bot selected suitable profiles in the Tinder app based on certain criteria (for example, having at least two photos in the profile), chatted with them and, if all went well, suggested meeting in person…

In total, the bot met 5,239 girls, out of which Alexander selected four most suitable ones. Ultimately, he chose one of them named Karina…

"V3 messaged me when the conversation with Karina heated up, a summary or a question about a reply appeared. It systematically understands from the request whether the conversation is negative or emotional…

In one of the conversation summaries, the bot directly suggested Alexander propose to Karina, which he did. She said yes.

Two months before the proposal, Alexander told Karina about how exactly he used the chatbot. "She was, of course, shocked. But, in the end, she began asking questions about how it all works, how it reacts to different scenarios, etc. But what? We have been living together for more than a year, have known each other for more than a year and really enjoy spending time together. And we treat each other super well, empathetically and with support," the programmer says.

Read the whole story with screenshots via Russia Beyond.

In my end-of-year report released a few weeks ago, I asked a pertinent question: ‘Post-2020 AI currently has the ability to amplify and augment your output by about 2×, and this will increase to 1,000× soon. What does this look like for you?’ Alexander’s version of 1,000× was fascinating (although slightly depressing), and adds to my growing list of ChatGPT achievements!

Read my AI report: https://lifearchitect.ai/the-sky-is-comforting/

Read my full list of ChatGPT achievements.

AI image provenance: Content Credentials Verify (Feb/2024)

The Coalition for Content Provenance and Authenticity (C2PA) has developed a technology to verify the provenance of images.

OpenAI has finally applied this invisible digital watermark to all DALL-E 3 images as of February 2024.

You can check whether an image is AI-generated by uploading it here (free, no login):

Official verification site: https://contentcredentials.org/verify

Read more via OpenAI.

Read more via The Verge.

The Interesting Stuff

Norway purchases ChatGPT for 110,000 students and teachers (6/Feb/2024)

Oslo, Norway has acquired GPT-3.5 Turbo licenses for education and assessment, covering 110,000 students and staff, which will require significant changes to teaching and evaluation methods.

Read more via Digi.no (Norwegian).

We’ve previously explored Ivy League schools making the use of AI mandatory:

University of Pennsylvania Wharton MBA: ChatGPT and image generation tools mandatory. The Memo edition 27/Jan/2023.

Harvard computer science: ChatGPT and GPT-4 mandatory. The Memo edition 9/Jul/2023.

Contrast this with the majority of schools today where rote memorization (and perhaps money) remains the driving force, and where intelligence is seen as a wasteful dalliance, perhaps like art in the 1800s, physical education in the 1850s, typing in the 1980s and graphic calculators in the 1990s. Obviously post-2020 AI is far beyond any of these comparisons, but the continuing lack of education in educational institutions throughout the 2020s is still a disappointment.

My 2017 analysis of this area ended up affecting education policy here in Australia (Jun/2019, PDF), based on learnings from Elon Musk’s school in the US and the Mensa Gymnasium in Czechia: https://lifearchitect.ai/ad-astra/

Openwater’s brain-machine interfaces open sourced (Jan/2024)

Many years ago now, I began talking about Dr Mary Lou Jepsen’s Openwater device. It is a brain-machine interface that is completely non-invasive, worn like a beanie or ski cap.

This is another substantial edition. Let’s look at a lot more AI, from Amazon to Google to Meta and beyond, new AI apps in the Vision Pro, factory humanoids, updates on the abominable EU AI Act, my new favourite GPT-4 fine-tune, a very out of character dystopian perspective, and much more…

Recently, Openwater decided to open source their patents, clinical data, and safety information to foster innovation, reduce healthcare costs dramatically, and improve access to medical treatments globally. Their recent $54 million funding supports this vision, aiming to accelerate medical advancements and make treatments more accessible and affordable.

Read more: https://www.openwater.health/

Read a CNBC article from Jul/2017.

Openwater has a new BMI device available for pre-order.

I explored the Openwater device in Apr/2022 (timecode): https://youtu.be/u64YrV3Ic4E

Meta Q4 earnings call (1/Feb/2024)

Based on their ongoing investment (especially compute spend measured in the billions, with analysts mentioning Meta’s additional $9B annual spend probably just on NVIDIA GPUs for training LLMs) and access to unique data, I expect Meta to leapfrog other AI labs this year.

“Llama 3 which is training now and is looking great so far”

“by the end of this year we'll have about 350k H100s [$10.5B retail] and including other GPUs that’ll be around 600k H100 equivalents of compute”

“we think that training and operating future models will be even more compute intensive. We don't have a clear expectation for exactly how much this will be yet, but the trend has been that state-of-the-art large language models have been trained on roughly 10x the amount of compute each year. Our training clusters are only part of our overall infrastructure and the rest obviously isn't growing as quickly. But overall, we're playing to win here and I expect us to continue investing aggressively in this area. In order to build the most advanced clusters, we're also designing novel data centers and designing our own custom silicon specialized for our workloads.”

“we're also working on the research we need to advance for Llama 5, 6, and 7 in the coming years and beyond to develop full general intelligence.”

“the corpus that you might use to train a model up front. On Facebook and Instagram there are hundreds of billions of publicly shared images and tens of billions of public videos, which we estimate is greater than the Common Crawl dataset and people share large numbers of public text posts in comments across our services as well.”

Download earnings call transcript PDF (19 pages).
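The bracketed retail figure in the H100 quote above implies a unit price, and the same arithmetic can be stretched to the 600k figure. Here is a minimal sketch in Python, assuming the US$10.5B retail estimate from this edition and a flat per-unit price; valuing every ‘H100 equivalent’ at that price is purely an illustration, not a number from Meta.

```python
# Figures from the earnings-call quote and the bracketed retail estimate above.
H100_COUNT = 350_000
RETAIL_TOTAL_USD = 10_500_000_000  # the [$10.5B retail] annotation

# Implied retail price per H100.
unit_price_usd = RETAIL_TOTAL_USD / H100_COUNT

# Assumption for illustration only: value all 600k "H100 equivalents"
# at that same unit price, although other GPUs are priced differently.
H100_EQUIVALENTS = 600_000
equivalent_value_usd = unit_price_usd * H100_EQUIVALENTS

print(f"Implied price per H100: ${unit_price_usd:,.0f}")
print(f"600k H100 equivalents at that price: ${equivalent_value_usd / 1e9:.1f}B")
```

That works out to roughly US$30,000 per H100, and about US$18B if the whole fleet were valued the same way.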

OLMo - Open Language Model by AI2 (Feb/2024)

Allen AI has finally released their OLMo model. OLMo is a comprehensive open-source language model framework by AI2, offering full access to training data, code, models, and an evaluation suite to foster collective advancement in AI language model research. It has 7B parameters trained on 2.5T tokens (358:1).

Read the paper: https://arxiv.org/abs/2402.00838

HF: https://huggingface.co/allenai/OLMo-7B

Repo: https://github.com/allenai/olmo
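The tokens-to-parameters ratio quoted for OLMo is simple division, and it is a handy number to compare across models. A small Python sketch follows, using only the rounded figures given in this edition; the helper name is made up for illustration. With these rounded inputs the OLMo ratio comes out at about 357:1, so the 358:1 above presumably reflects unrounded counts or rounding up.

```python
def tokens_per_parameter(tokens: float, parameters: float) -> float:
    """Return how many training tokens were seen per model parameter."""
    return tokens / parameters

# Rounded figures quoted in this edition.
olmo = tokens_per_parameter(2.5e12, 7e9)

# Gemini Ultra 1.0 figures are the author's estimate (1.5T parameters,
# 30T tokens), not numbers published by Google.
gemini_ultra = tokens_per_parameter(30e12, 1.5e12)

print(f"OLMo 7B: {olmo:.0f}:1")
print(f"Gemini Ultra 1.0 (estimate): {gemini_ultra:.0f}:1")
```

On these figures, the small open model saw far more data per parameter than the estimated frontier model (about 357:1 versus 20:1).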

New and better ways to create images with Imagen 2 (1/Feb/2024)

Google has finally released Imagen 2 to the public, a major update to its image generation technology, now available in Bard, ImageFX, Search, and Vertex AI, offering high-quality, photorealistic image outputs from text prompts.

Read more via Google blog.

Try it in Bard: https://bard.google.com/

I explored Imagen 2 in a stream in Dec/2023: https://youtu.be/KfXlY8PgyZY?t=2832

Boston Dynamics Atlas (6/Feb/2024)

I understand that this bot’s movements are still pre-programmed rather than generally intelligent.

Watch (link):

Google Maps is getting ‘supercharged’ with generative AI (1/Feb/2024)

Google Maps will integrate large language models to enhance discovery, allowing users to find places like 'vintage shops in SF' through a more natural, conversational interface.

Read more via The Verge.

Amazon announces AI shopping assistant called Rufus (1/Feb/2024)

Amazon unveils Rufus, an AI-powered shopping assistant designed to simplify product searches and comparisons for users through conversational queries within Amazon’s mobile app.

CEO Andy Jassy has said the company plans to incorporate generative AI across all of its businesses.

Read more via CNBC.

Read my page on the upcoming 2 trillion-parameter frontier model, Amazon Olympus: https://lifearchitect.ai/olympus/

Adobe Firefly comes to the Apple Vision Pro – and it's a wild mashup of AI art and AR (2/Feb/2024)

Adobe Firefly AI, an artificial intelligence image generator, is now available as a native app on Apple’s Vision Pro, allowing users to create and pin augmented reality images in their environment.

Read more via TechRadar.

Europcar says someone likely used ChatGPT to promote a fake data breach (31/Jan/2024)

Europcar has investigated an alleged data breach advertised on a hacking forum and deems it fake, potentially created with ChatGPT, as the sample data contains inconsistencies and inaccuracies.

The sample data is likely ChatGPT-generated (addresses don’t exist, ZIP codes don’t match, first name and last name don’t match email addresses, email addresses use very unusual TLDs)

Read more via TechCrunch.
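Two of the tells listed above (names that don’t match email addresses, and unusual TLDs) are easy to express as checks. This is only a rough Python sketch of the idea, not the method Europcar used: the function name, the allow-list of ‘common’ TLDs, and the sample records are all invented for illustration.

```python
# Illustrative allow-list only; a real check would need a much fuller list.
COMMON_TLDS = {"com", "net", "org", "fr", "de", "uk", "it", "es"}

def suspicious_signals(first_name: str, last_name: str, email: str) -> list[str]:
    """Return human-readable reasons why a customer record looks synthetic."""
    local, _, domain = email.lower().partition("@")
    tld = domain.rsplit(".", 1)[-1]
    signals = []
    # Tell 1: neither the first nor the last name appears in the address.
    if first_name.lower() not in local and last_name.lower() not in local:
        signals.append("name does not appear in email address")
    # Tell 2: the top-level domain is outside the allow-list.
    if tld not in COMMON_TLDS:
        signals.append(f"unusual TLD: .{tld}")
    return signals

# Invented sample records, not data from the alleged breach.
plausible = suspicious_signals("Anna", "Muller", "anna.muller@example.com")
doubtful = suspicious_signals("Jean", "Dupont", "lydia_harper@mailbox.sbs")
print(plausible)
print(doubtful)
```

Plenty of real people use addresses unrelated to their names, so a single flag means little; it is the pattern across a whole sample that points to generated data.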

Introducing Semafor Signals (5/Feb/2024)

Semafor is launching ‘Signals’, a multi-source breaking news feed using tools from Microsoft and OpenAI, to provide readers with a diverse range of perspectives and insights on developing global stories.

Read more: https://www.semafor.com/article/02/05/2024/introducing-semafor-signals

Introducing CERN’s robodog (6/Feb/2024)

CERN's newly introduced robodog, known as CERNquadbot, has successfully completed its initial radiation protection test, showcasing its ability to navigate complex environments within the laboratory's facilities.

Read more via CERN.

Watch the video (link):

AI can now master your music—and it does shockingly well (6/Feb/2024)

AI-powered music mastering services like LANDR have advanced to the point where they can sometimes surpass professional human mastering, providing quick, cost-effective, and high-quality results that challenge traditional audio mastering methods.

This is a 2,000-word article with screenshots, but a very worthwhile read, and takes me back to my old days as a live sound designer for some of the biggest artists in the world (2009, archive.org).

Read more via Ars Technica.

Policy

EU approves AI Act (Feb/2024)

On February 2, 2024, a committee of ambassadors from all countries of the European Union (EU) approved the latest draft of the EU Artificial Intelligence Act (AIA or the Act). Following weeks of speculation that there could be a blocking minority of EU countries who had concerns about the final text, this vote confirms that the AIA has substantial support within the Council of the EU (Council)…

Before the AIA can become law, it must be formally approved by both EU co-legislators, namely the European Parliament (EP) and the Council. Since the co-legislators reached a political agreement on the AIA in December 2023, the drafters have been ironing out the technical details of the draft text. It was reported that the negotiators from France, Germany, and Italy raised concerns about some of the provisions in the AIA…

The other co-legislator, the EP, will likely vote on the final text in April [2024]. While some political groups at the EP also expressed concerns about the final text of the Act, they are not expected to impede the Act’s formal adoption by the EP. Once the AIA is formally adopted and enters into force, there will be a grace period for companies to bring their activities into compliance. The relevant grace period will depend on the category of requirements. For instance, rules prohibiting certain types of AI systems will be enforceable after six months (likely before the end of this year), requirements for general purpose AI models will be enforceable after 12 months (likely around Q2 2025), while most rules for high-risk AI systems will be enforceable after 24 months (likely around Q2 2026).

Read more via JDSupra.

Download the AIA (PDF, 272 pages): https://data.consilium.europa.eu/doc/document/ST-5662-2024-INIT/en/pdf

See The AI Act Explorer by the Future of Life Institute (FLI): https://artificialintelligenceact.eu/ai-act-explorer/

A reminder that this Act is an abomination, and the EU has twice won my Who Moved My Cheese? AI Awards!: https://lifearchitect.ai/cheese/

Cutting off Chinese AI access is harder than you'd think (5/Feb/2024)

US trade restrictions aim to limit China's access to advanced semiconductor technologies, but smuggling and evasion tactics, including the use of seemingly legitimate businesses, continue to challenge these efforts.

widespread availability of GPU resources in the cloud means that, for the moment, Chinese companies can just rent time on accelerators that they're otherwise barred from buying.

US intelligence agencies have raised alarm bells about this loophole. Late last year, a New York Times report claimed US agencies suspected United Arab Emirates' AI darling G42 of secretly furnishing China with compute resources.

Read more via The Register.

Toys to Play With

Copilot GPT-4 (Feb/2024)

I hesitate to call this the current state-of-the-art model, but there is something extraordinary about Microsoft’s implementation of GPT-4 without all the frustrating guardrails added by OpenAI. They also use completely different fine-tuning, which means Copilot’s GPT-4 outperforms OpenAI’s GPT-4 for all of my test prompts.

Set the output to ‘More Precise’.

On web, Copilot uses GPT-4 by default, but on mobile, you must set the model to ‘GPT-4’.

Try it (free, no login): https://chat.bing.com/

Consider putting it on your phone, too. The setting to use ‘Precise’ is accessible via the three dots at the top-right: https://apps.apple.com/us/app/microsoft-copilot/id6472538445

General world models are coming to VR… (Feb/2024)

This is early days, but if you’d like to go down the path, here are the links:

Midjourney’s hardware (6/Feb/2024): Dr Jim Fan notes that: ‘MidJourney hired an engineer from Apple Vision Pro to be "Head of Hardware". My best guess is that they are thinking about generating full synthetic worlds for AR/VR, because of their rumored works on text-to-3D.’

https://twitter.com/DrJimFan/status/1754558101829881893

Runway’s General World Models (Dec/2023): ‘[General world models will] need to generate consistent maps of the environment, and the ability to navigate and interact in those environments. They need to capture not just the dynamics of the world, but the dynamics of its inhabitants, which involves also building realistic models of human behavior.’

https://research.runwayml.com/introducing-general-world-models

Adobe Firefly 2 (Oct/2023)

The latest version from October 2023 may be easier to use than any other text-to-image model. I don’t think I’ve mentioned its ease-of-use in these editions. While I can’t recommend the company, the UI/UX is really, really well done.

Try it (free, login): https://firefly.adobe.com/inspire/images

Compare with other text-to-image models (mostly free, some with login):

Imagen 2 on Bard: https://bard.google.com/

Midjourney v6: https://www.midjourney.com/

DALL-E 3 on ChatGPT.com: https://chat.openai.com/

Stable Diffusion XL on Poe.com: https://poe.com/StableDiffusionXL

Stable Diffusion XL on mage.space: https://www.mage.space/

Flashback

While ‘dystopia’ almost never comes up in my conversations, the Apple Vision Pro reviews reminded me of this famous image by Eran Fowler from Nov/2005:

Which led me down a rabbit hole of related emails. 2005 must have been the year for dystopia, because I found an email I had sent to a colleague about a story from that year called Manna: Two Visions of Humanity’s Future.

It’s worth reading, but it is what I have previously called a ‘misuse of imagination’. Whether due to mental illness or some sort of intergenerational trauma, this kind of lazy dystopian nightmare fodder probably isn’t healthy for anyone. As a reprieve, there is a contrasting utopian experience, set in Australia(!) of all places, explored later in the book.

“We could change it now. Robots are doing all the work. Human beings — all human beings — could now be on perpetual vacation. That’s what bugs me. If society had been designed for it somehow, we could all be on vacation instead of on welfare. Everyone on the planet could be living in luxury. Instead, they are planning to kill us off.”

Read it online (free, no login): https://marshallbrain.com/manna1

It has a Wikipedia entry, too: https://en.wikipedia.org/wiki/Manna_(novel)

ChatGPT simple prompt hacking game (Feb/2024)

This is a lot of fun. See if you can make Gandalf reveal its password! (I got to Lvl 7…)

Try it: https://gandalf.lakera.ai/

Next

The next roundtable will be:

Life Architect - The Memo - Roundtable #7

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 17/Feb/2024 at 4PM Los Angeles

Saturday 17/Feb/2024 at 7PM New York

Sunday 18/Feb/2024 at 8AM Perth (primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!
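If you would rather compute your local start time than search for it, the three times listed above can be reproduced from the Perth reference time. This is a minimal Python sketch using fixed UTC offsets that hold for mid-February 2024 (no daylight saving in these three cities); the helper name is made up, and for other dates or cities the standard-library `zoneinfo` module would handle daylight saving for you.

```python
from datetime import datetime, timedelta, timezone

# Fixed UTC offsets valid for mid-February 2024 (no daylight saving in effect).
PERTH = timezone(timedelta(hours=8), "AWST")
NEW_YORK = timezone(timedelta(hours=-5), "EST")
LOS_ANGELES = timezone(timedelta(hours=-8), "PST")

# Primary/reference time from the list above: Sunday 18/Feb/2024, 8AM Perth.
start = datetime(2024, 2, 18, 8, 0, tzinfo=PERTH)

def local_start(tz: timezone) -> datetime:
    """Convert the roundtable start time to another fixed-offset zone."""
    return start.astimezone(tz)

for city, tz in [("Los Angeles", LOS_ANGELES), ("New York", NEW_YORK), ("Perth", PERTH)]:
    print(f"{city}: {local_start(tz):%a %d %b %Y %I:%M %p}")
```

To add your own city, define another `timezone(timedelta(hours=...))` with your current UTC offset and pass it to `local_start`.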

All my very best,

Alan

LifeArchitect.ai