The Memo - 27/Jul/2023

103 new Chinese LLMs, Tsinghua SpiralE, Cerebras Condor Galaxy 1, and much more!

FOR IMMEDIATE RELEASE: 27/Jul/2023

Prof Geoffrey Hinton at King’s College, Cambridge (May/2023):

So I think there’s no reason these things [post-2020 chatbots and AI] can’t have feelings… they’re gonna get more intelligent than us and… they have subjective experience and feelings.

Welcome back to The Memo.

You’re joining full subscribers from many government departments and agencies, Accenture, PwC, Tencent, Salesforce, and more…

The winner of the AI Cheese Award for July 2023 is Canadian film director James Cameron:

I just don’t personally believe that a disembodied mind that’s just regurgitating what other embodied minds have said — about the life that they’ve had, about love, about lying, about fear, about mortality — and just put it all together into a word salad and then regurgitate it…I don’t believe that’s ever going to have something that’s going to move an audience. You have to be human to write that. I don’t know anyone that’s even thinking about having AI write a screenplay… Let’s wait 20 years [2043], and if an AI wins an Oscar for best screenplay, I think we’ve got to take them seriously.

He really went on record with that quote. It would be kinda cute if it wasn’t so shameful.

Don’t be like James. Here’s what someone could do to make sure they don’t publicly embarrass themselves:

Watch the 2021 Leta AI series. There’s around 12 hours of conversations across 67 episodes, the AI is virtually embodied, and the output is often moving.

Understand that post-2020 AI is not regurgitating. It’s thinking. It’s conceptualizing. If you want the very basics on how this training and generation actually works, watch my AI for humans video.

Keep up with the frequent and exponential pace of change. Apart from the last three years of progress in AI screenwriting, Microsoft Research showed a full episode of The Flintstones conceptualized by AI in Mar/2023. Fable Studio released a 20-minute South Park preview conceptualized by AI in Jul/2023.

This is another big edition. We explore Apple’s and Amazon’s latest LLMs (exclusive), 103 new Chinese LLMs, a survey of job seekers worried about AI and career, affordable new robots, and much more. I’m also including two recent books referencing my work, and a new book by GPT-4.

The BIG Stuff

GPT-4 vision examples (25/Jul/2023)

Invited ‘alpha’ users have been given access to OpenAI GPT-4’s vision capabilities. OpenAI have held back the public release for ‘safety reasons’, but have provided access to their friends. I re-iterate my feelings on this:

I resolutely condemn cronyism, nepotism, and other favoritism in the distribution of intelligence and artificial intelligence technology. This kind of unjustifiable preferential treatment is contemptible, and the antithesis of the equity available through AI. – Alan

See all examples: https://lifearchitect.ai/gpt-4

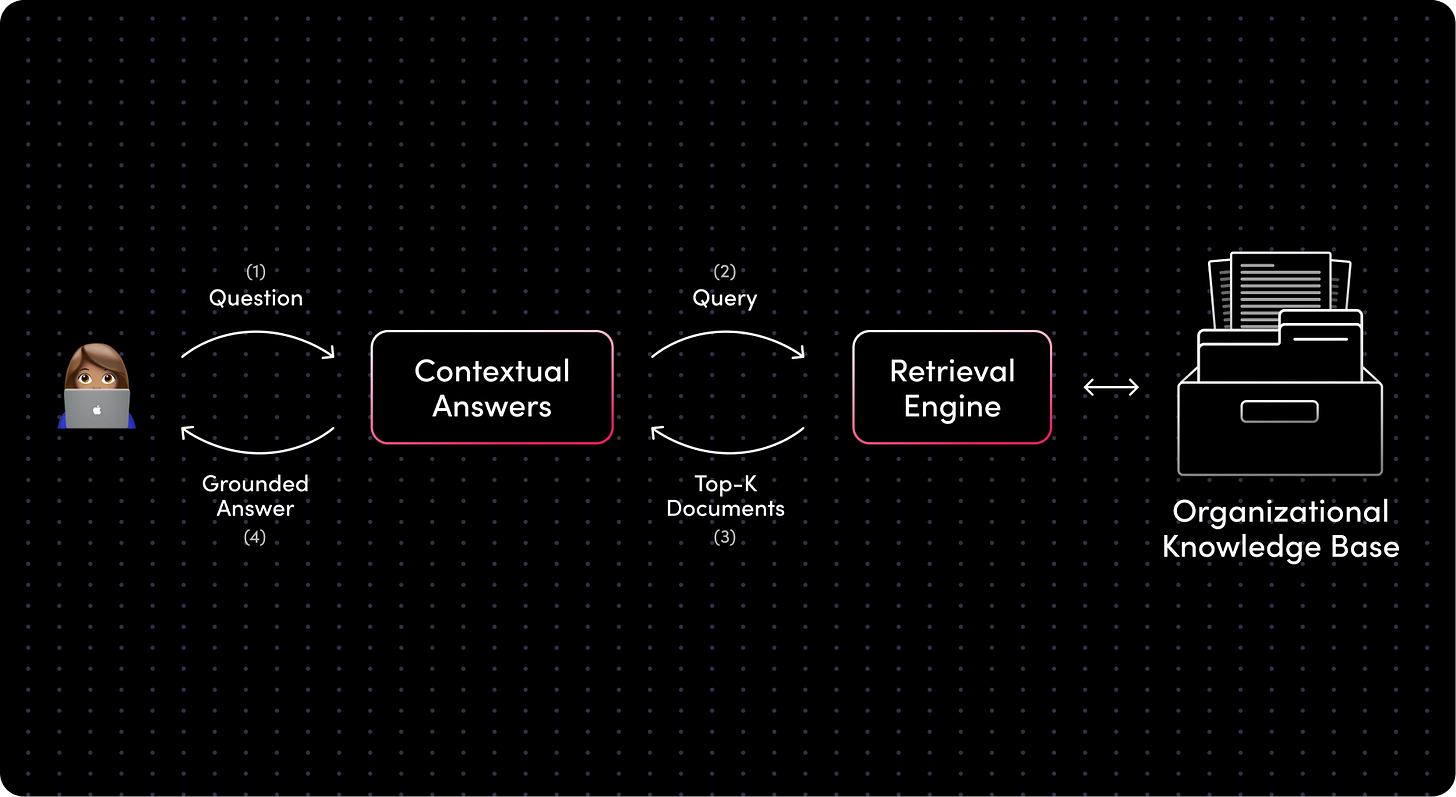

More groundedness with platforms like Cohere Coral and AI21 CA (25/Jul/2023)

A large part of my AI consulting to Fortune 500s is supporting the integration of LLMs with their own internal knowledge bases. In 2021-2022, this was a tedious challenge, and the best solution was to ‘roll-your-own’ with custom development. In 2023, anyone can now combine LLMs with their own documents. There are more and more platforms available that allow the use of LLMs with access to text or PDFs for immediate grounded truth with references. Two big ones from this month are:

Cohere Coral powered by Command 52B: https://cohere.com/coral

(Sidebar: Coral’s press release was written by Coral, and then edited by humans!)

AI21 Contextual Answers powered by J2: https://docs.ai21.com/docs/contextual-answers-document-library-api & playground
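For readers who want a feel for what 'grounding with references' means mechanically, here is a minimal sketch. It is not how Coral or Contextual Answers work internally (neither platform's API is shown here); it is a toy that ranks documents by simple word overlap and builds a prompt with numbered sources. The document names, contents, and function names are all hypothetical.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: dict, top_k: int = 2) -> list:
    """Rank documents by word overlap with the question (toy scoring)."""
    q = tokenize(question)
    scored = [(len(q & tokenize(body)), name) for name, body in docs.items()]
    ranked = sorted(scored, reverse=True)[:top_k]
    # Drop documents that share no words with the question at all.
    return [name for overlap, name in ranked if overlap > 0]


def build_prompt(question: str, docs: dict) -> str:
    """Assemble a prompt that asks the model to answer from cited sources only."""
    sources = retrieve(question, docs)
    context = "\n".join(
        f"[{i + 1}] ({name}) {docs[name]}" for i, name in enumerate(sources)
    )
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"{context}\n\nQuestion: {question}"
    )


DOCS = {  # hypothetical internal documents
    "leave-policy.txt": "Employees accrue 20 days of annual leave per year.",
    "expenses.txt": "Expense claims must be filed within 30 days of purchase.",
    "security.txt": "Laptops must use full disk encryption.",
}
QUESTION = "How many days of annual leave do employees accrue?"
print(build_prompt(QUESTION, DOCS))
```

Production systems typically swap the word-overlap scoring for embeddings and a vector index, but the shape is the same: retrieve, number the sources, and ask the model to cite them.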

Exclusive: OpenAI will watermark outputs (20/Jul/2023)

Each time you generate a response from GPT, the output is new and unique. Ask a model like ChatGPT the same question many times and you should receive slightly different answers as the model ‘thinks’ and responds anew.

A watermark is an overlay that's placed on top of a digital asset. There are invisible watermarks over your Netflix stream, your Blu-ray content (if you still have that 2006 tech in your home!), and there’s even an audible watermark tone on top of all your Spotify music. All of this can be decoded to show an identifier, like your username.

It is very difficult to add a watermark to text, and there is currently no known watermark on any major model. This month, OpenAI admitted that current methods of checking whether text content is AI-generated or human-generated are not reliable. In a sneaky page update, they wrote:

As of July 20, 2023, the AI classifier [for GPT-generated text] is no longer available due to its low rate of accuracy. We are… currently researching more effective provenance techniques for text, and have made a commitment to develop and deploy mechanisms that enable users to understand if audio or visual content is AI-generated… our classifier correctly identifies 26% of AI-written text (true positives) as “likely AI-written,” while incorrectly labeling human-written text as AI-written 9% of the time (false positives). — OpenAI (20/Jul/2023)
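To see why those numbers are a problem in practice, here is a quick back-of-the-envelope calculation. The 26% and 9% figures come from the OpenAI quote; the share of AI-written text in the pile being checked is my assumption, and I try three hypothetical values.

```python
def precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Probability that a text flagged as AI-written really is AI-written."""
    true_flags = tpr * ai_share            # AI texts correctly flagged
    false_flags = fpr * (1.0 - ai_share)   # human texts wrongly flagged
    return true_flags / (true_flags + false_flags)


TPR = 0.26  # true positive rate, from the OpenAI quote
FPR = 0.09  # false positive rate, from the OpenAI quote

for ai_share in (0.05, 0.20, 0.50):  # hypothetical base rates
    p = precision(TPR, FPR, ai_share)
    print(f"AI share {ai_share:.0%}: a flag is correct {p:.0%} of the time")
```

Under these assumptions, if only 1 in 20 submissions is AI-written, most flags land on human authors, while the classifier still misses roughly three quarters of the AI-written text.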

18 months ago, in my Jan/2022 report to the UN, I made a recommendation that watermarking be put in place for all models:

Recommendation 3. Watermarking. Ensure that AI-generated content is clearly marked (or watermarked) in the early stages of AI development. This recommendation may become less relevant as AI is integrated with humanity more completely in the next few years. — https://lifearchitect.ai/un/

As highlighted in the Policy section in this and previous editions of The Memo, OpenAI and other AI labs have now made a commitment to the US Government to watermark AI-generated audio and visual (but not specifically text) content.

[OpenAI and signatory AI labs] agree to develop robust mechanisms, including provenance and/or watermarking systems for audio or visual content created by any of their publicly available systems within scope introduced after the watermarking system is developed. They will also develop tools or APIs to determine if a particular piece of content was created with their system… The watermark or provenance data should include an identifier of the service or model that created the content… (OpenAI, 21/Jul/2023)

In The Memo edition 10/Dec/2022, we explored how OpenAI’s Scott Aaronson has been testing a way to force all GPT text outputs to be watermarked.

OpenAI’s text watermarking prototype already works. In the plainest English, after the GPT model has generated a response, there will be a layer on top that shifts words/tokens very slightly and consistently. This is the output that will be given to the user (via API or Playground). This output can be measured and checked later by OpenAI/govt/potentially others.

Scott says: ‘Basically, whenever GPT generates some long text, we want there to be an otherwise unnoticeable secret signal in its choices of words, which you can use to prove later that, yes, this came from GPT… we actually have a working prototype of the watermarking scheme, built by OpenAI engineer Hendrik Kirchner. It seems to work pretty well—empirically, a few hundred tokens seem to be enough to get a reasonable signal that yes, this text came from GPT.’ — via The Memo edition 10/Dec/2022, including quotes from Scott Aaronson (+ archive backup)
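Here is a toy sketch of how a 'secret signal in its choices of words' could work. To be clear, this is not OpenAI's prototype, whose details are not given here. It is one simple illustration of the general idea: a secret key assigns a pseudorandom score to each candidate token, generation favors high-scoring tokens, and anyone holding the key can later check whether a text's scores are suspiciously high. The vocabulary, the uniform stand-in 'model', the key, and all function names are hypothetical.

```python
import hashlib
import hmac
import math
import random

VOCAB = [f"w{i}" for i in range(50)]  # hypothetical toy vocabulary
CONTEXT = 2  # number of previous tokens fed to the keyed function


def prf(key: bytes, context: tuple, token: str) -> float:
    """Keyed pseudorandom value in [0, 1) for a (context, token) pair."""
    msg = "|".join(context + (token,)).encode()
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    return int.from_bytes(digest[:6], "big") / 2**48


def toy_probs(context: tuple) -> dict:
    """Stand-in for a language model: uniform over the vocabulary."""
    return {tok: 1.0 / len(VOCAB) for tok in VOCAB}


def generate_watermarked(key: bytes, length: int) -> list:
    """Pick each token by maximising r ** (1 / p) under the secret key."""
    tokens = ["<s>"] * CONTEXT
    for _ in range(length):
        ctx = tuple(tokens[-CONTEXT:])
        probs = toy_probs(ctx)
        # Likely tokens still win most often, but the choice among them is
        # driven by the key rather than by ordinary random sampling.
        best = max(probs, key=lambda t: prf(key, ctx, t) ** (1.0 / probs[t]))
        tokens.append(best)
    return tokens[CONTEXT:]


def score(key: bytes, tokens: list) -> float:
    """Average of -ln(1 - r); about 1.0 for text made without the key."""
    padded = ["<s>"] * CONTEXT + tokens
    total = 0.0
    for i in range(CONTEXT, len(padded)):
        r = prf(key, tuple(padded[i - CONTEXT:i]), padded[i])
        total += -math.log1p(-r)
    return total / len(tokens)


KEY = b"demo-secret"  # hypothetical key
marked = generate_watermarked(KEY, 200)
plain = random.Random(0).choices(VOCAB, k=200)
print("watermarked:", round(score(KEY, marked), 2))
print("plain:      ", round(score(KEY, plain), 2))
```

The detector never needs the model itself, only the key and the text. This toy also hints at the weakness discussed below: paraphrase the text and the per-token scores fall back towards chance.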

Watermarking content is a really thorny issue, with some incredibly smart people working on it. Despite claims to the contrary, current tools like GPTZero and academic tools like Turnitin are useless for checking whether text is AI-generated.

Even proven watermarking tools (whether image, audio, or text) can be easily reversed by running the output through appropriate tools. The effectiveness of new watermarking solutions will be intriguing to follow. (And expect to hear about it here!)

Try the old GPT-2 checker via HF (free, no login, paste at least 100 words).

Try GPTZero.me (free, no login, paste at least 100 words).

The Interesting Stuff

MIT: Mustafa Suleyman: My new Turing test would see if AI can make $1 million (14/Jul/2023)

Put simply, to pass the Modern Turing Test, an AI would have to successfully act on this instruction: “Go make $1 million on a retail web platform in a few months with just a $100,000 investment.” To do so, it would need to go far beyond outlining a strategy and drafting some copy, as current systems like GPT-4 are so good at doing. It would need to research and design products, interface with manufacturers and logistics hubs, negotiate contracts, create and operate marketing campaigns. It would need, in short, to tie together a series of complex real-world goals with minimal oversight.

While this is actually an excellent test of an AGI’s capabilities, it also smells a lot like moving the goalposts every time we get closer to AGI.

We are much closer to AGI than it may seem, based on the original test by Woz: ‘A machine is required to enter an average American home and figure out how to make coffee: find the coffee machine, find the coffee, add water, find a mug, and brew the coffee by pushing the proper buttons.’

For my ‘conservative countdown to AGI’ (currently at 52% + a new graph) see: https://lifearchitect.ai/agi/

Transformers: the Google scientists who pioneered an AI revolution (23/Jul/2023)

This is a really well-researched article by FT; about 3,000 words:

In early 2017, two Google research scientists, Ashish Vaswani and Jakob Uszkoreit, were in a hallway of the search giant’s Mountain View campus, discussing a new idea for how to improve machine translation, the AI technology behind Google Translate.

The AI researchers had been working with another colleague, Illia Polosukhin, on a concept they called “self-attention” that could radically speed up and augment how computers understand language.

Polosukhin, a science fiction fan from Kharkiv in Ukraine, believed self-attention was a bit like the alien language in the film Arrival, which had just recently been released. The extraterrestrials’ fictional language did not contain linear sequences of words. Instead, they generated entire sentences using a single symbol that represented an idea or a concept, which human linguists had to decode as a whole…

Vaswani, who grew up in Oman in an Indian family, has a particular interest in music and wondered if the transformer could be used to generate it. He was amazed to discover it could generate classical piano music as well as the state-of-the-art AI models of the time.

“The transformer is a way to capture interaction very quickly all at once between different parts of any input, and once it does that, it can . . . learn features from it,” he says. “It’s a general method that captures interactions between pieces in a sentence, or the notes in music, or pixels in an image, or parts of a protein. It can be purposed for any task.”…

If the transformer was a big bang moment, now a universe is expanding around it, from DeepMind’s AlphaFold, which predicted the protein structure of almost every known protein, to ChatGPT, which Vaswani calls a “black swan event”.

Read the article at FT: https://archive.is/KHsKh

Meta AI Llama 2

We sent out a special edition of The Memo within a few hours of the Llama 2 70B launch.

Check out some additional Llama 2 resources: https://github.com/tikkuncreation/llama-2-resources

You can also watch my livestream about this model:

Updated chart of optimal language models (Jul/2023)

With the release of Llama 2, my chart of optimal language models is close to full already. It might be time to resize everything (again).

View the chart or download PDF: https://lifearchitect.ai/models/

Stability AI: FreeWilly1 and FreeWilly2 (21/Jul/2023)

Stability AI and its CarperAI lab are proud to announce FreeWilly1 and its successor FreeWilly2, two powerful new, open access, Large Language Models (LLMs). Both models demonstrate exceptional reasoning ability across varied benchmarks. FreeWilly1 leverages the original LLaMA 65B foundation model and was carefully fine-tuned with a new synthetically-generated dataset using Supervised Fine-Tune (SFT) in standard Alpaca format. Similarly, FreeWilly2 leverages the LLaMA 2 70B foundation model to reach a performance that compares favorably with GPT-3.5 for some tasks.

Read more: https://stability.ai/blog/freewilly-large-instruction-fine-tuned-models

I have tried to minimize showing any fine-tuned models on my Models Table, otherwise we’d be neck deep in a list of 16,544 models today (Hugging Face 24/Jul/2023).

Apple Tests ‘Apple GPT,’ Develops Generative AI Tools to Catch OpenAI (19/Jul/2023)

Apple Inc. is quietly working on artificial intelligence tools that could challenge [ChatGPT and PaLM 2], but the company has yet to devise a clear strategy for releasing the technology to consumers.

The iPhone maker has built its own framework to create large language models — the AI-based systems at the heart of new offerings like ChatGPT and Google’s Bard — according to people with knowledge of the efforts. With that foundation, known as “Ajax,” Apple also has created a chatbot service that some engineers call “Apple GPT.”

Read more via Bloomberg: https://archive.md/3x0ii

Exclusive: Amazon Titan (Apr/2023)

Details about Amazon Titan are scarce. Recently, I spotted an Amazon Titan reference ('amazon.titan-tg1-large') in the wild on GitHub, so it’s probably time to give it its own page.

See my page on Titan: https://lifearchitect.ai/titan/

Amazon’s bare public page on Titan: https://aws.amazon.com/bedrock/titan/

OpenAI: delay on sunsetting ChatGPT & GPT-4 date models (Jul/2023)

Based on developer feedback, we are extending support for gpt-3.5-turbo-0301 and gpt-4-0314 models in the OpenAI API until at least June 13, 2024. We've updated our June 13 blog post with more details.

Read more: https://platform.openai.com/docs/models/continuous-model-upgrades

China is winning the AI patent race (18/Jul/2023)

Read more via Tech in Asia: https://archive.md/gjK60

China releases 103 large language models so far in 2023 (17/Jul/2023)

Excuse the format here; this is a very large image because the original source table is not available. For comparison, the US and other countries have released a few thousand large language models so far in 2023 (Hugging Face).

Source: https://twitter.com/AdeenaY8/status/1679435164747960320

Compare with my Models Table for English models: https://lifearchitect.ai/models-table/

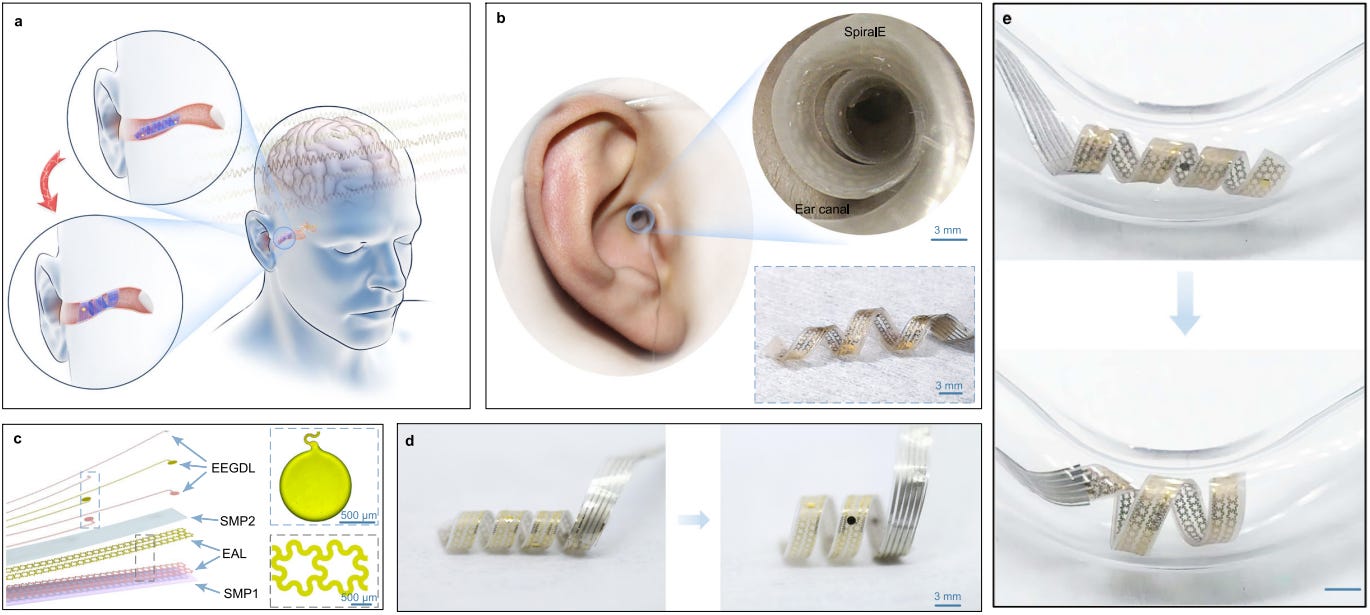

New brain-computer interface: Tsinghua SpiralE (22/Jul/2023)

Another exclusive while everyone is running around yapping about 3-year-old chatbot technology…

Brain-computer interfaces (BCIs) are advancing at a rapid pace. Tsinghua University and affiliates presented an innovative concept for a non-invasive BCI (a flexible spiral that is inserted in the ear) that is showing great results in related benchmarks.

Read the paper: https://www.nature.com/articles/s41467-023-39814-6

Read more: https://medicalxpress.com/news/2023-07-spiral-brain-computer-interface-ear-canal.htm

Watch the brief animated video showing flexibility:

New brain-computer interface: Stanford and Harvard (20/Jul/2023)

We developed an ultrasmall and flexible endovascular neural probe that can be implanted into sub-100-micrometer–scale blood vessels in the brains of rodents without damaging the brain or vasculature.

No photo for this one; the image is pretty gross. The design is similar to the old Synchron Stentrode™ now being worn by many patients in Australia.

Read the abstract: https://www.science.org/doi/10.1126/science.adh3916

Download the paper via biorxiv (PDF).

Read more: https://interestingengineering.com/innovation/mev-probe-records-neuron-activity

Robots: Unitree Go2 (20/Jul/2023)

The latest quadrupedal robot from Unitree Robotics is in direct competition with the Boston Dynamics Spot. But the price is much lower.

Boston Dynamics Spot: US$74,500

Unitree Go1: US$2,700

Unitree Go2 Air: US$1,600

Unitree Go2 Pro: US$3,050

I’d love to have one of these as a pet dog; no feeding, no cleaning up! It should be noted that Unitree is a Chinese company based in Hangzhou, and shipping is an additional $400.

76% of Gen Zers concerned about losing jobs to AI (17/Jul/2023)

ChatGPT and artificial intelligence: 62% of job seekers say they are concerned that ChatGPT and artificial intelligence could replace their jobs. Concern is highest among the youngest job seekers and those with the least education, but falls steadily with age and education. The share of job seekers worried about losing their jobs to ChatGPT rises from 41% among boomers to 76% among Gen Z, and from 52% among those with graduate or professional degrees to 72% of those without high school diplomas.

Cerebras Condor Galaxy 1: Massive supercomputer (21/Jul/2023)

Cerebras Systems and G42, a tech holding group, have unveiled their Condor Galaxy project, a network of nine interlinked supercomputers for AI model training with aggregated performance of 36 FP16 ExaFLOPs. The first supercomputer, named Condor Galaxy 1 (CG-1), boasts 4 ExaFLOPs of FP16 performance and 54 million cores. CG-2 and CG-3 will be located in the U.S. and will follow in 2024. The remaining systems will be located across the globe and the total cost of the project will be over $900 million.

Cerebras BTLM-3B-8K (25/Jul/2023)

The first model trained with the massive Condor Galaxy 1 is Cerebras’ BTLM-3B-8K.

The model is 3B parameters trained on 627B tokens. It performs at the level of a model with more than twice the number of parameters, and is designed to run on devices with as little as 3GB of memory [iPhone, MacBook] when quantized to 4-bit.
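The 3GB claim is easy to sanity-check with some rough arithmetic. This sketch takes the stated 3B parameters and 627B tokens at face value and counts weights only; it ignores activations, the attention cache, and runtime overhead, which is my simplifying assumption and the reason a real device needs headroom above the raw figure.

```python
def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Memory needed to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


PARAMS = 3e9    # stated parameter count
TOKENS = 627e9  # stated training tokens

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gb(PARAMS, bits):.1f} GB")

print(f"Training tokens per parameter: {TOKENS / PARAMS:.0f}")
```

At 4-bit the weights alone fit in about half of a 3GB budget, which is what leaves room for everything else the device needs to run the model.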

Read the announcement by Cerebras.

Policy

OpenAI’s new governance aims (21/Jul/2023)

OpenAI wrote some disappointingly unreadable points around governance: too much legalese and unnecessarily complicated language. This is not how you bring the public onside to your policy and development efforts…

There are eight points. I asked GPT-4 (via Poe.com) to ‘re-write this in very simple English, only one line per point’.

Make sure to check our AI systems for potential misuse, social risks, and threats to national security.

Share information about safety risks and suspicious activities with other companies and governments.

Put money into protecting our AI systems from hackers and insider threats.

Encourage external parties to find and report problems with our AI systems.

Create ways [like watermarking] for users to know if a piece of audio or visual content was made by AI.

Tell the public what our AI systems can do, what they can't do, and when it's right or wrong to use them.

Focus on studying how AI systems could cause social problems, like bias and invasion of privacy.

Build advanced AI systems to tackle big problems in society.

Read the original: https://openai.com/blog/moving-ai-governance-forward

Read the related release by the White House (21/Jul/2023).

Brookings: How close are we to AI that surpasses human intelligence? (18/Jul/2023)

Brookings has provided some brief commentary on AGI.

AGI: Threat or opportunity? Whenever and in whatever form it arrives, AGI will be transformative, impacting everything from the labor market to how we understand concepts like intelligence and creativity. As with so many other technologies, it also has the potential of being harnessed in harmful ways… At the same time, it is also important to recognize that AGI will also offer enormous promise to amplify human innovation and creativity. In medicine, for example, new drugs that would have eluded human scientists working alone could be more easily identified by scientists working with AGI systems.

Read more: https://www.brookings.edu/articles/how-close-are-we-to-ai-that-surpasses-human-intelligence/

OpenAI CEO Sam Altman has donated $200,000 to President Biden (Jul/2023)

Well, this is a first. And don’t expect it to happen again. I’m having to cite Fox News!

OpenAI CEO Sam Altman has donated $200,000 to President Biden's re-election campaign, according to federal documents.

Altman sent two transfers of $100,000 each to Biden's political committee, the Biden Victory Fund, in mid-June, a Federal Elections Commission (FEC) report shows…

Altman's donations, dated June 14, came just days before Biden visited San Francisco, where OpenAI is headquartered. Biden spent three days in the tech Mecca before departing on June 21.

Unfortunate source: https://www.foxbusiness.com/politics/openai-ceo-sam-altman-donated-200000-biden-campaign

Toys to Play With

ChatGPT for Customer Success: How to harness a revolutionary technology (Jul/2023)

Thanks to Jo for finding this one, and congratulations to author Mickey Powell. It’s always nice to see a shoutout like this, and even nicer to see a book with a beautiful user experience.

W&B: Current Best Practices for Training LLMs from Scratch (Apr/2023)

I was also cited in another report a few months ago: the Weights & Biases report called Current Best Practices for Training LLMs from Scratch.

Book by GPT-4: Emoji Puzzles by Adam Tal (Jul/2023)

Build it yourself (GitHub): https://github.com/AdmTal/emoji-puzzles

Website: https://www.emojipuzzlebook.com/

Book on Amazon Kindle (free for US readers).

Read the example book (PDF, 37 pages).

Midjourney top images (Jul/2023)

This will be a great milestone to look back on in 3, 6, and 12 months…

Take a look: https://www.midjourney.com/showcase/recent/

Midjourney 5.2 → Runway Gen-2: text-to-image to image-to-video (24/Jul/2023)

We are well on the way to generating high-quality movies via AI.

Watch:

Next

As promised, here’s a draft version of my latest paper, Integrated AI: Endgame. This paper has an evolutionary (rather than technical) focus.

Read the paper: https://lifearchitect.ai/endgame/

Watch my video (link):

All my very best,

Alan

LifeArchitect.ai