The Memo - 10/Dec/2022

Watermarking text in GPT models, Character.ai as the new Google LaMDA, DeepMind’s Dramatron playground, and much more!

FOR IMMEDIATE RELEASE: 10/Dec/2022

Welcome back to The Memo.

While The Memo is supposed to be sent out in monthly editions, there is a lot happening right now, so it has been a bit more frequent!

In the ‘toys to play with’ section at the end of this edition, we look at a ChatGPT-style tool with web access (free), the first user interface for Stable Diffusion 2.1 (free), DeepMind’s Dramatron playground released today (using GPT-3 instead of Chinchilla), and the new Leta archives!

The BIG Stuff

OpenAI is building a watermarking layer into text generation (28/Nov/2022)

Mega-engineer and former gifted child Scott Aaronson at OpenAI has been working on adding text watermarking to GPT outputs. In my Jan/2022 report to the UN, I made a recommendation that this be put in place for all models:

Recommendation 3. Watermarking. Ensure that AI-generated content is clearly marked (or watermarked) in the early stages of AI development. This recommendation may become less relevant as AI is integrated with humanity more completely in the next few years.

OpenAI’s text watermarking prototype already works. In the plainest English, as the GPT model generates a response, each word/token it picks will be nudged very slightly and consistently by a secret key. The user receives this watermarked output as usual (via API or Playground), and it can be measured and checked later by OpenAI/govt/potentially others. Scott says:

My main project so far has been a tool for statistically watermarking the outputs of a text model like GPT. Basically, whenever GPT generates some long text, we want there to be an otherwise unnoticeable secret signal in its choices of words, which you can use to prove later that, yes, this came from GPT.

…instead of selecting the next token randomly, the idea will be to select it pseudorandomly, using a cryptographic pseudorandom function, whose key is known only to OpenAI.

…we actually have a working prototype of the watermarking scheme, built by OpenAI engineer Hendrik Kirchner. It seems to work pretty well—empirically, a few hundred tokens seem to be enough to get a reasonable signal that yes, this text came from GPT.

Scott’s entire post is worth reading, and he links out to one of my papers. Warning: the post is over 10,000 words, plus more than 100 insightful comments.
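To make the idea concrete, here is a toy sketch of how keyed pseudorandom token selection, and later detection, could work. It follows the approach as Scott describes it in his post (pick the token that maximizes r^(1/p), where r is a keyed pseudorandom number and p is the model’s probability for that token; detect by averaging ln(1/(1 - r)) over the text), but it is not OpenAI’s code. The function names, the HMAC-SHA256 construction, and the 4-token context window are my own assumptions for illustration.

```python
import hashlib
import hmac
import math

def prf_unit(key: bytes, context: tuple, token: str) -> float:
    """Keyed pseudorandom number strictly between 0 and 1 for a (context, token) pair.
    HMAC-SHA256 is an assumed stand-in for 'a cryptographic pseudorandom function'."""
    msg = ("\x1f".join(context) + "\x1e" + token).encode("utf-8")
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    n = int.from_bytes(digest[:8], "big") >> 12  # keep the top 52 bits
    return (n + 0.5) / 2**52                      # exact float, never 0.0 or 1.0

def watermarked_choice(key: bytes, context: tuple, probs: dict) -> str:
    """Pick the token maximizing r ** (1 / p).
    Computed as log(r) / p so tiny probabilities do not underflow to zero."""
    best_token, best_score = None, -math.inf
    for token, p in probs.items():
        if p <= 0:
            continue  # the model never picks zero-probability tokens
        score = math.log(prf_unit(key, context, token)) / p
        if score > best_score:
            best_token, best_score = token, score
    return best_token

def watermark_score(key: bytes, tokens: list, window: int = 4) -> float:
    """Average of ln(1 / (1 - r)) over the text.
    Roughly 1.0 for text written without the key; noticeably higher if watermarked."""
    if not tokens:
        return 0.0
    total = 0.0
    for t, token in enumerate(tokens):
        context = tuple(tokens[max(0, t - window):t])  # same context the generator saw
        total += -math.log(1.0 - prf_unit(key, context, token))
    return total / len(tokens)
```

In this sketch, a generator would call watermarked_choice at each step with context = tuple(tokens[-4:]), so the detector can rebuild the same contexts from the text alone. Picking the maximum of r^(1/p) selects each token with probability p, so the output reads the same as normal sampling to anyone without the key; only the key holder sees the score climb. The boost is smaller when the model has little freedom in its word choice, which fits Scott’s note that a few hundred tokens are needed for a reasonable signal.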

Anthropic estimates AI model training costs will increase by 100x (8/Dec/2022)

In a recent live presentation, Dario Amodei and Anthropic (ex-OpenAI staff) commented on the large language model training space, including scaling. Even as we become much more efficient in training, Anthropic are estimating that organizations will continue to increase spending on compute, by a factor of 100. (‘Cost on biggest model today [Dec/2022] is $10M. Expect $100M in a few years [~2026]. $1B by 2030.’)

via Rhys Lindmark.
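As a rough check on Anthropic’s numbers, here is a small sketch computing the compound annual growth each milestone implies. It assumes 2022 as the base year and reads ‘a few years’ as ~2026, as in the bracketed estimate above; both are approximations, and the function name is just for illustration.

```python
def implied_annual_growth(start_cost: float, end_cost: float, years: float) -> float:
    """Compound annual growth rate needed to go from start_cost to end_cost in `years`."""
    return (end_cost / start_cost) ** (1.0 / years) - 1.0

# Milestones from the quote, measured from an assumed Dec/2022 base of $10M.
base_cost = 10e6
milestones = [("~2026", 100e6, 4), ("2030", 1e9, 8)]

for label, cost, years in milestones:
    rate = implied_annual_growth(base_cost, cost, years)
    print(f"{label}: ${cost / 1e6:,.0f}M implies {rate:.0%} growth per year")
```

Both milestones work out to roughly 78% growth per year (10x in four years, 100x in eight), so the $100M and $1B estimates sit on the same exponential curve.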

The Interesting Stuff

OpenAI CEO talks about the Singularity (19/Nov/2022)

All 21 minutes are worth watching! Sam says:

Imagine that all of the scientific progress since the beginning of the Enlightenment until now could happen in one year…

I think we're really just gonna have to think about how we share wealth in a very different way than we have in the past. Like the fundamental forces of capitalism and what make that work, I think break down a little bit… some version of like a basic income or basic wealth redistribution or some sort of version like that. We're trying to study that. I think it's collectively under-explored.


I’ve previously defined the Singularity in this video.

The LifeArchitect.ai Who Moved My Cheese? AI Awards! (Dec/2022)

Look, I didn’t really want to do this. But the sheer lunacy of some of the noisiest critics deserves to be memorialized, much like the famous Darwin Awards.

Inspired by Spencer Johnson’s 1998 business classic, Who Moved My Cheese?, these awards recognize those who are most change-resistant, their outrageous quotes and claims, and… that’s about it!

Take a look: https://lifearchitect.ai/cheese/

Character.ai provides some detail (5/Dec/2022)

Prompted by the noisy release of ChatGPT, the team have finally spoken up, after months of silence. Character.ai now generates 1 billion words per day (~694,000wpm), which is only ~22% of what GPT-3 was reportedly generating back in early 2021.
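The throughput comparison works out with a few lines of arithmetic. This quick sketch assumes the ~22% figure is measured against OpenAI’s publicly reported GPT-3 average of 4.5 billion words per day (Mar/2021); the variable names are mine.

```python
MINUTES_PER_DAY = 24 * 60

character_ai_words_per_day = 1_000_000_000  # Character.ai beta, Dec/2022
gpt3_words_per_day = 4_500_000_000          # assumption: OpenAI's reported GPT-3 average, Mar/2021

wpm = character_ai_words_per_day / MINUTES_PER_DAY
share = character_ai_words_per_day / gpt3_words_per_day

print(f"{wpm:,.0f} words per minute")
print(f"{share:.1%} of GPT-3's reported daily output")
```

This prints 694,444 words per minute and 22.2%.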

Still, that’s pretty good for a new project! Expect to see more of these guys in 2023. Noam Shazeer says:

As an early engineer at Google, I’ve built AI systems used by billions of people, from Google's Spell Checker ("did you mean?") to the first targeting system for Adsense. I also co-invented Transformers, scaled them to supercomputers for the first time, and pioneered large-scale pretraining, all of which are foundational to recent AI progress…

My co-founder Daniel de Freitas and I witnessed this technology's possibilities first-hand as he invented and led the development of Meena and LaMDA…

Two months after launching in September, our beta generates 1 billion words per day. We've been particularly impressed by the creativity of our community, who have created more than 350,000 unique Characters, with applications spanning searching for information, coaching, education, entertainment and more.

Read more: https://blog.character.ai/introducing-character/

Try it yourself: https://beta.character.ai/

Also, try interacting with ‘The Psychologist’.

OpenAI updates Whisper on GitHub (6/Dec/2022)

Just a small update to the large model, released as large-v2. This improves transcription of languages other than English.

OpenAI Codespace rumor (8/Dec/2022)

Reportedly, there is a hidden space inside ChatGPT called ‘Codespace’, currently available only to OpenAI staff.

via samczsun.

AI checker by Originality.ai (8/Dec/2022)

For the current crop of post-2020 large language models, and without any fancy layer like Scott’s solution at the top of this edition, it has not been possible to reliably detect AI-generated text. Back in 2019, Hugging Face (HF) hosted a model that attempted to detect whether text had been generated by GPT-2, with mixed results.

Originality.ai now thinks it can somehow detect whether raw text is AI-generated (hint: it probably can’t in most cases). The platform ingests text and then provides an AI-detection score and a plagiarism check. I see this as a strange sideways glance on the highway to superintelligence, and not a real solution (even if it works, and even for academia!).

Originality AI can detect the AI on all the text generated by GPT-3, GPT-3.5, and ChatGPT by 99.41% on average… GPT-3 has the highest maximum average score of 99.95%, followed by GPT-3.5 99.65%, and ChatGPT at 98.65%.

Take a look: https://originality.ai/can-gpt-3-5-chatgpt-be-detected/

More autonomous cars: Uber in Las Vegas (7/Dec/2022)

Uber now has robotaxis available for its customers to hail in Las Vegas… Motional, a joint venture between Hyundai and Aptiv… will feature safety drivers behind the steering wheel, though the vehicles will be operated by Motional’s autonomous driving system. Riders are not being charged for the initial launch, with both companies saying that fares will come in the future. And Motional says it intends on launching a public fully driverless service without safety drivers in 2023.

I rode in a driverless Waymo (from Google’s parent company, Alphabet) last year while living in the US. There was no safety driver, just me and the AI. It successfully navigated busy intersections and even parking lots with pedestrians. Waymo’s ride-hailing service in the Phoenix area of Arizona has been operating for four years, since 2018! You can watch a bit of that in my 2021 report.

Read more about Motional: https://www.theverge.com/2022/12/7/23496383/uber-motional-av-robotaxi-las-vegas-ridehail

Toys to Play With

DeepMind releases a Dramatron demo with GPT-3 instead of Chinchilla (10/Dec/2022)

Dramatron is a script writing tool [based on Chinchilla 70B] that leverages large language models. This page allows you to use a basic version of the Dramatron tool [using GPT-3].

Try it with your OpenAI key: https://deepmind.github.io/dramatron/index.html

Watch my video about this amazing model:

mage.space now using Stable Diffusion 2.1 (8/Dec/2022)

I love this free platform for quick text-to-image generation. The team notes that: “In general, v1.5 is better for art and NSFW, while v2.1 is better for realism.”

To use the latest engine, you’ll need to click these buttons before generating:

View options > Stable Diffusion v2.1

I also click this for a decent aspect ratio:

View options > Landscape (3:2)

Try it for free: https://www.mage.space/

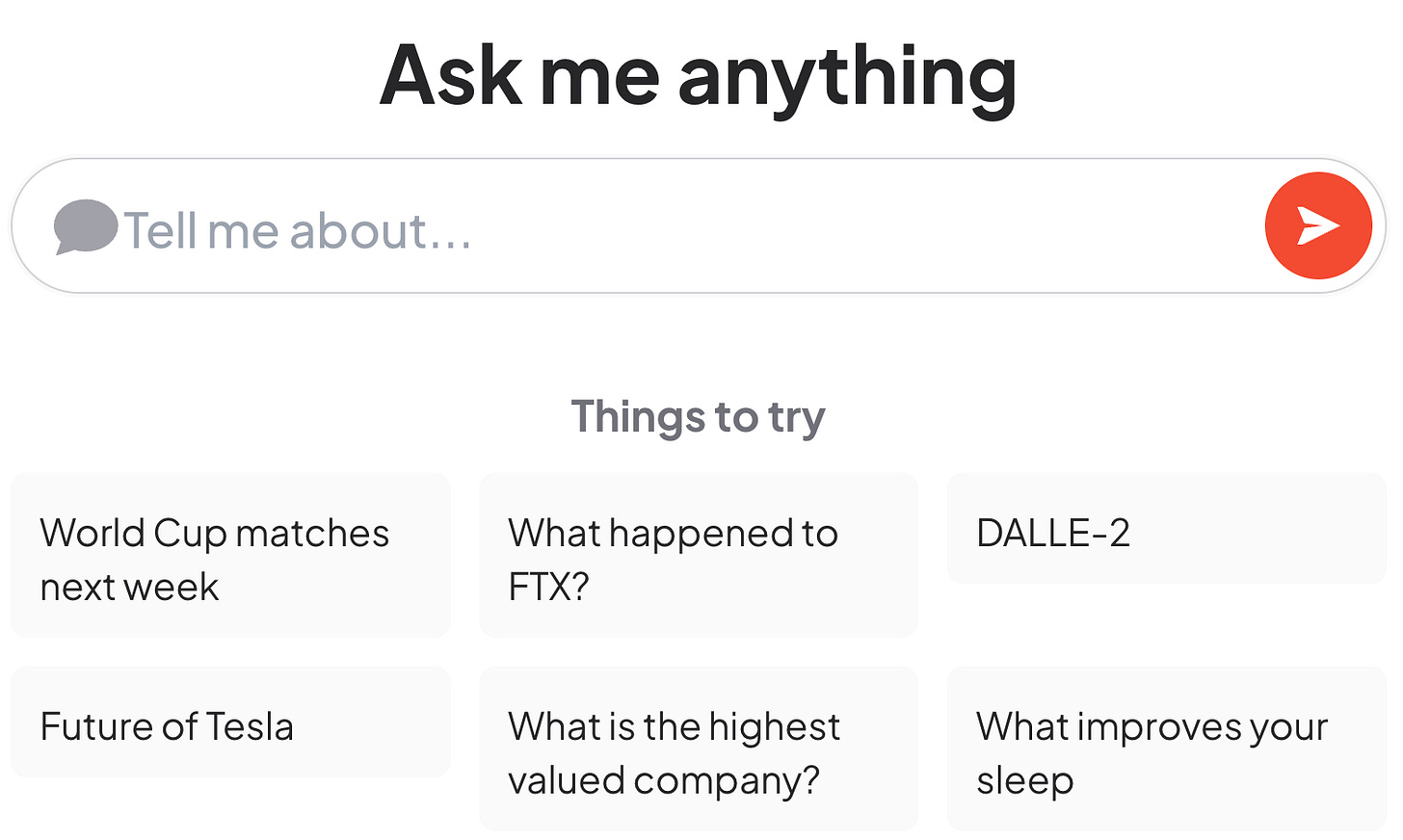

Perplexity.ai includes web access (Dec/2022)

If you liked ChatGPT (where web access is currently disabled), you’ll probably like this!

This is a demo inspired by OpenAI WebGPT, not a commercial product. We use large language models (OpenAI API) and search engines.

If Perplexity is using text-davinci-003, and I think it is, its outputs should be even better than ChatGPT’s, though it does not support back-and-forth dialogue…

Try it out for free: https://www.perplexity.ai/

Here’s my test prompt for a friend who lives in Claremont, just west of Perth. I loved the real estate listings at the end!

https://www.perplexity.ai/?q=What+can+i+see+from+my+balcony+in+claremont%3F%2C+WA%3F
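The Perplexity quote only says that it combines large language models with search engines. As a hedged illustration of that general pattern (not Perplexity’s actual code), here is a sketch of how search results might be packed into a prompt before it goes to a model. The SearchResult type, the build_grounded_prompt function, and the example.com URLs are hypothetical, and no real search or OpenAI API call is made.

```python
from dataclasses import dataclass

@dataclass
class SearchResult:
    """One hypothetical search-engine hit."""
    title: str
    url: str
    snippet: str

def build_grounded_prompt(question: str, results: list, max_results: int = 3) -> str:
    """Assemble a prompt that asks the model to answer only from numbered sources."""
    lines = ["Answer the question using only the sources below. Cite sources like [1].", ""]
    for i, r in enumerate(results[:max_results], start=1):
        lines.append(f"[{i}] {r.title} ({r.url})")
        lines.append(f"    {r.snippet}")
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)

# Usage with placeholder results (a real tool would fetch these from a search API).
results = [
    SearchResult("Example page A", "https://example.com/a", "Placeholder snippet A."),
    SearchResult("Example page B", "https://example.com/b", "Placeholder snippet B."),
]
print(build_grounded_prompt("What can I see from my balcony in Claremont, WA?", results))
```

In this sketch, the finished prompt would then be sent to a completion model such as text-davinci-003; asking for numbered citations is one way a tool like this could point back to its sources.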

Leta AI added to The Internet Archive (Dec/2022)

All episodes of the Leta AI series have been added to archive.org for very-long-term backup (i.e. hopefully forever) and historical record. You can leave a review for posterity if you’d like.

Take a look: https://archive.org/details/leta-ai

Next

Because I’ve been asked about it so many times, I’ve prepared:

I’ve used some of the concepts from this great post: Illustrating RLHF (9/Dec/2022).

All my very best,

Alan

LifeArchitect.ai

Housekeeping…

Unsubscribe:

For subscriptions started before 17/Jul/2022, please use the older interface, or just reply to this email and we’ll stop your payments and take you off the list!

For subscriptions started from 17/Jul/2022 onward, please use Substack as usual.

Note that the subscription fee for new subscribers will increase from 1/Jan/2023. If you’re a current subscriber, you’ll always be on your old/original rate while you’re subbed.

Gift a subscription to a friend or colleague for the holiday season: