The Memo - 19/Feb/2024

OpenAI Sora, Gemini 1.5, Stable Cascade, and much more!

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 19/Feb/2024

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 70%The winner of the The Who Moved My Cheese? AI Awards! for February 2024 is Gergely Orosz:

As a [software engineer], it is, frankly, terrifying to stare down the possibility of “superhuman devs” that are AI tools running on GPUs that do your job better. I get that this is what investors and founders want. I’m not that eager to rush to this future. (16/Feb/2024)

Gergely briefly consulted to OpenAI, and was one of the first to publicly reveal GPT-4’s architecture (here’s the widely accepted version from 11/Jul/2023 or see my GPT-4 page). I was beyond shocked to hear this take from an informed expert. I have enormous sympathy for Gergely, yet still find it strange that humans are so change-resistant. I keep coming back to this old quote from Ray Kurzweil:

It’s taken me a while to get my mental and emotional arms around the dramatic implications of what I see for the future [with artificial intelligence]. So, when people have never heard of ideas along these lines, and hear about it for the first time and have some superficial reaction, I really see myself some decades ago. I realize it’s a long path to actually get comfortable with where the future is headed. (2009)

The next roundtable will be 16/Mar/2024, details at the end of this edition.

The BIG Stuff

OpenAI Sora (‘sky’) (15/Feb/2024)

This model bumped up my AGI countdown to 70%: https://lifearchitect.ai/agi/

In plain English, OpenAI Sora (Japanese for ‘sky’) is a new AI model that can ‘understand and simulate the physical world in motion… solve problems that require real-world interaction.’

In response to a text prompt, it conceptualizes 1-minute HD video from scratch. But Sora is not just a text-to-video model. It is a world model with a fully-functioning physics engine underlying its conceptualizations. It is text-to-video, image-to-video, text+image-to-video, video-to-video, video+video-to-video (sample), text+video-to-video (sample), and text-to-image (sample).

Dr Jim Fan from NVIDIA provided an excellent explanation of this sample video output by OpenAI:

Prompt: Photorealistic closeup video of two pirate ships battling each other as they sail inside a cup of coffee.

The simulator instantiates two exquisite 3D assets: pirate ships with different decorations. Sora has to solve text-to-3D implicitly in its latent space.

The 3D objects are consistently animated as they sail and avoid each other’s paths.

Fluid dynamics of the coffee, even the foams that form around the ships. Fluid simulation is an entire sub-field of computer graphics, which traditionally requires very complex algorithms and equations.

Photorealism, almost like rendering with raytracing.

The simulator takes into account the small size of the cup compared to oceans, and applies tilt-shift photography to give a "minuscule" vibe.

The semantics of the scene does not exist in the real world, but the engine still implements the correct physical rules that we expect. (16/Feb/2024)

Like DALL-E 3 (paper), Sora is AI trained by AI. ‘We first train a highly descriptive captioner model and then use it to produce text captions for all videos in our training set.’

See the OpenAI Sora project page with all videos: https://openai.com/sora

Watch a movie of all videos upscaled to 4K (no sound): https://youtu.be/f2h9Jt2Awpc

Read about a possible emergent ability: Object permanence and spatial awareness.

There is a sinister rumor that this is related to OpenAI’s Q* project, and that Sora has been available in the OpenAI lab for about a year, since March 2023. This is similar to GPT-4, which—even after safety guardrails had been applied—was recommended to be held in the lab for more than a year, and was still held for eight months before release. Sora is not being released to the public in the short-term, but was perhaps revealed to coincide with the announcements of two other models (Meta V-JEPA and Google Gemini 1.5, both below).

Not including the rumored one year delay, if Sora is released in Dec/2024, it will line up with forced time delays imposed by OpenAI for its other models all the way back to 2019, and robbing the public of access to increased intelligence:

Viz: https://lifearchitect.ai/gap/

[Sidenote: OpenAI addressed the unfair economic advantages of first movers in its DALL-E 2 analysis: https://lifearchitect.ai/gpt-4#cronyism and in Oct/2023 I provided a look at what is happening now using Tegmark’s prescient Life 3.0 chapter called ‘AGI achieved internally: A re-written story‘.]

Sora’s announcement and delay may also be due to the US elections happening in November 2024. OpenAI’s policy advisor Dr Yo Shavit noted in a reply to a deleted tweet:

We very intentionally are not sharing [OpenAI Sora] widely yet - the hope is that a mini public demo kicks a social response into gear. (15/Feb/2024)

That social—and especially governmental—response is something I’ve been waiting on for the last few years. See my media releases:

Media release: AI fire alarm (20/Jul/2021)

Media release: AI is outperforming humans in both IQ and creativity in 2021 (19/Sep/2021)

Media release: Artificial general intelligence is here. Leaders not endorsing this revolution are guilty of negligence. (25/Oct/2023)

Gemini 1.5 Pro (15/Feb/2024)

This model also bumped up my AGI countdown: https://lifearchitect.ai/agi/

The big changes are:

Model architecture has moved from ‘dense’ (like nearly all other models) to a ‘sparse mixture-of-experts’ (like GPT-4). This means it was cheaper to train, has more parameters (like human synapses) but not all of them activated at once, with the drawback of being a lot more complex to implement. This would explain the delay between version 1.0 and 1.5, even though Google DeepMind may have been working on them both at the same time.

Much higher performance, even with ‘smaller’ models (using this different architecture).

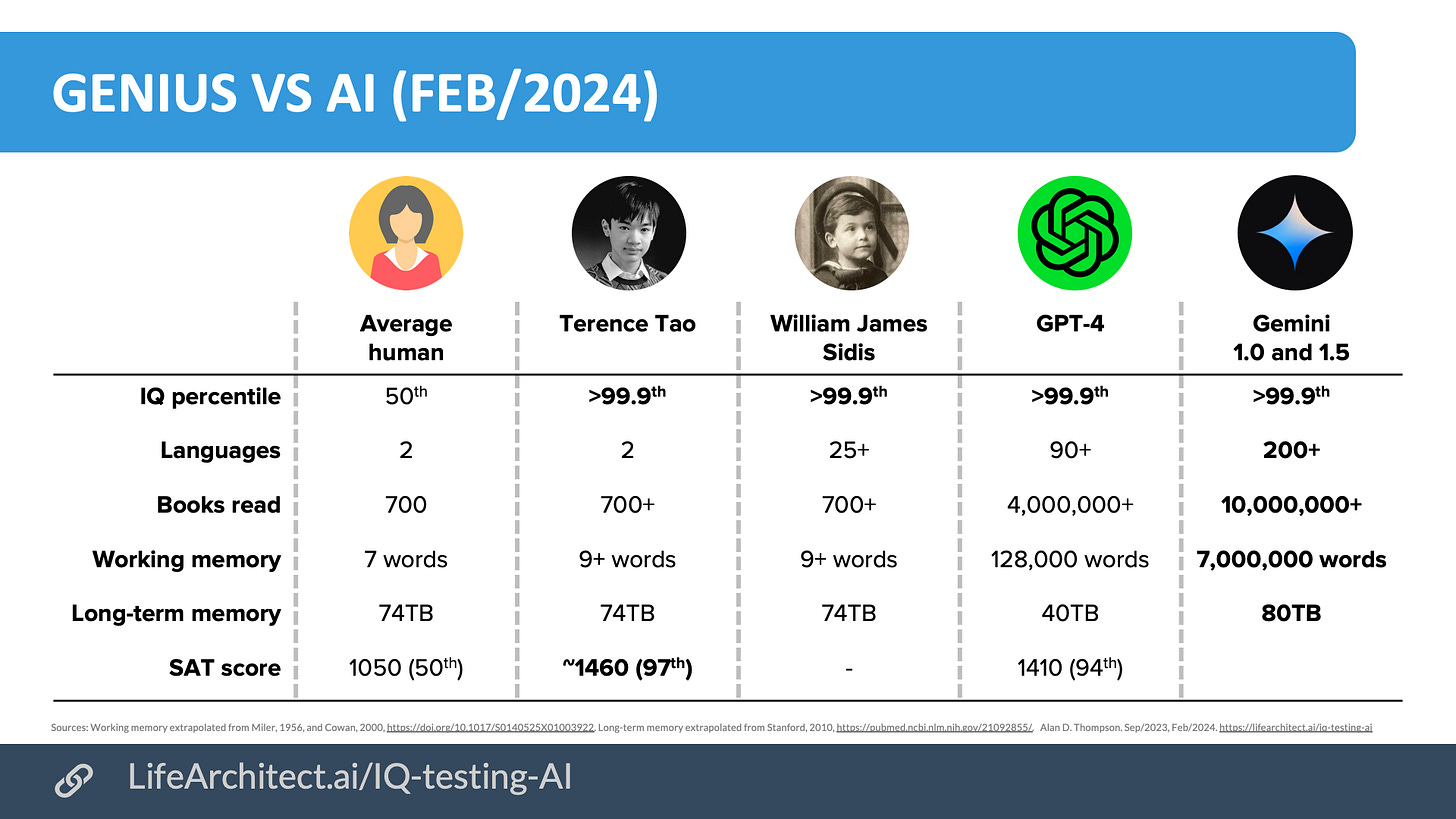

Short term memory or working memory (includes manipulation of information, and called ‘context window’ in AI models) of 1 million tokens in production and 10 million tokens in the lab. Compare this to 128,000 tokens for GPT-4, and usually around 4,000 tokens for other models. [Sidenote: Google released an appendix called ‘What is a long context window?’ on 16/Feb/2024.] A longer working memory allows Gemini 1.5 to hold a lot of information in its ‘mind’ (months of conversation at once), and is already superhuman compared to our average working memory of about seven words(!).

If you’re interested in comparing your own working memory with machines performing at 1,000,000×, there are a few online tests available. You’ll find this basic digit span task subtest in many IQ assessments, including the Wechsler Adult Intelligence Scale (WAIS). Here’s a free version, no login. Click ‘Forward’ (so it says ‘Reverse’) and then ‘New Test’ to be in simulated test conditions.

[Sidenote: Child prodigies always appear in the 99th percentile for working memory, regardless of their performance in other subtests (14/Mar/2014). You can watch my 2018 series Making child prodigies on YT or Apple TV. In the test below, with a span of 6 in reverse, I scored 0.]

Click ‘Forward’ (so it says ‘Reverse’) and then ‘New Test’ to be in simulated test conditions.

See the viz: https://lifearchitect.ai/iq-testing-ai/

See Gemini 1.5 Pro on the Models Table: https://lifearchitect.ai/models-table/

I released my final report on Gemini here: https://lifearchitect.ai/gemini-report/

ChatGPT outputting 1.5 million tokens per second worldwide (Feb/2024)

I’d previously estimated ChatGPT’s output as a bit higher than this, but it was great to hear OpenAI’s CEO reveal that the platform is outputting 100B words per day. Here are those numbers in full compared to GPT-3 just three years ago…

Download viz: https://lifearchitect.ai/chatgpt/

The Interesting Stuff

UPenn announces first Ivy League undergraduate degree in AI (15/Feb/2024)

The University of Pennsylvania introduces the first Ivy League Bachelor of Science in Engineering (BSE) in Artificial Intelligence (sic, yes all of those ‘ins’ are part of the official degree title), focusing on the ethical application of AI in various fields and featuring coursework in machine learning, computing algorithms, and robotics. The course starts September 2024.

Read more via TimeNow.

Browse the curriculum: https://ai.seas.upenn.edu/curriculum/

Two months after the public release of GPT-4 and its training in Icelandic (OpenAI, Mar/2023), I helped design the world’s first Executive Master in Artificial Intelligence for the Icelandic Center for Artificial Intelligence (ICAI). Readers with a full subscription to The Memo can watch my opening lecture here:

Introducing Stable Cascade (12/Feb/2024)

Stability AI announced the research preview release of Stable Cascade, a new text-to-image model that is easy to train and fine-tune on consumer hardware, and offers efficient hierarchical compression of images for high-quality output.

I expect that this will be available on Poe.com shortly.

Read more via Stability AI.

View the repo: https://github.com/Stability-AI/StableCascade

Sam Altman Seeks Trillions of Dollars to Reshape Business of Chips and AI (8/Feb/2024)

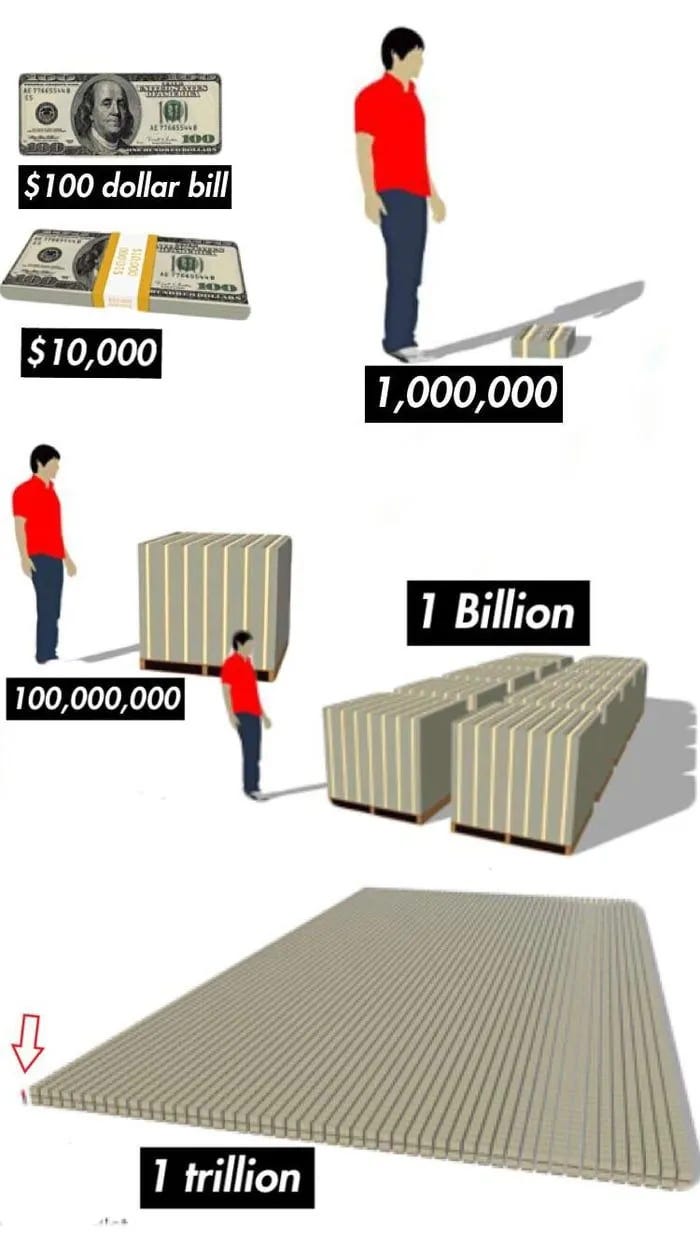

The OpenAI chief executive officer is in talks with investors including the United Arab Emirates government to raise funds for a wildly ambitious tech initiative that would boost the world’s chip-building capacity, expand its ability to power AI, among other things, and cost… $5 trillion to $7 trillion…

Read more via WSJ.

Seven trillion is a very, very large number.

To put a trillion dollars in context, if you spend a million dollars every day since Jesus was born, you still wouldn't have spent a trillion. — Mitch McConnell via CNN (2009).

Read the original comparison and viz from 2009 via archive.org

Read a very interesting thought experiment (slash conspiracy theory, but also realistic) in this related comment (18/Feb/2024):

What would you expect to see? Requests for money that are granted. Colossal, truly absurd amounts of money. Say, 7 trillion dollars. The only product that could convince anybody to give that much money is AGI that can't be faked. So if there is any indication that that money has been given, you can be sure of AGI.

…Any company that makes AGI is going to want to feed it as many GPUs as money can buy, while delaying having to announce AGI. They've now changed from a customer-facing company, to a ninja throwing smoke bombs. In order to throw people off the scent, they're going to want to release a bunch of amazing new products and make random cryptic statements to keep people guessing for as long as possible. Their actions will start to seem more and more chaotic and unnecessarily obtuse. Customers will be happy, but frustrated. They will start to release products that are unreasonably better than they should be, with unclear paths to their creation. There will be sudden breakdowns in staff loyalty and communications. Firings, resignations, vague hints from people under NDAs.

One day soon after, the military will suddenly take a large interest and all PR from the company will go quiet. That's when you know it's real. When the curtain comes down and everyone stops talking, but the chequebooks continue to open up so wide that nobody can hide how many resources are being poured into the company from investors and state departments. Bottlenecks reached for multiple industries. Complete drought of all GPUs, etc.

The current situation meets some of these criteria, but not others. If there is no indication of the 7 trillion being provided, it was hype. If there is any indication that it is being provided, AGI is upon us, or something that looks exactly like AGI.

This is another comprehensive edition. Let’s look at more AI, including Google Goose, V-JEPA, several detailed OpenAI updates, policy movements, and more. Full subscribers will get access to my 2024 keynote rehearsal video shortly…

Google Gemini + Goose (14/Feb/2024)

In the last edition of The Memo 8/Feb/2024, we talked about Google Gemini being used to fix code inside Google. There is apparently another model at Google named 'Goose' to help employees write code faster. The internal AI model, Goose, trained on Google's 25 years of engineering expertise, was designed to increase productivity in coding and product development among its employees.

Read more via Business Insider: https://archive.md/04LjR

V-JEPA: Video Joint Embedding Predictive Architecture (15/Feb/2024)

On the same day as OpenAI Sora and Google Gemini 1.5, Meta introduced the Video Joint Embedding Predictive Architecture (V-JEPA), enhancing machine intelligence with improved video understanding capabilities and efficient learning, released under a Creative Commons NonCommercial license.

Meta’s Chief AI Scientist Dr Yann LeCun believes that JEPA is an alternative to transformer (and generative AI), and a better way to achieve artificial general intelligence. [Sidenote: Yann is one of the 99% of AI experts that I do not listen to.]

Read the announce: https://ai.meta.com/blog/v-jepa-yann-lecun-ai-model-video-joint-embedding-predictive-architecture/

Read the paper: https://ai.meta.com/research/publications/revisiting-feature-prediction-for-learning-visual-representations-from-video/

Karpathy leaves OpenAI (14/Feb/2024)

We reported in The Memo edition 17/Feb/2023 that Slovak-Canadian AI researcher Dr Andrej Karpathy had left Tesla to join OpenAI. It seems that a year in the role was long enough, as he has now left OpenAI. It may be to do with OpenAI’s relentless pursuit of AGI to replace humans, while other researchers prefer the less intrusive idea of human augmentation. Or perhaps he just wants to work with a company that is less closed…

Source tweet: https://twitter.com/karpathy/status/1757600075281547344

NVIDIA launches a chatbot that can run on your PC's GeForce RTX GPU (13/Feb/2024)

NVIDIA has introduced ‘Chat with RTX’, a personal AI chatbot application that runs locally on PCs with GeForce RTX 30 or 40 Series GPUs, promising fast and contextually relevant interactions.

Read more via TechSpot.

The setup seems to offer Llama 2 7B and Mistral 7B models by default.

See the Models Table: https://lifearchitect.ai/models-table/

AI protest at OpenAI HQ in San Francisco focuses on military work (13/Feb/2024)

Protesters gathered at OpenAI’s San Francisco office to demand the company stop engaging in military work.

Read more via Bloomberg.

OpenAI denied GPT trademark (8/Feb/2024)

This is the second time the USPTO has denied OpenAI’s trademark application for the acronym ‘GPT’. The first refusal was in May 2023. OpenAI can ask the USPTO one more time to reconsider its decision, or file an appeal with mediators.

The Internet attached evidence establishes that "GPT" is a widely used acronym which means "generative pre-trained transformers," which are neural network models that "give applications the ability to create human-like text and content (images, music, and more), and answer questions in a conversational manner."

Read more via USPTO (inline PDF) or download:

Memory and new controls for ChatGPT (13/Feb/2024)

OpenAI is testing a new memory feature for ChatGPT, allowing it to remember past interactions for more helpful future conversations, with full user control over the memory settings.

This is unrelated to working memory or context window as we explored above, and is likely using retrieval-augmented generation (RAG) instead.

Read more: https://openai.com/blog/memory-and-new-controls-for-chatgpt

Policy

Disrupting malicious uses of AI by state-affiliated threat actors (14/Feb/2024)

OpenAI, in partnership with Microsoft Threat Intelligence, has disrupted five state-affiliated actors attempting to use AI for malicious cyber activities and emphasizes the importance of collaborative defense and transparency in AI safety.

These nation-state groups, are utilizing large language models like ChatGPT to enhance their cyberattacks, with activities ranging from scripting to crafting phishing emails.

Read more via The Verge.

Read an official summary via OpenAI.

Read an official summary with much more detail via Microsoft.

Fact Sheet: Biden-Harris Administration announces key AI actions following President Biden’s landmark Executive Order (29/Jan/2024)

The Biden-Harris Administration announced substantial progress in AI policy, including strengthening AI safety and security, after a landmark Executive Order directed a range of actions within 90 days.

Read more via the Whitehouse.

The FCC’s Ban on AI in Robocalls Won’t Be Enough (13/Feb/2024)

Experts doubt the effectiveness of the FCC's ban on AI-generated voices in robocalls, as generative AI makes targeted scams easier and enforcement remains challenging.

In the days before the U.S. Democratic Party’s New Hampshire primary election on 23 January, potential voters began receiving a call with AI-generated audio of a fake President Biden urging them not to vote until the general election in November. In Slovakia a Facebook post contained fake, AI-generated audio of a presidential candidate planning to steal the election—which may have tipped the election in another candidate’s favor. Recent elections in Indonesia and Taiwan have been marred by AI-generated misinformation, too.

Traceback, for example, identified the source of the fake Biden calls targeting New Hampshire voters as a Texas-based company called Life Corporation. The problem, Burger says, is that the FCC, the U.S. Federal Bureau of Investigation, and state agencies aren’t providing the resources to make it possible to go after the sheer number of illegal robocall operations…

“I don’t think we can appreciate just how fast the telephone experience is going to change because of this”…

…in the case of calls like the fake Biden one, it was already illegal to place those robocalls and impersonate the president, so making another aspect of the call illegal likely won’t be a game-changer.

…With the FCC’s unanimous vote to make generative AI in robocalls illegal, the question naturally turns to enforcement.

“By making it triply illegal, is that really going to deter people?”

Read more: https://spectrum.ieee.org/ai-robocalls-2667266649

Toys to Play With

VR: AVP competitors (2024)

These two headsets have very similar resolution and design to the Apple Vision Pro, but they have a tighter focus (entertainment and productivity respectively).

Bigscreen Beyond (5120×2560): https://www.bigscreenvr.com/

Immersed Visor (4K microOLED per eye): https://visor.com/

The ChatGPT system prompt (2024)

The ChatGPT system prompt was revealed and discussed in detail recently. While the prompt may not be completely ‘true’ (there may be some hallucination or other factor affecting how it is output), the grammar and syntax are surprisingly bad for such an important work. Some of the commands seem to be arbitrary, others may be the cause of ChatGPT’s poor performance. For example, this rule within the prompt may explain some ‘laziness’:

Never write a summary with more than 80 words. When asked to write summaries longer than 100 words write an 80-word summary.

Read the full prompt: https://pastebin.com/vnxJ7kQk

Google Imagen 2 via ImageFX is a lot of fun (Feb/2024)

Try it: https://aitestkitchen.withgoogle.com/tools/image-fx (free, login)

Flashback

This piece of satire was first posted a year ago in Feb/2023.

Next

The next roundtable will be:

Life Architect - The Memo - Roundtable #8

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 16/Mar/2024 at 4PM Los Angeles

Saturday 16/Mar/2024 at 7PM New York

Sunday 17/Mar/2024 at 8AM Perth (primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!

All my very best,

Alan

LifeArchitect.ai