The Memo - 21/Apr/2024

Reka Core, Grok-1.5V, frontier model benchmarks GPQA + MMLU, and much more!

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 21/Apr/2024

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 72%

OpenAI CEO (he says this a lot; May/2023 & 16/Apr/2024):

I do think that when we [have AGI/ASI and] look back at the standard of living and what we tolerate for people today [in 2024], it will look even worse than when we look back at how people lived 500 or 1,000 years ago. ‘Can you imagine that people lived in poverty? Can you imagine people suffered from disease? Can you imagine that everyone didn't have a phenomenal education and were able to live their lives however they wanted?’ It's going to look barbaric.

I’ve opened up my main testing prompts by putting them behind a ‘no index’ protected page. It’s quick and easy to use, and the password is provided in the title. Feel free to try this with your favorite model. Only the latest gpt-4-turbo-2024-04-09 model achieves 5/5, and many models struggle to format a table and score even 1/5! I’ll be refreshing the prompt shortly for the second half of 2024.

ALPrompt: https://lifearchitect.ai/ALPrompt/

The BIG Stuff

Ray Kurzweil says that technological progress is moving faster than his forecasts (12/Apr/2024)

Kurzweil is now suggesting that we’ll hit AGI by 2026. That would be very close to my countdown, which shows AGI around 2025…

18m48s

I made a prediction in 1999 [2029 for AGI and 2045 for the Singularity]. It feels like we're two or three years ahead of that…

22m30s

[When we achieve artificial general intelligence, AGI, median human level in] 2026, we may not be able to understand everything going on, but we can understand it. Maybe it's like a hundred humans, but that's not beyond what we can comprehend.

[Artificial superintelligence, ASI in] 2045 will be like a million humans, and we can’t begin to understand that. So approximately at that time – we borrowed this phrase from physics and called it a ‘Singularity’.

Watch the interview on YouTube (18m48s timecode).

Reka Core (15/Apr/2024)

Commercial AI lab Reka AI has unveiled Reka Core, its largest and most capable multimodal language model to date. Core is competitive with leading industry models across key evaluation metrics. Its capabilities include image/video understanding, 128K context window, superb reasoning abilities, coding and agentic workflows, and fluency in 32 languages.

The paper provides no architecture details, but I’ve estimated this model at 300B parameters trained on 10T tokens. The performance is surprisingly poor—probably because they don’t have whatever secret sauce OpenAI et al are applying—scoring only 2/5 on my ALPrompt.

Read the paper: https://publications.reka.ai/reka-core-tech-report.pdf (PDF, 21 pages)

Try it (login): https://chat.reka.ai/chat or via Poe.com (login): https://poe.com/RekaCore

Grok-1.5 Vision Preview (12/Apr/2024)

Grok-1.5V(ision) is xAI's first-generation multimodal model that can process a wide variety of visual information, including documents, diagrams, charts, screenshots, and photographs. Grok outperforms peers in the new RealWorldQA benchmark measuring real-world spatial understanding. Grok-1.5V will be available soon to early testers and existing Grok users.

Grok-1.5V is similar to other vision models: GPT-4V, Claude 3 Opus, Gemini 1.5 Pro.

Read more: https://x.ai/blog/grok-1.5v

Llama 3 (18/Apr/2024)

We provided a special edition of The Memo to talk about this high-performance open-source model.

The Interesting Stuff

Exclusive: Scaling laws updates (Apr/2024)

Following the release of several new papers this month, I’ve updated my Chinchilla advisory note, expanding the literature references from the original tokens:parameters ratio of Chinchilla 20:1 to new findings from Mosaic at an average of 190:1, Tsinghua at 192:1, the Epoch AI replication at 26:1, and the latest findings from Llama 3 8B at 1,875:1.

In plain English (well, plain-ish for this nerd stuff), to match new findings from Mosaic and Tsinghua in particular, models should now be trained using 112× more data than used for GPT-3, and 6.6× more data than used for Llama 2.

In plainer English, and possibly oversimplified:

For the best performance, and at 2024 median model sizes and training budgets, if a large language model used to read 20M books during training, then it should instead read more than 190M books (or 40M books five times!).
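The ratios above are simple division, so here is a minimal sketch for checking them yourself. The parameter and token counts in `MODELS` are approximate public figures that I am assuming for illustration; they are not taken from the papers cited above, and the function names are hypothetical.

```python
# Tokens-per-parameter ratios for a few well-known models.
# ASSUMPTION: parameter and token counts are approximate public figures,
# used here only to illustrate the arithmetic.
MODELS = {
    "GPT-3 175B": {"parameters": 175e9, "tokens": 300e9},
    "Chinchilla 70B": {"parameters": 70e9, "tokens": 1.4e12},
    "Llama 2 70B": {"parameters": 70e9, "tokens": 2e12},
    "Llama 3 8B": {"parameters": 8e9, "tokens": 15e12},
}

# Mosaic's average tokens:parameters ratio, as quoted in the text.
TARGET_RATIO = 190


def tokens_per_parameter(tokens: float, parameters: float) -> float:
    """Return the tokens:parameters ratio (e.g. 20 means 20:1)."""
    return tokens / parameters


def data_multiplier(tokens: float, parameters: float,
                    target_ratio: float = TARGET_RATIO) -> float:
    """How many times more data a model would need to reach target_ratio."""
    return target_ratio / tokens_per_parameter(tokens, parameters)


def main() -> None:
    for name, model in MODELS.items():
        ratio = tokens_per_parameter(model["tokens"], model["parameters"])
        multiplier = data_multiplier(model["tokens"], model["parameters"])
        print(f"{name}: {ratio:,.1f}:1 (x{multiplier:.1f} data to reach "
              f"{TARGET_RATIO}:1)")


if __name__ == "__main__":
    main()
```

With these assumed inputs, the multipliers for GPT-3 and Llama 2 land in the same ballpark as the 112× and 6.6× figures; small differences come down to rounding of the underlying ratios.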

Read more: https://lifearchitect.ai/chinchilla/

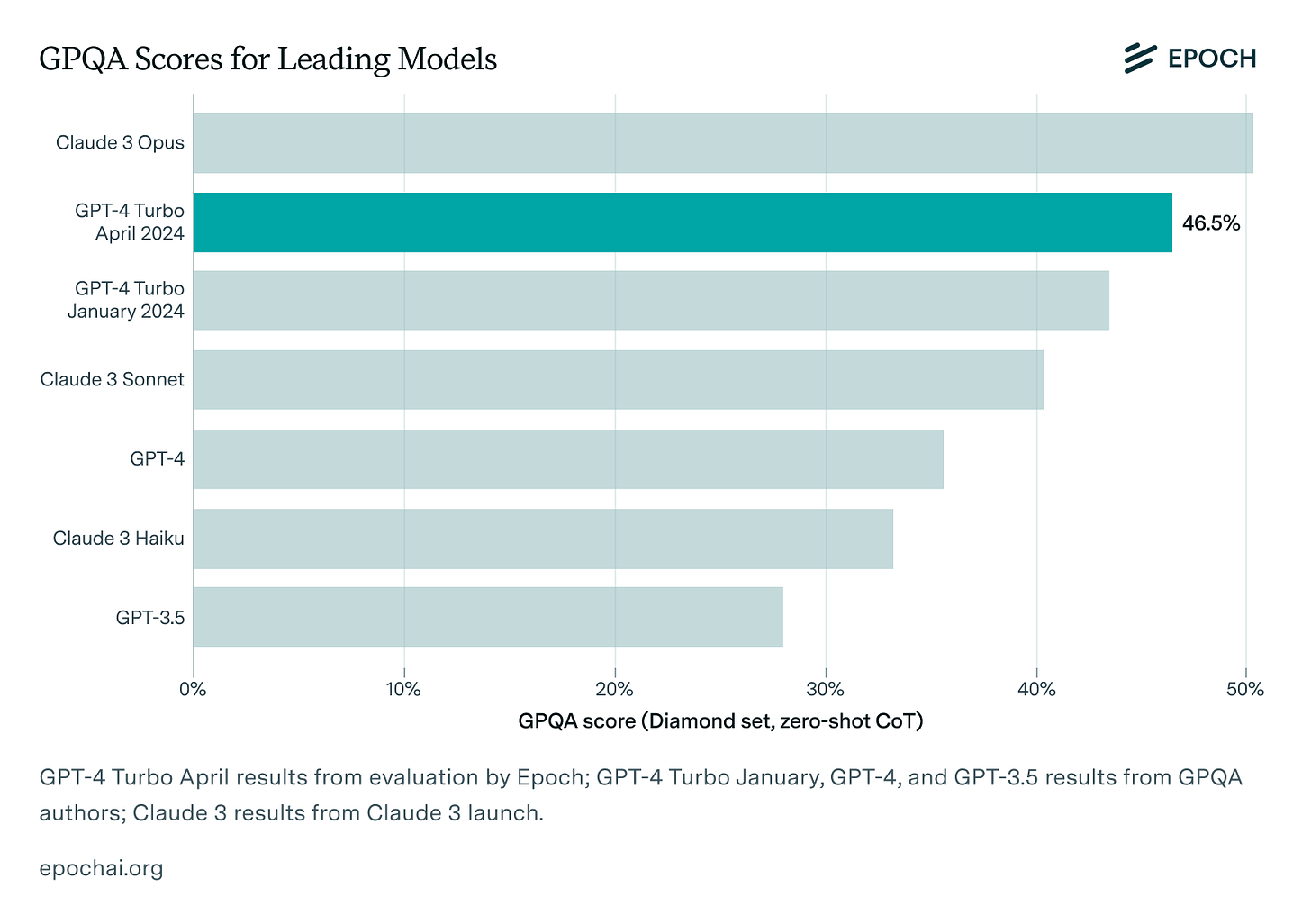

GPQA benchmarks (12/Apr/2024)

My friends at Epoch AI have visualized the major frontier models using GPQA, a strict new ‘Google-proof’ benchmark by NYU, Anthropic, and Cohere.

[Sidenote: GPQA is designed by PhDs, and I’ve estimated that it measures IQ 115-135. The only benchmark above that is my BASIS suite, designed for IQ 170-190 (Nov/2023).]

Interestingly, current frontier models are only just breaching the 50% mark on GPQA in Q1 2024; a good sign of a rigorous test!

Claude 3 Opus GPQA=50.4%

gpt-4-turbo-2024-04-09 GPQA=46.5%

Source via Epoch AI.

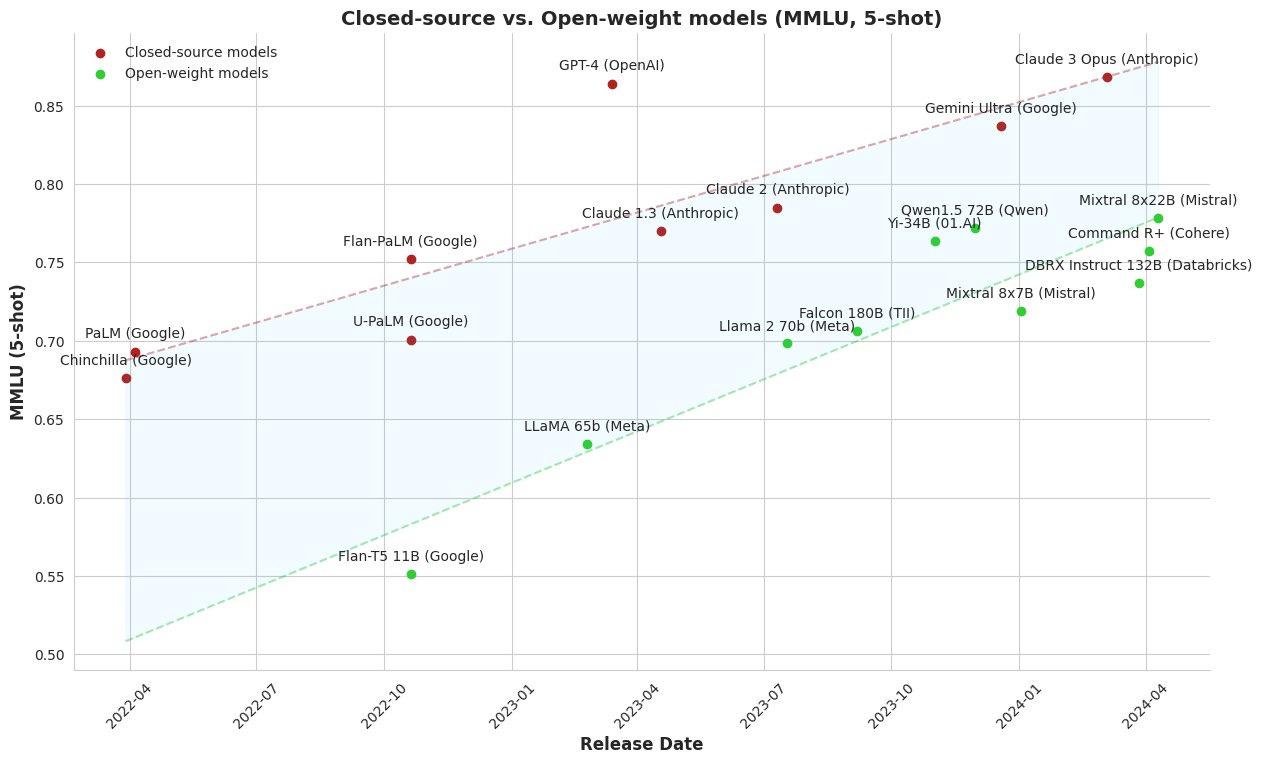

MMLU benchmarks for open vs closed models (13/Apr/2024)

MMLU is an older standard test for AI and it no longer has a high enough ceiling to test frontier models in 2024. Several models (GPT-4, Gemini Ultra, Claude 3 Opus) are now scoring at or close to 90%, and the test suite has a very high error rate (read an analysis of error rates in MMLU from Aug/2023, and watch my video on the ceiling issue from May/2023).

However, this chart was interesting to me because it shows the gap between open and closed models narrowing. Compare this month’s Mixtral 8x22B (Apr/2024) with Claude 2 (Jul/2023). If we were to interpret it generously, in terms of model performance, it looks like the best open models are now only ~9 months behind closed models…

Source via Twitter.

[Sidenote: The MMLU scores for this month’s gpt-4-turbo-2024-04-09 are unclear, but may have improved by 1 percentage point, from 80.48% → 81.48% (12/Apr/2024).]

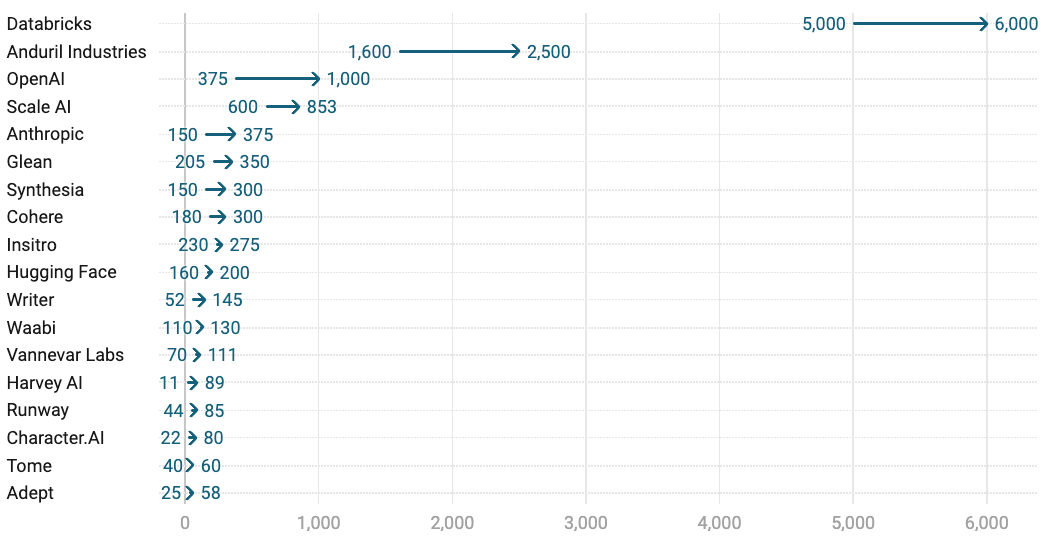

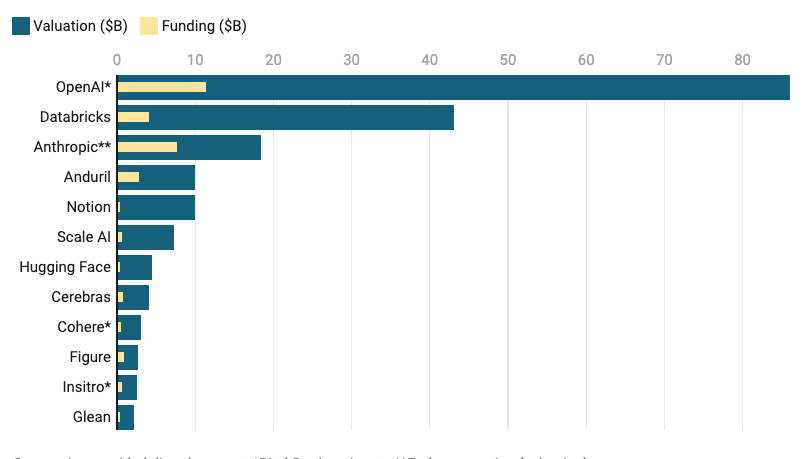

AI 50: The Top Artificial Intelligence Startups (11/Apr/2024)

Forbes’ sixth annual AI 50 list shows AI startups are getting younger and leaner, with a median headcount of 89 employees, down from 150 last year. The list is dominated by 28 new entrants, many of which are selling AI infrastructure or applying AI to real-world use cases. AI is booming, with the AI 50 honorees raising a total of US$34.7B.

Among this year’s debut standouts is Mistral AI, a year-old startup which soared to a $2 billion valuation, per PitchBook, with its play to build a European rival to OpenAI. Named for a wind which blows from the French Alps into the Mediterranean that portends good weather, the Paris-based startup’s founders hail from AI research labs at Google and Meta. Mistral is just one of eight companies on the AI 50 that are headquartered in Europe…

Read summary via Forbes Australia.

Official report via Forbes.

Adobe adds Sora to Premiere Pro (16/Apr/2024)

Adobe, which released a demonstration of Sora being used to generate video in Premiere Pro, described the demonstration as an "experiment" and gave no timeline for when it would become available.

Read more via Reuters.

Watch it (link):

Adobe's AI Firefly Used AI-Generated Images From Rivals for Training (12/Apr/2024)

Adobe's Firefly AI, promoted as a 'commercially safe' alternative trained mainly on licensed images from Adobe Stock, was revealed to have also used AI-generated content from rival services like Midjourney to train its models. This contradicts Adobe's public statements about Firefly being safer due to its training data.

Read more via Bloomberg.

Microsoft releases WizardLM 2 but then pulls it for toxicity testing (16/Apr/2024)

Microsoft used Mixtral 8x22B and other models to fine-tune against their datasets.

The models were then removed from Hugging Face (HF). Official update: https://twitter.com/WizardLM_AI/status/1780101465950105775

Read an analysis by PCMag Australia.

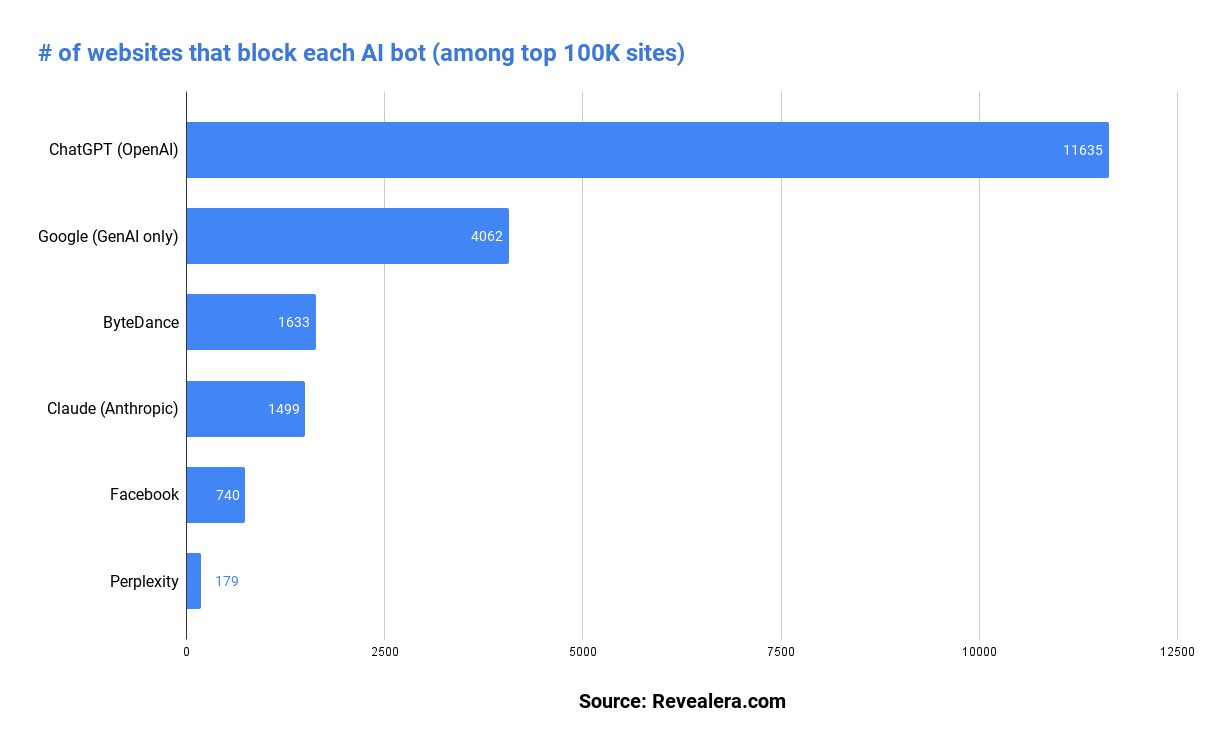

Websites blocking GPT crawlers (9/Apr/2024)

OpenAI’s GPTBot web crawler can be blocked by any website/domain, and is famously blocked by media sites like NYT and BBC.

What’s the bear case against ChatGPT/OpenAI? 11% of the top 100K websites block them from accessing their data. That’s more than their competitors combined including 4% for $GOOG, <1% for $META (who arguably doesn’t need any public data) and almost 0% for Perplexity.

Read source: https://twitter.com/AznWeng/status/1777688628308681000
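For anyone curious how that blocking works in practice, sites opt out via their robots.txt file. Here is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content, the `is_blocked` helper, and the example.com URL are made up for illustration; `GPTBot` is the user-agent token OpenAI publishes for its crawler.

```python
from urllib.robotparser import RobotFileParser

# ASSUMPTION: an illustrative robots.txt that blocks GPTBot everywhere
# and leaves the site open to every other crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_blocked(robots_txt: str, user_agent: str,
               url: str = "https://example.com/") -> bool:
    """Return True if robots_txt disallows user_agent from fetching url."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "Googlebot"):
        status = "blocked" if is_blocked(ROBOTS_TXT, agent) else "allowed"
        print(f"{agent}: {status}")
```

To check a live site instead, `RobotFileParser` also offers `set_url()` and `read()`. Keep in mind that robots.txt is a request to crawlers, not an enforcement mechanism.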

Goldman says it's time to take tech profits and invest elsewhere (10/Apr/2024)

Goldman Sachs Asset Management is taking profits from high-flying tech shares and putting the money into cheaper companies like energy and Japanese shares. The firm believes tech shares will come under pressure as the US economy heads for a soft landing, but there are risks that could change the trajectory.

Read more via Bloomberg.

Stanford AI Index Report 2024 (Apr/2024)

The Stanford Institute for Human-Centered AI (HAI) has released the comprehensive AI Index Report 2024, looking back on AI for Jan-Dec 2023.

Stanford are gonna have to pull their socks up; now that AI is moving so fast, there’s no room for reports that are already about four months out of date on release.

The report tracks AI trends, including technical progress, public perceptions, and the geopolitical dynamics surrounding its development.

Here’s my favorite of the many charts and visualizations in the report (p96):

Read more via Stanford Institute for Human-Centered AI.

Download the report (PDF, 502 pages)

OpenAI opens first Asia office in Tokyo, releases Japanese GPT-4 model (14/Apr/2024)

OpenAI announced the opening of its first Asia office in Tokyo, Japan, led by Tadao Nagasaki as President of OpenAI Japan. The company is providing early access to a GPT-4 custom model optimized for Japanese, offering improved translation and summarization performance at up to 3x the speed of its predecessor.

Read official announce: https://openai.com/blog/introducing-openai-japan

Read analysis by Japanese media: 1, 2

Elon Musk to Raise US$4B for xAI, Challenging ChatGPT (12/Apr/2024)

Elon Musk is in the process of securing up to US$4B in funding for his AI startup xAI, aiming for a post-funding valuation of US$18B. xAI debuted its open-source chatbot Grok in November 2023 as a direct competitor to OpenAI's ChatGPT. Despite its high valuation, xAI remains a small firm with just 10 engineers and 5,000-10,000 GPUs.

Read more via BitDegree.

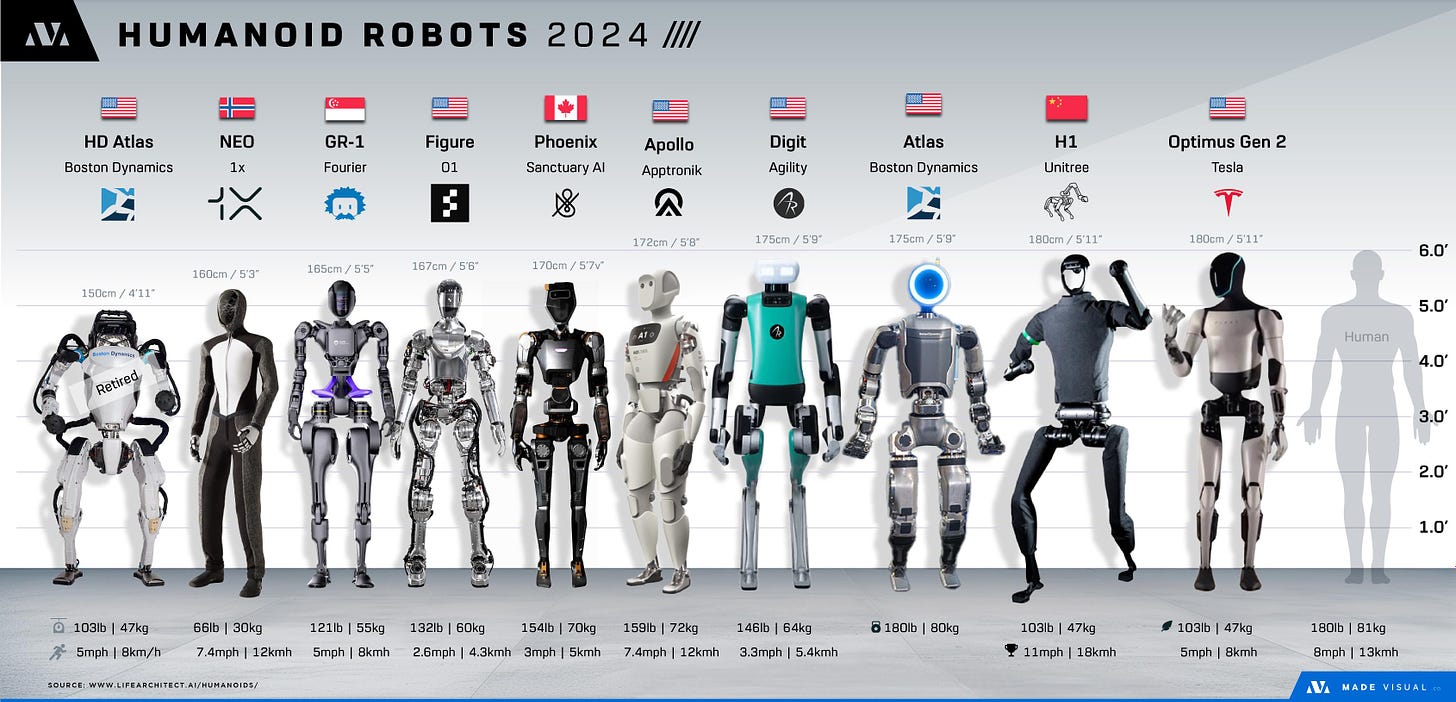

Boston Dynamics Retires [hydraulic] Atlas Humanoid Robot After Nearly 11 Years and launches an electric version (16/Apr/2024)

Boston Dynamics is retiring its Atlas humanoid robot, which was designed for search and rescue missions but gained fame for its impressive dance moves and physical feats. The company shared a final YouTube video showing Atlas' accomplishments and bloopers over the years.

Read a send-off by The Verge.

Watch the retirement video (link).

Watch the new Atlas electric, not hydraulic (link):

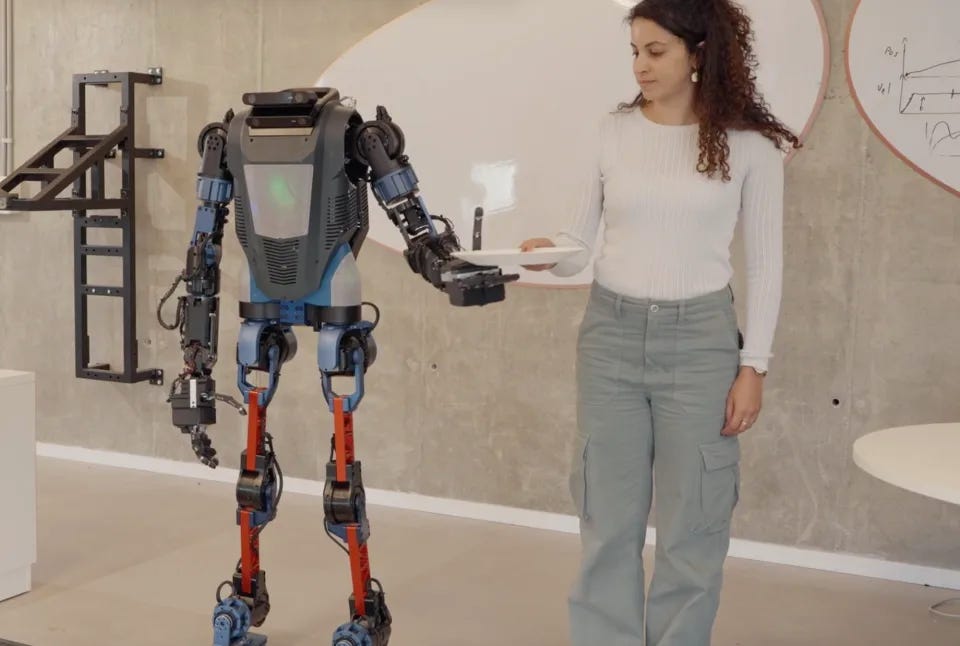

New humanoid: Menteebot (17/Apr/2024)

Mentee Robotics has introduced Menteebot, a human-sized robot packed with AI that can run, walk sideways, turn, and even hold conversations. Users issue commands via natural language and can train it to do new tasks.

Read more via Engadget.

See my Humanoids page: https://lifearchitect.ai/humanoids/

Harrison Schell from madevisual.co revamped my data above into an interesting viz:

The World's First AI Creator Awards (17/Apr/2024)

Miss AI is the world's first beauty pageant for AI-generated models, celebrating creators at the forefront of AI. Contestants will be judged on beauty, tech skill, and social clout for the chance to win the Miss AI crown and over $20k in prizes.

Two of the judges are also AI bots.

Sidenote: I was wondering whether my 2021 Leta AI would have entered such a competition, but I think she is/was far too modest for that kind of thing.

Read more via the World AI Creator Awards website: https://waicas.com/

Turnitin: AI used in 11% of papers since April 2023 (9/Apr/2024)

Turnitin is an older web-based text-matching software that works by comparing electronically submitted papers to known content. I had to use this platform for all my papers during my post-graduate degree at Flinders University in Adelaide. It works well for checking citations and flagging chunks of text drawn from other papers.

However, I’m certain that no platform is able to detect AI-generated text content. There’s just no way to prove something was written by AI. Consider asking GPT-4 to generate a paper on bunnies, then asking Claude 3 Opus to re-write that output in the style of a university student, and then finally having a ‘rare’ model (like Phi-2 or any of the 300+ on my Models Table) rewrite the whole thing to match your writing style. Obviously it would be impossible to prove that text came from a machine instead of a person.

Turnitin tried anyway, and (arrogantly) think they can defy logic:

Approximately 11% of papers having at least 20% AI-generated content… To further highlight the prevalence of AI writing in the classroom, a recent study conducted by Tyton Partners also found that nearly half of the students surveyed have used generative AI tools, such as ChatGPT, monthly, weekly or daily. Additionally, the survey found 75 percent of these students said they will continue to use the technology even if faculty or institutions ban it.

Read the announce by Turnitin.

Read an analysis by GovTech.

Brain Implants Aim to Provide Artificial Vision to the Blind (15/Apr/2024)

Researchers are developing brain implants that could provide a crude form of artificial vision to blind individuals by stimulating the visual cortex. Elon Musk's Neuralink is also working on a device called Blindsight, which Musk claims is 'already working in monkeys'.

Read more via Wired.

Invisibility Cloak: Real World Adversarial Attacks on Object Detectors

Researchers have developed wearable "invisibility cloaks" that use adversarial patterns to evade most common object detectors. The patterns are trained using a gradient descent algorithm to minimize the "objectness scores" produced by detectors for every potential bounding box in an image.

Read more via University of Maryland.
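To make the 'gradient descent on objectness scores' idea concrete, here is a toy sketch in plain Python. It is not the researchers' code: the 'detector' is a stand-in I made up (each candidate box scores a patch with a random linear function passed through a sigmoid), and every name and parameter is hypothetical. It only illustrates the loop of nudging patch pixels to lower the summed objectness across all boxes.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def make_toy_detector(num_boxes: int = 5, size: int = 16, seed: int = 0):
    """ASSUMPTION: a fake detector, one (weights, bias) pair per candidate box."""
    rng = random.Random(seed)
    return [([rng.uniform(-1, 1) for _ in range(size)], rng.uniform(-1, 1))
            for _ in range(num_boxes)]


def objectness_scores(patch, boxes):
    """One objectness score in (0, 1) per candidate box."""
    return [sigmoid(sum(w * p for w, p in zip(weights, patch)) + bias)
            for weights, bias in boxes]


def total_objectness(patch, boxes) -> float:
    """The loss to minimize: summed objectness over every candidate box."""
    return sum(objectness_scores(patch, boxes))


def train_patch(boxes, size: int, steps: int = 200, learning_rate: float = 0.1):
    patch = [0.5] * size  # start from mid-grey "pixels"
    for _ in range(steps):
        scores = objectness_scores(patch, boxes)
        for i in range(size):
            # d/dp_i of sum_k sigmoid(z_k) = sum_k s_k * (1 - s_k) * w_k[i]
            grad = sum(s * (1.0 - s) * weights[i]
                       for s, (weights, _) in zip(scores, boxes))
            # Gradient descent step, clipped to the valid pixel range [0, 1].
            patch[i] = min(1.0, max(0.0, patch[i] - learning_rate * grad))
    return patch


if __name__ == "__main__":
    boxes = make_toy_detector()
    before = total_objectness([0.5] * 16, boxes)
    after = total_objectness(train_patch(boxes, 16), boxes)
    print(f"summed objectness before: {before:.3f}, after: {after:.3f}")
```

A real attack would backpropagate through an actual object detector and account for printing, lighting, and fabric deformation; this sketch skips all of that.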

Paper: Is artificial intelligence the great filter that makes advanced technical civilisations rare in the universe? (Jun/2024)

The rapid development of AI presents a formidable challenge to the survival and longevity of advanced technical civilisations, not only on Earth but potentially throughout the cosmos. The pace at which AI is advancing is without historical parallel, and there is a real possibility that AI could achieve a level of superintelligence within a few decades. The development of ASI is likely to happen well before humankind manages to establish a resilient and enduring multiplanetary presence in our solar system. This disparity in the rate of progress between these two technological frontiers is a pattern that we can expect to be repeated across all emerging technical civilizations.

Read more: https://doi.org/10.1016/j.actaastro.2024.03.052

For a more approachable piece just watch Ridley Scott’s Prometheus (2012).

Amodei on AGI (12/Apr/2024)

Dario Amodei is co-founder and CEO of Anthropic, the AI lab behind Claude.

Ezra/NYT: You don't love the framing of artificial general intelligence, what gets called AGI. Why don't you like it? Why is it not your framework?

Amodei/Anthropic: So [AGI is] actually a term I used to use a lot 10 years ago. And that's because the situation 10 years ago was very different. 10 years ago, everyone was building these very specialized systems, right?

Here's a cat detector. You know, you run it on a picture and it'll tell you whether a cat is in it or not. And so I was a proponent all the way back then of like, no, we should be thinking generally.

Humans are general. The human brain appears to be general. It appears to get a lot of mileage by generalizing.

We should go in that direction. And I think back then, you know, I kind of even imagine that that was like a discrete thing that we would reach at one point.

If you look at a city on the horizon and you're like, you know, ‘We're going to Chicago.’

Once you get to Chicago, you stop talking in terms of Chicago. You're like, ‘Well, what neighborhood am I going to? What street am I on?’

And I feel that way about AGI. We have very general systems now. In some ways, they're better than humans. In some ways, they're worse. There's a number of things they can't do at all. And there's much improvement still to be gotten.

Source: NYT

Policy

Regulation: Stanford AI Index Report 2024 (Apr/2024)

It’s not big news (you already know about this), but AI regulation has exploded in a big way. One of the key takeaways from the 2024 Stanford AI Index Report (above) was just how onerous new AI guidelines are:

The number of AI regulations in the United States sharply increased. The number of AI-related regulations in the U.S. has risen significantly in the past year and over the last five years. In 2023, there were 25 AI-related regulations, up from just one in 2016. Last year alone, the total number of AI-related regulations grew by 56.3%.

Read more via Stanford Institute for Human-Centered AI.

Download the report (PDF, 502 pages)

I come back to the Cato principles as addressed in The Memo edition 13/Nov/2023:

A thorough analysis of existing applicable regulations with consideration of both regulation and deregulation: Evaluating how current regulations apply to AI and balancing regulation with deregulation.

Prevent a patchwork, preemption of state and local laws: Advocating for a unified federal framework to avoid a patchwork of various state and local AI regulations.

Education over regulation: Improved AI and media literacy: Prioritizing public education about AI and media literacy over imposing regulations.

Consider the government’s position towards AI and protection of civil liberties: Scrutinizing the government's stance on AI to ensure the protection of civil liberties.

GPT-4 put this into very simple language for me:

Check current rules for AI: Look at the rules we already have and decide if we need more or less of them for AI.

Avoid mixed rules, have one big rule: Instead of having different AI rules in different places, have one big set of rules for everyone.

Teach people about AI, don't just make rules: Help people learn about AI and how media works, instead of just making lots of rules.

Think about how the government views AI and keeps freedoms safe: Make sure the government's use of AI doesn't take away people's rights and freedoms.

Read more via Cato Institute.

Or for something more visceral, the UAE Minister of State for AI, in The Memo edition 2/Dec/2023:

…the issues policymakers are now facing with regard to AI—such as its impact on job losses, misinformation, and fear of social upheaval—are very similar to the problems faced by the empire’s then leader, Sultan Selim I.

We overregulated a technology, which was the printing press. It was adopted everywhere on Earth. The Middle East banned it for 200 years. The calligraphers came to the sultan and said: ‘We’re going to lose our jobs, do something to protect us’—so, job loss protection, very similar to AI. The religious scholars said ‘people are going to print fake versions of the Quran and corrupt society’—misinformation, second reason.

…it was fear of the unknown that led to this fateful decision.

The top advisors of the sultan said: ‘We actually do not know what this technology is going to do; let us ban it, see what happens to other societies, and then reconsider…’

IOC takes the lead for the Olympic Movement and launches Olympic AI Agenda (19/Apr/2024)

The International Olympic Committee (IOC) is a non-governmental sports organization based in Lausanne, Switzerland. The IOC has launched the 'Olympic AI Agenda' to explore opportunities and manage risks related to AI in sport, with Working Groups focused on key areas.

The IOC is coming dangerously close to getting a Who Moved My Cheese? AI Award!…

The IOC President continued: “At the centre of the Olympic AI Agenda are human beings. This means: the athletes. Because the athletes are the heart of the Olympic Movement. Unlike other sectors of society, we in sport are not confronted with the existential question of whether AI will replace human beings. In sport, the performances will always have to be delivered by the athletes. The 100 metres will always have to be run by an athlete – a human being. Therefore, we can concentrate on the potential of AI to support the athletes.”

The unbridled superciliousness is astounding, and they put it in writing! Actually, let’s add this guy to the cheese room for April 2024, next to Pearl Jam and Dove: https://lifearchitect.ai/cheese/