The Memo - 17/Jul/2023

Anthropic Claude 2, Poe.com's new models, Stable Doodle, and much more!

FOR IMMEDIATE RELEASE: 17/Jul/2023

Prof Geoffrey Hinton (10/Jul/2023)

‘Digital neural networks [AI] may be a much better form of intelligence than biological neural networks [human brains].’

[Alan’s note: Don’t underestimate this quote from the man who is credited as discovering neural networks, a cognitive psychologist and computer scientist, a university professor, Turing award winner, ex-Googler (he left Google in May/2023 to speak out like this), and much more. AI is a purer form of intelligence than what we have in our heads. You can read my thoughts on this in my 2020 article for Mensa, The new irrelevance of intelligence.]

Welcome back to The Memo.

You’re joining full subscribers from Harvard, Google, PepsiCo, NASA, many US Government departments and agencies, Tesla AI, and more…

This is another big edition, covering massive new remuneration for developers now being offered hundreds of millions of dollars each(!) to work for new AI companies, new governance guidelines that affect you, lots of new robots, new LLaMA rumours, and much more. We also look at a fun new text-to-image model just released, and a new application of deep learning for music…

The BIG Stuff

Anthropic Claude 2 (11/Jul/2023)

I’ve estimated this model at 130B parameters trained on 2.5T tokens (20:1). The main highlight is the 200k context window (launching with 100k for now), though even that is not enough for me to deem this a significant release compared to GPT-4 or PaLM 2. The much larger context window, or short-term memory, means that the model can send or receive up to 150,000 words, making it possible to feed it entire books, or have it generate entire books at once.
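The figures above can be sanity-checked with a little arithmetic. This is a minimal sketch, assuming the common rule of thumb of roughly 0.75 English words per token (an approximation that is not an Anthropic figure and varies by tokenizer and text). The parameter and token counts are the estimates given above, not official numbers.

```python
# Back-of-envelope checks for the Claude 2 estimates above.
# WORDS_PER_TOKEN is an assumed rule of thumb, not an official figure.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int) -> int:
    """Approximate English word count for a given token budget."""
    return round(tokens * WORDS_PER_TOKEN)


def tokens_per_parameter(train_tokens: float, parameters: float) -> float:
    """Training tokens seen per model parameter."""
    return train_tokens / parameters


full_window_words = tokens_to_words(200_000)    # the full 200k context window
launch_window_words = tokens_to_words(100_000)  # the 100k window at launch
ratio = tokens_per_parameter(2.5e12, 130e9)     # estimated 2.5T tokens, 130B parameters

print(f"200k tokens is roughly {full_window_words:,} words")
print(f"100k tokens is roughly {launch_window_words:,} words")
print(f"Tokens per parameter: {ratio:.1f} (rounded to 20:1 above)")
```

Under that assumption, the 150,000-word figure corresponds to the full 200k window; the 100k launch window would hold about half as much.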

I should mention a couple of excellent use cases:

One of my consulting clients used Claude to develop their entire ISO 9001 system & Quality Management System. The process took just hours to get them up and running, rather than months (or years).

Your friendly reviewer of The Memo, Jo, used Claude 2 to instantly summarize a 6000-word YouTube transcript. (Hi, Jo!)

Claude 2’s release also managed to add another percentage point to my conservative countdown to AGI, due to its increased HHH achievements (helpful, honest and harmless). On the TruthfulQA benchmark, it scored a new state of the art: Claude 2=0.69 vs GPT-4=0.60.

Interestingly, the Claude 2 model card (note: we’ve gone from ‘paper’ to ‘technical note’ to the generic junk that is a ‘model card’) avoids mentioning both ‘OpenAI’ and the four-month-old ‘GPT-4’. Keeping in mind that Anthropic was founded by ex-OpenAI staff who left that company in 2021 after the GPT-3 release, I wonder if there is a fair amount of distancing between the two organizations, if not outright animosity.

In online and offline discussions, I’ve noticed a general consensus that Claude 2 is better than GPT-3.5 via ChatGPT. Claude 2 has also been built into Jasper.ai, a company first created around OpenAI’s GPT-3.

Read the announcement: https://www.anthropic.com/index/claude-2

Read Anthropic’s prompt suggestions: https://docs.anthropic.com/claude/docs/introduction-to-prompt-design

US & UK readers, try the official interface (free, no login): https://claude.ai/

The rest of the world can try it on Poe.com: https://poe.com/Claude-2-100k

Poe.com adds Google PaLM 2, Claude-2-100k, and GPT-4 32k (11/Jul/2023)

Poe.com is still my ‘daily driver,’ via web (and sometimes phone). It now allows access to the latest and greatest models. Even for paid subscribers of Poe.com, the models are still subject to rate limiting. The PaLM 2 model via Poe is actually the medium-sized model in that family, bison-001. The full PaLM 2 family is Gecko, Otter, Bison, and Unicorn. Unicorn is said to be 340B parameters.

The full list of Poe’s available models as of 11/Jul/2023 is:

Try it: https://poe.com/

There is a new standalone Mac app as well (direct link to dmg download).

The Interesting Stuff

Interviews with Google DeepMind CEO about Gemini (11/Jul/2023)

What I think is going to happen in the next era of systems — and we’re working on our own systems called Gemini — is that I think there’s going to be a combination of the two things [general and specialized]. So we’ll have this increasingly more powerful general system that you basically interact with through language but has other capabilities, general capabilities, like math and coding, and perhaps some reasoning and planning, eventually, in the next generations of these systems… There should be specialized A.I. systems that learn how to do those things — AlphaGo, AlphaZero, AlphaFold. And actually, the general system can call those specialized A.I.s as tools.

'Gemini... is our next-generation multimodal large models — very, very exciting work going on there, combining all the best ideas from across both world-class research groups [DeepMind and Google AI]. It’s pretty impressive to see... Today’s chatbots will look trivial by comparison to I think what’s coming in the next few years.'

Read my summary of Google DeepMind Gemini: https://lifearchitect.ai/gemini/

Google DeepMind + Princeton announce Robots That Ask For Help: Uncertainty Alignment for Large Language Model Planners (4/Jul/2023)

We present KnowNo, which is a framework for measuring and aligning the uncertainty of LLM-based planners such that they know when they don't know and ask for help when needed… can be used with LLMs out of the box without model-finetuning, and suggests a promising lightweight approach to modeling uncertainty that can complement and scale with the growing capabilities of foundation models.

View the project page (with video): https://robot-help.github.io/

Read the paper: https://arxiv.org/abs/2307.01928

More robots (Jul/2023)

Do we need an entire section of The Memo dedicated to robots soon? Maybe…

The New Mexico school district started a pilot program in mid-June with the robot, which patrols the multi-building campus grounds 24 hours a day, seven days a week… Using artificial intelligence, the robot in Santa Fe learns the school’s normal patterns of activity and detects individuals who are on campus after hours or are displaying aggressive behavior, said Andy Sanchez, who manages sales for Team 1st Technologies, the robot’s distributor in North America.

Read more via WSJ: https://archive.md/S37n6

Fourier GR-1 (13/Jul/2023)

Once again, China is quietly making headway with AI.

The GR-1 is a pre-programmed robot (not yet backed by a large language model). The original (cached) article by AP/Reuters said that ‘The company behind GR-1 plans to release 100 units by the end of 2023’. This was quickly updated to read ‘Fourier Intelligence hopes a working prototype can be ready in two to three years [2025-2026]’.

Standing 1.64 m tall and weighing 55 kg, GR-1 can walk, avoid obstacles and perform simple tasks like holding bottles…

GR-1 was presented at the World AI Conference in Shanghai along with Tesla’s humanoid robot prototype Optimus and other AI robots from Chinese firms.

Among those was X20, a quadrupedal robot developed to replace humans for doing dangerous tasks such as toxic gas detection.

View my new shared sheet tab of current LLM-based robots.

Meta AI’s LLaMA v2 expected shortly (14/Jul/2023)

Meta to release commercial AI model in effort to catch rivals. Microsoft-backed OpenAI and Google are surging ahead in Silicon Valley development race. Meta is poised to release a commercial version of its artificial intelligence model, allowing start-ups and businesses to build custom software on top of the technology.

Where LLaMA 1 was 65B parameters, I expect that the coming release of LLaMA 2 will sit within the 70B-130B parameter range, to make it viable for inference on consumer-grade hardware.
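To get a feel for what ‘viable for inference on consumer-grade hardware’ might mean across that parameter range, here is a rough sketch of the memory needed just to hold the model weights. The bit widths (16, 8 and 4 bits per weight) are my illustrative assumptions about common storage precisions, not anything announced by Meta, and the estimate ignores activations, the attention cache and other runtime overhead.

```python
# Weights-only memory estimate; real inference needs additional headroom.
# The bit widths below are illustrative assumptions, not Meta specifications.
BYTES_PER_GB = 1e9  # decimal gigabytes


def weight_memory_gb(parameters: float, bits_per_weight: int) -> float:
    """Memory needed to store the weights alone, in decimal GB."""
    return parameters * bits_per_weight / 8 / BYTES_PER_GB


for parameters in (65e9, 70e9, 130e9):
    for bits in (16, 8, 4):
        gigabytes = weight_memory_gb(parameters, bits)
        print(f"{parameters / 1e9:.0f}B parameters at {bits}-bit: {gigabytes:.1f} GB")
```

Comparing these totals against the memory available on a given machine gives a first indication of which end of the range is realistic for it.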

Read more via FT: https://archive.is/WS877

MedPaLM 2 brought into practice (Jul/2023)

Google opened an office in 2021 in Rochester, Minn.—near the Mayo Clinic’s headquarters—to work on projects using the hospital’s data. The hospital said in June that it would use Google AI models to build a new internal search tool for querying patient records.

Both Google and Microsoft also have expressed interest in a bigger ambition: building a virtual assistant that answers medical questions from patients around the world, particularly in areas with limited resources…

Read more via WSJ: https://archive.md/VHXOg

Read a related exploration by McKinsey (10/Jul/2023).

Read an interesting exploration of LLMs in the biomedical domain (Jun/2023).

Google Bard updates (13/Jul/2023)

Google has updated their Bard platform, a ChatGPT competitor which uses PaLM 2 as the base model. The new functionality includes access to Google Lens, and the results are stunning.

View the updates: https://bard.google.com/updates

Read my summary of Bard: https://lifearchitect.ai/bard/

Try it (login with Google): https://bard.google.com/

OpenAI licenses Associated Press News data + extension with Shutterstock (14/Jul/2023)

The deal marks one of the first official news-sharing agreements made between a major U.S. news company and an artificial intelligence firm… OpenAI will license some of the AP’s text archive dating back to 1985 to help train its artificial intelligence algorithms.

Read more via Axios (exclusive).

This follows from OpenAI’s recent 6-year license extension with Shutterstock (11/Jul/2023), an image database company claiming to be the ‘largest subscription-based stock photo agency in the world’ with 200 million images and 10 million videos. The images have been and will continue to be used to train DALL-E and other models.

New GPT-4 architecture leak (11/Jul/2023)

The latest GPT-4 architecture leak made the rounds on social media and mass media alike. The details were very similar to those from Jun/2023, with the notable addition of a dataset size: GPT-4 trained on 13T tokens (including repeating tokens).

Read full details of GPT-4 and latest leak: https://lifearchitect.ai/gpt-4

xAI launched (12/Jul/2023)

We already featured this news three months ago in The Memo 20/Apr/2023 edition, but the latest update is noisy in the press, so here it is again. Elon Musk has announced a new company called xAI or x.AI.

Exclusive: Elon may have launched xAI on 12/Jul/2023 because 12 + 07 + 23 = 42, which Douglas Adams called ‘the answer to the ultimate question of life, the universe, and everything’. Elon has a history of naming things after science fiction and pop references, and deciding whether or not this is lame I’ll leave as an exercise for the reader.

It should be noted that Elon was one of the primary signatories to the AI pause letter (22/Mar/2023). I gave that letter no heed, and next to no space in The Memo 29/Mar/2023 edition, as it was a blatant attempt at publicity and distraction masquerading as fear-mongering (at best). It is regrettable to see cults of personality running companies that will create our superintelligence. But let’s see how it goes.

The company now features 12 founding engineers, some of whom helped with contributions to the Adam optimizer and GPT-4. Here’s the list excluding Elon:

Igor Babuschkin: ex-DeepMind, OpenAI, CERN.

Manuel Kroiss: ex-DeepMind, Google.

Dr Yuhuai (Tony) Wu: ex-Google, Stanford, University of Toronto, ex-intern at DeepMind, OpenAI.

Dr Christian Szegedy: ex-Google (over a decade).

Prof Jimmy Ba: ex-University of Toronto, advised by Prof Geoffrey Hinton.

Toby Pohlen: ex-Google, Microsoft.

Ross Nordeen: ex-Tesla AI.

Kyle Kosic: ex-OpenAI, OnScale, Wells Fargo.

Greg Yang: ex-Microsoft Research, Harvard.

Dr Guodong Zhang: ex-DeepMind, ex-intern Google Brain, Microsoft Research.

Dr Zihang Dai: ex-Google, Tsinghua.

Dr Dan Hendrycks (advisor): UC Berkeley, Center for AI Safety, ex-intern DeepMind.

The salaries and signing bonuses are heady. Inside sources have claimed Elon offered 9-figure deals (Yahoo, 15/Jul/2023):

[xAI is estimated to be] worth $20 billion — a valuation Musk came up with — before it was born, then each 1% offer in stock options was like a $200 million signing bonus.

Compare this to starting salaries (cash, not equity) at OpenAI in 2016 (WSJ, 19/Apr/2018):

Dr Ilya Sutskever: $1.9 million per year.

Dr Ian Goodfellow: more than $800,000 per year.
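The scale of the gap is easier to see as arithmetic. This is a minimal sketch using only the figures quoted above. It treats the stock options at their claimed paper value and ignores vesting, dilution and strike price, so it is an illustration rather than a real compensation comparison.

```python
# Figures as quoted above; the comparison itself is illustrative only.
XAI_VALUATION_USD = 20_000_000_000      # Musk's own valuation, per the Yahoo report
SUTSKEVER_2016_SALARY_USD = 1_900_000   # per the WSJ report


def equity_value(valuation_usd: int, percent: int) -> int:
    """Paper value of a whole-percent equity stake at a given valuation."""
    return valuation_usd * percent // 100


one_percent = equity_value(XAI_VALUATION_USD, 1)
years_of_salary = one_percent / SUTSKEVER_2016_SALARY_USD

print(f"1% of xAI at the claimed valuation: ${one_percent:,}")
print(f"Equivalent years of the 2016 top salary: {years_of_salary:.0f}")
```

On paper, a 1% offer works out to about a century of the highest 2016 OpenAI salary listed above.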

Official site: https://x.ai/

Bloomberg: https://archive.md/98SvF

AI Startup Hugging Face Is Raising VC Funds At $4 Billion (13/Jul/2023)

The numbers being thrown around Northern California are outrageous. Keep in mind that in 2016 (before the discovery of Transformers, and obviously well before post-2020 AI) Hugging Face was a tiny startup working on a chatbot app for teenagers. It is now the largest AI model platform in the world.

Its revenue run rate has spiked this year and now sits at around $30 million to $50 million, three sources said — with one noting that it had more than tripled compared to the start of the year…

Per a Forbes profile in 2022, Bloomberg, Pfizer and Roche were early Hugging Face customers…

In June, Inflection AI raised $1.3 billion, in part to manage its Microsoft compute and Nvidia hardware costs; the same month, foundation model rival Cohere raised $270 million. Anthropic, maker of the recently-released ChatGPT rival Claude 2, raised $450 million in May. OpenAI closed its own $300 million share sale in April, then raised $175 million for a fund to back other startups a month later, per a filing. Adept became a unicorn after announcing a $350 million fundraise in March.

At a $4 billion valuation, Hugging Face would vault to one of the category’s highest-valued companies, matching Inflection AI and just behind Anthropic, reported to have reached closer to $5 billion. OpenAI remains the giant in the fast-growing category, Google, Meta and infrastructure companies like Databricks excluded; while its ownership and valuation structure is complex, the company’s previous financings implied a price tag in the $27 billion to $29 billion range.

Speaking for another Forbes story on the breakout moment for generative AI tools, Delangue predicted, “I think there’s potential for multiple $100 billion companies.”

Read more via Forbes: https://archive.md/7C1l2

Read an excellent overview of the Hugging Face team by Peabody Award-winning writer Sam Eifling for Sequoia Capital (29/Jun/2023): https://www.sequoiacap.com/article/clem-delangue-spotlight/

Policy

NSA working on new AI ‘roadmap’ as intel agencies grapple with recent advances (14/Jul/2023)

And despite intelligence agencies’ propensity for analyzing trends and forecasting future events, officials at the Intelligence and National Security Summit in Fort Washington, Maryland, this week largely agreed that the AI developments over the past nine months have been surprising.

George Barnes, deputy director of the National Security Agency [NSA], called it a “big acceleration” in AI since last November, when OpenAI publicly launched ChatGPT.

“What we all have to do is figure out how to harness it for good, and protect it from bad,” Barnes said during a July 13 panel discussion with fellow leaders of the “big six” intelligence agencies…

Read more via Federal News Network (exclusive). (Archive)

Let me put this in plain English: any agency, any business, any person who thinks that AI acceleration started recently with ChatGPT is very lost. And this can’t just be about ChatGPT’s shiny interface and explosive popularity. Even OpenAI’s CEO told the New York Times that ‘ChatGPT is a horrible product’ (10/Feb/2023).

Today’s base AI technology is now more than three years old, and in many ways the capabilities of raw language models like GPT-3 175B three years ago were far greater than today’s current batch of censored and ‘aligned’ models.

I find it remarkable to see all this sudden hand-wringing about AI models that are actually dumber than they were several years ago, and particularly unnerving that it is usually coming from people who should be much more informed.

See also:

My ‘fire alarm’ press releases from two years ago.

The Leta AI experiments from more than two years ago (video playlist).

EleutherAI’s GPT-NeoX-20B paper and warnings in Section 6 ‘Broader Impacts’ from more than a year ago (my backup of the original draft paper; Section 6 was removed from the final version).

Google DeepMind/OpenAI/Oxford/Stanford/more: Forming a world government body for AI oversight (10/Jul/2023)

Researchers from Google DeepMind and eight other organizations have proposed a massive intergovernmental body to oversee AI. The paper is called ‘International Institutions for Advanced AI’.

An intergovernmental Commission on Frontier AI [defined in the next paper below as being highly capable foundation models that could possess dangerous capabilities sufficient to pose severe risks to public safety] could establish a scientific position on opportunities and risks from advanced AI and how they may be managed. In doing so, it would increase public awareness and understanding of AI prospects and issues, contribute to a scientifically informed account of AI use and risk mitigation, and be a source of expertise for policymakers.

This is an excellent and necessary step for our future. Given the well-known ‘toothless’ nature of the United Nations, a completely new intergovernmental body with an effective structure is the only way I can see international (or indeed, universal) AI visibility and governance being addressed.

Read the paper: https://arxiv.org/abs/2307.04699

Read my report to the UN from Jan/2022: https://lifearchitect.ai/un/

Frontier AI Regulation: Managing Emerging Risks to Public Safety (6/Jul/2023)

It seems that everyone wants to add their own twist to naming of post-2020 AI models! A large coalition of 24 experts from major universities and labs presents the latest name, ‘frontier’ models (not ‘foundation’ models, not ‘large language’ models, not ‘enormous language’ models, and not ‘universal’ models).

The paper is hefty—31 pages plus references—and seems to be a seminal work in the field of AI and policy.

…"frontier AI" models [are] highly capable foundation models that could possess dangerous capabilities sufficient to pose severe risks to public safety. Frontier AI models pose a distinct regulatory challenge: dangerous capabilities can arise unexpectedly; it is difficult to robustly prevent a deployed model from being misused; and, it is difficult to stop a model's capabilities from proliferating broadly.

To address these challenges, at least three building blocks for the regulation of frontier models are needed: (1) standard-setting processes to identify appropriate requirements for frontier AI developers, (2) registration and reporting requirements to provide regulators with visibility into frontier AI development processes, and (3) mechanisms to ensure compliance with safety standards for the development and deployment of frontier AI models.

Congratulations to Prof Anton Korinek and the various collaborators from around the world.

Read the paper: https://arxiv.org/abs/2307.03718

AI Safety and the Age of Dislightenment: Model licensing & surveillance will likely be counterproductive by concentrating power in unsustainable ways (10/Jul/2023)

My Australian colleague Prof Jeremy Howard has penned a rebuttal to the above paper. He has also made up his own word, but this time it has nothing to do with the technology and more to do with the outcome. ‘Dislightenment’ remains undefined, except for his note that it refers to ‘rolling back the gains made from the Age of Enlightenment’. (With 15 reviewers, I’m sure someone could have helped him find an existing word.)

Look out for my appearance with Jeremy on ABC Catalyst soon; I’ll be posting a link in an upcoming edition of The Memo.

Read it: https://www.fast.ai/posts/2023-11-07-dislightenment.html

Toys to Play With

Stable Doodle (14/Jul/2023)

This is so much fun! Transform your doodles into real images in seconds.

Try it (free, no login): https://clipdrop.co/stable-doodle

Unloop (16/Jul/2023)

Unloop is a co-creative looper that uses generative modeling (VampNet) to not repeat itself.

View the repo: https://github.com/hugofloresgarcia/unloop

Watch the video (link).

Neural Frames (Jul/2023)

The Memo reader Nicolai has built a text-to-video platform called neural frames. It enables musicians, artists, and other folks interested in this technology to create Stable Diffusion-based videos with lots of control over the outcome.

Try it: https://www.neuralframes.com/?q=hellofriends

20% code is: [removed]

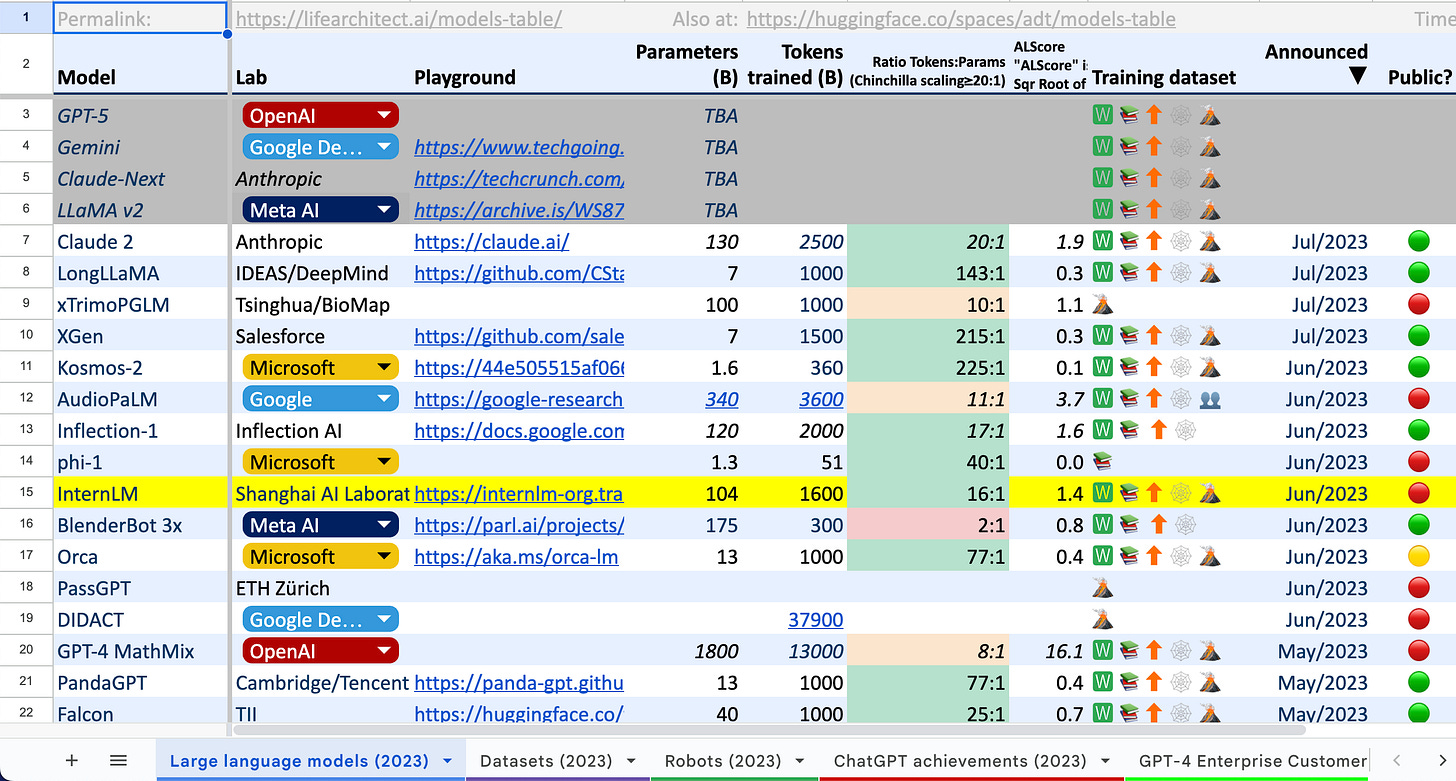

Flashback: Models table (2021)

The LifeArchitect.ai models table is the most comprehensive view of all large language model highlights to date. It is kept in line with major model releases as they are announced, and includes links out to demo environments and papers. I started this for my own benefit in 2021, and it has grown to around 146 models today (Jul/2023). The sheet is in constant use by developers and experts worldwide.

Permanent link: https://lifearchitect.ai/models-table/

Next

I’m just finishing up writing an exhilarating paper on AI + our evolution. Beyond the hype of AI technology and overregulation is this important path to our own upgrade. For the first time, I used an AI model, Google’s PaLM 2, as a co-author for some sections. That’s right, every edition of The Memo, every paper, and every article to date has been written by hand, but it’s time to integrate AI into some of my own writing! As a full member of The Memo, you’ll receive the draft shortly.

All my very best,

Alan

LifeArchitect.ai