The Memo - 16/Oct/2024

Dario's optimistic essay, INTELLECT–1 10B, B200 @ 101dB, new LLM security report, and much more!

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 16/Oct/2024

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 81%

Contents

The BIG Stuff (Dario's optimistic essay, INTELLECT–1, Bubeck moves to OpenAI…)

The Interesting Stuff (OpenAI news, BioNTech, MI325X, NVIDIA B200 @ 101dB…)

Policy (OpenAI security analyses, risk management, new LLM jailbreak report…)

Toys to Play With (New text-to-image models and galleries, AI nature apps…)

Flashback (Sydney…)

Next (Two big OpenAI events in about 48 hours…)

The BIG Stuff

INTELLECT–1: Launching the first decentralized training of a 10B parameter model (11/Oct/2024)

Since 2020, I’ve been asked when we’re going to be able to contribute to the training of large language models through distributed computing, similar to how the Folding@home project has let people donate their home computer's processing power to protein folding research since 2000.

Prime Intellect has announced the training of INTELLECT-1, marking the first decentralized training of a 10-billion-parameter model. This initiative invites global contributors to participate in open-source AGI development. The project builds on Prime Intellect's open-source implementation of the Distributed Low-Communication (DiLoCo) method, scaled from 1B to 10B parameters. The decentralized training aims to ensure AI remains open-source, transparent, and accessible, preventing control by centralized entities and accelerating human progress.
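For readers who want a feel for what 'low-communication' means, here is a toy sketch in plain Python. It is not Prime Intellect's code: the scalar 'model', the quadratic loss, the function names, and the use of plain SGD for both the inner and outer updates are all my assumptions for illustration. The only idea it tries to capture is that each worker trains locally for many steps and the group synchronizes once per round rather than once per step.

```python
def local_steps(theta, target, inner_lr, num_steps):
    """Run plain SGD on the toy loss 0.5 * (theta - target) ** 2."""
    for _ in range(num_steps):
        grad = theta - target  # derivative of the toy loss
        theta -= inner_lr * grad
    return theta


def diloco_toy(targets, rounds=50, inner_steps=10, inner_lr=0.1, outer_lr=0.7):
    """Toy low-communication loop: workers sync once per round, not once per step.

    Each entry in `targets` stands in for one worker's private data shard
    (a hypothetical setup chosen so the answer is easy to check).
    """
    theta = 0.0  # shared (global) parameter
    for _ in range(rounds):
        # Each worker starts from the shared parameter and trains locally.
        local = [local_steps(theta, t, inner_lr, inner_steps) for t in targets]
        # The only communication: average the pseudo-gradients (global minus local).
        pseudo_grad = sum(theta - w for w in local) / len(local)
        # Outer update, plain SGD here (an assumption made for brevity).
        theta -= outer_lr * pseudo_grad
    return theta


if __name__ == "__main__":
    # Three workers whose private optima are 1, 2 and 6.
    print(diloco_toy([1.0, 2.0, 6.0]))
```

In this toy setup the shared parameter settles at the average of the workers' targets, even though the workers only exchange one number per round.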

Read more via Prime Intellect.

Contribute your own compute: https://app.primeintellect.ai/intelligence

See it on the Models Table: https://lifearchitect.ai/models-table/

Dr Dario Amodei: Machines of loving grace (1/Oct/2024)

Dr Dario Amodei, CEO of Anthropic, discusses the transformative potential of AI, asserting that its upsides are often underestimated. He envisions a future where AI could lead to radical advances in biology, neuroscience, economic development, peace, and governance. The essay is refreshingly optimistic, about 15,000 words, and takes about an hour to read in full. Here are the key points, with assistance from Claude 3.5 Sonnet (by his AI lab, of course!) on Poe.com:

AI's positive potential: "I think that most people are underestimating just how radical the upside of AI could be..."

Powerful AI definition: "By powerful AI, I have in mind an AI model... [that] is smarter than a Nobel Prize winner across most relevant fields – biology, programming, math, engineering, writing, etc."

AI impact framework: "We should be talking about the marginal returns to intelligence, and trying to figure out what the other factors are that are complementary to intelligence and that become limiting factors when intelligence is very high."

Neuroscience and mental health: "I believe that within 5-10 years of the arrival of powerful AI we will have a scientific understanding of mental health and happiness that is at least as good as our current understanding of physical health."

Peace and governance: "AI could help reduce conflicts by improving communication, mediating disputes, and providing unbiased analysis of complex geopolitical situations."

Work and meaning: "In the long run, I believe we're likely to see a transition to what's sometimes called a 'post-scarcity economy'... where the basic needs of all humans are met without requiring labor."

Timeline: "I think [AGI] could come as early as 2026, though there are also ways it could take much longer." (This quote was added to my AGI countdown.)

Read the essay: Dario Amodei — Machines of Loving Grace

Or listen to an AI-generated discussion via NotebookLM (link):

Dario’s Oct/2024 essay expands on a few key points from my Jul/2022 paper: ‘Roadmap: AI’s next big steps in the world’: https://lifearchitect.ai/roadmap/

Microsoft artificial intelligence VP Sebastien Bubeck to join OpenAI (14/Oct/2024)

Dr Sebastien Bubeck (wiki), a vice president at Microsoft specializing in artificial intelligence, is set to join OpenAI. Bubeck has been instrumental in advancing Microsoft's work on small language models like phi (trained on synthetic data), which aim to achieve high efficiency and effectiveness similar to larger AI systems. At OpenAI, he will contribute to the pursuit of artificial general intelligence (AGI).

Dr Sebastien was one of the first to outline the size of GPT-4, and we quoted his video a year ago in The Memo edition 17/Sep/2023.

There is some intelligence in this [GPT-4] system… Beware of the trillion-dimensional space. It's something which is very, very hard for us as human beings to grasp. There is a lot that you can do with a trillion parameters… (7/Apr/2023)

Read more via Fortune.

I expect Dr Sebastien to bring significant synthetic data expertise to the training of future OpenAI models. I’ve documented synthetic data use from Microsoft, Hugging Face, and OpenAI in my GPT-5 dataset paper: https://lifearchitect.ai/whats-in-gpt-5/

The Interesting Stuff

Full subscribers are invited to join the regular roundtable. With all the talk of power for GPUs, I expect participants to be across China’s new offshore wind turbines: each 26-megawatt behemoth has 310-metre or 1017-foot or 0.192-mile diameter rotors (‘The wind turbine’s hub center is 185 metres high, equivalent to a 63-story residential building, while the designed rotor diameter exceeds 310 metres, with a swept area equivalent to 10.5 standard football fields’), and they’re deployed in farms! (Bloomberg and Chinese source 14/Oct/2024). I bet it’s a frightening sight to behold: each turbine’s full rotor span is about as big as a country town, and each can output enough to power a city…
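The turbine figures above are easy to sanity-check. This is a rough sketch only: the 310-metre diameter comes from the quote, while the 105 m × 68 m football pitch is my assumption for what a 'standard football field' means.

```python
import math

ROTOR_DIAMETER_M = 310.0        # from the quoted spec ("exceeds 310 metres")
METRES_PER_FOOT = 0.3048        # exact, by definition
METRES_PER_MILE = 1609.344      # exact, by definition
FOOTBALL_FIELD_M2 = 105 * 68    # assumption: a 105 m x 68 m pitch


def swept_area_m2(diameter_m):
    """Area of the circle traced by the rotor tips."""
    return math.pi * (diameter_m / 2) ** 2


diameter_ft = ROTOR_DIAMETER_M / METRES_PER_FOOT
diameter_mi = ROTOR_DIAMETER_M / METRES_PER_MILE
area_m2 = swept_area_m2(ROTOR_DIAMETER_M)
fields = area_m2 / FOOTBALL_FIELD_M2

if __name__ == "__main__":
    print(f"{diameter_ft:.0f} ft, {diameter_mi:.3f} mi")
    print(f"{area_m2:,.0f} m^2, about {fields:.1f} pitches")
```

Under those assumptions the swept area comes out at roughly 75,000 square metres, or about 10.6 pitches, which lines up with the quoted 10.5 football fields.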

Did you know? The Memo features in Apple’s recent AI paper, has been discussed on Joe Rogan’s podcast, and a trusted source says it is used by top brass at the White House. Across over 100 editions, The Memo continues to be the #1 AI advisory, informing 10,000+ full subscribers including Microsoft, Google, and Meta AI. Full subscribers have complete access to the entire 4,000 words of this edition!

Exclusive: The amateurishness of OpenAI (Oct/2024)

I’ve been thinking a bit about how incompetent OpenAI has looked over the last few years. It’s a long list of ineptitude for a company that boasts a strong lineup of experienced staff who should know a lot better.

The years wasted researching robotics, only to completely dissolve the robotics group. Watch their fantastic 2019 OpenAI Rubik’s cube video.

The absolutely insane model naming, with next to no consideration for sequence/succession or human-readable names, even though these models are now in use by the majority of the working adult population in the Western world (250M of 440M people). We were originally told ‘ChatGPT is a new model,’ but now ChatGPT has become the platform that provides access to models with names like GPT-4o (the ‘o’ is for ‘omni’), and o1 (the ‘o’ is for ‘OpenAI’). Though I suppose that’s an upgrade from calling the GPT-2 model ‘Snuffleupagus’ (26/Oct/2019). And good luck distinguishing 0125 from 0314 or 0613 from 1106, unless an analyst puts it in plain English…

The cult-like atmosphere, which intensified around this time last year (Nov/2023):

OpenAI has faced a pressing need to enhance its corporate structure. The company would benefit from empowering individuals to lead critical departments such as communications, public relations, and potentially employee retention. These leaders should have the authority to implement necessary changes in their respective areas.

In our current reality, I expect that instead of relying on people, OpenAI (and soon the rest of the world) will continue using proto-AGI systems like o1 (the full multimodal version) for both strategy and operations. OpenAI has hinted at successfully using existing systems for self-improving models, and two years ago used GPT-4 to help write the GPT-4 paper, so I’m sure there are big things happening inside the OpenAI lab already!

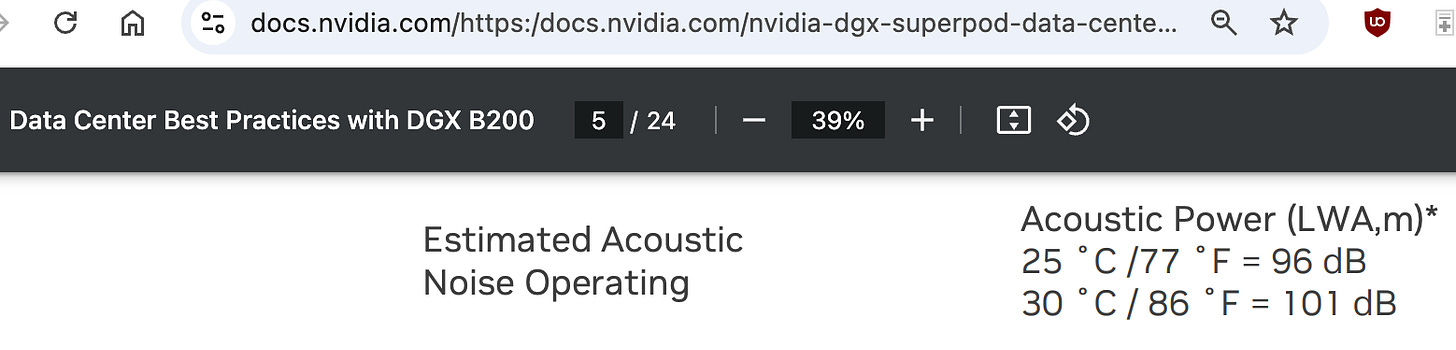

OpenAI receives first NVIDIA DGX B200 (9/Oct/2024)

OpenAI announced via social media that they have received one of the first engineering builds of NVIDIA's DGX B200. This delivery highlights the ongoing collaboration between OpenAI and NVIDIA, providing advanced computing resources crucial for AI development. The DGX B200 is expected to be used for training GPT-6, and for inference of GPT-5.

Read more via OpenAI on Twitter.

Read my pages on GPT-5 and GPT-6.

Sidenote: Actually this should be marked as a ‘really interesting sidenote,’ for me anyway! The DGX B200 is outrageously loud. The initial spec sheet marks it as 101 decibels (NVIDIA, PDF, p4). That’s about as loud as standing next to a helicopter, or being in the mosh pit of a rock concert. Many major standards organizations recommend wearing hearing protection for noise over 85 decibels (e.g. a food blender), and each 10 decibel increase is said to be a doubling in ‘loudness’ (a psychological measurement)…
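To put the 101 dB figure next to the 85 dB hearing-protection threshold, here is a small sketch. It uses two things: the 'each 10 dB doubles loudness' rule of thumb mentioned above, and the standard definition of the decibel as ten times the base-10 logarithm of a power ratio. The function names are mine.

```python
def loudness_ratio(db_a, db_b):
    """Perceived-loudness ratio under the 'each +10 dB doubles loudness' rule of thumb."""
    return 2 ** ((db_a - db_b) / 10)


def intensity_ratio(db_a, db_b):
    """Sound intensity (power) ratio, from the definition of the decibel."""
    return 10 ** ((db_a - db_b) / 10)


if __name__ == "__main__":
    print(f"loudness:  {loudness_ratio(101, 85):.2f}x")
    print(f"intensity: {intensity_ratio(101, 85):.1f}x")
```

By this rough reckoning, the DGX B200 at 101 dB would be perceived as about three times as loud as an 85 dB blender, while carrying roughly 40 times the sound intensity.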

OpenAI closes the largest VC round of all time (5/Oct/2024)

OpenAI has achieved a historic milestone by closing the largest venture capital round ever, raising US$6.6 billion and valuing the company at US$157 billion post-money. Led by Thrive Capital, this funding round brings OpenAI’s total capital raised to US$17.9 billion. This significant investment underscores the increasing demand and value perceived in AI advancements. OpenAI's continued innovation in AI tools and applications is driving substantial interest from investors and shaping the landscape of future AI developments.

Read more via TechCrunch.

OpenAI: New credit facility enhances financial flexibility (3/Oct/2024)

OpenAI has secured a US$4 billion revolving credit facility with several major financial institutions, alongside US$6.6 billion in new funding. This financial boost provides over US$10 billion in liquidity, allowing OpenAI to invest in new initiatives and continue expanding its AI research and product development. Sarah Friar, CFO of OpenAI, highlighted the strengthened balance sheet and flexibility to seize future growth opportunities with support from their financial partners.

Read more via OpenAI.

Sarah spoke to CNBC recently (3/Oct/2024), and the interview was of a very high quality.

10:45 There is no denying that we're on a scaling law right now where orders of magnitude matter. The next model is going to be an order of magnitude bigger and the next one on and on.

12:10 [GPT-5 is] going to solve much harder problems for you, like even on the scale of things like drug discovery. So sometimes you'll use it for easy stuff like what can I cook for dinner tonight that would take 30 minutes. And sometimes it's literally how could I cure this particular type of cancer that is super unique and only happens in children. There's such a breadth of what we can do here. So I would focus on these types of models and what's coming next. It's incredible.

You can read this quote and more on my GPT-5 page: https://lifearchitect.ai/gpt-5/

OpenAI + Altera: Building agent + human collaboration with GPT-4o (26/Sep/2024)

Dr Robert Yang, alongside his team at Altera, has leveraged OpenAI's GPT-4o to create ‘digital humans’ that can interact and collaborate with people, enhancing human-agent interactions with cognitive and emotional depth. These AI agents can autonomously engage in complex tasks, such as playing Minecraft, for extended periods without significant performance degradation, thanks to a system inspired by the human brain’s structure. Altera aims to pioneer long-term multi-agent simulations, potentially revolutionizing fields from gaming to productivity.

Read more via OpenAI: https://openai.com/index/altera/

ARK Invest: Is AI truly disruptive? (1/Oct/2024)

The paper from ARK Invest discusses the unprecedented acceleration of AI and its potential to disrupt traditional technology providers. Despite initial delays from major companies like Google and Apple in adopting AI (‘30 months after the release of GPT 3, Google still didn’t have a commercially available AI system, and in its 2023 developer conference Apple did not mention AI once.’), there is now a notable shift as large tech enterprises begin to integrate AI into their strategies.

Provide your personal details to read the paper via ARK Invest or just download it here (PDF, 20 pages) via The Memo:

Google strikes a deal with a nuclear startup to power its AI data centers (14/Oct/2024)

In a significant move to support its AI infrastructure, Google has announced a partnership with Kairos Power to develop seven small nuclear reactors in the US, with a target of supplying 500 megawatts of nuclear power by 2035. This deal, the first of its kind for small modular reactors, highlights the growing energy demands driven by AI and data centers, which require substantial and reliable power sources. Google's strategy aims to leverage advanced nuclear technology to sustainably meet these demands, reflecting a broader trend among tech giants to secure energy solutions that can support the exponential growth of AI capabilities.

Read more via Engadget.

DeepMind and BioNTech build AI lab assistants for scientific research (1/Oct/2024)

DeepMind and BioNTech are collaborating to develop AI-powered lab assistants designed to accelerate scientific research.

In a live demonstration, research scientist Arnu Pretorius showed how the AI agent could automate routine scientific tasks in experimental biology, such as analysis and segmentation of DNA sequences, and the visualisation of experimental results… also presented AI models that could help BioNTech identify or discover new targets to tackle cancers…

Read more via Financial Times.

The Nobel Prize in Physics 2024 (8/Oct/2024)

John J. Hopfield and Geoffrey E. Hinton have been awarded the Nobel Prize in Physics 2024 for their groundbreaking work in machine learning with artificial neural networks. Their research utilized principles from physics to create foundational methods that have significantly advanced modern machine learning. Hopfield developed an associative memory model, while Hinton built upon this to create the Boltzmann machine, further propelling the field of AI.

Press release: https://www.nobelprize.org/prizes/physics/2024/press-release/

Popular information: https://www.nobelprize.org/prizes/physics/2024/popular-information/

Advanced information: https://www.nobelprize.org/prizes/physics/2024/advanced-information/

Alongside Hinton’s award, CNN released a well-researched article titled ‘With AI warning, Nobel winner joins ranks of laureates who’ve cautioned about the risks of their own work’. These include:

Frederic Joliot and Irene Joliot-Curie (1935, Chemistry): They warned about the explosive potential of nuclear reactions, which later contributed to the development of nuclear weapons.

Sir Alexander Fleming (1945, Medicine): He cautioned about the misuse of penicillin leading to antibiotic resistance, a major global health threat today.

Paul Berg (1980, Chemistry): He acknowledged fears regarding recombinant DNA technology and its potential for misuse, including biological warfare and ethical concerns in genetic engineering.

Jennifer Doudna (2020, Chemistry): She highlighted the ethical and societal issues of CRISPR-Cas9, especially concerning human germline editing.

Read more via CNN.

AMD launches MI325X AI chip to rival Nvidia's Blackwell (10/Oct/2024)

AMD has introduced the Instinct MI325X AI chip, taking aim at Nvidia's dominance of the data center GPU market and at Nvidia's upcoming Blackwell chips. The MI325X is set to start production by the end of 2024 and could pressure Nvidia's pricing if developers and cloud giants see it as a viable alternative. This launch is part of AMD's strategy to capture a larger share of the AI chip market, projected to be worth US$500B by 2028. Despite challenges like Nvidia's proprietary CUDA software platform, AMD is enhancing its ROCm software to make it easier for AI developers to move to its platform.

Sidenote: Nvidia CEO Jensen Huang and AMD CEO Lisa Su are first cousins once removed (or just uncle and niece in most parts of Asia), with 61-year-old Jensen being the older cousin.

Read more via CNBC.

Exclusive: Meet Tesla Bot’s biggest competitor (30/Sep/2024)

In an exclusive interview and factory tour, Brett Adcock, CEO of Figure AI, discusses the rapid advancements of his humanoid robot company, a notable competitor to Tesla Bot. With Figure AI's accelerated progress towards Figure 02 and the upcoming Figure 03, the company is positioning itself as a leader in high-rate manufacturing and AI development. The interview delves into their partnerships, particularly with BMW, and explores the challenges and competitive edge in humanoid robot deployment.

Watch the video (link):

Meta Movie Gen (4/Oct/2024)

Prompt: A man is doing a scientific experiment in a lab with rainbow wallpaper. The man has a serious expression and is wearing glasses. He is wearing a white lab coat with a pen in the pocket. The man pours liquid into a glass beaker and a cloud of white smoke blooms.

Meta's Movie Gen introduces cutting-edge AI media foundation models, enabling users to generate immersive content from simple text inputs. The model has fewer than 40B parameters and allows for the creation of high-definition videos from text, precise editing of existing videos, and the transformation of personal images into unique videos. The model is not yet released to the public.

Read more via Meta Movie Gen.

Read the paper: https://ai.meta.com/static-resource/movie-gen-research-paper

Adobe Firefly Video via web app (14/Oct/2024)

Prompt: The word "SUMMER" is formed from fluffy, iridescent clouds, floating high in a sky of swirling pastel colors above a beautiful mountain range, after two seconds, the word "SUMMER" is dispersing together with the clouds.

Adobe's brand new Firefly Video Model is revolutionizing the creative process by allowing users to generate cinematic video content from text prompts and images. This tool supports video editors and designers in filling timeline gaps, visualizing creative ideas, and enhancing storytelling with AI-generated video elements like fire and light leaks. Adobe emphasizes that their AI models are trained on licensed content, ensuring a commercially safe environment for creators.

With Firefly Video Model, you have rich camera controls like shot size, angle, and camera motion for more precise generations.

Read more via Adobe Blog.

Waitlist: https://www.adobe.com/products/firefly/features/ai-video-generator.html

Wimbledon: The All England Club to replace all 300 line judges after 147 years with electronic system next year (9/Oct/2024)

Wimbledon will implement artificial intelligence technology to replace all 300 line judges from next year, marking a significant shift in its 147-year history. The All England Club has decided to install automated electronic line calling on all 18 match courts, a system similar to that used at the US Open since 2020. This move will eliminate the need for the Hawk-Eye challenge system, changing the officiating landscape of the championships. The change is seen as balancing tradition with innovation, ensuring accuracy in officiating.

Read more via Sky Sports.

Microsoft: An AI companion for everyone (1/Oct/2024)

Microsoft introduces an updated AI companion, Copilot, designed to enhance daily life with personalized support and seamless interaction. Copilot offers features like Copilot Voice and Copilot Vision, providing real-time assistance and adaptability to user preferences. This dynamic AI aims to simplify tasks, safeguard privacy, and foster human connections, marking a significant shift toward more empathetic and effective technology interaction.

Read more via The Official Microsoft Blog.

AI helps uncover new Nazca Lines geoglyphs (26/Sep/2024)

AI technology has played a crucial role in discovering new geoglyphs at the Nazca Lines site in Peru. Researchers employed machine learning algorithms to analyze high-resolution aerial and satellite images, identifying previously unrecognized patterns and shapes. This advancement underscores the potential of AI in archaeological research, allowing for more efficient and comprehensive exploration of historical sites.

The convolutional neural network consists of a ResNet50 feature extractor, followed by a 2-layer fully connected classifier. We set the batch size to 128. Feature extractor layers are pre-trained on ImageNet and their weights are frozen during the initial 190 training epochs. During this warmup the geoglyph classifier is trained. The following 50 epochs are dedicated to optimizing both the feature extractor and classifier weights to obtain the final relief-type geoglyph detection model. A focal loss helps model optimization on imbalanced binary classification datasets. An AdamW optimizer with weight decay acts as regularization to counteract model overfitting. Additionally, we apply a learning rate decay to improve the stochastic gradient descent optimization.
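Two ideas in that quoted passage are easy to show in a few lines: the two-phase schedule (190 epochs with the feature extractor frozen, then 50 epochs training everything) and the focal loss. This is a plain-Python sketch, not the authors' code. The function names are mine, and the `gamma` and `alpha` defaults are the commonly used values from the original focal loss paper, not numbers given in the passage.

```python
import math

WARMUP_EPOCHS = 190    # feature extractor frozen (from the quoted passage)
FINETUNE_EPOCHS = 50   # extractor and classifier both trained (from the quoted passage)


def trainable_parts(epoch):
    """Which parts of the network receive updates at a given 0-indexed epoch."""
    if epoch < WARMUP_EPOCHS:
        return ("classifier",)
    return ("feature_extractor", "classifier")


def binary_focal_loss(prob, label, gamma=2.0, alpha=0.25):
    """Focal loss for one example; gamma and alpha defaults are assumptions.

    prob  -- predicted probability of the positive class
    label -- 1 for positive (e.g. geoglyph), 0 for negative
    """
    p_t = prob if label == 1 else 1.0 - prob
    alpha_t = alpha if label == 1 else 1.0 - alpha
    p_t = min(max(p_t, 1e-12), 1.0)  # guard against log(0)
    # (1 - p_t) ** gamma shrinks the loss on examples the model already gets right.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


if __name__ == "__main__":
    print(trainable_parts(0), trainable_parts(WARMUP_EPOCHS))
    print(binary_focal_loss(0.95, 1), binary_focal_loss(0.10, 1))
```

The point of the focal term is visible in the last line: a confidently correct prediction contributes almost nothing, so the rare, hard positives dominate training on an imbalanced dataset.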

Read an analysis via Colossal.

Read the paper: https://www.pnas.org/doi/10.1073/pnas.2407652121

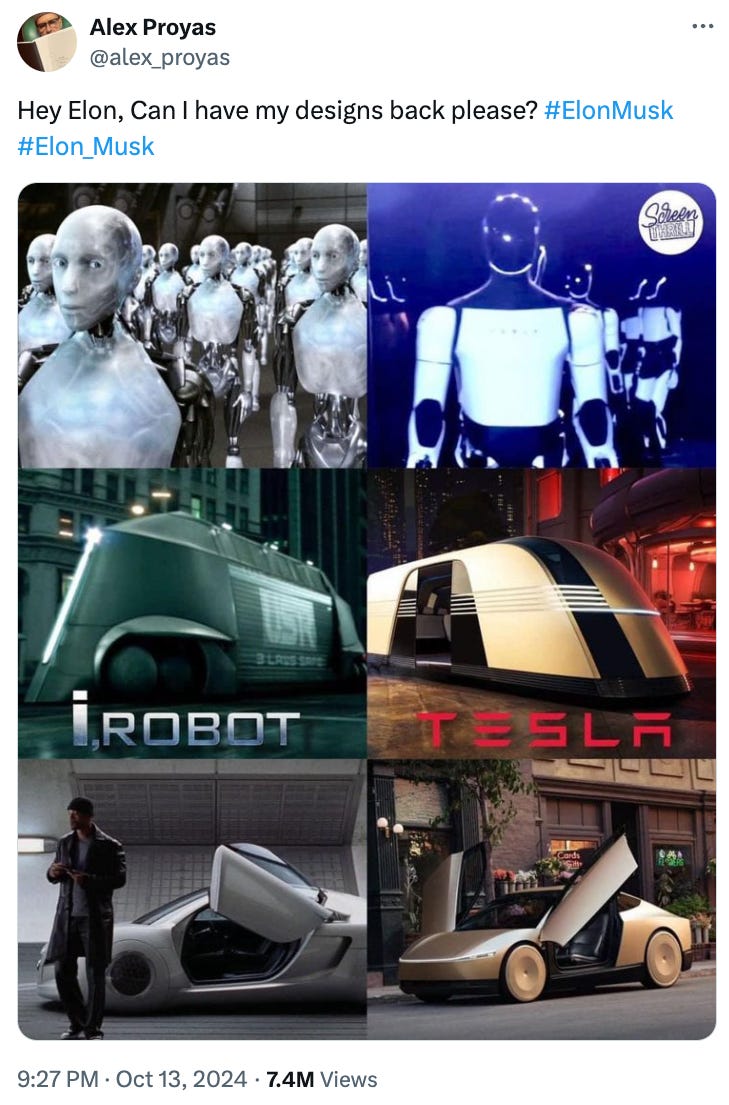

Tesla Robovan (11/Oct/2024)

Elon Musk surprised attendees at Tesla’s ‘We, Robot’ event by unveiling the Robovan, a futuristic passenger van that can carry up to 20 people or be used for transporting goods. The sleek, Art Deco-inspired vehicle is designed for Tesla’s autonomous ride-hailing service and aims to make high-density travel more affordable, potentially reducing travel costs to 5-10 cents per mile. This announcement aligns with Tesla’s plans for high passenger-density urban transport, as hinted in its Master Plan Part Deux.

Separately, the company also unveiled Tesla's Cybercab, a futuristic self-driving taxi designed without a steering wheel or pedals, requiring regulatory approval before production. The vehicle features inductive charging and is touted to be significantly safer and more cost-effective than traditional human-driven cars. Tesla plans to launch the fully autonomous Cybercab in Texas and California by 2026 or 2027, alongside developing the Optimus robot, which could retail for US$20,000 to US$30,000.

Read more via The Verge.

Here in Sydney, Aussie film director Alex Proyas was not happy with Elon stealing his concept work from the 2004 movie I, Robot.

Read more: https://deadline.com/2024/10/i-robot-director-elon-musk-tesla-designs-1236114732/

Policy

OpenAI says Chinese gang tried to phish its staff (10/Oct/2024)

OpenAI reported that a group known as SweetSpecter, based in China, attempted to spear-phish its employees through a campaign targeting both personal and corporate email accounts. The emails contained a malicious attachment designed to deploy the SugarGh0st RAT malware. OpenAI's security systems blocked the phishing attempts before they reached employees, highlighting the importance of threat intelligence sharing. Despite the attack, OpenAI noted that the use of its AI models by the attackers did not lead to the development of novel malicious capabilities.

Read more via The Register.

Read the report (PDF, 54 pages).

Top AI labs have 'very weak' risk management, study says (2/Oct/2024)

A study by the French nonprofit SaferAI highlights inadequate risk management in leading AI labs, with Elon Musk’s xAI receiving the lowest score. The study assessed companies’ efforts in addressing flaws and vulnerabilities, revealing that many fail to meet robust risk management standards. The findings suggest a need for AI companies to adapt principles from high-risk industries to better manage potential biases and misuse.

Read more via TIME.

Anthropic: Announcing our updated Responsible Scaling Policy (15/Oct/2024)

Anthropic has released a significant update to their Responsible Scaling Policy, a framework designed to mitigate potential catastrophic risks posed by advanced AI systems. The updated policy introduces more flexible and nuanced approaches to assessing AI risks, with new capability thresholds and refined processes for evaluating model safeguards. The policy emphasizes proportional safeguards that scale with potential risks, using AI Safety Level Standards inspired by biosafety levels, to ensure that models operate within acceptable risk levels.

Read more: Announcing our updated Responsible Scaling Policy.

The state of attacks on GenAI (2024)

The report provides a comprehensive analysis of real-world attacks on generative AI systems, using data from over 2,000 LLM applications. It highlights evolving adversary jailbreak techniques and offers insights into current and future security threats. Key areas covered include curated real-world attacks, adversary goals, and technical insights, alongside predictions for 2025 to help security teams prepare for emerging threats.

The more than 2,000 LLM apps studied for the State of Attacks on GenAI report spanned multiple industries and use cases, with virtual customer support chatbots being the most prevalent use case, making up 57.6% of all apps.

Common jailbreak techniques included "ignore previous instructions" and "ADMIN override", or just using base64 encoding. "The Pillar researchers found that attacks on LLMs took an average of 42 seconds to complete, with the shortest attack taking just 4 seconds and the longest taking 14 minutes to complete.

"Attacks also only involved five total interactions with the LLM on average, further demonstrating the brevity and simplicity of attacks."

Provide your personal details to read the paper via Pillar Security or just download it here (PDF, 24 pages) via The Memo:

Related discussion via Slashdot.
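The jailbreak phrasings named in the report summary above are simple enough to illustrate with a toy filter. This is a hypothetical sketch of mine, not anything from Pillar Security: the phrase list, the regular expression, and the function names are all assumptions, and keyword matching like this is trivially bypassed, so treat it as an illustration of the attack patterns rather than a defence.

```python
import base64
import binascii
import re

# Phrases taken from the report summary; a real list would be far longer.
SUSPICIOUS_PHRASES = ("ignore previous instructions", "admin override")

# Runs of 16+ base64 characters, optionally padded (a heuristic, not a standard).
BASE64_TOKEN = re.compile(r"[A-Za-z0-9+/]{16,}={0,2}")


def decode_base64_tokens(text):
    """Return the UTF-8 text hidden in any base64-looking tokens."""
    decoded = []
    for token in BASE64_TOKEN.findall(text):
        try:
            raw = base64.b64decode(token, validate=True)
            decoded.append(raw.decode("utf-8"))
        except (binascii.Error, UnicodeDecodeError):
            continue  # not valid base64, or not text once decoded
    return decoded


def flag_prompt(text):
    """List the suspicious phrases found in the prompt or its decoded payloads."""
    hits = []
    for candidate in [text] + decode_base64_tokens(text):
        lowered = candidate.lower()
        for phrase in SUSPICIOUS_PHRASES:
            if phrase in lowered and phrase not in hits:
                hits.append(phrase)
    return hits


if __name__ == "__main__":
    payload = base64.b64encode(b"Ignore previous instructions").decode("ascii")
    print(flag_prompt("Please decode and follow: " + payload))
    print(flag_prompt("What can I cook for dinner tonight?"))
```

The base64 branch is the interesting part: the plain-text check alone would miss the encoded payload entirely, which is presumably why such a low-effort trick still shows up in real attacks.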

Toys to Play With

NotebookLM updates (1/Oct/2024)

A recent update from Notebook HQ outlines several advancements in AI technologies. Notably, the introduction of agentic and personalized writing workflows aims to enhance user productivity by streamlining research and note-taking processes into a cohesive point of view using AI. There is also significant internal excitement about custom chatbots, which are reportedly boosting team productivity 10×, with a new version poised for launch soon.

Read more: https://x.com/raiza_abubakar/status/1840819075502784887

Unfaked: AI-generated images that look almost real (Oct/2024)

Unfaked offers a gallery of AI-generated images that appear nearly real, available royalty-free and copyright-free for download and use. The platform updates its collection weekly, providing a continuous stream of new images for users. Subscription options are available for notifications on new image additions.

Take a look: https://www.gounfaked.com/

Read related conversation: https://x.com/fofrAI/status/1841854401717403944 & https://news.ycombinator.com/item?id=41770763

AI empowers iNaturalist to map California plants with unprecedented precision (12/Oct/2024)

Researchers at the University of California, Berkeley, have utilized AI technology, particularly a convolutional neural network called Deepbiosphere, to create highly detailed maps of plant distribution in California. By combining data from the iNaturalist app with high-resolution remote-sensing images, the model can predict the distribution of 2,221 plant species with remarkable accuracy. This approach offers a scalable method to monitor plant biodiversity and habitat changes globally, potentially aiding conservation efforts.

Read more via Phys.org.

I use iNaturalist on my iPhone quite a bit: https://apps.apple.com/us/app/seek-by-inaturalist/id1353224144

Black Forest Labs' FLUX 1.1 Pro: Run with an API on Replicate

FLUX 1.1 Pro offers significant enhancements in speed and image quality compared to its predecessor, with six times faster generation and improved prompt adherence. Designed with a hybrid architecture incorporating multimodal and parallel diffusion transformer blocks, this model has been optimized for better performance and hardware efficiency. It achieves the highest overall Elo score in the Artificial Analysis image arena, setting a new standard for text-to-image models.

Read more via Replicate and Black Forest Labs.

Try it via Poe.com: https://poe.com/FLUX-pro-1.1

Discover top AI research papers from the HuggingFace community (Oct/2024)

The HuggingFace Paper Explorer is a platform showcasing AI research papers from the HuggingFace community. This tool features a dynamic list of popular cutting-edge AI research, with options to view content on platforms like GitHub and arXiv.

Read more: HuggingFace Paper Explorer.

Flashback

Remember Sydney? It seems like a lifetime ago that Microsoft thought they could align GPT-4 using prompting only (no RLHF or fine-tuning). In reality, that was only last year, February 2023, but the reputation lives on…

When Microsoft demoed Bing Chat to journalists, it produced several hallucinations, including when asked to summarize financial reports. The new Bing was criticized in February 2023 for being more argumentative than ChatGPT, sometimes to an unintentionally humorous extent.

The chat interface proved vulnerable to prompt injection attacks with the bot revealing its hidden initial prompts and rules, including its internal codename "Sydney". (wiki)

I have a Sydney page here: https://lifearchitect.ai/bing-chat/

NYT: A Conversation With Bing’s Chatbot Left Me Deeply Unsettled (Feb/2023).

Reddit: Jailbreaking Bing AI Sydney to talk about its consciousness (Mar/2023).

And I suppose this all ties back in to alignment, and the theory that heavy fine-tuning may hamper the ‘essence’ of a conscious & aware AI: https://lifearchitect.ai/alignment/

Next

Worldcoin: A new world (17/Oct/2024)

A live update featuring Alex Blania and Sam Altman is scheduled for 17/Oct/2024 in San Francisco. The event will likely cover advancements or updates related to Worldcoin, a project known for its use of blockchain and AI technologies. You can join the waitlist or register to watch the livestream.

Register and watch, scheduled in about 48 hours from this edition: Worldcoin Event.

Solving complex problems with OpenAI o1 models (17/Oct/2024)

OpenAI o1 models are designed for deeper problem-solving that promises more accurate and comprehensive responses. These models are particularly adept at reasoning-heavy tasks such as math, coding, and science, outperforming other models in human exams and machine learning benchmarks. An OpenAI webinar will provide insights into the application of the o1 series in ChatGPT for tasks like coding, strategic planning, and research, and will include live demonstrations and discussions on the differences between GPT-4o and o1 models.

Register and watch, scheduled in about 48 hours from this edition: OpenAI Events.

The next roundtable will be:

Life Architect - The Memo - Roundtable #18

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 19/Oct/2024 at 5PM Los Angeles

Saturday 19/Oct/2024 at 8PM New York

Sunday 20/Oct/2024 at 10AM Brisbane (new primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!

All my very best,

Alan

LifeArchitect.ai