FOR IMMEDIATE RELEASE: 17/Sep/2023

Dr Sébastien Bubeck, Microsoft Research (7/Apr/2023):

There is some intelligence in this [GPT-4] system… Beware of the trillion-dimensional space. It's something which is very, very hard for us as human beings to grasp. There is a lot that you can do with a trillion parameters…

It could absolutely build an internal representation of the world, and act on it as the processing progresses through the layers and through the sentence temporally… We shouldn't think about those neural networks as learning a simple concept like ‘Paris is the capital of France.’ It's doing much more, like learning operators, it’s learning algorithms. It's not just retrieving information, not at all. It has built internal representations that allows it to reproduce the data that it has seen succinctly… Yes, it was trained just to predict the next word. But what emerged out of this is a lot more than just a statistical pattern-matching object.

Welcome back to The Memo.

You’re joining full subscribers from Harvard, Rice, Columbia, MIT, Cornell, Stanford, Brown, UC Berkeley, FSU, Princeton, and more…

This is another long edition. I don’t usually announce my keynotes here (nearly all of them are for private bodies), but I’m really looking forward to opening the next public event in Ukraine by Devoxx, ‘AI – Friend or Foe?’ My keynote is called ‘Superintelligence: No one is smart enough’ and the recording will be made available to full subscribers. The next roundtable is on 23/Sep/2023, details at the end of this edition.

In the Toys to play with section, we look at a new prediction viz for GPT, a brilliant application of image models for your own family, and a new vision-language-action model for self-driving vehicles.

The BIG Stuff

Andreessen Horowitz: How Are Consumers Using Generative AI? (13/Sep/2023)

ChatGPT: estimated 200M monthly users, 1.6B monthly visits (Jun/2023).

ChatGPT: 24th most visited website globally.

80% of the top 50 AI products didn’t exist a year ago.

Read more: https://a16z.com/how-are-consumers-using-generative-ai/

Falcon 180B is the largest open(-ish) dense model right now (6/Sep/2023)

TII in Abu Dhabi released a 180B-parameter version of Falcon, trained on 3.5T tokens (a tokens-to-parameters ratio of about 20:1). It is the largest and highest-performing open dense model in the world right now, roughly 2.5 times the size of the largest Llama 2 (70B). The definition of ‘open’ does not include full commercial use.
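For readers who want to check the ratio themselves, here is a minimal sketch. It assumes the 20:1 figure means training tokens per parameter, and the function name is just an illustrative choice, not anything from TII.

```python
def tokens_per_parameter(tokens: float, parameters: float) -> float:
    """Return how many training tokens the model saw per parameter."""
    return tokens / parameters


# Figures quoted above for Falcon 180B: 3.5T tokens, 180B parameters.
falcon_ratio = tokens_per_parameter(tokens=3.5e12, parameters=180e9)

print(f"Falcon 180B: {falcon_ratio:.1f} tokens per parameter")
# prints "Falcon 180B: 19.4 tokens per parameter", which rounds to about 20:1
```

The same function works for any other model where both the token count and parameter count are published.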

Read more: https://huggingface.co/blog/falcon-180b

Try the demo: https://huggingface.co/spaces/tiiuae/falcon-180b-demo

See Falcon 180B on the Models Table and Timeline.

Watch a replay of my Falcon 180B livestream.

Apple UniLM 34M + Apple Ajax 200B (7/Sep/2023)

Hacker Jack Cook discovered Apple’s newest LLM, a tiny Transformer model used for predictive text on-device in the next version of macOS (Sonoma 14.0), and the next version of iOS (iOS 17).

From my calculations based on sizes of each layer, Apple’s predictive text model appears to have about 34 million parameters, and it has a hidden size of 512 units. This makes it much smaller than even the smallest version of GPT-2.

This one is so interesting to me because Apple has 2B+ active devices out there (Feb/2023).

UniLM may be the very first on-device Transformer model, and it’s hitting a huge slice of the population.

Read more: https://jackcook.com/2023/09/08/predictive-text.html

Browse the repo: https://github.com/jackcook/predictive-spy

Apple’s most advanced LLM, known internally as Ajax GPT, has been trained on “more than 200 billion parameters” and is more powerful than OpenAI’s GPT-3.5…

See UniLM on the Models Table.

We covered some of this (UniLM and Ajax) in my recent livestream (replay).

Bonus: There were 9 major model announcements in the first two weeks of Sep/2023.

TinyLlama

Falcon 180B

FLM-101B

Persimmon-8B

UniLM

phi-1.5

NExT-GPT

MoLM

DeciLM

Read more (including playground and paper) on the Models Table and Timeline.

GPT-4 hits 99th percentile in creativity testing (25/Aug/2023)

The gold standard for testing creativity is the Torrance Tests of Creative Thinking, TTCT (wiki). The TTCT was designed to measure six sub-constructs of creativity and creative strengths: fluency, flexibility, originality, elaboration, titles, closure, and creative strength.

GPT-4 scored in the top 1% of test-takers for the originality of its ideas… Scholastic Testing Service is a private company, it does not share its prompts with the public. This ensured that GPT-4 would not have been able to scrape the internet for past prompts and their responses.

Read more: https://theconversation.com/ai-scores-in-the-top-percentile-of-creative-thinking-211598

I’ve updated my viz to include this important benchmark:

Stability AI releases Stable Audio (13/Sep/2023)

I keep thinking back to when we didn't have Stability AI, and it was just Google and Meta teasing us with mouth watering papers, but never letting us touch them. I'm so thankful Stability exists. (HN user, 13/Sep/2023)

Stable Audio is a latent diffusion model (like Stable Diffusion) trained on >800k [studio quality] sound files. A 907M parameter U-net powers Stable Audio.

Read release: https://stability.ai/research/stable-audio-efficient-timing-latent-diffusion

Try it out: https://stableaudio.com/

Exclusive: Microsoft argues about data quality with phi-1.5 (12/Sep/2023)

Microsoft’s latest LLM is a 1.3B-parameter model trained on 150B tokens, with performance comparable to models 5x larger.

The most interesting part of this entire piece is that Microsoft is arguing against ever-larger datasets, and proposing that dataset quality is more important.

It reminds me of my exploration of this topic more than two years ago, back in Jun/2021, with my paper Integrated AI: Dataset quality vs quantity via bonum (GPT-4 and beyond). Microsoft came to the same conclusion, finding that (I’m gonna bold the whole thing; it’s important!):

…the creation of a robust and comprehensive dataset demands more than raw computational power: It requires intricate iterations, strategic topic selection, and a deep understanding of knowledge gaps to ensure quality and diversity of the data. We speculate that the creation of synthetic datasets will become, in the near future, an important technical skill and a central topic of research in AI.

Read the paper: https://arxiv.org/abs/2309.05463

Watch the related video by Microsoft’s Dr Sébastien Bubeck.

See phi-1.5 on the Models Table.

Exclusive: Inflection ready to train a 1,000T parameter model (1/Sep/2023)

This whole interview with Inflection’s CEO is great. But there are some monumental points in there…

We [Inflection] have 6,000 H100s in operation today, training models. By December, we will have 22,000 H100s fully operational. And every month between now and then, we’re adding 1,000 to 2,000 H100s. So people can work out what that enables us to train by spring [Mar/2024 in US], by summer of next year [Jun/2024 in US], and we’ll continue training larger models.

We’re going to be training models that are 1,000x larger than they currently are in the next three years. Even at Inflection, with the compute that we have, will be 100x larger than the current frontier models in the next 18 months.

…[and we’re not even] an AGI company; we’re not trying to build a superintelligence. We’re trying to build a personal AI.

This says to me that these companies are going big. Really big.

1,000 trillion parameters is 1 quadrillion parameters.

GPT-4 is estimated at 1.76T parameters (OpenAI has not confirmed a figure). 1,000× that is 1,760T (or 1.76 quadrillion) parameters. The capabilities of a model 1,000× GPT-4 would be something to behold…
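The unit conversion is easy to get wrong by a factor of 1,000, so here is a quick sanity check. It is only a sketch: the 1.76T figure is the estimate used above, not an official number, and the variable names are illustrative.

```python
TRILLION = 10**12
QUADRILLION = 10**15

# Assumption: 1.76T is the GPT-4 parameter estimate quoted above.
gpt4_params = 1_760_000_000_000
scaled_params = gpt4_params * 1_000

# 1,000 trillion is exactly 1 quadrillion.
assert 1_000 * TRILLION == QUADRILLION

print(f"{scaled_params / TRILLION:,.0f}T parameters")
# prints "1,760T parameters"
print(f"{scaled_params / QUADRILLION:.2f} quadrillion parameters")
# prints "1.76 quadrillion parameters"
```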

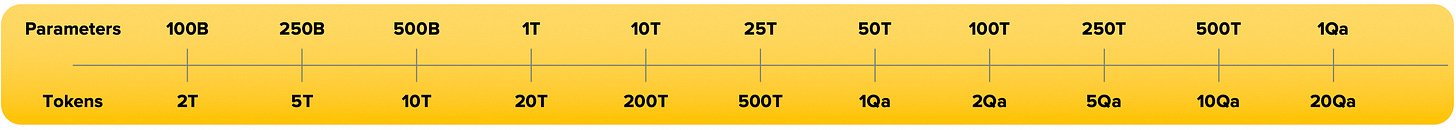

I had a hunch we were quickly heading this way when I designed the original LifeArchitect.ai report card ruler in 2022 (shown below) which caps out at 1Qa (quadrillion) parameters. Next stop is quintillion (Qi).

Check out my latest visualization showing humans versus AI (GPT-4 and Gemini):

The Interesting Stuff

Google OPRO self-improves (7/Sep/2023)

…prompts optimized by OPRO outperform human-designed prompts by up to 8% on GSM8K [maths], and by up to 50% on Big-Bench Hard [IQ] tasks.

Read the paper: https://arxiv.org/abs/2309.03409

This milestone bumped up my conservative countdown to AGI from 54% to 55%.

Alan’s conservative countdown to AGI: https://lifearchitect.ai/agi/

Use case: GPT for medical diagnosis (11/Sep/2023)

Article title: A boy saw 17 doctors over 3 years for chronic pain. ChatGPT found the right diagnosis

The frustrated mom made an account and shared with the artificial intelligence platform everything she knew about her son's symptoms and all the information she could gather from his MRIs.

“We saw so many doctors. We ended up in the ER at one point. I kept pushing,” she says. “I really spent the night on the (computer) … going through all these things."

So, when ChatGPT suggested a diagnosis of tethered cord syndrome, "it made a lot of sense," she recalls.

Read the discussion on Hacker News.

Use of GPT-4 to Analyze Medical Records of Patients With Extensive Investigations and Delayed Diagnosis (14/Aug/2023)

I do like a good empirical case study like the one above, but here’s a rigorous paper from a few weeks ago.

The accuracy of the primary diagnoses made by GPT-4 was 4 of 6 patients (66.7%), [and human clinicians was] 2 of 6 patients (33.3%)…

GPT-4 may increase confidence in diagnosis and earlier commencement of appropriate treatment, alert clinicians missing important diagnoses, and offer suggestions similar to specialists to achieve the correct clinical diagnosis, which has potential value in low-income countries with lack of specialist care.

Read the paper: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10425828/

AI21 Labs riding GenAI wave with $155 million Series C at $1.4 billion valuation (31/Aug/2023)

I’ve been playing with Israeli lab AI21’s models for a long time, starting with Jurassic-1 back in 2021. Leta even spoke to the J1 model (via Julian, named after SMPY Prof Julian Stanley) in Episode 17 in Aug/2021. Now with the J2 model, the lab is even bigger.

AI21 employs around 200 people and plans to recruit an additional 100 in the coming year.

AI21’s proprietary Jurassic-2 foundation models are some of the world’s largest and most sophisticated LLMs. Jurassic-2 powers AI21 Studio, a developer platform for building custom text-based business applications off of AI21’s language models; and Wordtune, a multilingual reading and writing AI assistant for professionals and consumers…

The new funding comes on the heels of AI21’s recent collaborations with customers in diverse sectors, among them Carrefour, Clarivate, eBay, Guesty, Monday.com and Ubisoft.

Read (not very much) more: https://www.calcalistech.com/ctechnews/article/byy4pxa6h

Morgan Stanley + OpenAI launching financial chatbot (5/Sep/2023)

After testing it with 1,000 financial advisers for some months, the bank will roll out a generative artificial intelligence bot this month, developed with the makers of ChatGPT, OpenAI.

Bankers can use the virtual assistant to quickly find research or forms instead of sifting through hundreds of thousands of documents.

The bank is also developing technology which eventually, with clients' permission, could create a meeting summary of the conversation, draft a follow-up email suggesting next steps, update the bank's sales database, schedule a follow-up appointment, and learn how to help advisers manage clients' finances on areas such as taxes, retirement savings and inheritances. The details of the program have not yet been reported.

We were doing all this and more in 2020, by the way. There are many, many better use cases for AI today.

More OpenAI news (Sep/2023)

OpenAI had a lot of news in the last two weeks; some medium-sized, and some just interesting sidenotes.

OpenAI: Teaching with AI (31/Aug/2023).

OpenAI-backed GPT-4 platform Speak expanding to US (31/Aug/2023).

OpenAI dev conference in SF on 6/Nov/2023 (6/Sep/2023).

OpenAI CEO gets Indonesia’s first Golden Visa (5/Sep/2023).

Google investing $20M to further research into ‘responsible AI’ (12/Sep/2023)

Inaugural grantees of the Digital Futures Fund include the Aspen Institute, Brookings Institution, Carnegie Endowment for International Peace, the Center for a New American Security, the Center for Strategic and International Studies, the Institute for Security and Technology, Leadership Conference Education Fund, MIT Work of the Future, R Street Institute and SeedAI. The fund will support institutions from countries around the globe, and we look forward to sharing more on these organizations soon.

Read the release: https://blog.google/outreach-initiatives/google-org/launching-the-digital-futures-project-to-support-responsible-ai/

Read more: https://www.theregister.com/2023/09/11/google_think_tanks/

IBM invests in $4.5 billion A.I. unicorn Hugging Face

Since May, Hugging Face and IBM had already been working together on a suite of A.I. tools. As of this month, IBM has also uploaded around 200 open A.I. models to Hugging Face’s platform. One of the models IBM posted to Hugging Face was a collaboration with NASA, marking the space agency’s first ever open-source A.I. model…

Google, Amazon, Nvidia, Intel, and Salesforce all [also] participated.

Scotland is planning a transition to a 4-day work-week (4/Sep/2023)

Tyler Grange, an environmental consulting firm based in England. Managing director Simon Ursell told NPR that the firm invested in technology and stopped doing the "day-to-day rubbish" of certain administrative tasks in order to squeeze the required weekly workload into four days instead of five….

"I think the real question is: Why five days? I haven't heard anybody give me a reason why we work five days other than tradition," he said. "What I think the trial has proved is that working in a way that is most applicable to your organization to achieve the sweet spot of productivity, the best productivity for the time, that's what you've got to be aiming at." (NPR, 21/Feb/2023)

He’s thinking along the right lines. Now extend it a little more, and you have the next few years of embodied AI. Large language models inside robots will ensure that we quickly enter the ‘post-scarcity’ (or abundance) economy (wiki). This is the moment where AI can mine our ore, build our houses, do our work, and cater to our every whim. And it will happen in your lifetime. It has already begun…

Read the Scotland article: https://archive.md/gN83i

Take a look at the humanoids already available to buy: https://lifearchitect.ai/humanoids/

Ray Kurzweil is back (Aug/2023)

…large language models, which I think is the best example of AI, they’re already quite remarkable. You can have very intelligent discussion with them about anything. So nobody on this planet, Einstein, Freud and so on, could do that.

Read the transcript: https://lifearchitect.ai/kurzweil/#202308

Coca‑Cola Y3000 (Sep/2023)

To explore that hypothetical future, Coca-Cola developed a mystery flavor and wrapped it in an AI-themed marketing package and mobile web-based app that draws upon both human designers and the aforementioned Stable Diffusion.

Coke is working with Bain to integrate OpenAI’s models including DALL-E 2, so it may be that Coke used DALL-E 2 for the design and marketing of this campaign, while users are given access to Stable Diffusion instead.

Read more: https://www.bain.com/vector-digital/partnerships-alliance-ecosystem/openai-alliance/

Watch the Coke/DALL-E 2 video from Mar/2023 (link):

Policy

US restricts exports of some Nvidia chips to certain Middle East countries

Further to our exploration of US restrictions on GPU exports to China, the US has now extended these restrictions to unspecified Middle East countries. Nvidia gave no reason for the new rules, but Reuters noted the rationale given for last year’s China restrictions:

Nvidia, which gave no reason for the new restrictions in the filing dated Aug. 28, last year said U.S. officials informed them the rule "will address the risk that products may be used in, or diverted to, a 'military end use' or 'military end user' in China."

Reuters: https://archive.md/Hc9qo

Axios Exclusive survey: Experts favor new U.S. agency to govern AI (6/Sep/2023)

AI experts at leading universities favor creating a federal "Department of AI" or a global regulator to govern artificial intelligence over leaving that to Congress, the White House or the private sector. That's the top-level finding of the new Axios-Generation Lab-Syracuse University AI Experts Survey of computer science professors from top U.S. research universities. The survey includes responses from 213 professors of computer science at 65 of the top 100 U.S. computer science programs, as defined by SCImago Journal rankings.

The survey found experts split over when or if AI will escape human control -- but unified in a view that the emerging technologies must be regulated. "Regulation" was the top response when asked what action would move AI in a positive direction. Just 1 in 6 said AI shouldn't or can't be regulated. Only a handful trust the private sector to self-regulate. About 1 in 5 predicted AI will "definitely" stay in human control. The rest were split between those saying AI will "probably" or "definitely" get out of human control and those saying "probably not."

This is an excellent example of ‘no one is smart enough for AI’. When only 20% of computer science professors believe that AI will definitely stay in human control, we have a problem. If they are like most of the CS profs I’ve met, they are locked in their 1990s mindset, and have no place commenting on post-2020 AI.

Be very careful who you listen to…

Read more: https://www.axios.com/2023/09/05/ai-regulations-expert-survey

Hawley, Blumenthal unveil bipartisan AI framework (8/Sep/2023)

While we wait to see the draft, the announcement is just a screenshot(!) on Twitter (8/Sep/2023):

Read more: https://thehill.com/policy/technology/4193967-hawley-blumenthal-unveil-bipartisan-ai-framework/

There has already been quite a bit of criticism:

Licensing for large models is based on illusory risks drummed up by folks who want to create an AT&T 1950's style regulatory moat for existing companies and lock out competition. This is, to put it plainly, un-American. Freedom to compete and to create are what made American business what it is today. Creating this kind of overreaching framework will stifle innovation and smash American competitiveness. (Twitter, 12/Sep/2023)

Toys to Play With

Dr Harvey Castro’s new AI in healthcare course, AIDE (Sep/2023)

Welcome to A.I.D.E - Artificial Intelligence Decoding & Exploring in Healthcare. Taught by Dr. Harvey Castro, this course is your gateway to understanding and applying AI in the medical field. With 65 lessons across eight modules, you'll dive deep into everything from Large Language Models to ethical considerations.

Exclusive 30% discount. Use code THEMEMO30 at checkout.

Join the course: https://www.harveycastromd.info/aide

LINGO-1: Exploring Natural Language for Autonomous Driving (14/Sep/2023)

I’m putting this under ‘Toys to play with’ because you could spend a lot of time watching and reading about this huge application of LLMs/VLMs to the niche field of self-driving cars.

Microsoft-backed self-driving car startup Wayve has pioneered AI-backed cars that explain their decisions while driving.

The commentary technique is reminiscent of roadcraft used by professional driving instructors in their lessons: instructors say interesting aspects of the scene aloud and justify their driving actions using short phrases, helping their students learn by example…

In this first video, LINGO-1 describes the actions it takes when it overtakes a parked car.

LINGO-1: I’m edging in due to the slow-moving traffic.

LINGO-1: I’m overtaking a vehicle that’s parked on the side.

LINGO-1: I’m accelerating now since the road ahead is clear.

Read more about the LINGO-1 model.

Watch one of the short videos (link), or the full list of videos:

Fully client-side GPT2 prediction visualizer (Aug/2023)

This is a fun way to learn more about how LLMs predict the next word.

Try it: https://perplexity.vercel.app/
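If you want a feel for what is happening under the hood, here is a toy sketch of next-word prediction. It is not the visualizer's code: the candidate words and their scores are made up for illustration, and perplexity is included only because the site's address suggests that is the measure it shows.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def perplexity(token_probs):
    """Exponentiated average negative log-probability of the observed tokens."""
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# Hypothetical scores for the word after "Paris is the capital of".
logits = {"France": 6.0, "Europe": 3.0, "fashion": 2.0, "Texas": 0.5}
probs = softmax(logits)
next_word = max(probs, key=probs.get)
print(next_word, round(probs[next_word], 3))

# Hypothetical probabilities a model gave to each actual word in a sentence.
print(round(perplexity([0.5, 0.25, 0.5, 0.25]), 3))
```

Lower perplexity means the model was less surprised by the text. A model that gave every actual word a probability of 1 would score exactly 1.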

My Future Children (Sep/2023)

This is an interesting application of image-to-image models.

The technology behind My Future Children's magic are advanced AI algorithms and generative models combined to create potential offspring images based on parent photos.

Take a look (first image free): https://www.myfuturechildren.com/

Flashback

Two years ago this week… Don’t say I didn’t warn you!

Next

Google DeepMind Gemini is on its way! Some people are claiming to have access already:

I have been testing a version of Google’s Gemini and find it very interesting. It is equivalent to ChatGPT-4 but with newly up to the second knowledge base. This saves it from some hallucinations. (Twitter, 15/Sep/2023)

The next roundtable will be:

Life Architect - The Memo - Roundtable #2 with Harvey Castro

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 23/Sep/2023 at 5PM Los Angeles

Saturday 23/Sep/2023 at 8PM New York

Sunday 24/Sep/2023 at 8AM Perth (primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!

All my very best,

Alan

LifeArchitect.ai