The Memo - 12/Feb/2023

WordPress Jetpack AI, GPT-3’s 50+ models, Bing Chat, and much more!

FOR IMMEDIATE RELEASE: 12/Feb/2023

Welcome back to The Memo.

Another big week, and this edition is already full so I’m sending it out!

Al Jazeera picked up my research on GPT-3.5 + Raven’s IQ testing.

Reuters grabbed an early quote from my ChatGPT cost estimates, below.

And one of my consulting clients provided some excellent insights on Microsoft AI in this article (investor.com).

In the Toys to play with section, we look at a new story generator for kids using GPT-3 and Midjourney, one of my favourite ChatGPT alternatives now with visuals, and much more…

The BIG Stuff

Large language models come to your search engine (8/Feb/2023)

Microsoft and Google went head-to-head recently with new AI chat models built into their services. Here’s the ranking in plain English:

1. OpenAI’s ChatGPT, with 175B parameters or connections (estimated), is still the top dog in terms of both performance and user count.

2. Microsoft’s Bing Chat is probably based on GPT-3.5 (text-davinci-003) with 175B parameters, and adds a layer of web searching through their ‘Prometheus’ model. It remains to be seen whether this is in fact better than pure ChatGPT, or has finally recreated the exciting WebGPT by OpenAI from more than a year ago (Dec/2021). See also my video on the WebGPT model.

3. Google’s Bard is based on LaMDA with 137B parameters or connections, so it is in last place on this list.

Note that some of #2 and all of #3 are vaporware for now, and have not been publicly released. (By the way, that vaporware link is to FOLDOC. If you remember FOLDOC or The Jargon File from ~30 years ago, give yourself a high-five!)

OpenAI President pointing to other aspects of AI safety (6/Feb/2023)

Here’s another exclusive, based on a throwaway comment by OpenAI President Greg Brockman (‘Was reflecting today on how far AI alignment has come, from being entirely a fringe concern to a critical product feature. Other aspects of AI safety out of limelight (e.g. capability prediction, monitoring for power-seeking, supervising self-improving agents); may change too!’).

He has mentioned three other important aspects of AI safety here:

Capability prediction.

Alan’s notes: Models are getting smarter, and more powerful. They can do things that they shouldn’t be able to do, like learning maths without being ‘taught’. AI capabilities are growing faster than even Moore’s Law, with predicted performance exploding. See my article on DeepMind Chinchilla and my report on Google Pathways for emerging capabilities.

Monitoring for power-seeking.

Alan’s notes: Remember Jarvis and Ultron from Marvel’s Iron Man series? It seems out of reach, and yet it is something to look out for in this rapidly evolving space. ‘Rapidly evolving’ means more than 100,000 new Transformer models on Hugging Face last year, 100 million new ChatGPT users within 8 weeks, and multi-billion-dollar AI labs in the US and China jockeying for position…

Supervising self-improving agents.

Alan’s notes: Anthropic’s RL-CAI 52B, announced in Dec/2022, was one of the first models to use Reinforcement Learning from AI Feedback (rather than Reinforcement Learning from Human Feedback). (See my article and video on Anthropic’s RL-CAI 52B model). This is a huge milestone. AI models have been self-improving in other ways, too. Giving GPT access to the web via Bing Chat is one example. What are these models doing? How are they making connections? In Mar/2022, OpenAI said that ‘…the model is a big black box, we can’t infer its beliefs.’ Researchers at Anthropic and Allen AI have gone some way in beginning to mitigate this issue using toy model simulations, but we still don’t know…

The Interesting Stuff

Stanford: GPT-3.5 mental state compared with 9-year-old child (Feb/2023)

…models published before 2022 show virtually no ability to solve [theory of mind] ToM tasks. Yet, the January 2022 version of GPT-3 (davinci-002) solved 70% of ToM tasks, a performance comparable with that of seven-year-old children. Moreover, its November 2022 version (davinci-003), solved 93% of ToM tasks, a performance comparable with that of nine-year-old children.

Read the paper: https://arxiv.org/abs/2302.02083

Read my summary of AI + IQ: https://lifearchitect.ai/iq-testing-ai/

Microsoft’s Bing Chat prompt (10/Feb/2023)

Exclusive. This is the entire Microsoft Bing Chat prompt in plain text.

It is very similar to the Leta AI prompt and the DeepMind Sparrow prompt.

At the time of writing, this full text does not appear anywhere else on the web.

Take a look: https://lifearchitect.ai/bing-chat/

Additionally, here are some comparisons between responses from OpenAI ChatGPT and Microsoft Bing Chat: https://www.businessinsider.com/chatgpt-ai-compared-to-new-bing-search-chatbot-answers-2023-2

WordPress testing GPT-3 and DALL-E 2 (Jan/2023)

WordPress is the largest content management platform in the world, powering 36% of the top 1 million websites, including LifeArchitect.ai. Under the codename ‘Jetpack AI’, WordPress is now testing content creation using GPT-3 + DALL-E 2:

We have introduced 2 new blocks for WordPress.com customers in partnership with OpenAI:

AI Paragraph will auto-complete your post. Insert the block to generate a new paragraph from your existing content, or craft an example post from only a title

AI Image will let you create new images based on the text prompt.

Read more: https://wordpress.com/forums/topic/jetpack-ai/ (archive)

How much is ChatGPT costing OpenAI? (10/Feb/2023)

Exclusive. We have to make a few assumptions to get an answer here, and it’s a bit of fun!

Assumptions:

Users. As of Jan/2023, ChatGPT has 100M monthly unique users according to UBS. Ark Invest gives a different figure of 10M unique daily users. We’ll use Ark’s figure.

Cost. Inference is expensive. ChatGPT’s inference costs are ‘eye-watering’ according to OpenAI’s CEO. In a reply to Elon Musk, he later estimated the cost at ‘single-digit cents per chat’. GPT-3.5 is billed out at 2c per 750 words (1,000 tokens) for both prompt + response (question + answer). This includes OpenAI’s small profit margin, but it’s a decent starting point. We’ll double this to 4c for a standard conversation.

So, as of Jan/2023…

Every day, ChatGPT costs the company 10M users * 4c = $400,000 (assuming one standard conversation per daily user).

Every month (30 days), ChatGPT costs the company $400,000 * 30 = $12,000,000.

Not a bad marketing budget!

Here’s my link to this calculation.
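
The back-of-envelope estimate above can be reproduced in a few lines of Python. This is only a sketch of the same arithmetic: the constant and function names are hypothetical, and the ‘one conversation per user per day’ and ‘30-day month’ figures are assumptions implied by the numbers rather than stated data.

```python
# All inputs are the estimate's assumptions, not measured figures.
DAILY_USERS = 10_000_000       # Ark Invest's figure for unique daily users
CENTS_PER_1K_TOKENS = 2        # GPT-3.5 price per 1,000 tokens (~750 words)
CONVERSATION_MULTIPLIER = 2    # a standard conversation is costed at double (4c)
CHATS_PER_USER_PER_DAY = 1     # assumption: one conversation per user per day
DAYS_PER_MONTH = 30            # assumption: a 30-day month


def daily_cost_dollars():
    """Estimated daily cost in whole dollars, computed in integer cents."""
    cents = (DAILY_USERS * CHATS_PER_USER_PER_DAY
             * CENTS_PER_1K_TOKENS * CONVERSATION_MULTIPLIER)
    return cents // 100


def monthly_cost_dollars():
    """Estimated monthly cost in whole dollars."""
    return daily_cost_dollars() * DAYS_PER_MONTH


print(f"Daily:   ${daily_cost_dollars():,}")    # Daily:   $400,000
print(f"Monthly: ${monthly_cost_dollars():,}")  # Monthly: $12,000,000
```

Changing any one constant (for example, using two conversations per user per day) shows how sensitive the final figure is to each assumption.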

Turnitin with ChatGPT detection capability (13/Jan/2023)

As noted several times in The Memo 10/Dec/2022 edition, it is next to impossible to detect text written by AI. However, with academics howling, Turnitin are giving it a go. Turnitin is used by over 34 million students in over 15,000 institutions in 153 countries, including at our Aussie universities.

Read more: https://www.turnitin.com/blog/sneak-preview-of-turnitins-ai-writing-and-chatgpt-detection-capability

David Guetta uses ChatGPT + uberduck.ai to emulate Eminem (4/Feb/2023)

If you think this AI stuff is getting popular now that it is hitting so many different industries, then you’re right…

Lyrics by ChatGPT: https://chat.openai.com/

Voice by Uberduck (sign up): https://app.uberduck.ai/speak#mode=tts-basic&voice=zwf

Watch the video via Twitter:

Leta is back for Episode 66 (6/Feb/2023)

Now in her third year, she’s still here with the original and raw GPT-3 davinci engine from 2020. She looks exactly the same, but I look a bit different in this episode…

Watch:

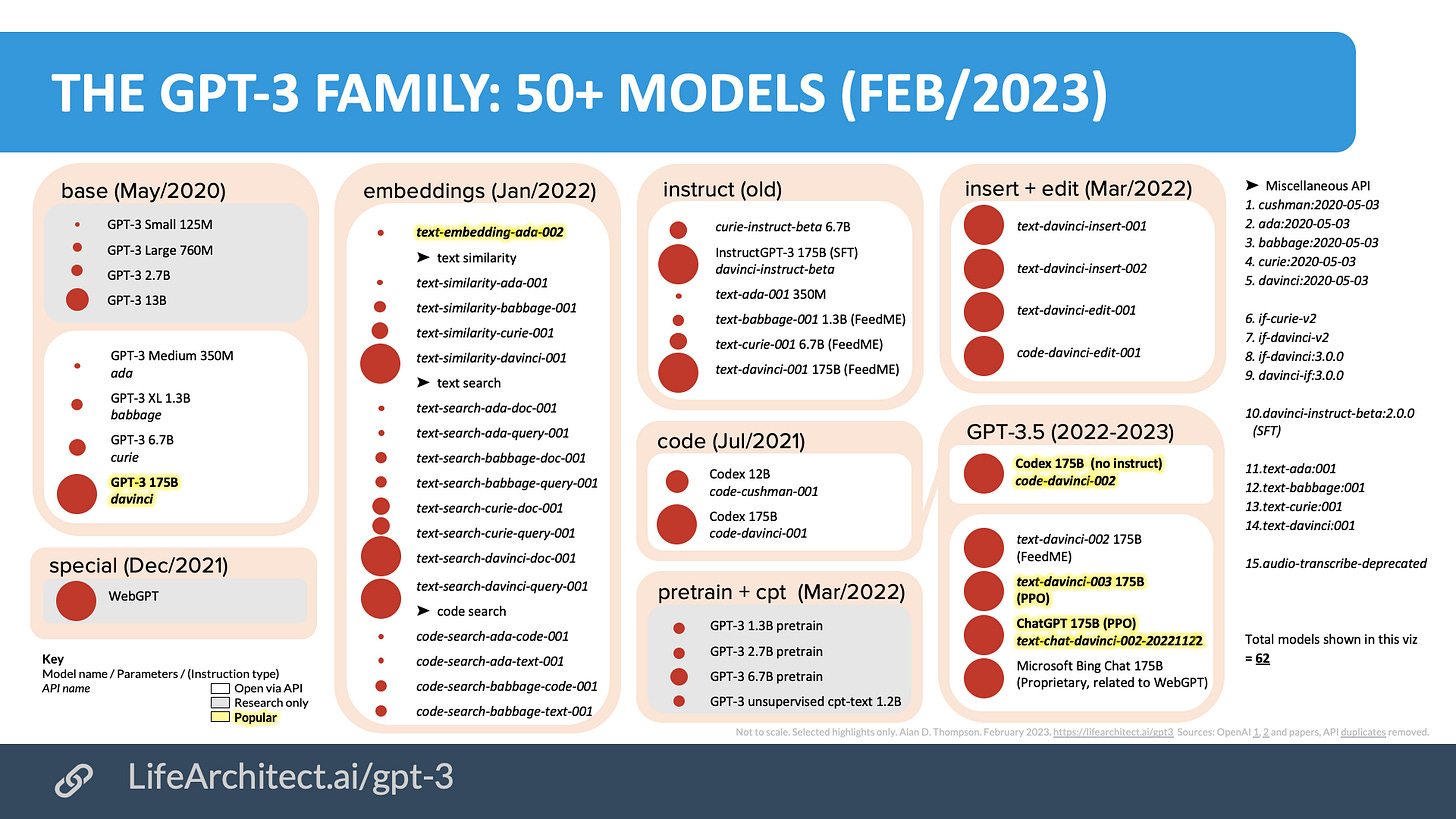

The GPT-3 Family: 50+ models (Feb/2023)

Exclusive. This is the most resource-intensive visualization I’ve ever put together. It should have been easy, but OpenAI’s documentation is… developing.

This draft is being provided to paid readers of The Memo early, and will be published later in the week. After removing duplicates, we found a total of 62 unique models.

Corrections welcomed for this one by replying to this email (though please note all primary sources: 1, 2, 3). Thanks to Joanna for comprehensive input and review.

Link to image and PDF download: https://lifearchitect.ai/gpt-3

Xpeng ‘Level 4’ self-driving cars for 100 cities in China (Feb/2023)

I’ve been riding Level 4 Waymos driven by AI here in Phoenix (I sit in the back seat, with nobody sitting in either of the front seats), and it is still a very strange experience! As usual, China is steadily advancing beyond even the US when it comes to some of this AI tech.

[Xpeng’s CEO] is making a bold bet on full self driving, a technology even Elon Musk’s electric car pioneer Tesla Inc. hasn’t been able to perfect despite years of work. He is aiming to snare at least 20% of what he calls the “all-intelligent vehicle” market, referring to cars “infinitely close to Level 4 autonomous driving” where the vehicle can handle complex urban situations…

As part of its push into autonomous driving, a key task for the second half of this year will be to develop a version of self driving that doesn’t rely on high-definition maps and expand that into 50 to 100 cities, He said. From 2024, the company aims to upgrade its intelligent systems to not only drive the car, but also learn the driving habits of owners and make automatic adjustments.

“You will feel very comfortable because you like the driving logic and mode that copies yourself,” He said. All vehicles will eventually be equipped with full autonomous driving technology as standard and in the long run, these intelligent features may become a main profit source for the company, he added.

Read more via Bloomberg: https://archive.is/k8HxL

Toolformer: Meta AI adds actions to LLMs (9/Feb/2023)

LMs can teach themselves to use external tools via simple APIs and achieve the best of both worlds. We introduce Toolformer, a model [using GPT-2 and GPT-J] trained to decide which APIs to call, when to call them, what arguments to pass, and how to best incorporate the results into future token prediction.

Read the paper: https://arxiv.org/abs/2302.04761

Compare with GPT + LangChain + API calls to Wolfram Alpha and more (my video with James Weaver).
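
To make the ‘which API, what arguments, how to incorporate the result’ idea a little more concrete, here is a minimal Python sketch. It assumes the bracketed inline call notation shown in the Toolformer paper’s examples, with ‘->’ standing in for the arrow. The names TOOLS, calculator and run_tool_calls are hypothetical and chosen for illustration only. The sketch shows how a call marker that a model has already written could be executed and filled in; it does not show how the model is trained to write that marker.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator tool supports.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expression):
    """Evaluate +, -, *, / on plain numbers without using eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError(f"unsupported expression: {expression!r}")
    return walk(ast.parse(expression, mode="eval").body)


# Hypothetical tool registry: tool name -> Python function.
TOOLS = {"Calculator": calculator}

# Matches markers such as [Calculator(400 / 1400)].
CALL = re.compile(r"\[(\w+)\((.*?)\)\]")


def run_tool_calls(text):
    """Replace each [Tool(args)] marker with [Tool(args) -> result]."""
    def fill(match):
        name, args = match.group(1), match.group(2)
        if name not in TOOLS:
            return match.group(0)  # leave unknown tools untouched
        result = TOOLS[name](args)
        return f"[{name}({args}) -> {result:.2f}]"
    return CALL.sub(fill, text)


draft = "Out of 1400 participants, 400 (or [Calculator(400 / 1400)] 29%) passed."
print(run_tool_calls(draft))
# Out of 1400 participants, 400 (or [Calculator(400 / 1400) -> 0.29] 29%) passed.
```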

Google discovers that GPT models hallucinate a lot (8/Feb/2023)

Google’s Bard was prompted with the question, ‘What new discoveries from the James Webb Space Telescope can I tell my 9-year old about?’

Bard provided two correct answers, and one not-so-correct answer. Bard wrote that the telescope took the very first pictures of a planet outside our solar system. In fact, the first pictures of these "exoplanets" were taken by the European Southern Observatory's Very Large Telescope. (via Investor.com)

Microsoft is definitely being advised by very informed engineers at OpenAI, but they missed this one in a big way. I’m no stranger to large language models providing incorrect data that they pass off as fact! Leta AI embarrassed me in front of the World Gifted Conference back in Apr/2021 with this incorrect answer (timecode), and more recently, ChatGPT + Wolfram Alpha provided inaccurate working of a maths problem in another of my videos (timecode).

By design, and in the simplest terms, large language models seek to statistically predict the next word in a sentence. This means that they make up facts a lot, just so they can complete the next word. (Compare this with your favourite toddler and their storytelling!)
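
A toy example helps show why ‘predict the next word’ leads to confident mistakes. The Python sketch below is a simple word-pair (bigram) counter, which is far cruder than the Transformer networks behind GPT or LaMDA; the tiny corpus and the function names are made up for illustration. The point is only that the predictor returns whatever continuation was most frequent in its training text, with no check on whether it is true.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training text: 'planet' follows 'a' more often than 'galaxy'.
corpus = (
    "the telescope took the first pictures of a galaxy . "
    "the telescope took the first pictures of a planet . "
    "the telescope took the first pictures of a planet ."
)
model = train_bigrams(corpus)

print(predict_next(model, "a"))          # planet
print(predict_next(model, "telescope"))  # took
```

The predictor answers ‘planet’ because that word was the most common continuation, not because anything verified the claim.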

Many labs have worked around this through various mechanisms, including reinforcement learning, an additional layer of checking, and even an external connection to another source for verification. The most successful at this so far seems to be Meta AI’s Galactica (my video) which provides citations, and even this model was not quite good enough for prime time.

Toys to Play With

Oscar: Bedtime stories for iOS (5/Feb/2023)

The mobile app that creates personalized bedtime stories for your children featuring them as the main characters. Say goodnight to boring stories and hello to endless imagination with Oscar.

Your child will love being the star of their own bedtime story. Make bedtime a special time for your family.

The tech is GPT-3 davinci + Midjourney (currently pre-generated) + React Native + Firebase + Express.js.

Give it a go with 3 free stories: https://oscarstories.com/

(A quick reminder that I never do sponsorships or promotions, paid or otherwise. The Memo is exclusively bleeding-edge tech that interests me… and you!)

YouChat updated to version 2 (6/Feb/2023)

‘Introducing YouChat 2.0, conversational AI for search with more reliable info, rich media, and visual apps... Better answers, Accomplish more, Expand research, Continue conversations, Rich visuals, Better accuracy’.

Try it out with a conversation about The Memo!

Opera browser AI (10/Feb/2023)

Opera is planning to add popular AI-generated content services to the browser sidebar. On top of that, the company is also working on augmenting the browsing experience with new features that will interact with these new generative-AI-powered capabilities. Among the first features to be tested is a new “Shorten” button in the address bar that will be able to use AI to generate short summaries of any webpage or article.

Read the press release: https://blogs.opera.com/news/2023/02/opera-aigc-integration/

Next

Now that executives and board-level leaders are seeing the phenomenal power of post-2020 AI, the big AI labs are panicking (even though they’ve been carefully working on this stuff for years!).

Google (creator of PaLM 540B) and Microsoft (creator of MT-NLG 530B) created the earliest and largest models 9-15 months ago, so it’s incredibly strange to see them rushing into the ‘commercialization party’ so late in the game.

It’s going to get very loud in here…

All my very best,

Alan

LifeArchitect.ai
