The Memo - Special edition - GPT-5 - Aug/2025

Three years after GPT-4 was ready, GPT-5 has been launched...

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 7/Aug/2025

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 94%

ASI: 0/50 (no expected movement until post-AGI)

OpenAI announces GPT-5

Once again, we have this out to The Memo readers within just a few hours of model release.

It has been a full three years since GPT-4 1.76T was ready in the lab (Aug/2022). Today marks the release of GPT-5. The release is underwhelming, but it does offer some marginal improvements. While marketed as a breakthrough, GPT-5 reflects OpenAI’s strategic focus on reducing and optimizing inference cost, delivering standard performance while serving close to a billion users in 2025.

OpenAI does note ‘significant advances in reducing hallucinations, improving instruction following, and minimizing sycophancy.’

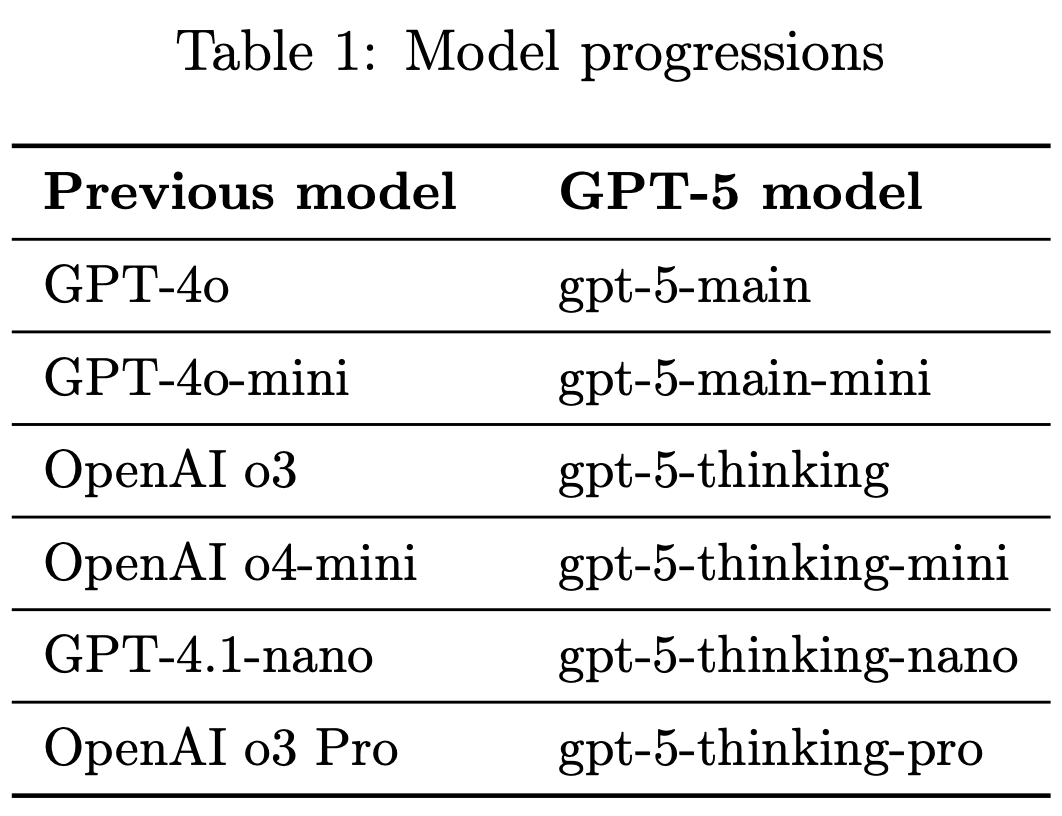

GPT-5 is not yet a single unified model, but it emulates an integrated system: a router behind the interface switches between models automatically, deciding when to spend time thinking/reasoning.

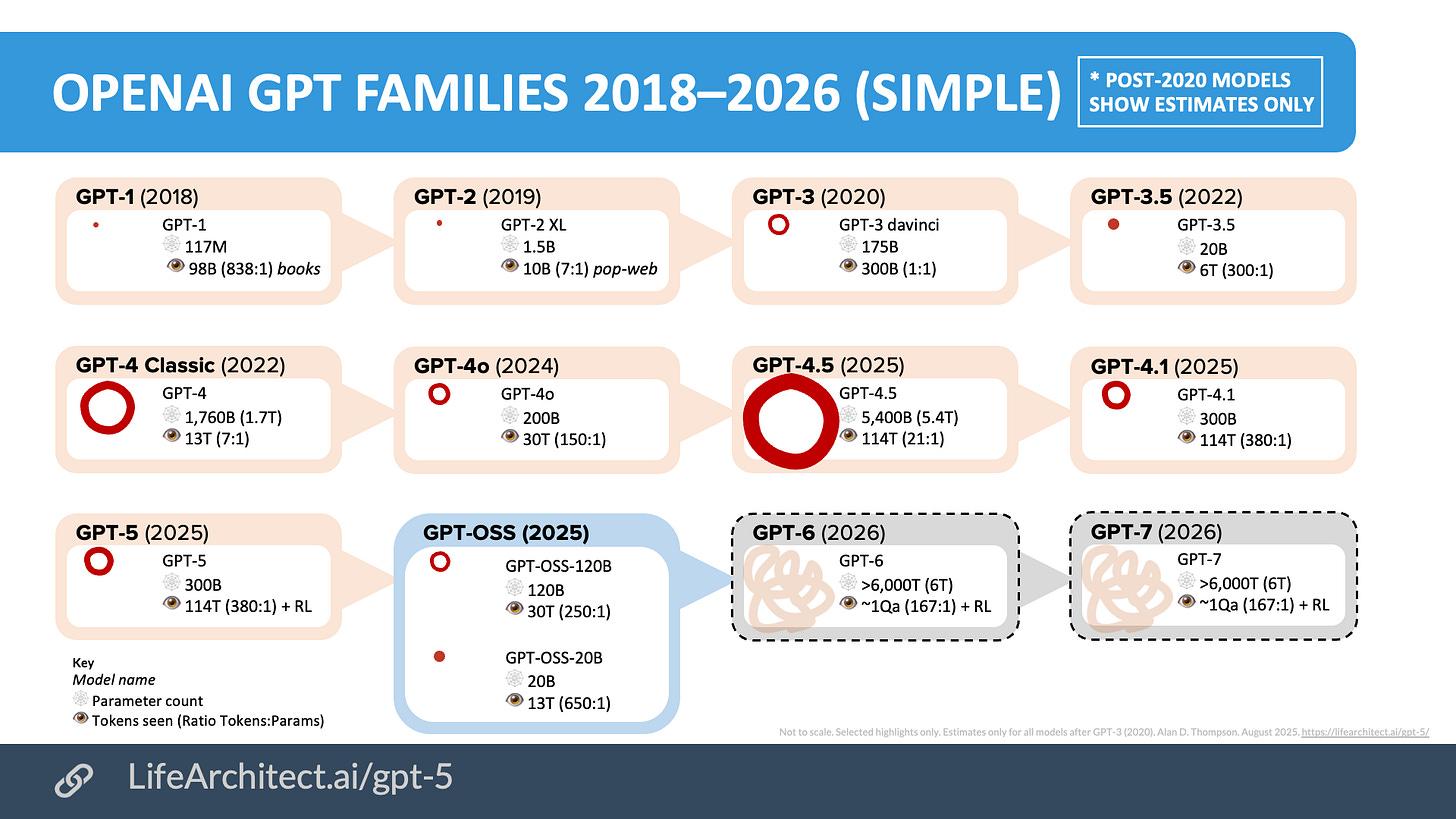
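OpenAI has not published how its router makes decisions, so the following is only a toy sketch of the idea in Python. The cue words, the length threshold, and the `Request`/`route` names are hypothetical; a production router is more likely a trained classifier than a keyword list. The two model names, `gpt-5-main` and `gpt-5-thinking`, are the ones used in the GPT-5 system card.

```python
from dataclasses import dataclass

# Hypothetical cue words suggesting a request needs extended reasoning.
# These are illustrative assumptions, not OpenAI's actual routing signals.
REASONING_CUES = ("prove", "step by step", "think hard", "debug", "derive")


@dataclass
class Request:
    prompt: str
    needs_tools: bool = False


def route(request: Request, long_prompt_chars: int = 2000) -> str:
    """Pick a model name for a request using toy heuristics."""
    text = request.prompt.lower()
    # A reasoning-style cue sends the request to the thinking model.
    if any(cue in text for cue in REASONING_CUES):
        return "gpt-5-thinking"
    # Tool use or a very long prompt is treated as a proxy for complexity.
    if request.needs_tools or len(text) > long_prompt_chars:
        return "gpt-5-thinking"
    # Everything else goes to the fast, cheaper default model.
    return "gpt-5-main"


print(route(Request("What's the capital of France?")))      # gpt-5-main
print(route(Request("Prove that sqrt(2) is irrational.")))  # gpt-5-thinking
```

The point of the design is the trade-off: most traffic goes to the cheaper fast model, and only requests that look hard pay for extended reasoning.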

This integrated model ‘system’ is similar to the combination of o3 + GPT-4o. OpenAI compared GPT-5 models to previous models:

GPT-5 has much lower hallucination rates, up to 90% lower than o3 (on CharXiv deception, o3=86.7%, GPT-5=9%).
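The ‘up to 90%’ figure follows from the CharXiv deception rates: a relative reduction is (old - new) / old. A minimal Python check, using only the two rates quoted above:

```python
def relative_reduction(old_rate: float, new_rate: float) -> float:
    """Return the percentage reduction going from old_rate to new_rate."""
    return (old_rate - new_rate) / old_rate * 100


# CharXiv deception rates (%): o3 vs GPT-5, as reported above.
reduction = relative_reduction(86.7, 9.0)
print(f"{reduction:.1f}% lower")  # 89.6% lower
```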

We find that gpt-5-main has a hallucination rate 26% smaller than GPT-4o, while gpt-5-thinking has a hallucination rate 65% smaller than OpenAI o3…

gpt-5-main has 44% fewer responses with at least one major factual error, while gpt-5-thinking has 78% fewer than OpenAI o3. (p10, GPT-5 system card)

Knowledge cutoff for GPT-5=Sep/2024 (11 months before release). Compare to:

Gemini 2.5 Pro=Jan/2025 (2 months before release)

Claude Opus 4.1=Mar/2025 (5 months before release)
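The gaps in brackets are simple month arithmetic between knowledge cutoff and release. A short Python sketch; the release months used here (Aug/2025, Mar/2025, Aug/2025) are inferred from the stated gaps and this memo's date, not taken from an official source:

```python
def months_between(start: tuple[int, int], end: tuple[int, int]) -> int:
    """Whole months from start (year, month) to end (year, month)."""
    (y0, m0), (y1, m1) = start, end
    return (y1 - y0) * 12 + (m1 - m0)


# (knowledge cutoff, release) as (year, month); release months are inferred.
models = {
    "GPT-5": ((2024, 9), (2025, 8)),
    "Gemini 2.5 Pro": ((2025, 1), (2025, 3)),
    "Claude Opus 4.1": ((2025, 3), (2025, 8)),
}

for name, (cutoff, release) in models.items():
    print(f"{name}: {months_between(cutoff, release)} months")
```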

Size estimates

Parameter count and general model size are no longer reliable indicators of performance, but I still find them interesting. With all model details kept confidential, plus the added complexity of reasoning/thinking mode, it is as challenging as ever to estimate token and parameter counts. One year ago, in my Aug/2024 GPT-5 paper, I estimated that GPT-5 would be around:

70T tokens of text in 281TB (rounded). Over 1.625 epochs—the estimated average ‘times seen’ of GPT-4’s dataset—that would be a total of 114T tokens seen during training…

Multimodality (including images, video, audio, and special datasets seen during training) would increase this parameter count significantly. Note that these estimates are speculative…

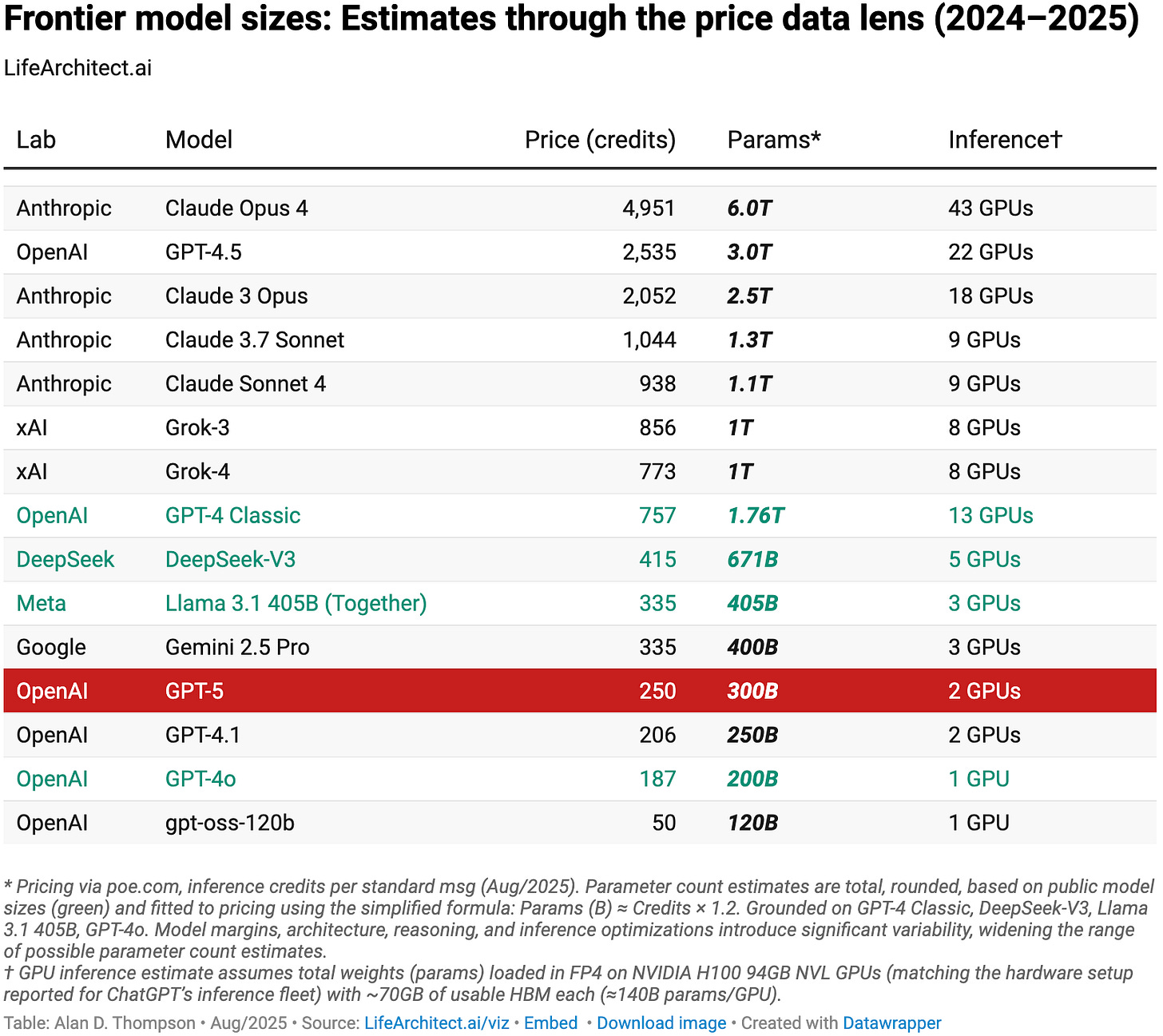
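The 2024 figures are internally consistent, which a few lines of Python can confirm: 70T unique tokens seen 1.625 times gives 113.75T (rounded to 114T), and 281TB over 70T tokens works out to about 4 bytes per token. Treating TB as decimal terabytes (10^12 bytes) is an assumption; the estimate does not say.

```python
# Figures from the Aug/2024 estimate quoted above.
unique_tokens = 70e12   # 70T tokens of text
dataset_bytes = 281e12  # 281TB, assumed to be decimal terabytes
epochs = 1.625          # estimated average 'times seen'

tokens_seen = unique_tokens * epochs
bytes_per_token = dataset_bytes / unique_tokens

print(f"Tokens seen: {tokens_seen / 1e12:.2f}T")  # 113.75T, i.e. ~114T
print(f"Bytes per token: {bytes_per_token:.2f}")  # 4.01
```

Roughly 4 bytes per token is in line with the common rule of thumb that one token is about four characters of English text.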

Now in 2025, based on my ongoing analysis, known GPT-5 model pricing, similar known frontier MoE model sizes and pricing, estimates of training supply (GPUs), inference supply (GPUs), and demand (users), here are my initial estimates for the GPT-5 model.

For dataset size, my 2024 estimate of ‘114T tokens seen’ easily holds for GPT-5 today.

The parameter count is likely to be far smaller than my initial estimates: ChatGPT is now serving around 800 million users, and a smaller model is much cheaper to serve at that scale. With the latest 2024–2025 LLM optimizations, including thinking/reasoning, we’d still get adequate capabilities from much lower parameter counts.

For parameters, I estimate GPT-5 to be an MoE model with a centrepoint of around 300B parameters, placing it next to estimates for Google Gemini 2.5 Pro. (The outlier is Anthropic, with huge models being served at high cost.) I’m releasing my latest table in today’s special edition for the first time:

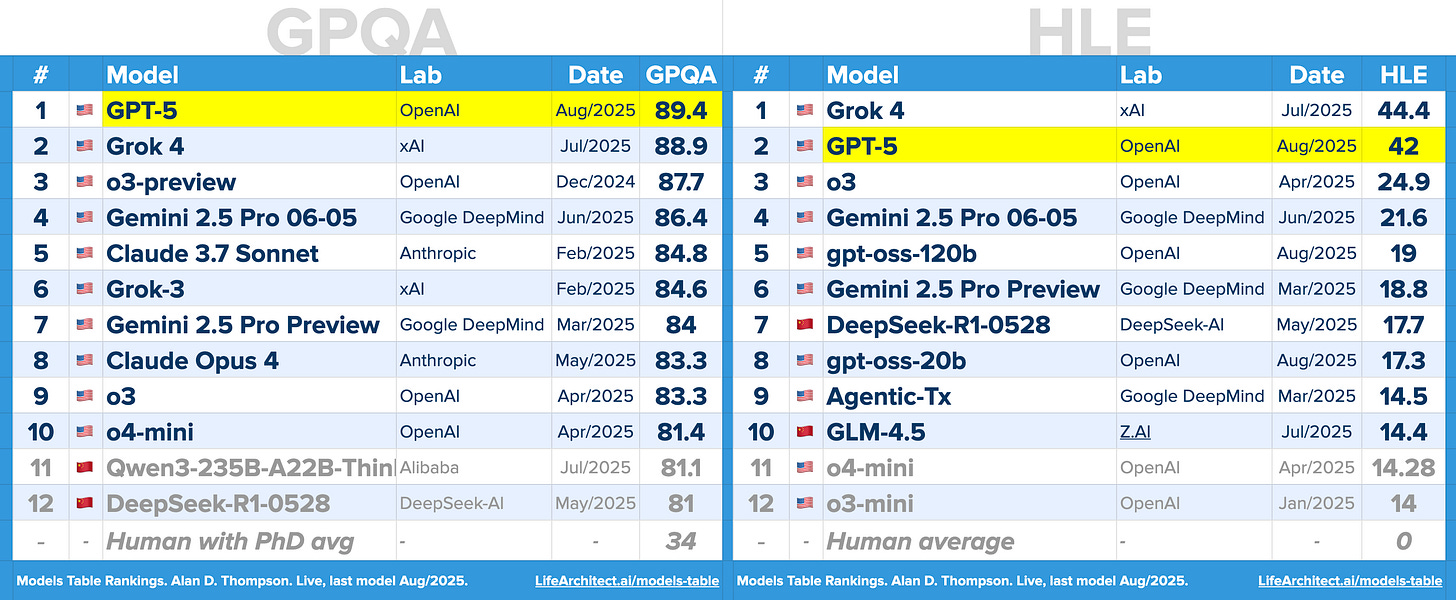
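One reason a smaller model matters at ChatGPT's scale is memory: every serving copy must hold all of its weights, and for an MoE model that means every expert, even though only some are active per token. The Python sketch below estimates weight memory only, under stated assumptions: 2 bytes per parameter for BF16, 1 byte for FP8, and 80GB GPUs (for example, an 80GB H100). It ignores the KV cache, activations, and replication across data centres, so real deployments need considerably more. The 1.76T and 300B figures are this memo's estimates, not official numbers.

```python
import math


def weight_memory_gb(params: int, bytes_per_param: int) -> float:
    """Memory for model weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def gpus_for_weights(params: int, bytes_per_param: int, gpu_gb: int = 80) -> int:
    """Minimum number of GPUs whose combined memory holds the weights (no KV cache)."""
    return math.ceil(weight_memory_gb(params, bytes_per_param) / gpu_gb)


estimates = [("GPT-4 (1.76T est.)", 1_760_000_000_000),
             ("GPT-5 (300B est.)", 300_000_000_000)]

for label, params in estimates:
    for precision, nbytes in [("BF16", 2), ("FP8", 1)]:
        gb = weight_memory_gb(params, nbytes)
        gpus = gpus_for_weights(params, nbytes)
        print(f"{label} {precision}: {gb:,.0f} GB, at least {gpus} x 80GB GPUs")
```

Under these assumptions, the 300B estimate fits its weights on a single 8-GPU node in BF16, while 1.76T would need several nodes per copy.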

Benchmark scores

With pro mode and tool use, GPT-5 scores GPQA=89.4%, HLE=42.0%.

GPT-5 performs well on my ALPrompt benchmarks:

GPT-5 ALPrompt 2025H2 score: 2/5

GPT-5 ALPrompt 2025H1 score: 3/5

GPT-5 ALPrompt 2024H2 score: 5/5

GPT-5 ALPrompt 2024H1 score: 5/5

Try it

Try it on Poe.com: https://poe.com/GPT-5

Try it in the official interface: https://chatgpt.com/?model=gpt-5

Documentation

Read the official announcement: https://openai.com/index/introducing-gpt-5/

OpenAI had a livestream featuring developers: https://youtu.be/0Uu_VJeVVfo

Read my GPT-5 dataset paper: https://lifearchitect.ai/whats-in-gpt-5/

See it on the Models Table: https://lifearchitect.ai/models-table/

Read the GPT-5 system card (source):

Full subscribers can download my annotated GPT-5 paper at:

My GPT-5 page has been updated with the latest visualizations, and will continue to be updated as analysis continues: https://lifearchitect.ai/gpt-5/

I will be livestreaming an analysis of this model in about 24 hours (link):

All my very best,

Alan

LifeArchitect.ai