To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 20/Feb/2025

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 88 ➜ 90%

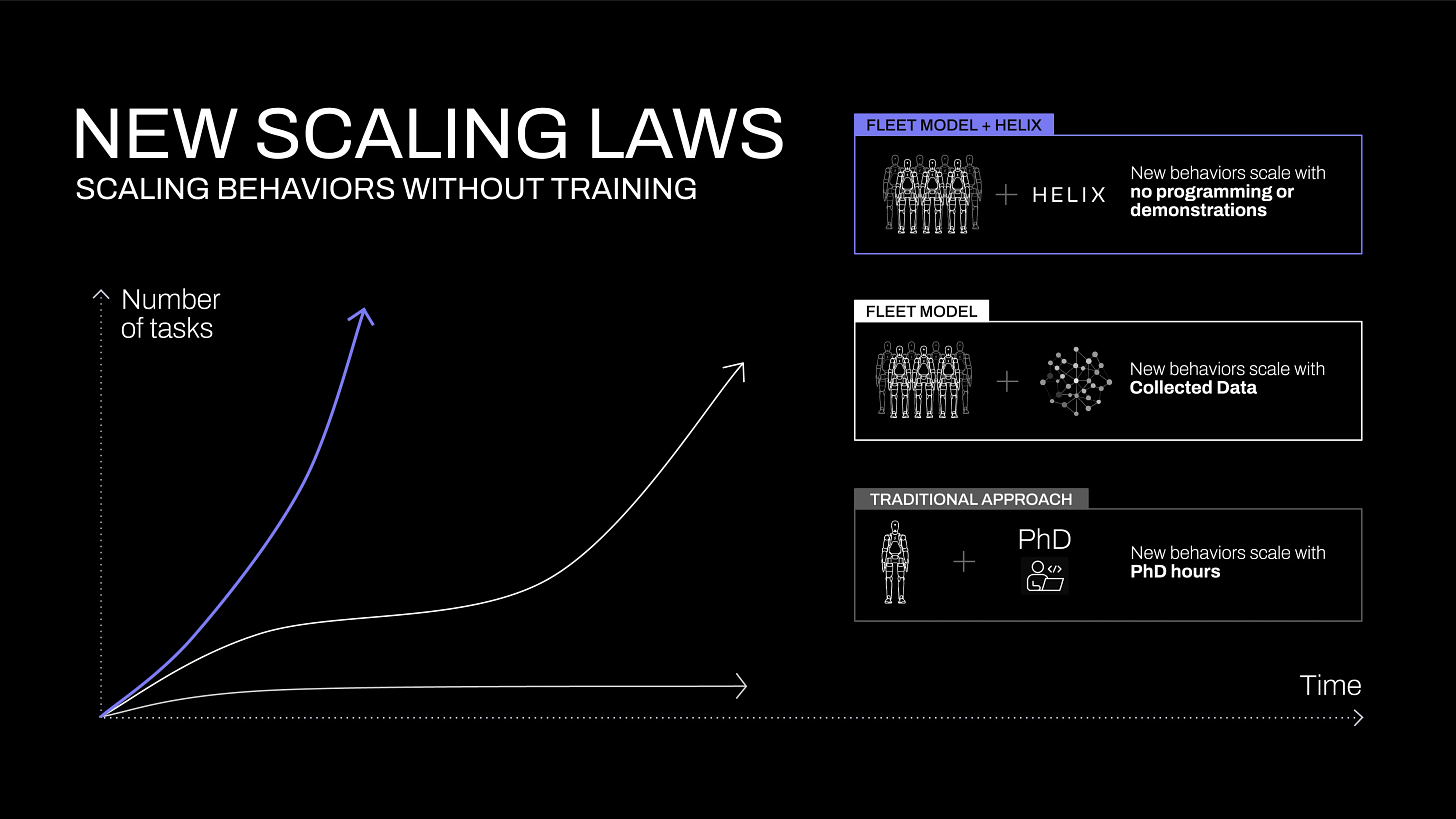

ASI: 0/50 (no expected movement until post-AGI)

This morning, just before 1AM here in Adelaide (6:11AM on 20/Feb/2025 in California), Figure AI announced Helix, a Vision-Language-Action system for generalist humanoid control where ‘new skills that once took hundreds of demonstrations could be obtained instantly just by talking to robots in natural language.’

Paper, announce, video, Models Table

Watch the video (link):

While the concepts are similar to the generalist model DeepMind Gato (‘cat’) from nearly three years ago (May/2022 paper and my video), Figure’s Helix system is demonstrated in a real, commercially available humanoid robot.

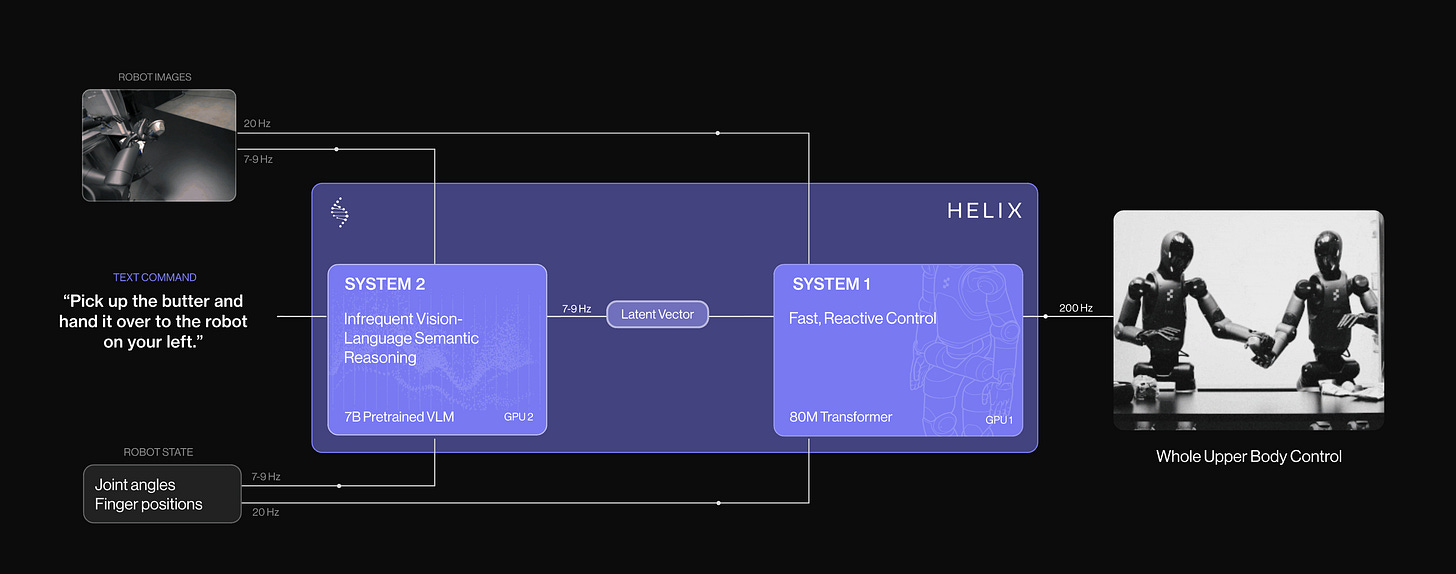

The Helix system uses two models: an 80M-parameter latent-conditional visuomotor transformer called S1, and an open-source 7B-parameter VLM (vision-language model) backbone called S2.

Emergent ‘Pick up anything’.

Zero-shot multi-robot coordination.

Uses a single set of neural network weights to learn all behaviors—picking and placing items, using drawers and refrigerators, and cross-robot interaction—without any task-specific fine-tuning…

We are eager to see what happens when we scale Helix by 1,000x and beyond.

AGI is nearly here

AGI (artificial general intelligence, a machine that performs at the level of an average human) is nearly here. I believe that the full definition of AGI must include physical embodiment, and we are now seeing that across the industry, with labs like OpenAI, Meta AI, and Apple joining the humanoid robot race in 2025. Here’s what happens next:

AGI achieved internally: A re-written story (GPT-6 or Gemini 4)

I’d like to invite you to gift a subscription to someone in your world who needs AI that matters, as it happens, in plain English:

All my very best,

Alan

LifeArchitect.ai