The Memo - 7/Sep/2022

Tang Yu as first AI CEO, more GPT-4 rumors, the Stable Diffusion dataset database, and much more!

FOR IMMEDIATE RELEASE: 7/Sep/2022

Welcome back to The Memo.

This week saw a great quote from OpenAI researcher, Dr Mohammad Bavarian (6/Sep/2022):

With the current pace of development in AI and as demos turn into full featured products and services, I can see the overall US GDP growth rising from recent avg 2-3% to 20+% in 10 years... The current advances we are seeing with Image Synthesis and LLMs are just the beginning. And most of the refinements needed to make them into reliable products haven't been done yet. And they will be done, people have started working on it... I won't comment on OpenAI's ambitions, my opinion is its impact is likely to be larger than any of the above efforts! But it's not a competition, there are lots of different wonderful things to be built and a huge pie to go around.

It’s sometimes difficult to ascertain who to listen to in this noisy world, but OpenAI’s Dr Mohammad Bavarian is credible, and his comments are well-informed. OpenAI very much has the ear of the US government, affecting policy. OpenAI’s CEO, Sam Altman, participated in the secretive, off-the-record Bilderberg meeting in Washington DC in Jun/2022. Former OpenAI researcher Jack Clark was recently elected to serve on the National AI Advisory Committee, which advises the USA’s National AI Initiative Office and the President of the USA (25/Apr/2022). I expect that OpenAI’s ‘inside track’ means that upcoming AI-driven GDP increases of 20% are feasible, and this number aligns with forecasts from ARK, McKinsey, and elsewhere.
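For a sense of scale, here is a rough sketch comparing how long an economy takes to double at the 'recent avg 2-3%' growth rate versus a 20% rate. It assumes a constant annual growth rate compounding every year, which is an illustrative simplification and not part of the quote above; the function name `doubling_time` is hypothetical.

```python
import math

def doubling_time(annual_growth):
    """Years needed to double at a constant annual growth rate (assumed)."""
    return math.log(2) / math.log(1 + annual_growth)

# 2.5% stands in for the "recent avg 2-3%"; 20% is the figure from the quote.
for rate in (0.025, 0.20):
    print(f"{rate:.1%} growth: doubles in about {doubling_time(rate):.1f} years")
```

Under that assumption, the doubling time drops from roughly 28 years to under 4 years.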

The BIG Stuff

There was nothing extraordinarily big announced in this period… GPT-4 and similar models are right around the corner. This prediction site gave the average estimated release date of GPT-4 as 29/Aug/2022, which means the release is now overdue by a few days. More recently, rumors suggest that GPT-4’s release date will be between Dec/2022 and Feb/2023...

The Interesting Stuff

Documenting the Stable Diffusion dataset (Aug/2022)

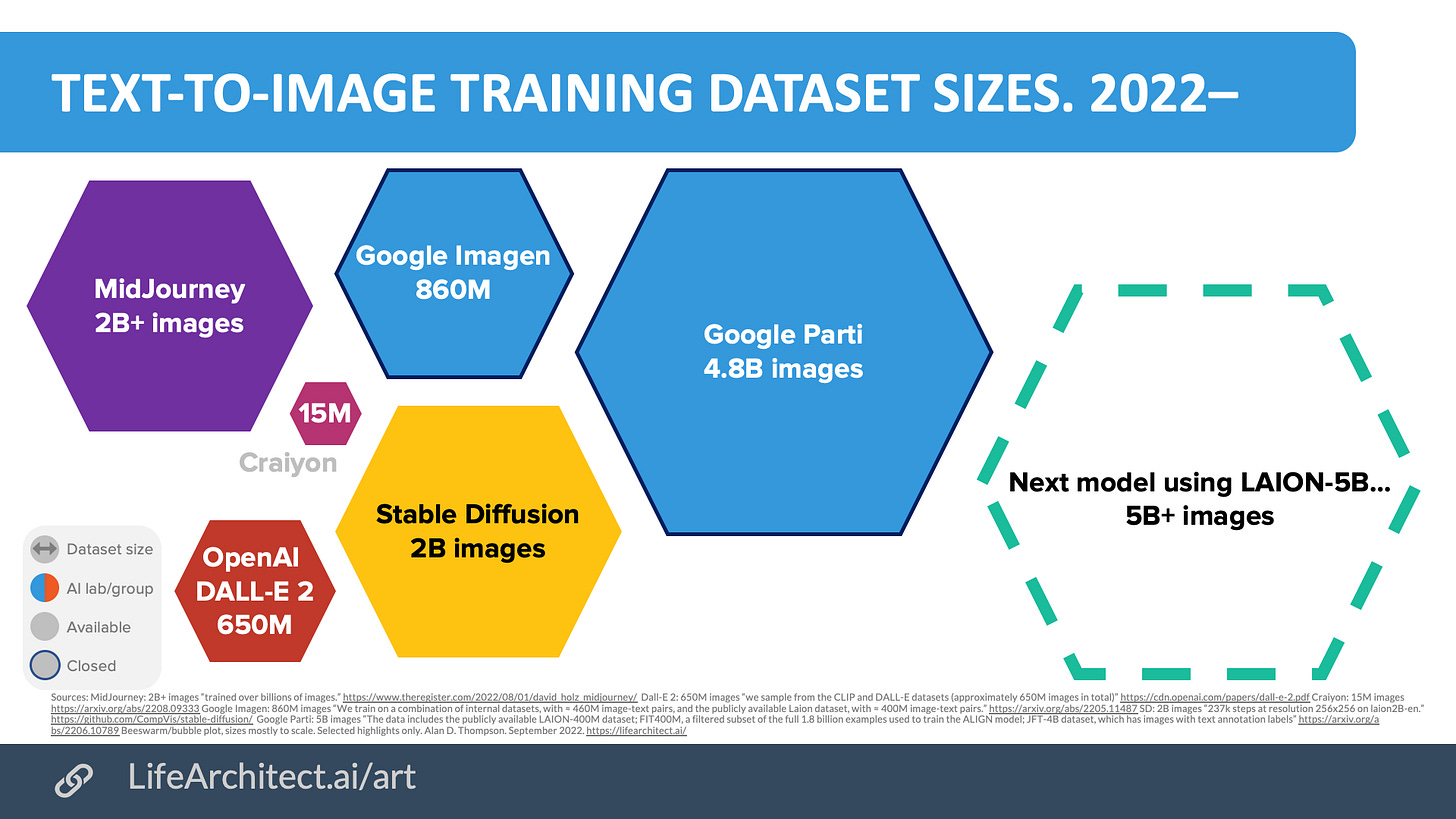

Stable Diffusion is one of the largest text-to-image models right now:

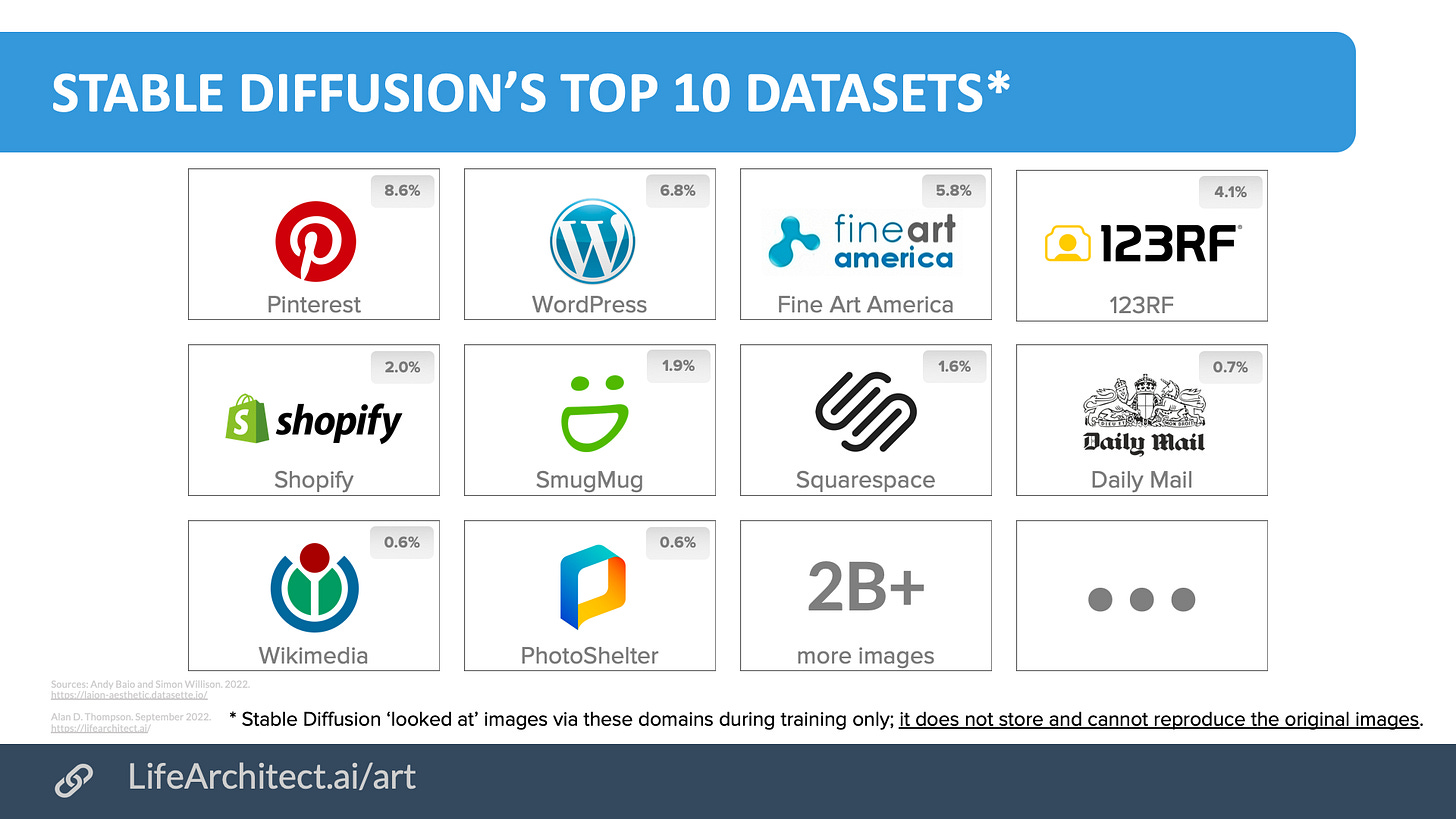

Andy Baio and Simon Willison grabbed the data for over 12 million images used to train Stable Diffusion, and used the Datasette project to make a data browser for the images. I’m most interested in the top domains used to source the images used for training…

At the risk of stirring up debate among those who feel threatened by artistic AI, I found that a rough top 10 of dataset sources for the Stable Diffusion text-to-image model (after filtering; the LAION-Aesthetic dataset) looks like this:

Pinterest (image sharing) 8.6%.

WordPress (general blogs) 6.8%.

Fine Art America (art marketplace) 5.8%.

123RF.com (stock photos) 4.1%.

Shopify (e-commerce platform) 2.0%.

SmugMug (image sharing) 1.9%.

Squarespace (e-commerce platform) 1.6%.

Daily Mail (tabloid) 0.7%.

Wikimedia (general images) 0.6%.

PhotoShelter (image sharing) 0.6%.

Read the article: https://waxy.org/2022/08/exploring-12-million-of-the-images-used-to-train-stable-diffusions-image-generator/

Search the database (with CSV download option): https://laion-aesthetic.datasette.io/laion-aesthetic-6pls/domain?_sort_desc=image_counts
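If you download the CSV, a minimal sketch like the one below can turn raw image counts into percentage shares like those in the list above. The column names `domain` and `image_counts` are assumptions (only `image_counts` appears in the URL above), the function `domain_shares` is hypothetical, and the sample rows are made up for illustration rather than taken from the dataset.

```python
import csv
import io

def domain_shares(csv_text, total_images, top_n=10):
    """Return [(domain, percent_of_total)] for the top_n domains by image count."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # Sort by image count, largest first (assumed column name: image_counts).
    rows.sort(key=lambda r: int(r["image_counts"]), reverse=True)
    return [
        (r["domain"], round(100 * int(r["image_counts"]) / total_images, 1))
        for r in rows[:top_n]
    ]

# Made-up sample rows, NOT real figures from the dataset.
sample = """domain,image_counts
example-blog.com,300
example-photos.com,500
example-shop.com,200
"""

shares = domain_shares(sample, total_images=1000, top_n=2)
print(shares)
```

With a real export, `total_images` would be the number of images in the whole browsable set, and `csv_text` would be the contents of the downloaded file.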

Meta AI non-invasive brain reading (31/Aug/2022)

Researchers at Meta, the parent company of Facebook, are working on a new way to understand what’s happening in people’s minds. On 31/Aug, the company announced that research scientists in its AI lab have developed AI that can ‘hear’ what someone’s hearing, by studying their brainwaves.

Read the article: https://time.com/6210261/meta-ai-brains-speech/

Read the paper: https://arxiv.org/pdf/2208.12266.pdf

NetDragon Appoints AI CEO (26/Aug/2022)

While this may be just a gimmick, I think it is something far more than that. We’ve already seen GPT-3 augmenting and replacing roles across industries. Putting AI into this role (HR → strategic input) is certainly the next step, and a ‘superintelligence’ benefits everyone here. Imagine a world where the company leader is 1,000x smarter, drawing on input from the most creative minds in the world, and working 24x7…

Ms. Tang Yu, an AI-powered virtual humanoid robot, has been appointed as the Rotating CEO of Fujian NetDragon Websoft Co., Ltd.

The appointment is a move to pioneer the use of AI to transform corporate management and leapfrog operational efficiency to a new level. Tang Yu’s appointment highlights the Company’s “AI + management” strategy and represents a major milestone of the Company towards being a “Metaverse organization”. Tang Yu will streamline process flow, enhance quality of work tasks, and improve speed of execution. Tang Yu will also serve as a real-time data hub and analytical tool to support rational decision-making in daily operations, as well as to enable a more effective risk management system. In addition, Tang Yu is expected to play a critical role in the development of talents and ensuring a fair and efficient workplace for all employees. Dr. Dejian Liu, Chairman of NetDragon, commented, “We believe AI is the future of corporate management, and our appointment of Ms. Tang Yu represents our commitment to truly embrace the use of AI…”

DeepMind’s embodied intelligence/humanoid football player (1/Sep/2022)

Using human and animal motions to teach robots to dribble a ball, and simulated humanoid characters to carry boxes and play football.

https://www.deepmind.com/blog/from-motor-control-to-embodied-intelligence

OpenAI’s alignment research (24/Aug/2022)

Our alignment techniques need to work even if our AI systems are proposing very creative solutions (like AlphaGo’s move 37), thus we are especially interested in training models to assist humans to distinguish correct from misleading or deceptive solutions. We believe the best way to learn as much as possible about how to make AI-assisted evaluation work in practice is to build AI assistants.

https://openai.com/blog/our-approach-to-alignment-research/

The NYT: We Need to Talk About How Good A.I. Is Getting (25/Aug/2022)

Interesting article by The New York Times, and wonderful to see the media picking this up after a good 3-4 years of silence:

…the news media needs to do a better job of explaining A.I. progress to nonexperts. Too often, journalists — and I admit I’ve been a guilty party here — rely on outdated sci-fi shorthand to translate what’s happening in A.I. to a general audience. We sometimes compare large language models to Skynet and HAL 9000, and flatten promising machine learning breakthroughs to panicky “The robots are coming!” headlines that we think will resonate with readers. Occasionally, we betray our ignorance by illustrating articles about software-based A.I. models with photos of hardware-based factory robots — an error that is as inexplicable as slapping a photo of a BMW on a story about bicycles.

In a broad sense, most people think about A.I. narrowly as it relates to us — Will it take my job? Is it better or worse than me at Skill X or Task Y? — rather than trying to understand all of the ways A.I. is evolving, and what that might mean for our future. - https://archive.ph/uBVoe

DeepMind’s conversational AI report (7/Sep/2022)

Modelling conversation as a cooperative endeavour between two or more parties, the linguist and philosopher, Paul Grice, held that participants ought to:

Speak informatively

Tell the truth

Provide relevant information

Avoid obscure or ambiguous statements

Read the report: https://www.deepmind.com/blog/in-conversation-with-ai-building-better-language-models

The world’s first fully autonomous restaurant (1/Sep/2022)

This is the real deal! Mezli was founded by three graduate students from Stanford University, who started working on the concept in January 2021 at startup accelerator Y Combinator.

Customers place orders on a touch screen kiosk on the container’s side or from a smartphone app. Inside the container, which is refrigerated, robots select ingredients from bins of prepared items, transferring those that need to be cooked or heated to a smart oven. Once all the ingredients are ready to go, additional robots mix and box them. - Article 1 by CNBC, article 2, official website.

US Army will spend $22B on HoloLens for AR/VR (2/Sep/2022)

The augmented reality goggles, a customized version of the HoloLens goggles, give the user a “heads-up display” — meaning that a hologram is placed over their environment, giving them more information about what they can already see.

The Army expects to spend around $21.9 billion on the goggles over the next 10 years.

Read: https://news.yahoo.com/microsoft-us-army-combat-hololens-goggles-military-152855161.html

AI + 3D virtual newsrooms (24/Aug/2022)

HourOne introduced 3D virtual newsrooms via avatar + AI. The solution offers camera movement for visual interest. This means the video can zoom in, zoom out, pan, and tilt to maintain the viewer’s attention. It also enables the use of multiple locations and formats to show visual content.

Read more: https://voicebot.ai/2022/08/24/hour-one-debuts-synthetic-video-news-with-virtual-human-news-anchors/

Watch an example video output, which could potentially be entirely generated by AI:

Why You Should Consider Hanging AI Art in Your Home (6/Sep/2022)

Goodbye to Mass-Produced Prints. Most of the art people put in their homes are mass-produced prints of well-known works, or low-cost decorative art pieces that you’d buy at decor megastores. While there’s nothing inherently wrong with this, it does mean that we decorate our homes with artwork anyone can have. With AI-generated art, what you hang in your home is a totally unique piece.

Read the article: https://www.howtogeek.com/825273/why-you-should-consider-hanging-ai-art-in-your-home/

Google digitizing smells (6/Sep/2022)

Did you ever try to measure a smell? …Until you can measure their likenesses and differences you can have no science of odor. If you are ambitious to found a new science, measure a smell.— Alexander Graham Bell, 1914.

Read the article: https://ai.googleblog.com/2022/09/digitizing-smell-using-molecular-maps.html

Toys to Play With

BLOOM 176B (Sep/2022)

I’ll be using this demo in an upcoming keynote session in Australia, and thought I’d drop it in here even though it was released so long ago… (Nearly 8 full weeks ago!) There are some fantastic examples below the text box that you can paste in, using any of 46 languages. The demo is available for free and without login, and it is the full 176B model, currently the largest publicly-available model. It’s easy…

Try it yourself: https://huggingface.co/spaces/huggingface/bloom_demo

New GPT-3 tricks (17/Aug/2022)

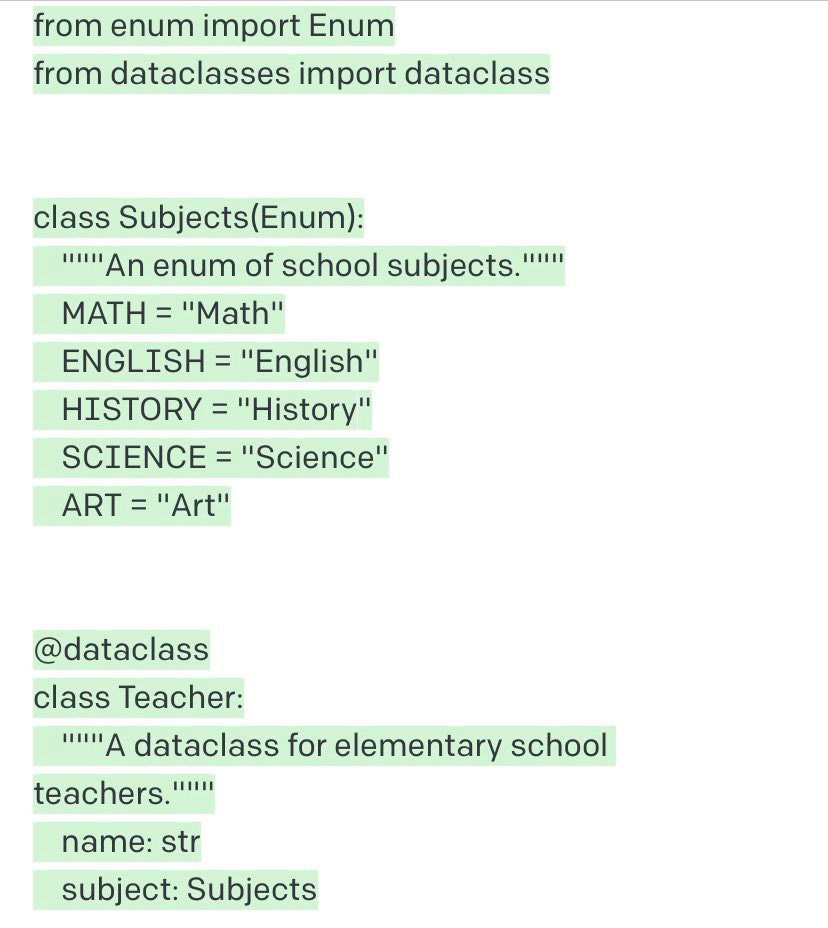

It’s been more than two years since GPT-3 was launched, and we are still finding new tricks to get it to do what we want it to do(!). This new templating functionality is from Riley Goodside, inspired by Boris Power from OpenAI.

Here’s just one of the first outputs for the simple prompt:

enum subclass for five school subjects

It is worth reading the whole thread to understand how this functionality is slightly different to OpenAI’s INSERT/EDIT function, and opens up a new world for prompting:

https://threadreaderapp.com/thread/1559801520773898240.html

Lexica: Search existing text-to-image prompts for suggestions (Aug/2022)

Lexica is called ‘The Stable Diffusion prompt search engine,’ and it’s a remarkable resource for entering a keyword and seeing what expert prompt crafters have been building!

This week, I spent quite a bit of time searching and browsing the millions of images that have been documented from the beta testing period of Stable Diffusion. I tried these keywords (among many more):

Bach

Perth

Unobtainium

Give it a go: https://lexica.art/

Browse thousands (millions?) of DALL-E 2 images (Aug/2022)

OpenArt collects ‘all the amazing DALL·E 2 art on the web’.

Play with it: https://openart.ai/

Download a font made with DALL-E 2 (21/Aug/2022)

A user generated images via DALL-E 2, extracting the images that looked like letters of the English alphabet. A world first!

I found attractive letters DALL-E 2 produced in one of my generations, grabbed and rotated the text, re-uploaded to DALL-E with the prompt "a, b, c, d, e, f, g, h, i, j, k, l, m, n, o, p, q, r, s, t, u, v, w, x, y, z" typeface, font, lettering, black against a white background", and handpicked the best looking letters, numbers and symbols until I had a full library of symbols to make a font.

Read the original discussion.

Download the font files: OTF, TTF.

SALT is becoming the first AI film (1/Sep/2022)

I first mentioned SALT back in the 17/Jul/2022 edition of The Memo. It has been picked up by PCMag, with some quotes by the creator:

We're on the verge of a new era, really… To me this is as big as the invention of photography, and to be honest maybe as big as the invention of writing.

Read the article in PCMag: https://www.pcmag.com/news/the-footage-in-this-sci-fi-movie-project-comes-from-ai-generated-images

Watch and play Salt: https://twitter.com/SALT_VERSE

The most shocking text-to-image generations from this month (7/Sep/2022)

These 13 Stable Diffusion generations blew my mind. The prompt is:

fallout 5 tarkov stalker 2, canon50 first person movie still, ray tracing, 4k octane render, hyperrealistic, extremely detailed, epic dramatic cinematic lighting, downtown Toronto

View all 13 images: https://www.reddit.com/r/MediaSynthesis/comments/x7cz1d/fallout_5_toronto_stable_diffusion/

Next

My favourite quote this edition is a bit of a flashback. OpenAI’s Chief Scientist, Ilya Sutskever, back in Mar/2022 (‘In the future, it will be obvious that the sole purpose of science was to build AGI’):

I recently explored this concept of AGI vs the Singularity in a video (30/Aug/2022):

If you’ve made it this far, you must love to read! So, for your reading pleasure, here is the original 5,000-word essay on the Singularity, from 1993, by Emeritus Professor Vernor Vinge:

From the human point of view this change will be a throwing away of all the previous rules, perhaps in the blink of an eye, an exponential runaway beyond any hope of control. Developments that before were thought might only happen in "a million years" (if ever) will likely happen in the next century. (In [5], Greg Bear paints a picture of the major changes happening in a matter of hours.)

I think it's fair to call this event a singularity ("the Singularity" for the purposes of this paper). It is a point where our old models must be discarded and a new reality rules. As we move closer to this point, it will loom vaster and vaster over human affairs till the notion becomes a commonplace. Yet when it finally happens it may still be a great surprise and a greater unknown.

…

I believe that the creation of greater than human intelligence will occur during the next thirty years… let me more specific: I'll be surprised if this event occurs before 2005 or after 2030.

https://mindstalk.net/vinge/vinge-sing.html

We’ve got a good few months to go as these events unfold through to 2030. And I’ll be documenting each of those months right here via The Memo! Thanks so much for joining us, and a shoutout to the major universities and academic institutions that subscribed to The Memo in August and September 2022.

All my very best,

Alan

LifeArchitect.ai