Voice generated by genny.lovo.ai. No, that isn’t AI-Morgan Freeman, it’s AI-‘Bryan Lee Jr’!

FOR IMMEDIATE RELEASE: 30/May/2023

NVIDIA CEO Jensen Huang (27/May/2023):

Agile companies will take advantage of AI and boost their position.

Companies less so will perish…

In 40 years, we created the PC, Internet, mobile, cloud, and now the AI era. What will you create? Whatever it is, run after it like we did. Run, don’t walk.

Welcome back to The Memo.

The Policy section covers the consistent views of the world’s most powerful person (OpenAI’s CEO) from a private blog post in 2015 to their latest push for more governance. We also explore Microsoft’s latest regulatory guidance, as well as updates on the UK’s AI regulation.

In the Toys to play with section, we look at Stability AI’s latest image model, a fully 'offline' LLM for security-conscious people, podcasts generated by the latest AI, a GPT-4 backed news site, and much more!

The BIG Stuff

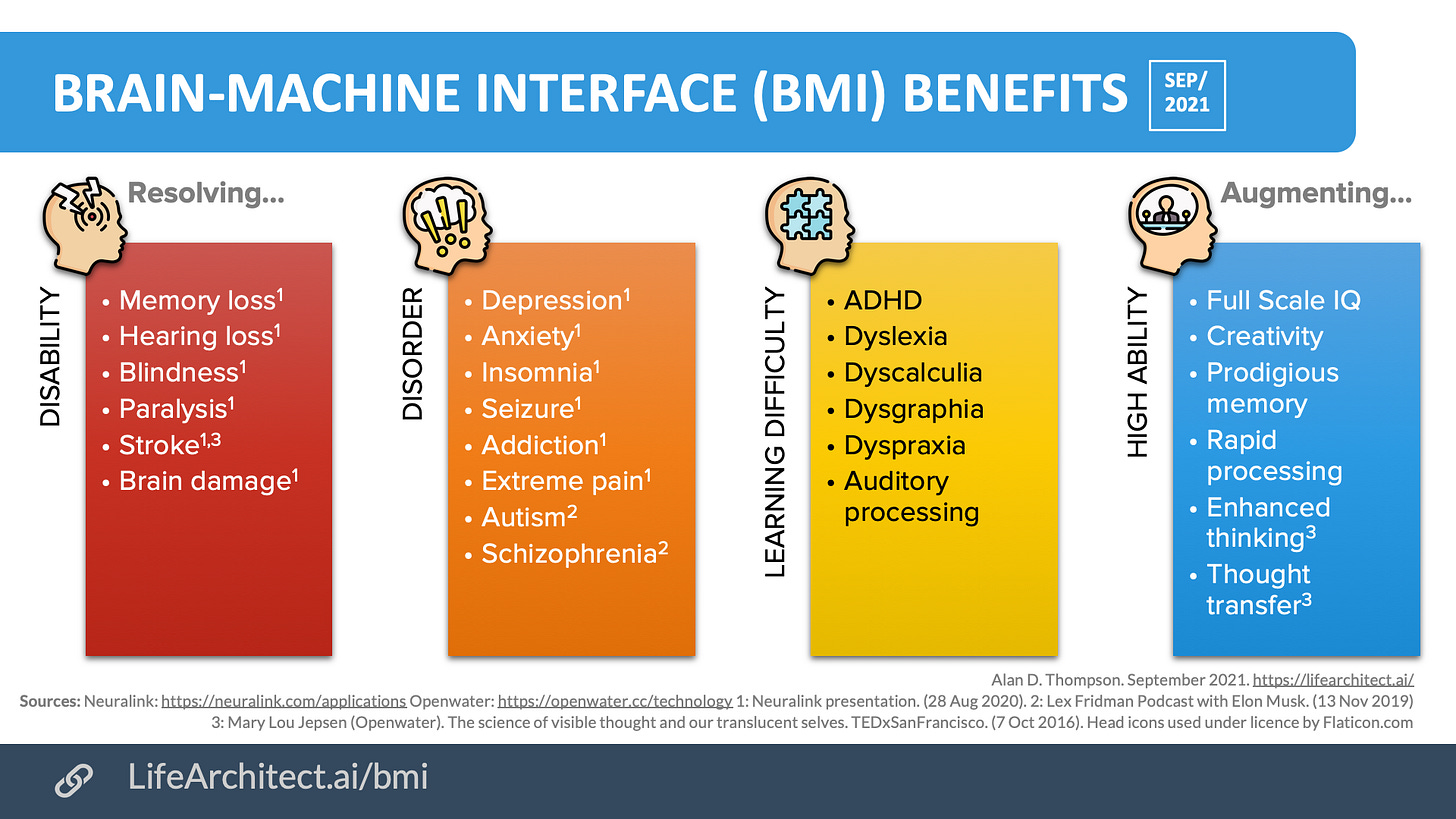

Brain-computer interfaces: Neuralink to test on humans (26/May/2023)

We are excited to share that we have received the FDA’s approval to launch our first-in-human clinical study!

This is the result of incredible work by the Neuralink team in close collaboration with the FDA and represents an important first step that will one day allow our technology to help many people.

Recruitment is not yet open for our clinical trial. We’ll announce more information on this soon! (-via Twitter)

As usual, I have a page dedicated to this subject. Read more: https://lifearchitect.ai/bmi/

Back in 2021, I documented the benefits of brain-computer interfaces, according to the top creators/inventors.

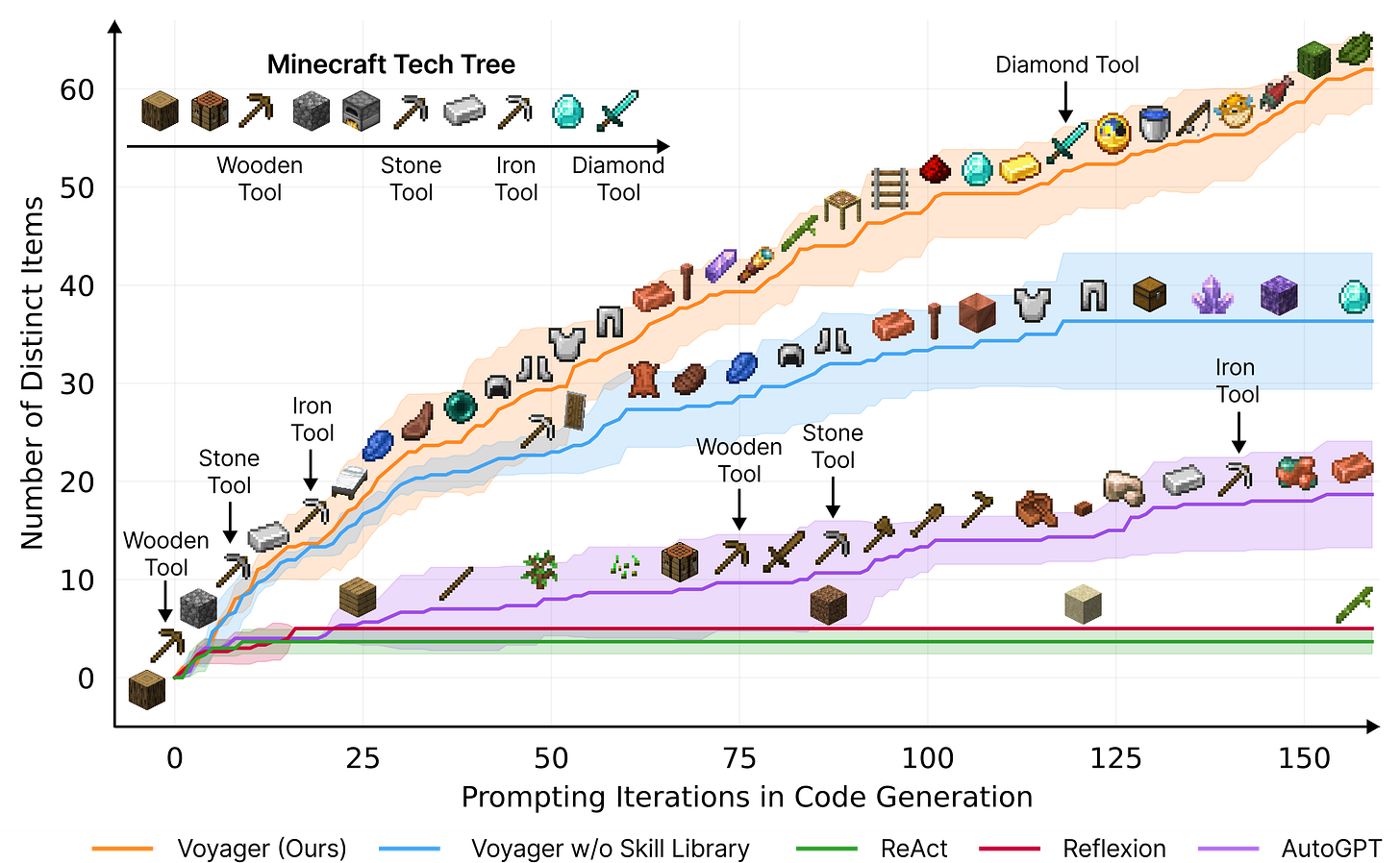

Voyager: A GPT-4 ‘lifelong learning’ agent in Minecraft (26/May/2023)

…we [NVIDIA, Caltech, UT Austin, Stanford, Arizona State University] introduce Voyager, the first LLM-powered embodied lifelong learning agent, which leverages GPT-4 to explore the world continuously, develop increasingly sophisticated skills, and make new discoveries consistently without human intervention.

This may look like just a silly game, but think further out… LLMs becoming agentic (the ability to act on their own: having goals, reasoning, and monitoring their own behaviour) is the equivalent of humans having created new life. And this new life is already optimizing skill development, and ‘lifelong learning’ within an environment.

It may be that this discovery is a big milestone in our history. Expect the next iteration of this concept to drive real use cases impacting our physical world—and all our lives.

Read the prompts used for GPT-4 (GitHub).

Read Dr Jim Fan’s summary of how this works.

Read the paper: https://arxiv.org/abs/2305.16291

NVIDIA Announces DGX GH200 AI Supercomputer (28/May/2023)

New Class of AI Supercomputer Connects 256 Grace Hopper Superchips Into Massive, 1-Exaflop, 144TB GPU for Giant Models…

GH200 superchips eliminate the need for a traditional CPU-to-GPU PCIe connection by combining an Arm-based NVIDIA Grace™ CPU with an NVIDIA H100 Tensor Core GPU in the same package, using NVIDIA NVLink-C2C chip interconnects

Expect trillion-parameter models like OpenAI GPT-5 (my link), Anthropic Claude-Next (my link), and beyond to be trained with this groundbreaking hardware. Some have estimated that this could train language models up to 80 trillion parameters, which gets us closer to brain-scale.

The Interesting Stuff

GPT-4 annotated paper (26/May/2023)

I’m providing a version of the GPT-4 paper with my annotations and updated context. I’ll also provide an annotated version of Google’s PaLM 2 paper shortly.

Grab a copy: https://lifearchitect.ai/report-card/

Fine-tuning on human preferences is a fool’s errand (28/May/2023)

I’ve presented some background on why allowing models to be guided by humans directly after pre-training—a process called reinforcement learning from human feedback or RLHF—is suboptimal, and introduces problems.

Read more: https://lifearchitect.ai/alignment/#rlhf

PandaGPT 13B (24/May/2023)

While we wait for DeepMind to announce Gato 2, here is some interesting progress from researchers at Cambridge and Tencent. The PandaGPT model is one of the few examples of proto-AGI so far this year, based on a large language model in Vicuna 13B combined with Meta’s ImageBind embedding model (9/May/2023). The researchers note that this is ‘the first foundation model capable of instruction-following data across six modalities’:

Text.

Image/video.

Audio.

Depth.

Thermal.

Inertial measurement units/accelerometer/gyroscope/compass.

Demo: https://huggingface.co/spaces/GMFTBY/PandaGPT

Project page: https://panda-gpt.github.io/

IndexGPT by JPMorgan Chase; trademark filing (May/2023)

I continue to provide AI consulting via expert calls as well as longer-term advisory to big companies and major governments around the world, most under NDA with ‘no identify’ clauses. Besides government, banking and finance is my biggest sector, with spectacular advances being made. While I can’t reveal any of that work, I can certainly point out public documents, including JPMorgan Chase’s trademark filing for a product called IndexGPT earlier this month.

…JPMorgan may be the first financial incumbent aiming to release a GPT-like product directly to its customers…“It’s an A.I. program to select financial securities,” Gerben said. “This sounds to me like they’re trying to put my financial advisor out of business.”…

The bank, which employs 1,500 data scientists and machine-learning engineers, is testing “a number of use cases” for GPT technology, said global tech chief Lori Beer.

‘Laptop models’ are a waste of time (25/May/2023)

I have editorialised this title. The UC Berkeley paper is actually called “The False Promise of Imitating Proprietary LLMs”. It names several smaller ‘imitation’ models (Alpaca, Vicuna, Koala, GPT4ALL) that imitate (or ‘steal’) output examples from larger models (ChatGPT, GPT-3.5). The imitation model output ‘falls short in improving LMs across more challenging axes such as factuality, coding, and problem solving.’

Initially, we were surprised by the output quality of our imitation models—they appear far better at following instructions, and crowd workers rate their outputs as competitive with ChatGPT. However, when conducting more targeted automatic evaluations, we find that imitation models close little to none of the gap from the base LM to ChatGPT on tasks that are not heavily supported in the imitation data. We show that these performance discrepancies may slip past human raters because imitation models are adept at mimicking ChatGPT’s style but not its factuality. Overall, we conclude that model imitation is a false promise: there exists a substantial capabilities gap between open and closed LMs that, with current methods, can only be bridged using an unwieldy amount of imitation data or by using more capable base LMs. In turn, we argue that the highest leverage action for improving open-source models is to tackle the difficult challenge of developing better base LMs, rather than taking the shortcut of imitating proprietary systems.

Read the paper: https://arxiv.org/abs/2305.15717

Uber + Waymo in Phoenix (24/May/2023)

I love catching Waymo self-driving cars in my second home of Phoenix. The feel of being alone in an AI-controlled vehicle speeding along the highway is… futuristic. This week, Waymo and Uber partnered up.

This integration will launch publicly later this year with a set number of Waymo vehicles across Waymo’s newly expanded operating territory in Phoenix, and will include local deliveries and ride-hailing trips. Uber users will be able to experience the safety and delight of the Waymo Driver on both the Uber and Uber Eats apps. Riders will also still be able to hail a Waymo vehicle directly through the Waymo One app. At over 180 square miles [467 km²], Waymo’s Phoenix operations are currently the largest fully autonomous service area in the world.

Pew: 58% of US adults have heard of ChatGPT; 14% have tried it (19/Mar/2023)

It should be noted that Pew Research ran this study from 13/Mar-19/Mar, and the numbers would be higher two months later in May/2023.

Among the subset of Americans who have heard of ChatGPT, 19% say they have used it for entertainment and 14% have used it to learn something new. About one-in-ten adults who have heard of ChatGPT and are currently working for pay have used it at work.

ChatGPT iOS app: 500k downloads in 6 days (26/May/2023)

Despite being U.S.- and iOS-only ahead of today’s expansion to 11 more global markets, OpenAI’s ChatGPT app has been off to a stellar start. The app has already surpassed half a million downloads in its first six days since launch. (-via TC)

UW Guanaco 65B (25/May/2023)

It looks like a LLaMA, but a Guanaco is ‘one of two wild South American camelids, the other being the vicuña, which lives at higher elevations’ (wiki).

Researchers at the University of Washington developed QLoRA, which enables fine-tuning on a single GPU, and then used it to train a model called Guanaco. The largest Guanaco model has 65B parameters and reaches above 99 percent of ChatGPT’s performance on the Vicuna benchmark, as judged by GPT-4.

They’ve even shoehorned the animal name into a technical name: Generative Universal Assistant for Natural-language Adaptive Context-aware Omnilingual outputs.

The big takeaway is this:

LLaMA 65B needs 780GB of GPU RAM.

Guanaco 65B needs 48GB of GPU RAM.

Guanaco 7B needs 5GB GPU RAM.
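The gap between these figures can be roughly reproduced with back-of-envelope arithmetic. The sketch below assumes the 780GB figure refers to full 16-bit fine-tuning with an Adam-style optimizer at about 12 bytes per parameter, and that QLoRA stores base weights in 4 bits (0.5 bytes per parameter). Both byte counts are assumptions for illustration; the estimate ignores adapter weights, activations, and quantization overhead, which is why the quoted figures above sit higher than the raw 4-bit weight sizes.

```python
def gigabytes(params: float, bytes_per_param: float) -> float:
    """Memory in GB (10^9 bytes) for a given parameter count and bytes per parameter."""
    return params * bytes_per_param / 1e9

# Assumption: 16-bit weights (2) + 16-bit gradients (2) + two 32-bit Adam moments (8).
FULL_FINETUNE_BYTES = 2 + 2 + 8
# Assumption: 4-bit quantized base weights, frozen during fine-tuning.
FOUR_BIT_BYTES = 0.5

full_65b = gigabytes(65e9, FULL_FINETUNE_BYTES)   # full 16-bit fine-tuning estimate
quant_65b = gigabytes(65e9, FOUR_BIT_BYTES)       # 4-bit weights only, 65B model
quant_7b = gigabytes(7e9, FOUR_BIT_BYTES)         # 4-bit weights only, 7B model

print(f"65B full fine-tune: ~{full_65b:.0f} GB")
print(f"65B 4-bit weights:  ~{quant_65b:.1f} GB")
print(f"7B 4-bit weights:   ~{quant_7b:.1f} GB")
```

Under these assumptions the 65B weights alone take about 32.5GB, leaving headroom on a 48GB GPU for the small trainable adapters and activations.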

QLoRA will also enable privacy-preserving fine-tuning on your phone. We estimate that you can fine-tune 3 million words each night with an iPhone 12 Plus. This means, soon we will have LLMs on phones which are specialized for each individual app.

A year ago, it was a common sentiment that all important research is done in industrial AI labs. I think this is no longer true. (-via Twitter)

View the project page: https://guanaco-model.github.io/

Read the paper: https://arxiv.org/abs/2305.14314

Demo: https://huggingface.co/spaces/uwnlp/guanaco-playground-tgi

Abu Dhabi releases Falcon 40B (26/May/2023)

Falcon, a foundational large language model (LLM) with 40 billion parameters, trained on one trillion tokens, grants unprecedented access to researchers and small and medium-sized enterprise (SME) innovators alike. TII is providing access to the model’s weights as a more comprehensive open-source package [for commercial use]…

Falcon 40B is a breakthrough led by TII’s AI and Digital Science Research Center (AIDRC). The same team also launched NOOR, the world’s largest Arabic NLP model last year, and is on track to develop and announce Falcon 180B soon.

New models bubbles viz (27/May/2023)

This viz looks at 2023-2024 optimal language models; those using Chinchilla scaling or better, which means using a lot of data. Where GPT-3 only used 2 tokens (words) per parameter, Chinchilla scaling laws advise using 20 tokens (words) per parameter.
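As a rough illustration of the tokens-per-parameter ratio, here is a minimal sketch. The GPT-3 figures (175B parameters, about 300B training tokens) are commonly cited values rather than numbers from this edition, the Falcon 40B figures come from the item above, and the function names are hypothetical.

```python
def tokens_per_parameter(training_tokens: float, parameters: float) -> float:
    """Ratio of training tokens to model parameters."""
    return training_tokens / parameters

def chinchilla_tokens(parameters: float, ratio: float = 20.0) -> float:
    """Training tokens suggested by the ~20 tokens-per-parameter rule of thumb."""
    return parameters * ratio

# Assumption: GPT-3 at 175B parameters trained on roughly 300B tokens.
gpt3_ratio = tokens_per_parameter(300e9, 175e9)
# Falcon 40B: 40B parameters trained on one trillion tokens.
falcon_ratio = tokens_per_parameter(1e12, 40e9)
# Tokens suggested for a 70B-parameter model at 20 tokens per parameter.
target_70b = chinchilla_tokens(70e9)

print(f"GPT-3:      {gpt3_ratio:.1f} tokens per parameter")
print(f"Falcon 40B: {falcon_ratio:.1f} tokens per parameter")
print(f"70B model:  {target_70b / 1e12:.1f}T tokens suggested")
```

On these numbers GPT-3 lands just under 2 tokens per parameter, while Falcon 40B sits at 25, above the Chinchilla guideline.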

As with all my visualizations, this is available for you to use in any reasonable venue.

Download original: https://lifearchitect.ai/models/

New GPT-4 vs human viz (20/May/2023)

By request, here's a simplified version of this full GPT-4 vs human viz; easier to read on a big screen!

Read more: https://lifearchitect.ai/iq-testing-ai/

List of ChatGPT plugins (May/2023)

ChatGPT now has nearly 100 plugins, each performing a different online task. Here’s one of the best examples:

Link Reader plugin for ChatGPT is a great choice. It can read the content of links like webpages, PDFs, and images.

To use it, give it a link and ask for information. ChatGPT works together with Link Reader to give you a detailed answer. So if you want a quick summary, this plugin is perfect.

Prompts For Link Reader ChatGPT Plugin

“Summarize this article”

“Estimate reading time for these links”

View all 80 plugins with two example prompts each, by theinsaneapp (dark mode; I can’t read this white text on black background stuff).

View 78 plugins with use cases, by startuphub.

Short film clips using 2023 technology (May/2023)

Here’s the latest of the greatest. With the exponential rate of change, we’re only a few months away from full feature-length films. Created by Caleb Ward, with the help of Tyler Ward and Shelby Ward.

Tech stack: ChatGPT for script, ChatGPT for shot list, Midjourney v5.1 for images, ElevenLabs for voices, D-ID for face animations. Then, some masking and background removal by a human with Adobe/Canva/other, titles added by a human.


Mid-year AI report in progress (May/2023)

Every six months, I release a ‘plain English’ AI report. Previous reports are:

2022 retrospective: The sky is infinite.

Mid-2022 retrospective: The sky is bigger.

2021 retrospective: The sky is on fire.

A quick reminder that full subscribers to The Memo will get early access to my mid-2023 AI report, covering progress between January and the end of June 2023. The report is called The sky is entrancing.

NVIDIA share price surges by +20%, close to $1T market cap (25/May/2023)

NVIDIA is very close to hitting the $1 trillion market cap.

“The transformational surge in AI spending is paying off much earlier than expected,” Morgan Stanley analyst Joseph Moore wrote in a note. “We simply have no historical precedent for the magnitude of this step function.”

Read Reuters’ analysis titled ‘Nvidia close to becoming first trillion-dollar chip firm…’.

Opera Aria (24/May/2023)

Aria connects to OpenAI’s GPT technology and is enhanced by additional capabilities such as adding live results from the web. Aria is both a web and a browser expert that allows you to collaborate with AI while looking for information on the web, generating text or code, or getting your product queries answered.

Adobe Firefly update (25/May/2023)

I’ve featured Firefly (part of Photoshop and other tools) in keynotes since last year, and it even made its way into my end of year 2022 AI report. The latest update and video are shiny!

Read more: https://www.adobe.com/sensei/generative-ai/firefly.html


Policy

Microsoft: Governing AI: A Blueprint for the Future (25/May/2023)

41 pages of very clean and clear English. Though I do agree that throwing the whole ‘regulation’ thing out and letting AI provide some informed, consolidated, 152+ IQ options is a good thing. Less likelihood of sociopathic/selfish/nepotistic attitudes deciding how the world looks…

Germany balks at paying €300-400M for a European ChatGPT (26/May/2023)

"We see these large models as a kind of public infrastructure ... like a piece of highway." And LEAM's request would be only a "few kilometers." (-via Politico)

As reported in The Memo 19/Mar/2023 edition, the United Kingdom will build its own so-called BritGPT, committing £900M (€1B, $1B). It should be noted that Germany is bigger and has a lot more money than the UK (despite Germany’s current recession):

Population: UK=67M, Germany=83M.

GDP: UK=$3T, Germany=$4T.

GDP per capita: UK=$46k, Germany=$51k.

Altman on AI regulation: 2015 (26/Feb/2015)

Here’s a blast from the past, written by OpenAI’s CEO about 9 months before OpenAI was founded on 11/Dec/2015.

The US government, and all other governments, should regulate the development of SMI [superhuman machine intelligence]. In an ideal world, regulation would slow down the bad guys and speed up the good guys—it seems like what happens with the first SMI to be developed will be very important.

…when governments gets serious about SMI they are likely to out-resource any private company.

Require development happen only on airgapped computers.

Require that self-improving software require human intervention to move forward on each iteration.

Require that certain parts of the software be subject to third-party code reviews.

…humans will always be the weak link in the strategy…

Although it’s possible that a lone wolf in a garage will be the one to figure SMI out, it seems more likely that it will be a group of very smart people with a lot of resources. It also seems likely, at least given the current work I’m aware of, it will involve US companies in some way (though, as I said above, I think every government in the world should enact similar regulations).

Some people worry that regulation will slow down progress in the US and ensure that SMI gets developed somewhere else first. I don’t think a little bit of regulation is likely to overcome the huge head start and density of talent that US companies currently have.

Altman on AI regulation: 2023 (24/May/2023)

Altman said his preference for regulation was “something between the traditional European approach and the traditional U.S. approach.”

OpenAI on superintelligence in 2023 (22/May/2023)

…the governance of the most powerful systems, as well as decisions regarding their deployment, must have strong public oversight. We believe people around the world should democratically decide on the bounds and defaults for AI systems. We don't yet know how to design such a mechanism, but we plan to experiment with its development. We continue to think that, within these wide bounds, individual users should have a lot of control over how the AI they use behaves…

Given the risks and difficulties, it’s worth considering why we are building this technology at all.

At OpenAI, we have two fundamental reasons. First, we believe it’s going to lead to a much better world than what we can imagine today (we are already seeing early examples of this in areas like education, creative work, and personal productivity). The world faces a lot of problems that we will need much more help to solve; this technology can improve our societies, and the creative ability of everyone to use these new tools is certain to astonish us. The economic growth and increase in quality of life will be astonishing.

Second, we believe it would be unintuitively risky and difficult to stop the creation of superintelligence. Because the upsides are so tremendous, the cost to build it decreases each year, the number of actors building it is rapidly increasing, and it’s inherently part of the technological path we are on, stopping it would require something like a global surveillance regime, and even that isn’t guaranteed to work. So we have to get it right.

Read more via OpenAI: https://openai.com/blog/governance-of-superintelligence

No 10 acknowledges ‘existential’ risk of AI for first time (25/May/2023)

[British Prime Minister] Rishi Sunak and Chloe Smith, the secretary of state for science, innovation and technology, met the chief executives of Google DeepMind, OpenAI and Anthropic AI on Wednesday evening and discussed how best to moderate the development of the technology to limit the risks of catastrophe.

“The PM and CEOs discussed the risks of the technology, ranging from disinformation and national security, to existential threats … The PM set out how the approach to AI regulation will need to keep pace with the fast-moving advances in this technology.”

It is the first time the prime minister has acknowledged the potential “existential” threat of developing a “superintelligent” AI without appropriate safeguards, a risk that contrasts with the UK government’s generally positive approach to AI development.

The growing awareness of that risk comes a day after OpenAI’s chief executive, Sam Altman, published a call for world leaders to establish an international agency similar to the International Atomic Energy Agency, which regulates atomic weapons, in order to limit the speed with which such AI is developed.

Altman, who has been touring Europe meeting users and developers of the ChatGPT platform as well as policymakers, told an event in London that, while he did not want the short-term rules to be too restrictive, “if someone does crack the code and build a superintelligence … I’d like to make sure that we treat this at least as seriously as we treat, say, nuclear material”.

Toys to Play With

2-hour video by head of AI alignment about agency + what’s next (24/Apr/2023)

Make the time to watch this. Dr Paul Christiano runs the ARC, responsible for evaluating GPT-4 and Claude.

I am extremely skeptical of someone who's confident that if you took GPT-4 and scaled up by two orders of magnitude [Alan: from 1T to 100T?] of training compute and then fine-tune the resulting system using existing techniques that we know exactly what would happen.

Watch with transcript: https://youtubetranscript.com/?v=GyFkWb903aU&t=2067

Watch the video: https://youtu.be/GyFkWb903aU

Clipdrop Reimagine XL by Stability AI (26/May/2023)

Drop in any image and receive four AI-ised versions!

Try it (free, no login): https://clipdrop.co/stable-diffusion-reimagine

PrivateGPT (20/May/2023)

Ping LLMs and your own documents… completely offline.

Take a look: https://github.com/imartinez/privateGPT

ChatGPT + ElevenLabs + Unreal Engine 5 (22/Apr/2023)

Podcast generated by GPT-4 (20/May/2023)

Crowdcast uses a subreddit for human-upvoted topics, fed into GPT-4, fed into ElevenLabs for voice. The output is incredible.

Tech stack: GPT-4 + ElevenLabs

View the repo: https://github.com/AdmTal/crowdcast/tree/main

News by GPT-4 (20/May/2023)

Neural Times is an entirely automated news site, fueled by the power of GPT-4. There's no human modification; the AI handles everything from choosing the headlines, researching topics, writing, to finally publishing the articles.

The core aim of Neural Times is not only to demonstrate the capabilities of AI in journalism but more importantly, to contribute to minimizing bias in news reporting and mitigate social polarization. By leveraging AI's ability to produce balanced and fact-based content, Neural Times strives to offer diverse perspectives without human prejudice.

Check it out: https://neuraltimes.org/

Next

Apple’s upcoming Reality headset release will provide user hardware for much of this amazing AI software. Here’s the countdown to the 5/Jun/2023 WWDC23 kick off in your timezone. We’ll be hosting a text-only live discussion on 5/Jun/2023 during the Apple stream.

All my very best,

Alan

LifeArchitect.ai
