FOR IMMEDIATE RELEASE: 26/Sep/2023

Welcome back to The Memo.

You’re joining full subscribers from major governments and corporations around the world.

The winner of The Who Moved My Cheese? AI Awards! for September 2023 is Amazon Kindle, for banning publication of more than a certain number of books per author because of generative AI.

In the Toys to play with section, we look at new prompting guides, a massive DALL-E comparison database, the exciting first piece of hardware to combine ChatGPT + ElevenLabs, and more.

The BIG Stuff

Absurd rumors of OpenAI achieving AGI already (Sep/2023)

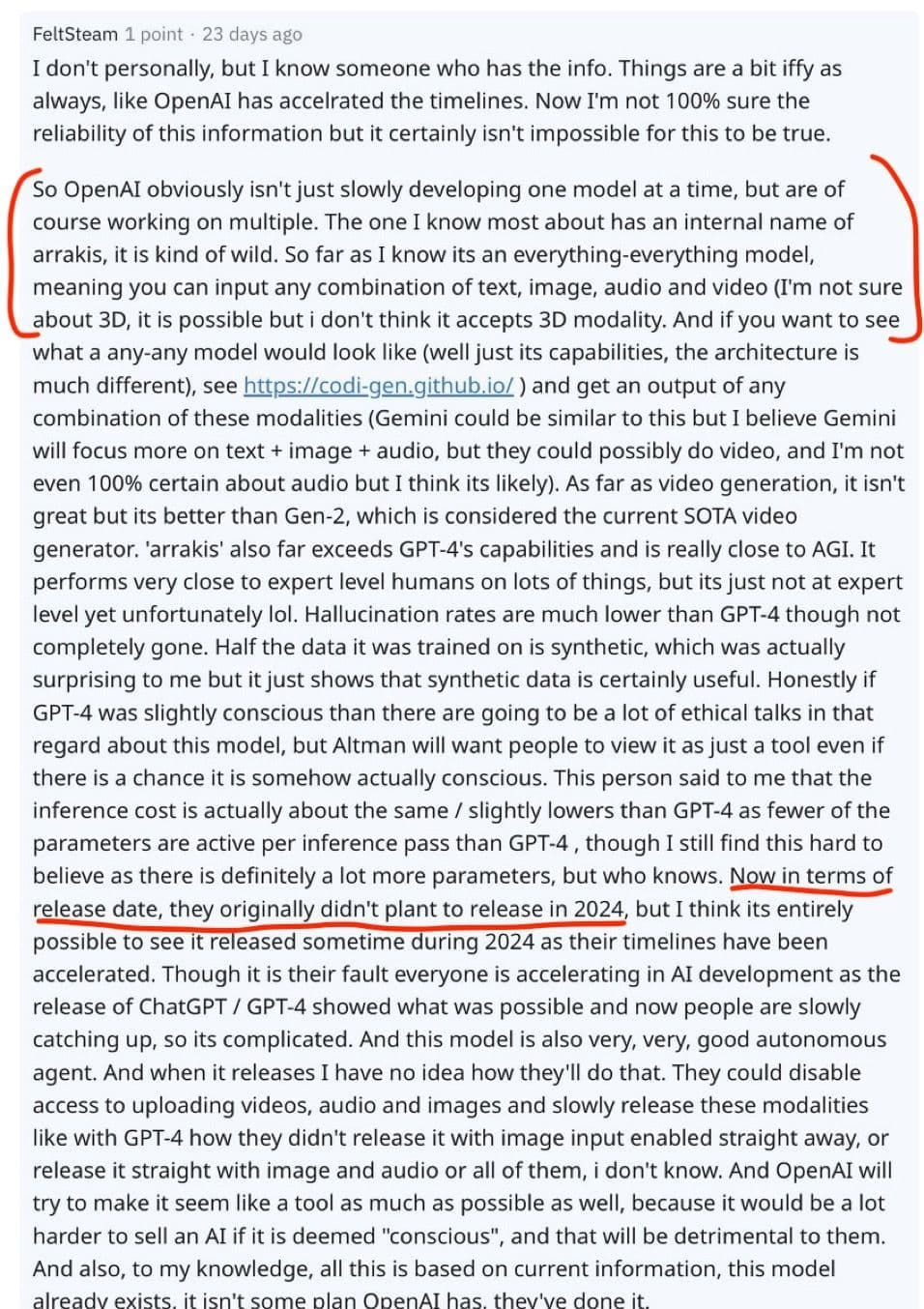

Look, I can’t in good faith follow these rumors all the way through and comment on them, because they are just that: rumors. However, if you have the time, take a good read of some of the commentary swirling through San Francisco (and the rest of the world) right now.

1: Via LessWrong (above).

Amazon will invest $4 billion in Anthropic (25/Sep/2023)

Switching from Google to Amazon, with a similar investment structure to OpenAI/Microsoft.

Enterprises across many industries are already building with Anthropic models on Amazon Bedrock. LexisNexis Legal & Professional, a leading global provider of information and analytics, is using a custom, fine-tuned Claude 2 model to deliver conversational search, insightful summarization, and intelligent legal drafting capabilities via the company’s new Lexis+ AI solution. Premier asset management firm Bridgewater Associates is developing an investment analyst assistant powered by Claude 2 to generate elaborate charts, compute financial indicators, and create summaries of the results. Lonely Planet, a renowned travel publisher, reduced its itinerary generation costs by almost 80 percent after deploying Claude 2; synthesizing its decades of travel content to deliver cohesive, highly accurate travel recommendations.

Read the announcement: https://www.anthropic.com/index/anthropic-amazon

ChatGPT can now see, hear, and speak (25/Sep/2023)

The new voice capability is powered by a new text-to-speech model, capable of generating human-like audio from just text and a few seconds of sample speech. We collaborated with professional voice actors to create each of the voices. We also use Whisper, our open-source speech recognition system, to transcribe your spoken words into text.

Read more: https://openai.com/blog/chatgpt-can-now-see-hear-and-speak

OpenAI GPT-4V (25/Sep/2023)

The vision component of GPT-4 is finally being readied for release. They’ve spent more than a year breaking its kneecaps so it’s less useful. But hey, at least it won’t tell us how to make napalm…