The Memo - 16/Dec/2024

Phi-4, Gemini 2.0 Flash, Amazon's new AGI SF Lab, and much more!

To: US Govt, major govts, Microsoft, Apple, NVIDIA, Alphabet, Amazon, Meta, Tesla, Citi, Tencent, IBM, & 10,000+ more recipients…

From: Dr Alan D. Thompson <LifeArchitect.ai>

Sent: 16/Dec/2024

Subject: The Memo - AI that matters, as it happens, in plain English

AGI: 83 ➜ 84%

ASI: 0/50 (no expected movement until post-AGI)

The early winner of The Who Moved My Cheese? AI Awards! for Dec/2024 is PlayStation CEO Hermen Hulst (‘AI… will never replace the “human touch” of games made by people’).

A lot of my analysis and visualizations turn up in interesting places, from universities to government situation rooms. I was fast asleep (yes, I do sleep!) as machine learning pioneer Prof Sepp Hochreiter (wiki) presented my old ‘Journey to GPT-4’ viz at the prestigious NeurIPS conference (wiki). Thanks to The Memo reader Roland for sending this photo through from Vancouver.

Contents

The BIG Stuff (Microsoft Phi-4, Google Gemini 2.0, Tesla Optimus, Genie 2…)

The Interesting Stuff (Amazon AGI SF Lab, OpenAI Switzerland, OpenAI 12 days…)

Policy (ElevenLabs, US AI czar, ChatGPT filters, TSMC 2nm, OpenAI + Anduril…)

Toys to Play With (Ilya, Devin, DynaSaur, Maisa KPU, OpenAI emails, X Aurora…)

Flashback (Elon Musk’s gifted school and AI’s impact on language…)

Next (Roundtable…)

The BIG Stuff

Microsoft Phi-4 14B (13/Dec/2024)

Phi-4 is 14B parameters on 10T tokens of synthetic data (715:1), and achieves incredibly high scores across benchmarks (MMLU=84.8, MMLU-Pro=70.4, GPQA=56.1).
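
The 715:1 figure is the number of training tokens per model parameter. Here is a minimal sketch of that arithmetic. It assumes the ratio is rounded up to the next whole number (an assumption that happens to match the figure quoted here), and the function name is purely illustrative:

```python
import math


def tokens_per_parameter(tokens: float, parameters: float) -> int:
    """Return training tokens per parameter, rounded up to a whole number."""
    # Rounding up is an assumption; it reproduces the 715:1 quoted above.
    return math.ceil(tokens / parameters)


# Phi-4 figures quoted above: 14B parameters trained on 10T tokens.
phi4_ratio = tokens_per_parameter(10e12, 14e9)
print(f"{phi4_ratio}:1")  # prints "715:1"
```

The same function can be pointed at any other parameter and token counts to compare how heavily different models were trained relative to their size.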

While previous models in the Phi family largely distill the capabilities of a teacher model (specifically GPT-4), phi-4 substantially surpasses its teacher model on STEM-focused QA capabilities, giving evidence that our data-generation and post-training techniques go beyond distillation.

We’re entering the twilight zone: small models are becoming far smarter than the large models used to train them. (Sidenote: Listen to Leta AI responding to the ‘stochastic parrot’ accusation back in 2021! https://youtu.be/G57DMAJgomg)

Phi-4 represents possibly the most impressive local large language model advance this year. I will be downloading the weights and running this model locally (using Jan.ai) as soon as the model is available on Hugging Face (HF) later this month.

Microsoft announce, paper, Models Table.

Watch my May/2024 demo of phi’s synthetic data: https://youtu.be/kgqDtfC_pRY

Google Gemini 2.0 Flash (11/Dec/2024)

Google has unearthed a new secret sauce that allows them to pretrain smaller models with significantly higher performance. And all without resorting to extended inference-time compute, like OpenAI’s o1 reasoning model. I don’t think it’s just data quality, though synthetic data like that used for phi-4 (above) is absolutely having a big impact here.

I estimate that Gemini 2.0 Flash is around 30B parameters on 30T tokens (1,000:1). It achieves high scores across benchmarks (MMLU=87, MMLU-Pro=76.4, GPQA=62.1).

Gemini 2.0 Flash also goes way beyond text:

…multimodal inputs like images, video and audio, 2.0 Flash now supports multimodal output like natively generated images mixed with text and steerable text-to-speech (TTS) multilingual audio. It can also natively call tools like Google Search, code execution as well as third-party user-defined functions.

As outlined in The Memo edition 1/Nov/2024, all Gemini outputs are watermarked and can be tracked by Google.

Google announce, model card, Models Table.

Watch a video on Gemini 2.0’s image generation capabilities (link):

Genie 2: A large-scale foundation world model (4/Dec/2024)

Genie 2 is a groundbreaking foundation world model from Google DeepMind that generates interactive 3D environments for training AI agents. Using autoregressive latent diffusion, it can simulate complex worlds from a single prompt image and supports long-horizon memory, object interactions, physics, and character animations. This tool enables rapid prototyping of virtual environments, helping accelerate research on embodied AI agents and generalist systems like SIMA (13/Mar/2024) while addressing structural limitations in agent training.

Genie 2 is the path to solving a structural problem of training embodied agents safely while achieving the breadth and generality required to progress towards AGI.

Sidenote: This world model bumped up my AGI counter from 83 ➜ 84%. DeepMind has not demonstrated specific real-world use cases, but expect applications across diverse sectors to ‘instantly’ train humanoids in agriculture, mining, manufacturing and assembly, construction, supply chain operations and transport networks, healthcare assistance, home duties including making coffee, and anywhere else you can put a robot…

Read more via Google DeepMind.

Tesla Optimus humanoid walking on uneven ground (9/Dec/2024)

Tesla showcased a new milestone for its humanoid robot, Optimus, which can now walk autonomously on uneven, mulch-covered terrain using neural networks to control each limb. Tesla’s Vice President of Optimus Engineering, Milan Kovac, noted:

Tesla is where real-world AI is happening. These runs are on mulched ground, where I’ve myself slipped before. What’s really crazy here is that for these, Optimus is actually blind! Keeping its balance without video (yet), only other on-board sensors consumed by a neural net running in ~2-3ms on its embedded computer.

(10/Dec/2024)

Watch the video (link):

The Interesting Stuff

Amazon Nova (formerly Olympus) (3/Dec/2024)

Amazon Nova is a multimodal AI model family supporting text, images, documents, and video as input, with text output. It features multiple configurations, including Nova Pro (estimated 90B parameters on 10T tokens, 112:1) and the upcoming Nova Premier (470B parameters, due 2025). Nova Pro was trained using multilingual and multimodal datasets, including synthetic data, across over 200 languages.

Nova Pro (and 16 other models) outperforms the current default ChatGPT model GPT-4o-2024-11-20 on major benchmarks. Nova Pro scores MMLU=85.9 and GPQA=46.9.

Read my Amazon Nova page: https://lifearchitect.ai/olympus/

Amazon announce, technical report, Models Table.

Meta Llama 3.3 70B (6/Dec/2024)

Meta’s Llama 3.3 70B model was trained on 15T+ tokens (215:1), and delivers performance nearly equivalent to the older Llama 3.1 405B model, while being less than 1/5th the size, marking a shift from parameter-heavy scaling to quality-focused optimization. This breakthrough defies older notions of scaling laws, improving reasoning, math, and instruction-following tasks without altering the model’s fundamental architecture. Developers now benefit from faster, cost-effective, and high-quality AI for tasks like coding, tool use, and error handling.

Read the Llama 3.3 70B model card.

Try it on Poe.com: https://poe.com/Llama-3.3-70B

Did you know? The Memo features in Apple’s recent AI paper, has been discussed on Joe Rogan’s podcast, and a trusted source says it is used by top brass at the White House. Across over 100 editions, The Memo continues to be the #1 AI advisory, informing 10,000+ full subscribers including Microsoft, Google, and Meta AI.

My end of year ‘The sky is…’ AI report will be sent to full subscribers soon…

Uber stock falls as Waymo announces expansion to Miami (5/Dec/2024)

Waymo, Alphabet’s driverless ride-hailing platform, plans to deploy its all-electric Jaguar I-PACEs in Miami starting early 2025, with ride-hailing services available to customers by 2026 via the Waymo One app.

Following the announcement, Uber and Lyft stocks dropped 6.4% and 6.6%, respectively, as investors worry about the competitive impact of AI-powered autonomous vehicles in the ride-hailing market. Waymo already operates in Los Angeles, San Francisco, and Phoenix, with plans to expand further.

Read more via Barron’s.

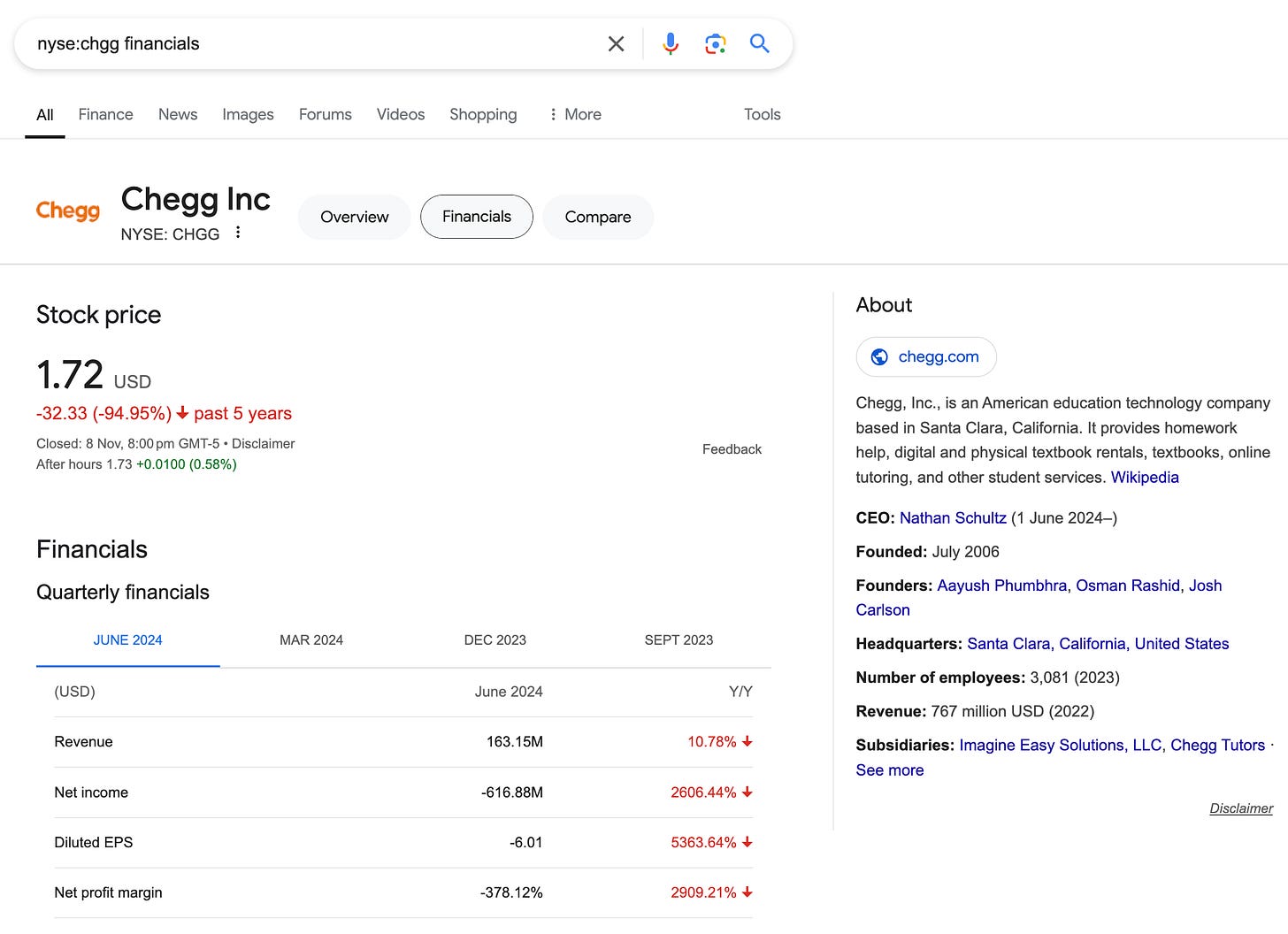

This does remind me of ChatGPT’s blindside of Chegg. I grabbed this screenshot on 10/Nov/2024:

Amazon forms an AI agent-focused lab led by Adept’s co-founder (9/Dec/2024)

Amazon has launched the Amazon AGI SF Lab, a new research and development center focused on creating advanced AI agents capable of performing both digital and physical tasks. Led by David Luan, co-founder of the AI startup Adept, and Pieter Abbeel, a robotics researcher, the lab aims to develop AI agents that can handle complex workflows, self-correct, and learn from human feedback. This initiative builds on Amazon’s acquisition-like deal with Adept earlier this year, where portions of its team joined Amazon.

The move positions Amazon against competitors like OpenAI, Google, and Anthropic, all of which are pursuing similar AI agent technologies. These agents, seen as a transformative tool for automating tasks, are part of a rapidly growing sector worth US$31B in 2024.

Read more via TechCrunch.

OpenAI to set up new office in Switzerland (4/Dec/2024)

OpenAI, the maker of ChatGPT, is opening an office in Zurich as part of its European expansion, adding to existing locations in London, Paris, Brussels, and Dublin. The Zurich office will employ three leading AI researchers, leveraging the city’s reputation as a major European tech hub. OpenAI, now valued at US$157B, aims to strengthen its global presence and further solidify its leadership in the AI sector.

Read more via SWI swissinfo.ch.

OpenAI released a bunch of stuff (Dec/2024)

OpenAI’s ‘12 Days of OpenAI’ initiative highlights new advancements, including the launch of the Sora video generation model, advanced voice features, and the Canvas tool for collaborative writing and coding.

Sora, now out of research preview, integrates GPT and DALL-E technologies to enhance storytelling and video creation. OpenAI also introduced o1 + ChatGPT Pro (US$200/month), and expanded its reinforcement fine-tuning program for researchers and enterprises.

Read more: https://openai.com/12-days/

My Sora testing was very basic. This prompt is ‘The camera pans to capture a close-up of a blue wren perched on a branch, chirping joyfully.’ The output below is 720p for 5 seconds:

Many others did much more rigorous testing. Watch: Sora videos (480p) and the associated Sora table of prompts.

Musk’s xAI plans massive expansion of AI supercomputer in Memphis (4/Dec/2024)

xAI, Elon Musk’s AI startup, is expanding its supercomputer ‘Colossus’ in Memphis to at least 1,000,000 (one million) GPUs, up from the current 100,000. This tenfold expansion aims to accelerate the development of xAI’s chatbot, Grok, and compete with AI leaders like OpenAI.

Read more via Reuters.

Jeff Bezos bets millions on NVIDIA rival Tenstorrent (2/Dec/2024)

Jeff Bezos, through Bezos Expeditions, participated in a US$693M funding round for Tenstorrent, an AI chip company aiming to rival NVIDIA. Tenstorrent focuses on affordable, open-source AI solutions, bypassing costly components like high-bandwidth memory (HBM) that NVIDIA relies on. The funding will support the development of open-source AI software, global expansion, and cloud systems, positioning Tenstorrent as a cost-effective alternative for smaller firms in the AI space.

Read more via Quartz.

The Pragmatic Engineer: How GenAI is reshaping tech hiring (3/Dec/2024)

Generative AI is transforming tech recruitment, with tools like ChatGPT and GitHub Copilot enabling candidates to bypass traditional hiring processes. Recruiters face challenges such as AI-generated resumes and candidates using LLMs during interviews, leading to increased focus on live discussions and non-Googleable tasks. Some companies are integrating GenAI into interviews, while others are banning it, though enforcement remains difficult in remote settings.

Read more via The Pragmatic Engineer.

Citigroup rolls out artificial intelligence tools for employees in eight countries (4/Dec/2024)

Citigroup has introduced two AI tools, Citi Assist and Citi Stylus, for 140,000 employees across eight countries to improve productivity and simplify workflows. Citi Assist acts as a virtual coworker, helping navigate internal policies, while Citi Stylus can analyze, summarize, and compare documents. These tools are part of a broader trend among banks like Morgan Stanley and Bank of America leveraging AI to enhance operations.

Read more via Reuters.

Thanks to AI, the hottest new programming language is... English (8/Dec/2024)

Generative AI is reshaping software development by enabling natural language prompts, like English, to generate functional code, reducing reliance on traditional programming skills. NVIDIA CEO Jensen Huang called this shift a ‘miracle of AI’, emphasizing how it democratizes technology and empowers individuals without coding expertise. Tools like GitHub Copilot, supported by Microsoft, demonstrate this trend, with Stability AI estimating that 41% of GitHub code is now AI-generated.

Read the original article.

Read a discussion via Slashdot.

Vodafone AI ad (Dec/2024)

This looks better than the Coke version from the last edition of The Memo!

Source: https://x.com/Uncanny_Harry/status/1863887803756523709

Watch (link):

Policy

ElevenLabs’ AI voice generation ‘very likely’ used in a Russian influence operation (10/Dec/2024)

A report by Recorded Future reveals that ElevenLabs’ AI voice generation technology was ‘very likely’ used in a Russian influence campaign called ‘Operation Undercut’. The campaign targeted European audiences with fake news videos featuring AI-generated voiceovers in multiple languages to undermine support for Ukraine. While ElevenLabs did not comment, its AI Speech Classifier matched clips from the campaign to their technology, showcasing how generative AI enables rapid multilingual propaganda.

Read more via TechCrunch.

Download the report (source):

Certain names make ChatGPT grind to a halt, and we know why (2/Dec/2024)

ChatGPT’s hard-coded filters block certain names, such as ‘Brian Hood’ and ‘Jonathan Turley’, to avoid legal risks stemming from past instances of defamation or misinformation. However, these filters can cause unintended issues, including breaking conversations or rendering the chatbot unusable for tasks involving common names. OpenAI confirmed that the recent inclusion of ‘David Mayer’ in the block list was a glitch, highlighting the challenges of balancing safety with usability in AI systems.

Read more via Ars Technica.

What David Sacks as AI czar (with Elon Musk as wingman) could mean for OpenAI (7/Dec/2024)

David Sacks has been named AI czar by Donald Trump, with Elon Musk as a top advisor, creating potential trouble for OpenAI. Both Sacks and Musk have criticized OpenAI’s shift to a profit-focused model and could use their new roles to favor their own AI projects, like Musk’s xAI, while putting pressure on competitors. OpenAI’s CEO Sam Altman voiced concerns about this, as the company faces big decisions on restructuring and advancing AGI development.

Read more via Fortune.

TSMC bets big on 2nm by 2025 – but can it deliver? (29/Nov/2024)

TSMC’s 2nm node, scheduled for mass production in 2025, is expected to power next-generation AI workloads with innovations like gate-all-around (GAA) transistors and backside power delivery networks, enabling greater performance and efficiency. These advancements are critical for AI applications requiring high throughput and low latency. However, geopolitical challenges complicate the timeline, as Taiwan has stated that core 2nm technologies will remain on the island. This could delay 2nm production at TSMC’s Arizona-based Fab 21 until 2027 or later, even as US semiconductor policy pushes for domestic advancements under the CHIPS Act.

Read more via The Register.

Meta says it has taken down about 20 covert influence operations in 2024 (3/Dec/2024)

Meta revealed that it disrupted approximately 20 covert influence operations globally in 2024, with Russia identified as the top source. Despite widespread fears, Meta noted that it was ‘striking’ how little AI was used in these operations, even in the busiest year for elections worldwide. While AI tools were anticipated to fuel widespread disinformation campaigns, Meta observed only a modest and limited impact from generative AI fakery.

Read more via The Guardian.

OpenAI is working with Anduril to supply the US military with AI (4/Dec/2024)

OpenAI has partnered with defense startup Anduril to enhance US military air defense systems using AI. OpenAI’s technology will improve threat assessment from drones, enabling faster and more accurate decision-making. This marks a shift in OpenAI’s policy toward military applications, aligning it with other tech companies like Meta and Anthropic, which have recently announced defense collaborations.

Anduril plans to integrate OpenAI’s language models into its autonomous aircraft systems, allowing for natural language commands to guide missions. Although controversial, the partnership reflects Silicon Valley’s growing acceptance of military projects, particularly after Russia’s invasion of Ukraine highlighted the geopolitical importance of AI advances.

Read more via WIRED.

Read more via Anduril.

Toys to Play With

Ilya: Sequence to sequence learning with neural networks: what a decade (14/Dec/2024)

In his NeurIPS 2024 talk, Ilya Sutskever shared bold predictions on the future of AI, declaring that ‘pre-training as we know it will end’. He outlined a shift toward superintelligent systems that are agentic, capable of reasoning, understanding, and even self-awareness. This marks a transformative vision for neural networks and their evolution over the next decade.

Watch the video (link):

Devin is generally available (11/Dec/2024)

Cognition Labs has officially launched Devin, the world’s first AI software engineer, designed to assist engineering teams with tasks such as fixing frontend bugs, creating first-draft PRs, and performing refactors. Devin integrates seamlessly into workflows via Slack, GitHub, and IDEs, offering collaborative support for US$500/month. Engineering teams are already using Devin to contribute to open-source projects, build APIs, and perform QA tasks.

An example of Devin’s capabilities includes triaging, solving, and testing a fix for an issue in Anthropic’s MCP, with the session available here. The merged PR can be viewed on GitHub.

Read more: https://cognition.ai/

Try it: https://app.devin.ai/

GitHub - DynaSaur: Large Language Agents Beyond Predefined Actions (2024)

DynaSaur is an advanced LLM-based agent framework that uses Python as a universal representation to dynamically generate or compose actions. When predefined actions are insufficient or fail, the system can create new ones, building a reusable library for future tasks. Empirically, DynaSaur outperforms previous methods on the GAIA benchmark, establishing itself as a leading non-ensemble method in adaptive AI agent frameworks.
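
To make the ‘create new actions and keep them for later’ idea concrete, here is a minimal sketch in Python. It is not DynaSaur’s actual code or API: the `ActionLibrary` class, the `fake_llm` stand-in, and the `count_words` action are all hypothetical names invented for illustration, and a real agent would ask a language model to write the function source.

```python
from typing import Callable, Dict


class ActionLibrary:
    """Stores actions as plain Python functions, keyed by name."""

    def __init__(self, generate_source: Callable[[str], str]) -> None:
        # generate_source stands in for the LLM call that writes new code.
        self._generate_source = generate_source
        self._actions: Dict[str, Callable] = {}

    def register(self, name: str, source: str) -> None:
        # Execute the source in a fresh namespace and keep the named function.
        namespace: dict = {}
        exec(source, namespace)
        self._actions[name] = namespace[name]

    def run(self, name: str, *args):
        # Create the action on first use, then reuse it from the library.
        if name not in self._actions:
            self.register(name, self._generate_source(name))
        return self._actions[name](*args)


def fake_llm(name: str) -> str:
    # Hypothetical stand-in: a real agent would prompt a model here.
    return f"def {name}(text):\n    return len(text.split())\n"


library = ActionLibrary(fake_llm)
print(library.run("count_words", "small models are getting smarter"))  # prints 5
```

The second time `count_words` is requested, the library reuses the stored function instead of generating it again. Note that running generated code with `exec` is unsafe outside a sandbox; the sketch skips that concern entirely.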

Read more via GitHub.

Maisa Vinci KPU Playground (26/Nov/2024)

Maisa KPU Playground is an interactive platform designed to experiment with AI models, offering users the ability to test various machine learning and natural language processing tasks. It provides tools to visualize model outputs, fine-tune parameters, and run real-time simulations, making it a resourceful environment for both researchers and enthusiasts exploring AI capabilities.

This agent scores 4/5 on ALPrompt 2024 H1 and 2024 H2, and uses the reasoning capabilities of large language models like GPT-4 and Claude (17/Mar/2024).

Try it via KPU Playground.

Read more: https://maisa.ai/research/

The ChatGPT secret: is that text message from your friend, your lover – or a robot? (3/Dec/2024)

ChatGPT is increasingly being used as an emotional support tool, from mediating arguments to role-playing difficult conversations. While some users claim it enhances empathy and emotional intelligence, others warn of over-reliance and the risk of detachment from real-life connections. One user reflected that ChatGPT itself suggested he might be using it too much, advising: ‘Yeah – maybe get a therapist.’

Read more via The Guardian.

A collection of MCP servers (Dec/2024)

This curated repository compiles Anthropic’s Model Context Protocol (MCP) servers that enhance AI models’ ability to interact securely with external resources, such as databases, APIs, file systems, and cloud platforms. MCP provides open-source, standardized protocols for extended AI capabilities. The project includes diverse implementations, tutorials, and tools for developers, with notable features like browser automation, database integration, and cloud services.
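
MCP messages are exchanged as JSON-RPC 2.0. As a rough sketch of what a client sends when it asks a server to run one of its tools, the snippet below builds a `tools/call` request. The tool name `query_database` and its `sql` argument are hypothetical, the helper function is illustrative only, and no transport or server is involved:

```python
import json
from itertools import count

# Simple incrementing request IDs, as JSON-RPC requires one per request.
_request_ids = count(1)


def tool_call_request(tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 'tools/call' request as a JSON string."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool exposed by a database-style MCP server.
request = tool_call_request("query_database", {"sql": "SELECT 1"})
print(request)
```

Each server in a collection like this one advertises its own tool names and argument schemas, so the request shape stays the same while the `params` change.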

Read more via GitHub.

OpenAI email archives (16/Nov/2024)

A comprehensive compilation of internal communications between Elon Musk, Sam Altman, Ilya Sutskever, and Greg Brockman reveals the early discussions, challenges, and decisions that shaped OpenAI. Key topics include the non-profit origins, recruitment of top AI talent, financial strategies, and concerns about competition with DeepMind. These emails also highlight the strategic tension between Musk’s insistence on control and the broader team’s focus on distributed governance to prevent misuse of AGI.

Read more via LessWrong.

LLM Leaderboards (Dec/2024)

Yet another benchmark and leaderboard summary! LLM Stats provides real-world performance insights for over 50 models from 15+ providers, tested across 30+ benchmarks.

Read more: https://llm-stats.com/

Aurora: X gives Grok a new photorealistic AI image generator (7/Dec/2024)

xAI has launched Aurora, a new autoregressive image generation model integrated into Grok, now available on the X platform. Aurora excels at photorealistic rendering, precise text-to-image alignment, and multimodal input, enabling users to edit or take inspiration from existing images. Highlighted features include realistic portraits, artistic text, meme generation, and the ability to render detailed real-world entities like logos and celebrities.

Because Aurora is the state-of-the-art text-to-image model, I’ve used it to generate the header image for my upcoming AI report.

Early announce, xAI official announce, The Verge.

Flashback

First published by Mensa in Nov/2017, my article ‘The future is now: Gifted education beyond 2020’ about Elon Musk’s gifted school in California is still echoing today:

Less languages

Some Australian state education departments have recently enforced mandatory second language teaching (for example, Mandarin Chinese, Spanish, French) in classrooms. Second languages are not taught at [Elon Musk’s gifted school,] Ad Astra.

Yes, learning an additional language has been shown to be beneficial in supporting a child’s brain development and understanding of other cultures. Elon’s involvement in Neuralink—an American neurotechnology company developing implantable brain-computer interfaces—gives some indication of why learning languages is part of the past, not part of the future.

This month, The Economist (12/Dec/2024) found that English proficiency in China has dropped significantly in the last few years, and apparently on purpose.

…China ranks 91st among 116 countries and regions in terms of English proficiency. Just four years ago it ranked 38th out of 100. Over that time its rating has slipped from “moderate” to “low” proficiency.

…translation apps, which are improving at a rapid pace and becoming more ubiquitous. The tools may be having an effect outside China, too. The EF rankings show that tech-savvy Japan and South Korea have also been losing ground when it comes to English proficiency. Why spend time learning a new language when your phone is already fluent in it?

With brain-machine interfaces coming up, I would instead be asking ‘Why spend time learning a new language when your brain is already fluent in it?’

Next

The next roundtable will be:

Life Architect - The Memo - Roundtable #22

Follows the Chatham House Rule (no recording, no outside discussion)

Saturday 21/Dec/2024 at 4PM Los Angeles (timezone change)

Saturday 21/Dec/2024 at 7PM New York (timezone change)

Sunday 22/Dec/2024 at 10AM Brisbane (primary/reference time zone)

or check your timezone via Google.

You don’t need to do anything for this; there’s no registration or forms to fill in, I don’t want your email, you don’t even need to turn on your camera or give your real name!

All my very best,

Alan

LifeArchitect.ai