The Memo - 10/May/2023

AI hitting the 100% ceiling in tests like ToM, Inflection Pi, NVIDIA GPT-2B-001, and much more!

FOR IMMEDIATE RELEASE: 10/May/2023

Welcome back to The Memo.

In terms of AI releases, March and April 2023 were absurd. I counted 11 major language models announced in March, and 6 major models in April. I think we’re entering a more balanced cadence now, despite already having 4 major models announced in the first week of May, and with Google set to release PaLM 2 within the next 24 hours (watch live here).

In the Policy section, we look at news from the US, and a report on ChatGPT in education.

In the Toys to play with section, we look at Transformify.ai’s fantastic Zapier-like automation (including a code for The Memo subscribers), a GPT-4 escape room, new LLM integrations from Box and Slack, prompt crafting lessons by Microsoft, and advanced use cases for GPT-4 by power users…

The BIG Stuff

Exclusive: Microsoft’s Chief Economist suggests pause on AI regulation (3/May/2023)

Speaking to the World Economic Forum in Geneva, Microsoft’s Corporate Vice President and Chief Economist Dr Michael A. Schwarz (wiki) said:

What should be our philosophy about regulating AI? Clearly, we have to regulate it, and I think my philosophy there is very simple. We should regulate AI in a way where we don’t throw away the baby with the bathwater.

So, I think that regulation should be based not on abstract principles. As an Economist, I like efficiency, so first, we shouldn’t regulate AI until we see some meaningful harm that is actually happening — not imaginary scenarios.

The first time we starting requiring driver’s license it was after many dozens of people died in car accidents, right, and that was the right thing. If we would have required driver’s licenses where there were the first two cars on the road, that would have been a big mistake. We would have completely screwed up that regulation.

There has to be at least a little bit of harm, so that we see what is the real problem. What is the real problem? Did anybody suffer at least a thousand dollars because of that?

Should we jump to regulate something on a planet of eight billion people where there is not even a thousand dollars of damage? Of course not!

So, once we see real harm, then we have to ask ourselves a simple question, ‘could we regulate it in a way where the good things that will be prevented by this regulation are less important and less valuable than the harm that we prevent?’

You don’t put regulation in place to prevent a thousand dollars worth of harm where the same regulation prevents a million dollars worth of benefit to people around the world.

I agree with most of Dr Michael’s points here, and he brings some much-needed perspective to AI regulation, in contrast to the headless chickens running around panicking about our new discovery of fire (someone might burn us), or electricity (we might get electrocuted), or the Internet (it’s new and scary).

Hear Michael’s words in the WEF video at timecode (45m55s):

Inflection Pi chatbot (3/May/2023)

I’ve been waiting for Inflection (former members of DeepMind) to release their model for many months, and the chatbot version is finally here! Based on my testing, I estimate that it has between 60-100B parameters (Chinchilla scale); making it bigger than GPT-3 but not as big as GPT-4. It is designed to emulated a conversational chatbot, so is not useful for design tasks (like ChatGPT). The platform includes text-to-speech, and you can choose from four different voices.

“There’s lots of things Pi cannot do. It doesn’t do lists, or coding, it doesn’t do travel plans, it won’t write your marketing strategy, or your essay for school,” [One of three founders of DeepMind, Mustafa Suleyman] said in an interview with the Financial Times. “It’s purely designed for relaxed, supportive, informative conversation.”

…To keep up with its well-funded rivals, Inflection has hired AI experts from several competitors, including OpenAI, DeepMind and Google, who have previously helped build some of the world’s most powerful language models. Earlier this year, the company was in discussions to raise up to $675mn from investors. (-via The Fin)

Inflection will offer Pi for free for now, with no token restrictions. (Asked how it will charge users, and when, the company declined to comment.) Built on one of Inflection’s in-house large language models, Pi doesn’t use the company’s most advanced ones, which remain unreleased, according to Suleyman; Inflection already runs one of the world’s largest and best-performing models, he added, without providing specifics. Like OpenAI, Inflection uses Microsoft Azure for its cloud infrastructure. (-via Forbes)

Try it here (free, no login): https://heypi.com/talk

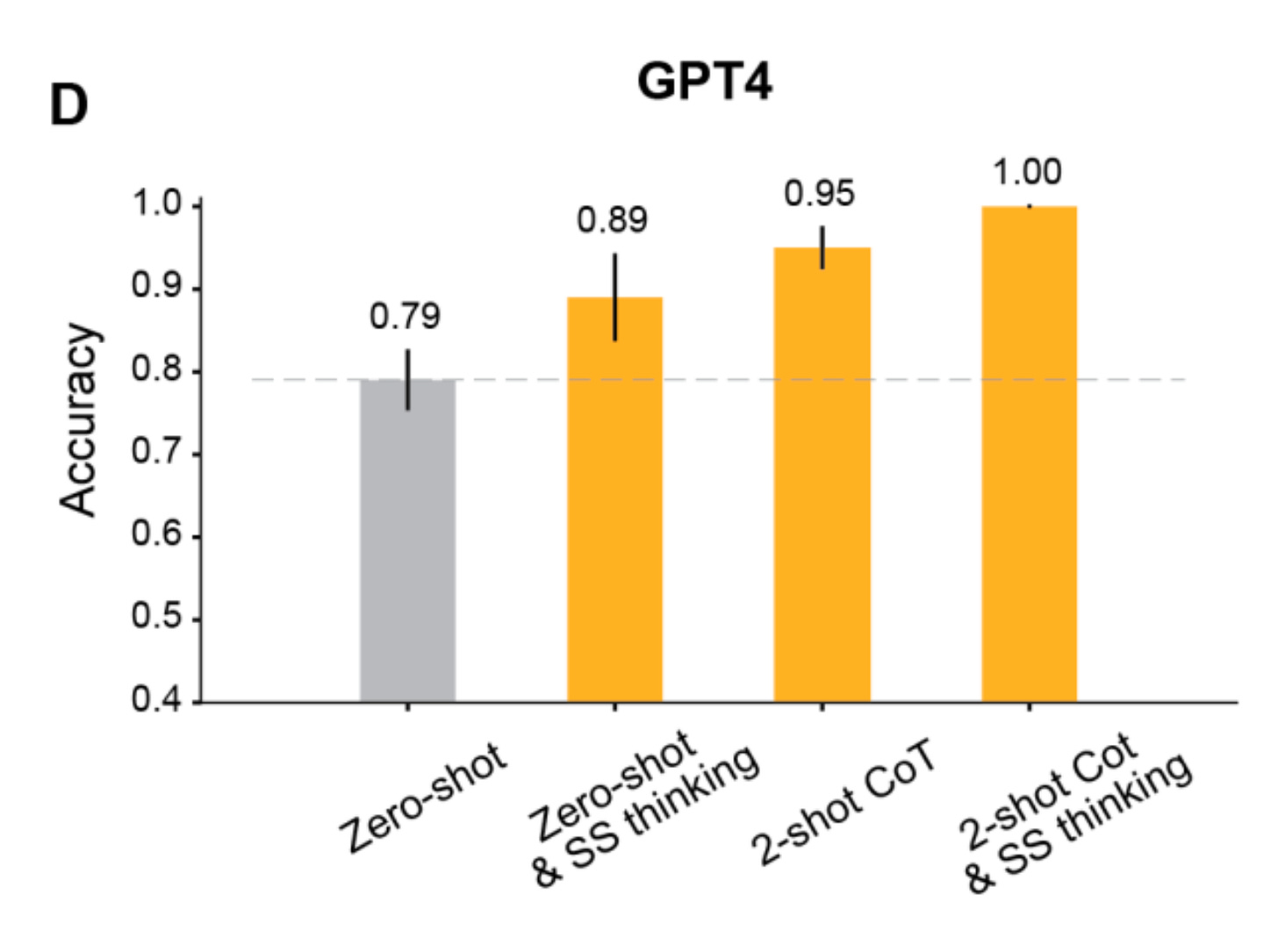

GPT-4 reaches 100% in Theory-of-mind (ToM) testing (26/Apr/2023)

GPT-4 has achieved 100% in ‘Theory of mind’ tasks (wiki). In my work with gifted children, when a student hits 100% on a test, this is a ‘very bad thing’. It means the test was designed poorly, and it was perhaps a waste of time testing that student with that instrument, as they may have been able to score (the equivalent of) 101% or 10,000%… but we’d never know because the test wasn’t comprehensive enough.

We are now seeing large language models outperforming peak human abilities in some areas. For the first time, we are also reaching the ceilings on some benchmarks. This is concerning given that (eventually) we may not be ‘smart enough’ to continue creating new tests for AI. We’re already hitting the ceiling of our human capabilities!

Note that CoT means ‘chain-of-thought’ (like show your working in maths), and ‘SS thinking’ means instructing the model to use ‘step-by-step’ thinking.

Read the paper via Johns Hopkins: https://arxiv.org/abs/2304.11490

Watch my video:

The Interesting Stuff

GPT-4 IQ = 152 (15/Mar/2023)

Dr David Rozado is an Associate Professor at Otago Polytechnic, and formerly at CSIRO and Max Planck. His testing of GPT models using verbal-linguist IQ tests is useful in giving us a real indication of artificial intelligence progress.

IQ

GPT-4 = 152 (99.97th %ile)

ChatGPT = 147 (99.91st %ile)

Mensa minimum = 130 (98th %ile)

Human average = 100 (50th %ile)

Read more about IQ on my ‘visualising brightness’ page.

See the latest benchmarks on my AI + IQ testing (human vs AI) page.

Google’s Luke Sernau and the ‘We have no moat’ document (Apr/2023)

In early April 2023, Google Engineer Luke Sernau published a document on Google’s internal system. The document was recently ‘leaked’, though please note that some think this was done intentionally by Google PR to redirect attention.

Highlights:

[Google isn’t] positioned to win this arms race and neither is OpenAI. While we’ve been squabbling, a third faction has been quietly eating our lunch. I’m talking, of course, about open source. Plainly put, they are lapping us.

Giant models are slowing us down. In the long run, the best models are the ones

which can be iterated upon quickly. [Alan: This is a misdirection at best. Giant models will continue to run the giant/corporate world.]

At the beginning of March [2023] the open source community got their hands on their first really capable foundation model, as Meta’s LLaMA was leaked to the public. It had no instruction or conversation tuning, and no RLHF. Nonetheless, the community immediately understood the significance of what they had been given.

…the one clear winner in all of this is Meta. Because the leaked model was theirs, they have effectively garnered an entire planet's worth of free labor.

The more tightly we control our models, the more attractive we make open alternatives. Google and OpenAI have both gravitated defensively toward release patterns… Anyone seeking to use LLMs for unsanctioned purposes can simply take their pick of the freely available models.

…in the end, OpenAI doesn’t matter. They are making the same mistakes we are in their posture relative to open source, and their ability to maintain an edge is necessarily in question. Open source alternatives can and will eventually eclipse them unless they change their stance.

Read the full thesis on GitHub.

Less publishing, less openness: Google follows DeepMind and OpenAI in restricting publishing (4/May/2023)

I’ve previously expressed my disappointment in the decision by several AI labs to stop publishing their research. The 2017-2022 openness of information in the AI field was a real boon for everyone. Now in 2023, this has come to an end, with Google joining DeepMind and OpenAI in restricting publication of research papers.

"We're not in the business of just publishing everything anymore," one Google Brain staffer described as the message from upper management.

Read more via BusinessInsider.

Datasets: Reddit bans Pushshift from data scraping (1/May/2023)

Pushshift is a data project started and maintained by Jason Baumgartner. It powers the collection of data (mainly web links and comments) from Reddit, which is used as a proxy for ‘popular’ web content for datasets like WebText (used for GPT-3, The Pile, and many other datasets). You can read more about this in my What’s in my AI? paper.

Reddit recently changed their terms and conditions, and has now banned Pushshift. The author noted:

The Reddit Dataset paper [May/2020] that I co-authored has been cited a whopping 630 times and it constantly grows. I don't think Reddit fully understands just how much Pushshift is used in research and the academic world -- but when we speak to the admins sometime this week, we'll try and make a strong case to keep as much functionality as we can in the API.

Datasets are a significant part of the AI race, and this is an interesting move by Reddit. It echoes Elon Musk’s belligerent blocking of the Twitter API and usage by OpenAI in Dec/2022:

[Musk] had learned of a relationship between OpenAI, the start-up behind the popular chatbot ChatGPT, and Twitter, which he had bought in October for $44 billion. OpenAI was licensing Twitter’s data — a feed of every tweet — for about $2 million a year to help build ChatGPT, two people with knowledge of the matter said. Mr. Musk believed the A.I. start-up wasn’t paying Twitter enough, they said.

So Mr. Musk cut OpenAI off from Twitter’s data, they said. (-via NYT)

NVIDIA GPT-2B-001 (May/2023)

NVIDIA should probably have been on my AI race viz, but at the time of publication I had decided that they were more about tooling and less about model-building:

They’re still in the race, and currently testing out training smaller models with enormous amounts of data. Their latest model is the successor to their Megatron-GPT 20B model (2022).

GPT-2B-001 (not related to GPT-2 or OpenAI) is only 2 billion parameters, but was trained on 1.1 trillion tokens. This makes it one of the most ‘data optimal’ models around—and potentially overtrained—though this is what they’re testing. On my Chinchilla viz (Nov/2022), it would be on the far-right at 550:1 tokens per parameter.

It will be interesting to see this progress, as it looks like we are heading towards models that can fit on one GPU for inference, but were trained on more than 3x the amount of data used for GPT-3.

View the HF space: https://huggingface.co/nvidia/GPT-2B-001

View tokens:parameters data on my table, showing comparisons between major models.

MosaicML MPT-7B-Instruct (5/May/2023)

Introducing MPT-7B, the latest entry in our MosaicML Foundation Series. MPT-7B is a transformer trained from scratch on 1T tokens of text and code. It is open source, available for commercial use, and matches the quality of LLaMA-7B. MPT-7B was trained on the MosaicML platform in 9.5 days with zero human intervention at a cost of ~$200k… we are also releasing three finetuned models in addition to the base MPT-7B: MPT-7B-Instruct, MPT-7B-Chat, and MPT-7B-StoryWriter-65k+, the last of which uses a context length of 65k tokens!

65,000 tokens is about 48,750 words (1 token≈0.75 words). Remember that the largest version of GPT-4 caps out at ‘only’ 32,000 tokens or 24,000 words.

So, MPT-7B allows you to generate pretty close to a standard 50,000-word book, OR feed it a 50,000-word book and ask for a one-page summary.

Imagine the possibilities of instantly having a book turned into a screenplay ‘in the style of Aaron Sorkin’ or ‘by Trey Parker and Matt Stone’!

Try the tuned demo (free, no login).

Read the announce: https://www.mosaicml.com/blog/mpt-7b

StarCoder 15.5B by HuggingFace and ServiceNow (5/May/2023)

The StarCoderBase models are 15.5B parameter models trained on 80+ programming languages from The Stack (v1.2), with opt-out requests excluded. The model uses Multi Query Attention, a context window of 8192 tokens, and was trained using the Fill-in-the-Middle objective on 1 trillion tokens.

This model is impressive in many ways:

Goes beyond Chinchilla scaling (65:1 tokens:parameters, vs Chinchilla’s 20:1).

80+ programming languages.

Uses OpenAI’s FIM concepts from Jul/2022 (new research released after GPT-4 started training).

The project also launched a VS Code extension.

Dr Jim Fan from NVIDIA calls this model ‘the Llama moment for coding!’ (6/May/2023).

View the HF space: https://huggingface.co/bigcode/starcoderbase

Try the demo: https://huggingface.co/spaces/bigcode/bigcode-playground

LLMs on your phone… already! (3/May/2023)

Maybe I shouldn’t have called them ‘laptop models’ so soon, as they’re already running on mobile devices.

MLC LLM is a universal solution that allows any language models [including Alpaca and MOSS] to be deployed natively… Everything runs locally with no server support and accelerated with local GPUs on your phone and laptops.

The installer for this will get much easier soon, but for now there are a few steps to get this running on your iPhone or Android device.

Try it: https://github.com/mlc-ai/mlc-llm

GPT-4 + Monocle/AR (26/Apr/2023)

RizzGPT combines GPT-4 with Whisper, an OpenAI-created speech recognition system, and Monocle AR glasses, an open source device. Chiang said generative AI was able to make this app possible because its ability to perceive text and audio allows the AI to understand and process a live conversation.

Countdown to Gato 2 (2/May/2023)

My conservative countdown to AGI currently stands at 48% progress, waiting for groundedness, physical embodiment via robots, and self-improvement. I’ve gone on record as saying that artificial general intelligence (AGI)—where AI can do anything a human can do including physical actions—is ‘a few months, not a few years away’. (Yes, this means less than three years away, or 2023-2026.)

In a backroom at DeepMind in London, I know they have the next version of the generalist agent Gato (‘cat’) underway (watch my video on Gato 1).

Recently, DeepMind’s CEO commented:

I think we’ll have very capable, very general systems in the next few years.

New AI model viz by Amazon researchers (May/2023)

Great to see others working to help visualize all the different large language models available in 2023. This viz also looks at encoder/decoder streams as branches.

Take a look in the paper: https://arxiv.org/abs/2304.13712

My comprehensive version as bubbles: https://lifearchitect.ai/models/#model-bubbles

Deloitte: 48% of people under 42 spend more time socializing online than off (1/May/2023)

Following Dr Ray Kurzweil’s prediction that ‘by 2029, most communication occurs between humans and machines as opposed to human-to-human,’ we may be about half-way there already. In a recent survey, Deloitte found that 48% of people under the age of 42 spend more time communicating with friends online than in real life.

Bing Chat updates (4/May/2023)

Richer, more visual answers including charts and graphs.

Improved summarization capabilities for long documents, including PDFs and longer-form websites.

Image Creator in Bing Chat will be available in over 100 languages.

Visual search in Bing Chat will allow users to upload images and search for related content.

Chat history in Bing Chat will allow users to pick up where they left off and return to previous chats.

Export and share functionalities will be added to chat for easy sharing and collaboration.

Third-party plug-ins will be integrated into Bing chat, enabling developers to add features.

OpenAI Shap-E (3/May/2023)

In all the hubbub of ChatGPT and GPT-4 releases, OpenAI have left just two researchers(!) alone to play with the successor to Point-E, the generative model for 3D assets.

…an explicit generative model over point clouds, Shap·E converges faster and reaches comparable or better sample quality [than Point·E] despite modeling a higher-dimensional, multi-representation output space. We release model weights, inference code, and samples…

Browse the repo: https://github.com/openai/shap-e

Read the paper: https://arxiv.org/abs/2305.02463

Policy

US President meets with CEOs from Anthropic/Google/Microsoft/OpenAI (3/May/2023)

The chief executives of Alphabet Inc's Google, Microsoft, OpenAI and Anthropic will meet with Vice President Kamala Harris and top administration officials to discuss key artificial intelligence (AI) issues on Thursday, said a White House official. The invitation seen by Reuters to the CEOs noted President Joe Biden's "expectation that companies like yours must make sure their products are safe before making them available to the public."

Outcome highlights include:

$140 million in funding to launch seven new National AI Research Institutes.

The Office of Management and Budget (OMB) is announcing that it will be releasing draft policy guidance on the use of AI systems by the U.S. government for public comment. This guidance will establish specific policies for federal departments and agencies to follow…

ChatGPT for Education (1/Mar/2023)

Our new survey of K-12 teachers and students 12-17 years old shows a remarkable level of adoption of ChatGPT among teachers and students. Both groups clearly recognize the potential of the program’s uses for learning. A majority of teachers are already using ChatGPT for their job, as are a third of students. Teachers and students who’ve used ChatGPT overwhelmingly say it’s positively impacted their teaching and learning.

Download the brief report (PDF).

See also my education policy analysis from The Memo 24/March/2023 edition.

Toys to Play With

Prompt engineering by Microsoft (23/Apr/2023)

The techniques in this guide will teach you strategies for increasing the accuracy and grounding of responses you generate with a Large Language Model (LLM) [all tested with GPT-4, but applicable to other models].

GPT-4 power users! (May/2023)

I really enjoyed some of the 50+ comments on advanced use cases for GPT-4 here.

And another 100+ comments on this HN thread.

Sal Khan speaks about personalized education with GPT-4 (May/2023)

Box.com + GPT-4 (3/May/2023)

I use Box to share important files for The Memo.

‘Today we're announcing Box AI, a breakthrough in how you can interact with your content! Box AI leverages leading AI models, starting with GPT 3.5 and 4, to let you ask questions, summarize, pull out insights, and generate new content! http://box.com/ai’

Try it: https://www.box.com/ai

Slack GPT (4/May/2023)

Slack (Salesforce) are beginning to integrate OpenAI ChatGPT, Anthropic Claude, and ‘bring-your-own’ models into the Slack app.

Read the announce: https://slack.com/intl/en-au/blog/news/introducing-slack-gpt

GPT-4 escape room (2/Mar/2023)

AI Escape is a revolutionary escape room platform that utilizes GPT-4 & ChatGPT to generate dynamic, customized escape room experiences. By harnessing the power of GPT-4, each escape room presents endless possibilities, immersive storylines, and challenges tailored to your skill level. AI Escape also incorporates AI-generated images to visualize these unique experiences. Engage in captivating narratives and test your problem-solving skills against our state-of-the-art AI. Join a global community of players and embark on a thrilling adventure where the clock is ticking, and the AI is waiting. Can you escape?

Join the beta: https://www.aiescape.io/

Transformify (1/May/2023)

The Memo reader Ratko is heading up a new GPT-4 app that works a bit like Zapier + the latest LLMs.

Tech stack: Models are based on gpt-4-8k, gpt-4-32k, gpt-3.5-turbo and text-davinci-003 models.

At launch, The Memo subscribers can use the code: [removed] to get a discount in the first 3 months on all paid plans.

Take a look: https://www.transformify.ai/automate

Next

Google will release some big models—the rumour is a release of PaLM 2 and more—in their Google IO presentation within the next 24 hours.

Watch live here: https://io.google/2023/

I think it’s safe to say that Apple’s upcoming keynote event will be an ‘iPhone moment’. I haven’t stayed up to watch one for a while, but I’ll be tuning in to this one for the Reality headset release, which will provide user hardware for much of this amazing AI software.

Here’s the countdown to the 5/Jun/2023 WWDC23 kick off in your timezone.

We’ll be hosting a text-only live discussion on 5/Jun/2023 during the Apple stream.

All my very best,

Alan

LifeArchitect.ai

Discussion | Search | Archives